Sharing together has never been easier.

One of the nice things about the internet is the way it brings people together – especially with WebRTC. One of the interesting companies out there that is using this capability is Rabbit. They have taken WebRTC and baked it into a service where several people can meet together to share an experience on the web – watch a movie, collaborate online, etc.

I had the opportunity to chat with Philippe Clavel, Founder and CTO of Rabbit, using their own platform. What intrigued me most was their use of virtual machines for co-browsing.

Philippe was kind enough to answer a few of my questions.

What is Rabbit all about?

Rabbit is about bringing people together. Plain and simple. In Rabbit, you can video and text chat with up to 10 of your friends, and at the same time synchronously watch videos, play games or collaborate, right from your browser.

What are the use cases you are targeting with Rabbit?

Our use case is to solve the problem of sharing experiences when you’re in disparate locations. There’s nothing better than being in the same room as someone to watch a movie, work on a project or just talk. With Rabbit, you can do all of those things – the room may be in your browser, but it’s an all-in-one experience.

It’s been fun to hear directly from users as to how they’re using Rabbit. They’re in long-distance relationships and are looking for ways to stay connected. They’re using it to teach and coach. Their companies have stopped using other chat and teleconferencing solutions and are using Rabbit instead.

What excites you about working in WebRTC?

When I started looking for a technical solution for Rabbit in June 2011, I realized quickly that we had to build our own audio/video pipeline to be able to deliver the experience on the scale that we wanted.

This had two major implications:

- We had to build a native solution and could not have an installation-free process.

- A fair amount of our development efforts were focused on getting the real-time pipeline right.

When WebRTC came out last year, it changed everything. For the first time we had a stable and reliable API that provides everything we wanted within the browser. WebRTC has allowed us to focus on the experience and deliver a great product without even worrying about all the very complex things needed to capture, transmit and deliver a live video stream to a user.

As WebRTC is a defined standard, we have been able to define our own server component to extend the typical in-browser capabilities and support far more streams and a unique co-watching feature that could not have been done without it.

Personally, I love the fact that it is a very active ecosystem with so many potential applications that could not be built without it. It is not only about video chat: the addition of the data channel, for example, is something that can be used for so many different things on the web.

What signaling have you decided to integrate on top of WebRTC?

When we started using WebRTC to build Rabbit, we looked at signaling options and none of them were able to integrate nicely with our back-end solution.

We had to build our own in order to fit our needs.

From a technology standpoint, the client opens a WebSocket to our state server using a simple JSON-based protocol.

We use Redis as a messaging bus, and our state server forwards the information to our SFU (selective forwarding unit).

From there, the web client and the SFU negotiate WebRTC session establishment together.
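To make that flow concrete, here is a minimal sketch of how a state server might validate a JSON signaling frame and pass it along to the SFU over a pub/sub bus. Everything in it is an assumption for illustration: the message shapes, the `sfu:<roomId>` channel naming, and the `InMemoryBus` class, which stands in for Redis so the example runs on its own. It is not Rabbit's actual protocol.

```typescript
// Hypothetical message shapes: the interview only mentions "a simple JSON-based protocol".
type SignalMessage =
  | { type: "join"; roomId: string; clientId: string }
  | { type: "offer" | "answer"; roomId: string; clientId: string; sdp: string }
  | { type: "candidate"; roomId: string; clientId: string; candidate: string };

type BusHandler = (payload: string) => void;

// In-memory stand-in for a pub/sub messaging bus (Redis in the setup described above).
class InMemoryBus {
  private handlers: { [channel: string]: BusHandler[] } = {};

  subscribe(channel: string, handler: BusHandler): void {
    const list = this.handlers[channel] || [];
    list.push(handler);
    this.handlers[channel] = list;
  }

  // Delivers the payload to every subscriber and returns how many received it.
  publish(channel: string, payload: string): number {
    const list = this.handlers[channel] || [];
    list.forEach((handler) => handler(payload));
    return list.length;
  }
}

// Parses and validates one raw WebSocket text frame; returns null if it is malformed.
function parseSignal(raw: string): SignalMessage | null {
  let data: any;
  try {
    data = JSON.parse(raw);
  } catch (err) {
    return null;
  }
  if (typeof data !== "object" || data === null) return null;
  if (typeof data.roomId !== "string" || typeof data.clientId !== "string") return null;
  switch (data.type) {
    case "join":
      return { type: "join", roomId: data.roomId, clientId: data.clientId };
    case "offer":
    case "answer":
      if (typeof data.sdp !== "string") return null;
      return { type: data.type, roomId: data.roomId, clientId: data.clientId, sdp: data.sdp };
    case "candidate":
      if (typeof data.candidate !== "string") return null;
      return {
        type: "candidate",
        roomId: data.roomId,
        clientId: data.clientId,
        candidate: data.candidate,
      };
    default:
      return null;
  }
}

// Hypothetical state server: forwards each valid client frame to the SFU's channel for that room.
class StateServer {
  private bus: InMemoryBus;

  constructor(bus: InMemoryBus) {
    this.bus = bus;
  }

  // Called for each frame received on a client's WebSocket; returns false if the frame was rejected.
  handleClientFrame(raw: string): boolean {
    const msg = parseSignal(raw);
    if (msg === null) return false;
    this.bus.publish("sfu:" + msg.roomId, JSON.stringify(msg));
    return true;
  }
}
```

In this sketch the SFU side would subscribe to `sfu:<roomId>` and reply on a similar per-client channel, which is how the offer/answer exchange between the web client and the SFU could be carried.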

Backend. What technologies and architecture are you using there?

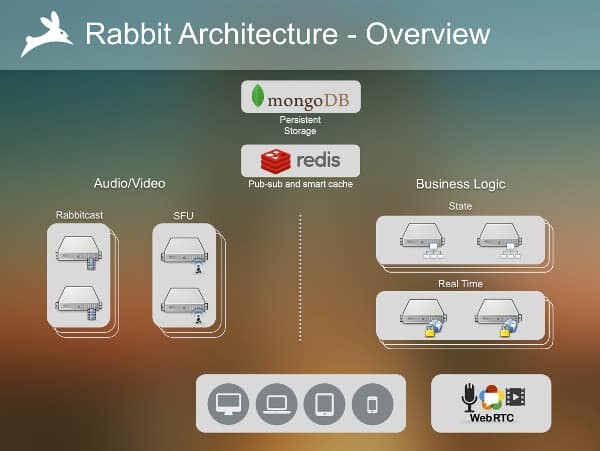

We have two main stacks, one for audio/video and one for our business logic:

Our audio/video stack is built in Java on top of Netty:

- Our SFU allows us to use WebRTC with much larger groups than the normal use case.

- For our shared viewing feature (called Rabbitcast™), we had to build a native extension to capture and deliver an HD stream with audio from our virtual machines.

- Both of them use our own WebRTC server stack to talk to the clients.

Our Business Logic stack is built on top of Node.js using a promise-based approach to keep our sanity.

Lastly we use Redis both for intelligent caching and pub/sub. MongoDB is our persistent storage.

The shared viewing feature – how does it work?

When you start sharing in your room (what we call a Rabbitcast), you control a remote virtual machine (VM) where you can browse and view anything you want. We stream an HD video of the VM in real time to all participants of the video chat. Audio is also synchronized so you can watch a movie together as if you were in front of the same display.

We do in fact use WebRTC for this. Our virtual machines are WebRTC end points which stream directly to the browser. As with the video chat, we have tight controls on quality to guarantee optimum delivery in real time for all participants. Lastly we use the Data Channel to control the remote machine with minimal latency.
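As an illustration of the remote-control part, the sketch below shows one way a browser client could turn mouse and keyboard input into small JSON messages and send them over a data channel. The message format, the `RemoteController` class, and the idea of normalizing coordinates to the 0..1 range are all assumptions made for this example; the interview does not describe Rabbit's actual wire format.

```typescript
// Hypothetical control messages; the real format is not described in the interview.
type RemoteInput =
  | { kind: "move"; x: number; y: number }
  | { kind: "click"; x: number; y: number; button: number }
  | { kind: "key"; code: string; down: boolean };

// Minimal surface needed from a data channel: anything with send(string),
// which a browser RTCDataChannel satisfies.
interface ControlChannel {
  send(data: string): void;
}

// Clamps a value into the 0..1 range.
function clamp01(v: number): number {
  return Math.min(1, Math.max(0, v));
}

class RemoteController {
  private channel: ControlChannel;
  private viewWidth: number;
  private viewHeight: number;
  private seq = 0;

  constructor(channel: ControlChannel, viewWidth: number, viewHeight: number) {
    if (viewWidth <= 0 || viewHeight <= 0) {
      throw new Error("view dimensions must be positive");
    }
    this.channel = channel;
    this.viewWidth = viewWidth;
    this.viewHeight = viewHeight;
  }

  // Coordinates are sent as fractions of the video view so the remote
  // machine can map them onto its own screen resolution.
  move(px: number, py: number): void {
    this.emit({ kind: "move", x: clamp01(px / this.viewWidth), y: clamp01(py / this.viewHeight) });
  }

  click(px: number, py: number, button: number = 0): void {
    this.emit({
      kind: "click",
      x: clamp01(px / this.viewWidth),
      y: clamp01(py / this.viewHeight),
      button: button,
    });
  }

  key(code: string, down: boolean): void {
    this.emit({ kind: "key", code: code, down: down });
  }

  // A sequence number lets the receiver detect gaps or reordering
  // if the channel is configured as unordered or unreliable.
  private emit(input: RemoteInput): void {
    this.seq += 1;
    this.channel.send(JSON.stringify({ seq: this.seq, input: input }));
  }
}
```

In a browser, the `channel` argument could be a data channel created on the peer connection to the virtual machine, with the controller's methods wired to the mouse and keyboard events of the video element.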

How long do users tend to stay in a Rabbit session and what do they usually do in it?

Our average group session time is more than an hour!

Not surprisingly, the majority of people are watching movies and television shows from sites like Netflix, Amazon, Crunchyroll and others.

It’s usually couples or small groups of friends, but we also have groups organizing and promoting movies and watch parties.

Where do you see WebRTC going in 2-5 years?

From a technology standpoint, we are going to see a lot of new additions.

I am very interested in VP9 and SVC as it will help a lot with quality and bandwidth adaptation.

Browser support will increase as IE and hopefully Safari will join.

If they don’t, we will see more and more plugins that provide the functionality transparently.

Lastly we will also definitely have a stable native mobile API for both Android and iOS.

From an application standpoint, every time I read about a new product that uses WebRTC, I am surprised by how they use it.

As WebRTC matures, I expect to be even more surprised in the next few years.

If you had one piece of advice for those thinking of adopting WebRTC, what would it be?

Think outside the box.

If you are used to building web applications a certain way, keep in mind that you can now easily have two endpoints communicate directly with each other.

This is true both from a design standpoint and a technology standpoint.

Once you are past that, as with any good technology, the right question is how you use it to provide the best experience to your users.

Given the opportunity, what would you change in WebRTC?

There are a few items that I would really like to see changed in WebRTC:

- Browser support: This one is a no-brainer and, I am sure, the first thing that comes to mind: I would love to have Safari and IE support WebRTC.

- Browser standardization: On top of supporting more browsers, we need the same behavior across browsers. Right now, Firefox and Chrome are not the same. Their APIs and behavior differ, which is even trickier when you have an SFU in the middle.

- Expose the internals: We should be able to control bandwidth consumption at the API level. The same goes for the jitter buffer. Some of the streams in the UI do not need to be real time; others do not need 2 Mbps quality because they are 100 x 100 pixels.

- Better flow control: While the current WebRTC flow control works, ideally I would love to have TCP-like behavior where you can control retransmission. This would allow it to behave better under tough network conditions and improve the overall audio/video quality.
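To illustrate the bandwidth point in the list above, here is a rough sketch of the kind of calculation an application could make if it were able to set a per-stream bitrate target. The reference point (1280 x 720 at 2000 kbps), the 50 kbps floor, and the linear scaling by pixel count are all assumptions chosen for the example; real encoders do not scale this simply.

```typescript
// Assumed reference point: a 1280 x 720 stream at 2000 kbps. Both numbers are illustrative.
const REFERENCE_PIXELS = 1280 * 720;
const REFERENCE_KBPS = 2000;
// Assumed floor so that very small tiles still get a usable picture.
const MIN_KBPS = 50;

// Returns a target bitrate in kbps for a stream displayed at the given size,
// scaling linearly with pixel count and clamping to [MIN_KBPS, REFERENCE_KBPS].
function targetBitrateKbps(width: number, height: number): number {
  if (width <= 0 || height <= 0) return 0; // nothing visible, nothing to send
  const scaled = (REFERENCE_KBPS * (width * height)) / REFERENCE_PIXELS;
  return Math.round(Math.min(REFERENCE_KBPS, Math.max(MIN_KBPS, scaled)));
}
```

Under these assumptions a 100 x 100 thumbnail lands on the 50 kbps floor rather than the full 2000 kbps, which is the kind of saving the bullet describes. In a browser that exposes per-sender encoding parameters, a value like this could be used as the bitrate cap for that stream.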

What’s next for Rabbit?

We’ve got big plans! In the near term, our goal is to increase accessibility by expanding to other browsers and devices.

We’re constantly adding new features and making improvements to give users the best possible experience.

Long term…you’ll have to discover what’s next.