Unlock the power of WebRTC in the era of generative AI. Explore the perfect partnership between these groundbreaking technologies.

I am working on my WebRTC for Business People update. Going through it, I saw that the slide depicting the evolution of WebRTC had to be updated to fit today’s realities. These realities are… well… generative AI.

Here are some questions I want to cover this time:

- Why do I think we’re entering a generative AI era?

- Is marking an era by a technology rather than a concept the right approach?

- What makes generative AI and WebRTC fit so well together? Are they the new avocado and toast? Peanut butter and jelly? Macaroni and cheese? Pizza and pineapple? (maybe scratch out that last one)

Let’s dive into it, shall we?


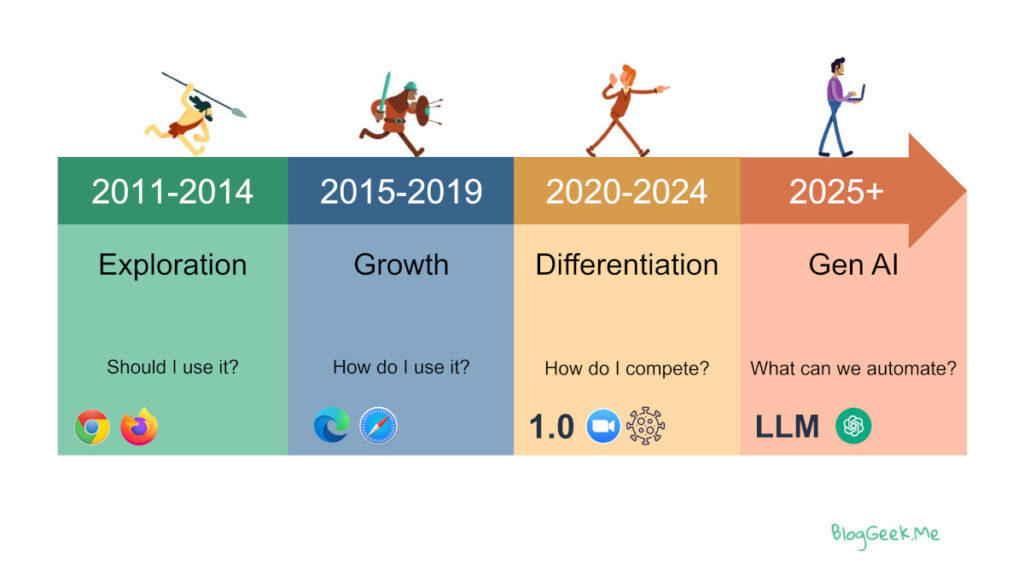

The evolution of WebRTC, divided into 4 eras

I first had the above done about 5 years ago. Obviously, it had only the first 3 eras in it back then: Exploration, Growth and Differentiation.

- EXPLORATION: In its first phase of exploration, only Chrome and Firefox supported WebRTC. The main questions we were asking were: Should we use it? Is this a real thing? Is this the technology we should rely on moving forward?

- GROWTH: During the second phase of growth, that changed. With the introduction of WebRTC in Microsoft Edge and Apple Safari, along with a better understanding of the WebRTC technology, the question shifted. We were now more interested in how to use it: which use cases is WebRTC good for? What is the best way to employ this technology?

- DIFFERENTIATION: During the third phase of differentiation, which is coming to an end, we focused on how to break through the noise. WebRTC 1.0 got officially released. Zoom showed tremendous growth (without using WebRTC), and the pandemic caused an increase in the use of remote communications, teaching billions of people how to make video calls. Here the focus was on how to compete with others in the market: what can we do with WebRTC that will be hard for them to copy?

As we are nearing the end of 2024, we are also closing the chapter on the Differentiation era and starting off the Generative AI one. The line isn’t as distinct as it was in the past, but it is there – you feel the difference in the energy inside companies today and where they put their focus and resources 👉 it is ALL about Generative AI.

Why Generative AI? Why now?

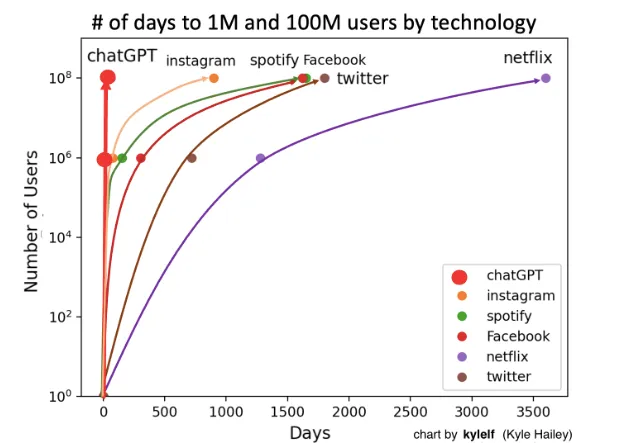

OpenAI introduced ChatGPT in November 2022, making LLMs (Large Language Models) popular. ChatGPT enabled users to write text prompts and have “the machine” reply back with answers that were human in nature. The initial adoption of ChatGPT was… instant – faster than anything we’ve ever seen before.

Source: Kyle Hailey

This validated the use of AI and Generative AI in a back and forth “prompted” conversation between a human and a machine. From there, the market exploded.

If you look at the WebRTC domain these days, it is kinda “boring”. We’ve had the adrenaline rush of the pandemic, with everyone working on scaling, optimization and getting to a 49-view magic squares layout. But now? Crickets. The use of WebRTC has gone down drastically since the pandemic. It is still a lot higher than in pre-pandemic times, but lower than at the pandemic peak. Companies’ usage went back down and their investments shrank with it. The world’s turmoil and instability aren’t helping here, and inflation is stifling investments as well.

So a new story was needed. One that would attract investment. LLMs and Generative AI became that story, powered by the popularity of OpenAI’s ChatGPT.

This is such a strong pull that I believe it is going to last for quite a few years, earning it an era of its own in my evolution of WebRTC view.

The need for speed: how GenAI and LLM fit so well with WebRTC

ChatGPT brought us prompting. You ask a question in text. You get a text answer back. A ping pong game. Conversations are somewhat like that, with a few distinct differences:

- There’s voice involved, which is more nuanced than just text

- You can cut off people in mid-sentence (I know I do it a lot more than I should)

- It needs to be more real time. Latency is critical – no time to wait for the answers because we need the conversation to be natural

So there’s a race going on, where work is being invested everywhere in the Generative AI pipeline to reduce latency as much as possible. I touched on that when I wrote about OpenAI, LLM and WebRTC a few months back.
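To make the “everywhere in the pipeline” point a bit more concrete, here is a minimal Python sketch of a latency budget for a single voice turn. The stage names, the millisecond figures and the 800 ms target are all hypothetical placeholders chosen for illustration, not measurements of any real product or vendor. Swap in your own numbers.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Stage:
    """One step of a voice-to-LLM-to-voice round trip."""
    name: str
    latency_ms: float


def total_latency_ms(stages):
    """Sum the per-stage latencies of one conversational turn."""
    return sum(stage.latency_ms for stage in stages)


def slowest_stage(stages):
    """Return the stage that contributes the most latency."""
    return max(stages, key=lambda stage: stage.latency_ms)


# ASSUMPTION: every name and number below is an illustrative placeholder.
PIPELINE = [
    Stage("capture + encode", 30),
    Stage("network uplink", 40),
    Stage("speech-to-text", 150),
    Stage("LLM first token", 300),
    Stage("text-to-speech first audio", 120),
    Stage("network downlink", 40),
    Stage("jitter buffer + playout", 40),
]

# ASSUMPTION: an arbitrary target for a turn that still feels natural.
TARGET_MS = 800

if __name__ == "__main__":
    total = total_latency_ms(PIPELINE)
    worst = slowest_stage(PIPELINE)
    print(f"total: {total} ms (target {TARGET_MS} ms)")
    print(f"slowest stage: {worst.name} ({worst.latency_ms} ms)")
    print("within budget" if total <= TARGET_MS else "over budget")
```

With these placeholder numbers the turn adds up to 720 ms. The point is only that every stage eats into the same budget, so shaving latency in the network legs matters as much as shaving it in the models.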

Part of that pipeline is sending and receiving audio over the network, and that is best served today using WebRTC – WebRTC is low latency and available in web browsers. The interfaces vendors are designing for audio and LLM interactions aren’t the most optimized or simple to use for actual conversations, which is why many CPaaS vendors are adding that layer on top today. I am not quite sure that this is the right approach, or what things will look like a few months out. So many things are currently being experimented with and decided.

What does that mean for WebRTC in 2025 and beyond

WebRTC has been in a kind of maintenance status for quite some time now. This isn’t going to change much in 2025. The most we will see is developers figuring out how to best fit Generative AI with WebRTC.

Some of the time this will be about the best integration points and APIs to use. At other times, it is going to be about minor tweaks to WebRTC itself and maybe even introducing a new API or two to make it easier for WebRTC to work with Generative AI.

More on what’s in store for us in 2025 in a few weeks’ time, once I actually sit down and work it out in a separate article.

I am here to help

If you are looking to figure out your own way with Generative AI and WebRTC, then contact me.

I am working on a brand new workshop titled “Generative AI and WebRTC” – you can register for the webinar now and reserve your spot.
