Understanding API and SDK: Dive into their definitions and learn why both are crucial for effective software development with CPaaS and LLM.

An API and an SDK: similar, but different. Both are interfaces that services use to expose their capabilities to developers and applications. For the most part, we’ve been happy enough with REST-based APIs, usually defined using an OpenAPI specification.

But for things like WebRTC, communications and WebSocket based interfaces, an API just isn’t enough.

Let’s dive in to see why.

What are API and SDK?

We will start with a quick definition of each.

Keep in mind that the actual definitions are rather fluid – the ones below are just those that are common today in our industry (networking software).

API

API stands for Application Programming Interface. In this day and age, such an interface is usually one that gets used by remote invocation – from one machine to another over an IP network.

The most common approach to building an API? REST.

REST is a rather simple mechanism built on top of HTTP. For me, it is a way to formalize how a URL can be used to retrieve values, push values or execute “stuff” on the server.

Why REST? Because it uses HTTP, making it easily accessible and usable by web applications running inside web browsers.
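To make the "retrieve values, push values or execute stuff" idea concrete, here is a minimal sketch. It only builds the HTTP requests and never sends them. The service at `api.example.com` and its `/messages` and `/calls` resources are hypothetical, made up for illustration.

```python
import json
from typing import Optional
from urllib.request import Request

BASE_URL = "https://api.example.com/v1"  # hypothetical service, not a real endpoint


def build_request(method: str, path: str, payload: Optional[dict] = None) -> Request:
    """Build (but do not send) an HTTP request for a REST-style resource."""
    body = json.dumps(payload).encode("utf-8") if payload is not None else None
    headers = {"Content-Type": "application/json"} if body else {}
    return Request(f"{BASE_URL}{path}", data=body, headers=headers, method=method)


# Retrieve a value, push a value, and execute "stuff" on the server.
get_message = build_request("GET", "/messages/42")
create_message = build_request("POST", "/messages", {"to": "+15550100", "body": "Hi"})
cancel_call = build_request("POST", "/calls/7/cancel")

for req in (get_message, create_message, cancel_call):
    print(req.get_method(), req.full_url)
```

The URL names the thing you are acting on, and the HTTP verb says what to do with it. That is most of what there is to it.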

Then there’s OpenAPI, which is simply a specification for describing REST interfaces in a formal way. This lets software tools create, document, deploy, test and use APIs.
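As a rough illustration of what "formal" buys you, the sketch below holds a tiny OpenAPI 3 description as a Python dictionary and walks it to list the operations, which is the kind of thing documentation and code generation tools do. The "Example Messaging API" and its operation names are assumptions for the example, not a real service.

```python
from typing import List, Tuple

# A deliberately tiny OpenAPI 3 description of a hypothetical messaging API.
SPEC = {
    "openapi": "3.0.3",
    "info": {"title": "Example Messaging API", "version": "1.0.0"},
    "paths": {
        "/messages": {
            "post": {
                "operationId": "sendMessage",
                "responses": {"201": {"description": "Created"}},
            },
        },
        "/messages/{id}": {
            "get": {
                "operationId": "getMessage",
                "parameters": [
                    {"name": "id", "in": "path", "required": True,
                     "schema": {"type": "string"}},
                ],
                "responses": {"200": {"description": "OK"}},
            },
        },
    },
}

HTTP_METHODS = {"get", "put", "post", "delete", "patch", "head", "options", "trace"}


def list_operations(spec: dict) -> List[Tuple[str, str, str]]:
    """Return (METHOD, path, operationId) for every operation in the spec."""
    operations = []
    for path, item in spec["paths"].items():
        for method, operation in item.items():
            if method in HTTP_METHODS:
                operations.append((method.upper(), path, operation["operationId"]))
    return sorted(operations)


for method, path, name in list_operations(SPEC):
    print(f"{name}: {method} {path}")
```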

There are other types of APIs that don’t rely on REST or OpenAPI, but most do.

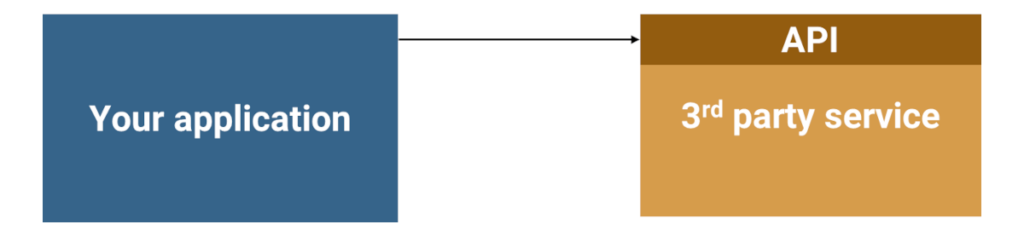

The unique thing about an API? It sits “inside” or “on top” of the service we want to interface with, and we invoke it from a separate process or machine.

SDK

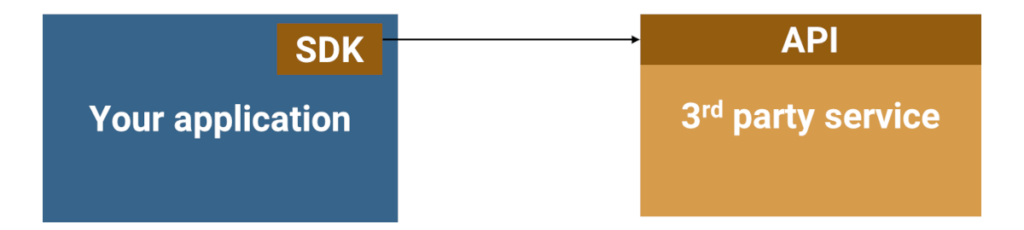

SDK stands for Software Development Kit. For me, that’s a piece of code that gets embedded in your application as a library that you use directly.

Where an API is invoked remotely, over the network, an SDK is invoked directly, from inside a software application.

In many cases, an SDK is built on top of an API, to make it easier to integrate with.
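Here is a minimal sketch of that layering, assuming a hypothetical messaging service. `MessagingClient`, its methods and the URL are invented for the example. The transport is injected so the sketch runs without a network; a real SDK would make actual HTTP calls there.

```python
from typing import Callable, List, Optional, Tuple

# A transport takes (method, url, body) and returns the decoded JSON response.
Transport = Callable[[str, str, Optional[dict]], dict]


class MessagingClient:
    """Hypothetical SDK: callers use methods, never URLs or HTTP verbs."""

    def __init__(self, transport: Transport,
                 base_url: str = "https://api.example.com/v1"):
        self._transport = transport
        self._base_url = base_url

    def send_message(self, to: str, body: str) -> str:
        response = self._transport(
            "POST", f"{self._base_url}/messages", {"to": to, "body": body})
        return response["id"]

    def get_status(self, message_id: str) -> str:
        response = self._transport(
            "GET", f"{self._base_url}/messages/{message_id}", None)
        return response["status"]


# Stand-in for the network so the example is self-contained.
calls: List[Tuple[str, str]] = []


def fake_transport(method: str, url: str, body: Optional[dict]) -> dict:
    calls.append((method, url))
    return {"id": "msg_1"} if method == "POST" else {"status": "delivered"}


client = MessagingClient(fake_transport)
message_id = client.send_message("+15550100", "Hello")
status = client.get_status(message_id)
print(message_id, status)
```

The application code at the bottom never touches REST. That is the whole point of the SDK.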

CPaaS and Programmable Communication interfaces

Let’s look at Twilio as an example, and at the various interfaces they have on offer:

- An API. REST based. The classic definition of an API as given above

- Helper libraries. Also known as server-side SDKs. These libraries are written in various languages and simply call the APIs, so you don’t have to deal with REST calling directly

- TwiML. A kind of XML structure that can be used to define what is done when a phone number is dialed. It is a kind of low-code technique that Twilio introduced years ago

- Client-side SDKs. These SDKs are needed when you want to run complex code on client devices:

  - To implement voice and video calling using WebRTC. Because here, REST just isn’t enough…

  - When using Twilio’s Conversations and Sync services. Because these likely use WebSocket instead of REST and are more challenging to implement directly using something like REST (they are likely more stateful in nature)
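For a feel of what TwiML looks like, the sketch below generates a small document with Python’s standard XML library. `<Response>`, `<Say>` and `<Dial>` are TwiML elements; the greeting and the phone number are placeholders, and the `build_twiml` helper is my own naming, not part of any Twilio library.

```python
import xml.etree.ElementTree as ET


def build_twiml(greeting: str, forward_to: str) -> str:
    """Return TwiML that speaks a greeting and then dials a number."""
    response = ET.Element("Response")
    ET.SubElement(response, "Say").text = greeting
    ET.SubElement(response, "Dial").text = forward_to
    return ET.tostring(response, encoding="unicode")


twiml = build_twiml("Thanks for calling. Connecting you now.", "+15550100")
print(twiml)
```

Your web server returns a document like this when the call comes in, and the platform executes it. No call control code is needed on your side.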

A client-side SDK becomes necessary at the point where explaining how to interact with the server’s interface (think REST) gets complicated. Remember – CPaaS vendors are there to simplify development. So adding SDKs to simplify it further where needed makes total sense.

WebRTC almost forces us to create such client-side SDKs. Especially since signaling isn’t defined for WebRTC – it is up to the vendor to decide, and here, the vendor is the CPaaS vendor. Once the vendor defines that interface, it is easier to implement the client side of it as an SDK than to document it well enough and hope customers will be able to implement it properly without wasting too much time and too many support resources.
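To show what "defining signaling" can mean in practice, here is a minimal sketch of a made-up wire format for exchanging offers, answers and ICE candidates. The `SignalingMessage` shape and its field names are assumptions for illustration only. Every vendor picks its own, which is exactly the problem.

```python
import json
from dataclasses import asdict, dataclass

ALLOWED_KINDS = {"offer", "answer", "candidate"}


@dataclass
class SignalingMessage:
    kind: str        # "offer", "answer" or "candidate"
    session_id: str  # which call this message belongs to
    payload: str     # SDP blob or ICE candidate line


def encode(message: SignalingMessage) -> str:
    """Serialize a message to JSON, rejecting kinds the protocol doesn't define."""
    if message.kind not in ALLOWED_KINDS:
        raise ValueError(f"unknown message kind: {message.kind}")
    return json.dumps(asdict(message), sort_keys=True)


def decode(raw: str) -> SignalingMessage:
    """Parse JSON back into a message, with the same validation."""
    data = json.loads(raw)
    if data.get("kind") not in ALLOWED_KINDS:
        raise ValueError(f"unknown message kind: {data.get('kind')}")
    return SignalingMessage(**data)


offer = SignalingMessage("offer", "session-1", "v=0 (SDP shortened for the example)")
wire = encode(offer)
print(wire)
print(decode(wire) == offer)
```

Even this toy version needs validation rules, message ordering and error handling to be documented. An SDK ships all of that as working code instead.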

Programmable LLM interfaces

Time to look at LLM and Generative AI interfaces that are programmable. We do that by reviewing OpenAI’s developer platform documentation. Here’s what they have available at the moment:

- REST API and an SDK, for the text based prompting technology. The SDKs are available for JavaScript and Python

- WebSocket interface/API, for voice prompting. No SDK (yet)

- WebRTC interface/API, for voice prompting. No SDK (yet)

For voice, OpenAI’s Realtime API started by offering a WebSocket interface. Google Gemini followed suit.

Why WebSocket?

- Because the data that needs to pass through the connection is audio and not just a text request. HTTP is a bit less suitable for this

- It is bidirectional in nature – voice goes both ways here

- There are extra events that have to go alongside the voice itself

- The connection needs to stay open for long periods of time

- There is no real “request-response” paradigm like the one we’re used to on the web and with HTTP. While it is “there” to some extent, human interactions aren’t just a back and forth ping pong game

- Also, all online TTS and STT services out there offer a WebSocket interface, so this was just following an adjacent industry’s best practices (showing why sometimes best practices just aren’t the best)
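The points above can be sketched as a single stream that carries both audio and events. The frame format below (JSON with base64-encoded audio, plus `speech_started` and `speech_stopped` events) is hypothetical and is not OpenAI’s or Google’s actual protocol. It runs in memory, with no real WebSocket involved.

```python
import base64
import json
from typing import Iterable, List, Tuple


def audio_frame(pcm: bytes) -> str:
    """Wrap raw audio bytes in a JSON text frame."""
    return json.dumps({"type": "audio", "data": base64.b64encode(pcm).decode("ascii")})


def event_frame(name: str) -> str:
    """Wrap a control event in a JSON text frame."""
    return json.dumps({"type": "event", "name": name})


def demultiplex(frames: Iterable[str]) -> Tuple[bytes, List[str]]:
    """Split one incoming stream into its audio bytes and its events."""
    audio = bytearray()
    events = []
    for raw in frames:
        frame = json.loads(raw)
        if frame["type"] == "audio":
            audio.extend(base64.b64decode(frame["data"]))
        elif frame["type"] == "event":
            events.append(frame["name"])
    return bytes(audio), events


incoming = [
    event_frame("speech_started"),
    audio_frame(b"\x01\x02"),
    audio_frame(b"\x03"),
    event_frame("speech_stopped"),
]
audio, events = demultiplex(incoming)
print(len(audio), events)
```

Notice there is no request paired with each response here. Frames simply arrive, in either direction, for as long as the connection stays open.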

Why no SDK? Because this is still in beta…

They quickly followed with a WebRTC interface. Which makes total sense – WebSocket isn’t really real time and comes with its own set of limitations for an interactive voice interface (more on that another time).

What they didn’t do here, either, was add an SDK.

And while with WebSocket this is “acceptable”, for WebRTC… I believe it is less so.

👉 Here’s what I wrote about OpenAI, LLMs, voice and WebRTC a few months back

Is an SDK critical to “hide” a WebRTC interface?

Yes it is.

WebRTC has an API surface that is quite extensive. It includes APIs, SDP, network configuration, etc.

Leaving all of this exposed, with no implementation other than an example in the documentation, isn’t going to help anyone.

WebRTC as a development interface suffers from a few big challenges:

- Low level, suitable for experienced WebRTC developers only

- Exposes internals of the implementation, which are better kept out of a third party engineer’s hands (for example, controlling the iceServers configuration)

- Expansive interface with 100s of APIs that make up WebRTC. Which ones should a developer use? Which ones were tested against?

- Varied interface that includes APIs, callbacks, promises, configurations and SDP munging

- No defined signaling means someone needs to define it. And then developers need to understand and use that definition. Tricky (trust me)

This means that without having an SDK to a WebRTC interface (be it for a Programmable Video or Voice service, or for an LLM / Generative AI service), you are going to be left with a solution that is hard to adopt and easy to break:

- Hard to adopt because it takes a long time for developers to integrate with, and in the process eats up expensive support resources on your end (not to mention frustration for both the customer and the support people)

- Easy to break because there are just too many things developers can do that you haven’t thought about, and these are bound to fail or even cause outages on your end
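One way an SDK can help on both fronts is to expose a narrow surface, keep the internals (such as the ICE servers) to itself, and refuse calls made in the wrong order. The sketch below is a hypothetical facade only: `VoiceSession`, its methods and the STUN address are invented, and the actual WebRTC work is left out.

```python
from enum import Enum


class State(Enum):
    IDLE = "idle"
    CONNECTED = "connected"
    CLOSED = "closed"


class VoiceSession:
    """Hypothetical SDK facade; the real WebRTC calls are left out."""

    # Chosen and tested by the vendor, not passed in by the application.
    _ICE_SERVERS = ({"urls": "stun:stun.example.com:3478"},)

    def __init__(self, token: str):
        self._token = token
        self._state = State.IDLE
        self._muted = False

    @property
    def state(self) -> State:
        return self._state

    def connect(self) -> None:
        self._require(State.IDLE, "connect")
        # A real SDK would create the peer connection and run signaling here.
        self._state = State.CONNECTED

    def set_muted(self, muted: bool) -> None:
        self._require(State.CONNECTED, "set_muted")
        self._muted = muted

    def hangup(self) -> None:
        self._require(State.CONNECTED, "hangup")
        self._state = State.CLOSED

    def _require(self, expected: State, action: str) -> None:
        # Fail fast on misuse instead of letting it reach the service.
        if self._state is not expected:
            raise RuntimeError(f"cannot {action} while {self._state.value}")


session = VoiceSession(token="demo-token")
session.connect()
session.set_muted(True)
session.hangup()
print(session.state.value)
```

Three methods and one property are far easier to document, test and support than the full WebRTC surface.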

Oh, and we didn’t go into the discussion of what to do with Android and iOS developers that might want to integrate with the services inside a native application (they need native SDKs…).

If you’re aiming to have an API for a WebRTC interface, then you should also work towards having an SDK for it. And if not, be very clear with yourself about why you don’t need one.