Why and where do we use SVC exactly?

[When Alex Eleftheriadis, Ph.D., the Chief Scientist & Co-founder of Vidyo, approached me about writing a piece about SVC and WebRTC – how could I refuse? Someone had to give the explanation, and what better person than Alex to do that?]

Just when the infamous WebRTC video codec debate appears to have been settled, with both H.264 and VP8 being set as mandatory-to-implement by browsers, VP9 has started making inroads into the WebRTC software stack and into browsers themselves. Indeed, Chrome 48 includes, for the first time, VP9 support for WebRTC. Firefox also includes support for it in WebRTC in the Developer Version of Firefox 46.

Why is this relevant for the WebRTC community – users and developers? First off, VP9 codec offers significantly better compression efficiency compared with H.264, and even more so compared with VP8. This translates to better quality for the same bit rate, or a lower bit rate for the same quality (as low as 50%). This by itself is a big plus, but it does not tell even half of the story.

The Need for Scalability

When using WebRTC beyond two-way, peer-to-peer calls, or in networks with significant quality problems, system architects are encountering the same design issues that the videoconferencing industry has been dealing with for a long time now. It is not accidental then that WebRTC solutions designed for multi-point video gravitate towards those offered in videoconferencing, or that videoconferencing companies are adapting their systems to become WebRTC solutions. For the latter, this typically entails aligning with transport-level, security, and NAT traversal specifications, and of course providing a JavaScript library that enables WebRTC-enabled browsers to use their system’s facilities.

If we look at today’s architectural landscape for high-quality multi-point video, there are two main designs. One is based on transmission of a single stream of scalable coded video. Scalable means that the same bitstream contains subsets, called layers, that allow you to reconstruct the original at different resolutions. If you get the lowest, or base, layer you can decode the video at a certain resolution, whereas if you also get a higher, or enhancement layer, you can decode the video at a higher resolution. This is great for robustness and adaptability, because you do not need to process the video at all to get at the different resolutions.

The second design is based on simulcast transmission of two separate streams that encode the same video at different resolutions. Contrary to the scalable design, here we have two encoding passes rather than one, with the associated streams requiring a higher bitrate compared with scalable coding. It is also less error resilient. On the plus side, however, simulcast allows the use of older, non-scalable decoders. This has been an important consideration for systems that interface with legacy devices (not relevant for WebRTC).

Single Layer, Scalable, and Simulcast Coding of Video. In scalable coding the various layers (“a” and “A”) are multiplexed in a single stream. In simulcast two or more independently encoded streams are produced and are transmitted separately.

Both of these designs utilize a special type of server for which I have coined the term “Selective Forwarding Unit” (SFU). This type of server was not known when the original RTP Topologies RFC was published in 2008 (RFC 5117), but it is now included in its 2015 update, RFC 7667.

The operation of the SFU, using the VidyoRouter as an example. In the diagram the SFU receives three scalable streams, and it selects to forward the full resolution for the blue participant (base and enhancement layers), but only the base layer for the green and yellow participants.

The SFU works in the following way: it receives scalable or simulcast video, and it decides which layer or which stream to forward to a receiving participant. There is no signal processing involved, and the operation incurs very little delay (less than 10 ms is typical). If we contrast this with the traditional architectures that are still being used and involve transcoding of multiple videos, the advantages are obvious – both in terms of processing complexity but also in terms of delay (150 ms delays would be typical for the traditional architectures). Minimizing delay is hugely important for perfecting the end-user experience.

What is interesting is also how the receiving endpoint operates. Contrary to legacy videoconferencing systems, it receives multiple streams that it has to individually decode, compose, and display on the screen. This multi-stream architecture perfectly matches WebRTC’s design.

The multi-stream architecture of an SFU endpoint – the endpoint receives multiple video streams that it has to individually decode, and composite on the user’s screen.

To appreciate the significance of these architectures it suffices to point out that both Skype for Business and Google+ Hangouts use simulcasting (of H.264 and VP8, respectively). So does the open source VideoBridge by Jitsi. Vidyo, which first introduced the concept in its VidyoRouter product in 2008, is using scalability (with H.264 SVC). Simulcast support is now in the scope of the WebRTC 1.0 specification and it is being actively worked upon. Scalable coding is already supported by the ORTC specification, and will be addressed in WebRTC-NV (post 1.0).

Scalability, SVC and WebRTC VP9

Now we can turn back to our original question regarding scalability and VP9. If you want to be able to use an SFU architecture with scalable coding, the codec itself must support scalability. That’s why back in 2013 Vidyo announced that it would be collaborating with Google to develop a scalable extension for the VP9 codec. This effort is now bearing fruits.

One may ask, “why care about VP9, I will just use whatever stock codec my browser has and be done with it.” The answer is that you do want to care, when quality matters. Depending on the codec used, and the type of multi-point server architecture deployed, the end user will get a vastly different quality of experience.

We can think of the WebRTC endpoint as a kitchen that has a bunch of ingredients. If your expectations are low, you can go for the raw vegetables and have a meal in no time. If you want a fine meal, you will want both the right ingredients as well as the right recipe. The standardization process will ensure that the WebRTC kitchen has all the right ingredients. The recipe and, in fact, the cook, are all part of whoever is offering the service. By taking into account all the realities of imperfect network transmission, heterogeneous clients, mobility, etc., they make sure that the users enjoy a great experience. If you go with a proprietary solution, you can then add plenty of secret sauce.

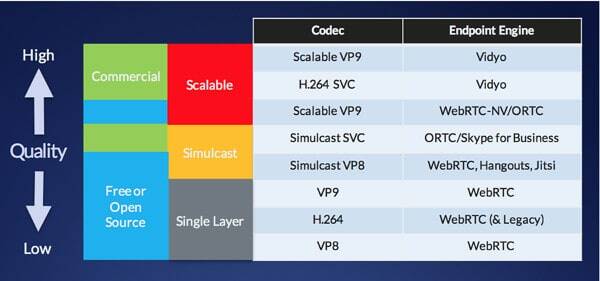

Endpoint Quality Scale: One ordering of relative quality of different codec and endpoint engine combinations.

Taking into account the different combinations of video codecs and endpoint engines, I put together an “Endpoint Quality Scale” diagram, shown above. You can think of it as the skeleton of the multi-point video kitchen menu. Vidyo is vigorously trying to be the three Michelin star restaurant; its proprietary engine uses a lot of secret sauce in addition to the standard ingredients. But together with the industry as a whole we want to make sure that the menu, especially when it comes to WebRTC, offers something for all tastes and price ranges.

Bottom line, when people select platform providers for their WebRTC-based solutions they need to be aware of these differences and, especially when quality matters, make an educated and well-informed choice. Bon appetit.

Read this also if you want to know if VP9 codec is the right choice for your WebRTC application.

On the horizon: AV1

A lot of the discussions and debates around VP9 haven’t matured into real adoption. It seems that actual adoption of SVC technology, which is available today already in Chrome as part of WebRTC, will just have to wait for the HEVC vs AV1 debate and its outcomes.

That was a nice explanation of SVC, particularly with the contrast to simulcast.

and if you’d like to hear more about this https://www.vuc.me/2014/vuc484-the-future-of-video/ is still relevant!