I say it doesn’t matter what the technique is, as long as you go through the motions of upgrading your WebRTC media servers…

Here’s the thing. In many cases, you end up with a WebRTC deployment built for you. Or you invest in a project until its launch.

And that’s it.

Why Upgrade WebRTC Media Servers?

With WebRTC, things become interesting. WebRTC is still a moving target. Yes, I’ve been promised that WebRTC 1.0 will be complete and published by the end of the year. I’ve been hearing that promise since 2015. It might actually happen in 2017, but browser vendors still seem to be moving fast with WebRTC, improving and optimizing their implementations. And breaking stuff at times as they move along.

Add to that the fact that media servers are complex, and they have their own fixes, patches, security updates, optimizations and features – and you find yourself with the need to upgrade them from time to time.

Upgradability, as a non-functional requirement, belongs in your WebRTC requirements. I just updated my template so you won’t forget it.

I’ll take it a bit further still:

- With WebRTC, the browser (your client) gets upgraded automatically. It is for your own safety 🙂 This, in turn, may force you to upgrade the rest of your infrastructure. And which part is the most prone to it?

- Your WebRTC media server. It needs to be upgraded, first to keep pace with the browsers, but also, and no less important, to improve. But there is also

- The signaling server you use for WebRTC. That one may need some polish and fine-tuning because of browser changes. It may also need some care and attention, especially if and when you start expanding your service and need to scale out, locally or geographically

- Your TURN/STUN servers. These tend to go through the fewest updates (and they are also relatively easy to upgrade in production)

Great. So we need to upgrade our backend servers. And we must do it if we want our service to be operational next year.

Talking Production

But what about a production system? One that is running and has active users on it.

How do you upgrade it exactly?

In a recent tweet, Gustavo García listed the available techniques and asked people to vote on them:

Just curious about how do you upgrade your #WebRTC mediaservers?

— Gustavo Garcia (@anarchyco) August 4, 2017

I’d like to review these alternatives and see why developers opt for “Draining first”. I’ll be using Gustavo’s naming convention here as well. I will introduce them in a different order though.

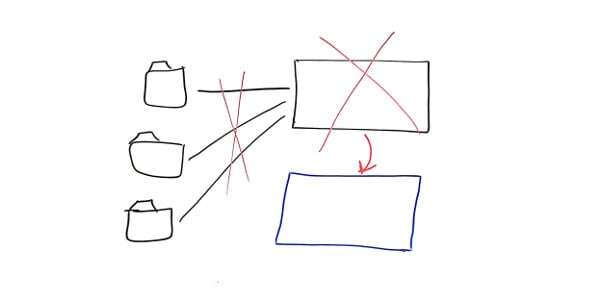

#1 – Immediate Kill+Reconnect

This one is the easiest and most straightforward alternative.

If you want to upgrade WebRTC media servers, you take the following steps:

- Kill the existing server(s)

- Upgrade their software (or outright replace their machines – virtual or bare metal)

- Reconnect the sessions that got interrupted – or don’t…

This is by far the simplest solution for developers and DevOps. But it is the most disruptive for the users.

That third step is also something of a choice. You can decide not to reconnect existing sessions, which means users will have to reconnect on their own (refresh the web page or whatever). Or you can have them reconnected, either by triggering it from the server somehow or by having the clients implement some persistence that makes them retry automatically on service interruption.
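For the client-side persistence option, a retry loop with exponential backoff and jitter is a common general-purpose pattern. The sketch below assumes a hypothetical `connect()` callable that redoes signaling and ICE against whichever media server the backend now hands out and returns True on success; the delays and attempt limit are illustrative defaults, not recommendations from the options above.

```python
import random
import time
from typing import Callable, Optional


def reconnect_with_backoff(
    connect: Callable[[], bool],
    max_attempts: int = 6,
    base_delay: float = 0.5,
    max_delay: float = 8.0,
    sleep: Callable[[float], None] = time.sleep,
    rng: Optional[random.Random] = None,
) -> int:
    """Call `connect` until it returns True or attempts run out.

    Returns the 1-based attempt that succeeded, or 0 if every attempt failed.
    `connect` is a hypothetical hook that re-establishes the session.
    """
    if rng is None:
        rng = random.Random()
    for attempt in range(1, max_attempts + 1):
        if connect():
            return attempt
        if attempt < max_attempts:
            # Exponential backoff (0.5s, 1s, 2s, ... capped at max_delay) with
            # "full jitter", so clients cut off by the same server kill
            # don't all hit the backend again at the same instant.
            delay = min(max_delay, base_delay * 2 ** (attempt - 1))
            sleep(rng.uniform(0, delay))
    return 0
```

Injecting `sleep` and `rng` keeps the loop testable: pass `sleep=lambda s: None` to skip the waits entirely.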

This is also the easiest way to maintain a single version of your backend running at all times (more on that later).

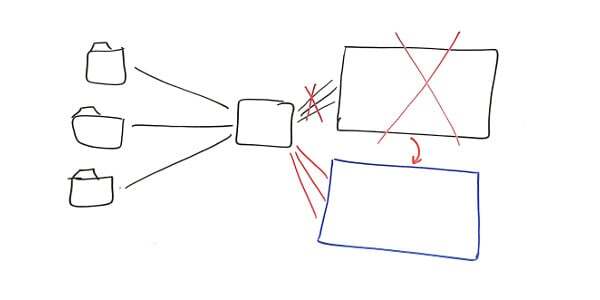

#2 – Active/Passive Setup

In an active/passive setup you’ll have idle machines sitting and waiting to pick up traffic when the active WebRTC media servers go down (for whatever reason, not only upgrades).

This alternative is great for high availability – offering uptime when machines or whole data centers break, as the time to migrate or maintain service continuity will be close to instantaneous.

The downside here is cost. You pay for these idle machines that do nothing but sit and wait.

There are variations of this approach, such as active-active and clustering of machines. I’m not going to go into the details here.

In general, there are two ways to handle this approach:

- Upgrade the passive machines (or even create them just before the upgrade). Once all are upgraded, divert new traffic to them. Kill the old machines one by one as the traffic on them wanes

- Employ a rolling upgrade, where you upgrade one (or more) machines at a time and continue to “roll” the upgrade across your infrastructure. This will reduce your costs somewhat if you don’t plan on keeping a 1:1 active/passive setup at all times

The first option above is the classic active/passive setup. The second is somewhat of an optimization that becomes more relevant as your backend grows – it is damn hard to replace everything at the same time, so you do it in stages instead.
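A rolling upgrade can be sketched as a loop over batches of servers. This is a minimal illustration, assuming hypothetical `upgrade(server)` and `healthy(server)` hooks into your own DevOps tooling; halting the roll at the first unhealthy batch is one reasonable policy, not the only one.

```python
from typing import Callable, List, Sequence


def rolling_upgrade(
    servers: Sequence[str],
    batch_size: int,
    upgrade: Callable[[str], None],
    healthy: Callable[[str], bool],
) -> List[List[str]]:
    """Upgrade `servers` one batch at a time and return the batches in order.

    `upgrade` and `healthy` are hypothetical hooks (replace or patch the
    machine, then run a health check). Raises RuntimeError as soon as a
    batch fails its health check, leaving the remaining servers untouched.
    """
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    done: List[List[str]] = []
    for i in range(0, len(servers), batch_size):
        batch = list(servers[i:i + batch_size])
        for server in batch:
            upgrade(server)
        done.append(batch)
        if not all(healthy(s) for s in batch):
            # Stop the roll so a bad version doesn't spread further.
            raise RuntimeError(f"batch {batch} failed health check")
    return done
```

A larger `batch_size` finishes sooner but puts more of the fleet on an untested version at once; a batch size equal to the fleet size degenerates into the replace-everything approach.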

Note that in all cases from here on you are going to have at least two versions of your WebRTC media servers running in your infrastructure during the upgrade. You also don’t really know when the upgrade is going to complete – it depends on when people close their ongoing sessions.

In some ways, the next two cases are actually just answering the question – “but what do we do with the open sessions once we upgrade?”

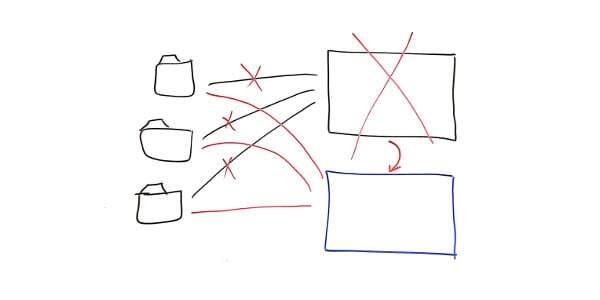

#3 – Sessions Migration First

Sessions migration first means that we aren’t going to wait for the current sessions to end before we kill the WebRTC media server they are on. But we aren’t going to just immediately kill the session either (as we did in option #1).

What we are going to do is have some means of persistence for the sessions. Once a new, upgraded WebRTC media server machine is up and running, we are going to instruct the sessions on the old machine to migrate to the new one.

How?

Good question…

- We can add some control message and send it via our signaling channel to the clients in that session so they’ll know that they need to “silently” reconnect

- We can have the client persistently try to reconnect the moment the session is severed with no explanation

- We can try to replicate the machine in full and have the load balancer do the switchover from old to new (don’t try this at home, and probably don’t waste your time on it – too much of a headache and effort to deal with anyway)
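The control-message option might look roughly like the sketch below: the server builds one message per participant of every session hosted on the old machine, and the client rejoins the same session on the new one. The `"migrate"` message schema, the `Session` type and the `rejoin` callback are all hypothetical, made up for illustration; in practice this would ride on whatever signaling protocol you already use.

```python
import json
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Session:
    session_id: str
    server: str
    participants: List[str] = field(default_factory=list)


def migration_messages(sessions: List[Session], old: str, new: str) -> Dict[str, str]:
    """Server side: a hypothetical 'migrate' message for every participant
    of every session on `old`, telling them to silently rejoin on `new`.

    Returns {participant_id: json_message}.
    """
    out: Dict[str, str] = {}
    for s in sessions:
        if s.server != old:
            continue  # sessions on other servers are left alone
        msg = json.dumps({"type": "migrate", "session": s.session_id, "server": new})
        for participant in s.participants:
            out[participant] = msg
    return out


def handle_control(raw: str, rejoin: Callable[[str, str], None]) -> bool:
    """Client side: on 'migrate', rejoin the same session on the new server.

    Returns True if the message was a migration request.
    """
    msg = json.loads(raw)
    if msg.get("type") != "migrate":
        return False
    rejoin(msg["session"], msg["server"])
    return True
```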

Whatever the technique, the result is that you are going to be able to migrate rather quickly from one version to the next – simply because once the upgrade is done, there won’t be any sessions left on the old machine and you’ll be able to decommission it, or upgrade it as well as part of a rolling upgrade mechanism.

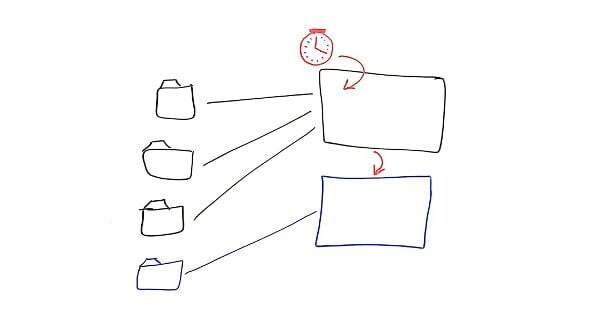

#4 – Draining First

Draining first is actually draining last… let’s see why.

What we are going to do here is bring up our new upgraded WebRTC media servers, route all new traffic to them and… that’s about it.

We will keep the old machines up and running until they drain of the sessions they are handling. This can take a couple of minutes. An hour. A couple of hours. A day. Indefinitely. The type of service you have and how users interact with it will determine how long, on average, it takes a WebRTC media server to drain its sessions with no service interruption.
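On the routing side, draining boils down to a flag per server: once a server is draining, it keeps its existing sessions but is never picked for new ones. A minimal sketch, assuming a hypothetical in-memory `MediaServer` registry; the least-loaded selection is an illustrative policy, and any load-balancing policy that respects the flag would do.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class MediaServer:
    name: str
    version: str
    active_sessions: int = 0
    accepting: bool = True  # set to False once the server starts draining


def start_drain(servers: List[MediaServer], old_version: str) -> None:
    """Stop routing new sessions to every server still on `old_version`."""
    for s in servers:
        if s.version == old_version:
            s.accepting = False


def pick_server(servers: List[MediaServer]) -> Optional[MediaServer]:
    """Route a new session to the least-loaded server still accepting traffic.

    Draining servers keep serving their existing sessions but are skipped here.
    Returns None if no server is accepting.
    """
    candidates = [s for s in servers if s.accepting]
    if not candidates:
        return None
    chosen = min(candidates, key=lambda s: s.active_sessions)
    chosen.active_sessions += 1
    return chosen
```

Note that the old version-1.0 servers here may have fewer sessions than the new one and still get nothing: once draining starts, load no longer matters for them.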

A few things to ponder here (some came from the replies to that original tweet):

- WebRTC media servers can’t hold too much traffic (they don’t scale to millions of sessions in parallel)

- With a large service, you can easily get to hundreds of these machines

- Having two installations running in parallel, one with the new version and one with the old will be very expensive to operate

- The more servers you have, the more you’ll want to practice a rolling upgrade, where not all servers are upgraded at the same time

- You can have more than two versions of the WebRTC media server running in parallel in your deployment. Especially if you have some really long lived sessions

- You can be impatient if you like. Let sessions drain for an hour. Or two. Or more. And then kill what’s left on the old WebRTC media server

- Media servers might be connected to other types of services – not only WebRTC clients. In such a case, you’ll need to figure out what it means to kill long-lived sessions, and maybe decouple your WebRTC media server into smaller servers
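The “be impatient” knob, together with the monitoring logic that decides when an old server can go, fits in a single decision function run on every monitoring tick. This is a sketch under assumptions: `max_drain_seconds` and the three actions are hypothetical names, and the timestamps are assumed to come from a monotonic clock such as `time.monotonic()`.

```python
from enum import Enum


class DrainAction(Enum):
    KEEP = "keep"                  # still has sessions, patience not exhausted
    DECOMMISSION = "decommission"  # drained naturally, safe to remove
    FORCE_KILL = "force_kill"      # out of patience, cut the remaining sessions


def drain_decision(active_sessions: int, drain_started: float, now: float,
                   max_drain_seconds: float) -> DrainAction:
    """Decide what to do with one draining server on one monitoring tick.

    `drain_started` and `now` are seconds on the same monotonic clock;
    `max_drain_seconds` is how long sessions may run out naturally.
    """
    if active_sessions == 0:
        return DrainAction.DECOMMISSION
    if now - drain_started >= max_drain_seconds:
        return DrainAction.FORCE_KILL
    return DrainAction.KEEP
```

Setting `max_drain_seconds` to infinity (`float("inf")`) gives pure draining with no service interruption; a small value moves you toward option #1.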

Why Do Most Developers Lean Towards Draining First?

Gustavo’s poll garnered only 6 answers, but they somehow feel right. They match what I’ve seen and heard in the discussions I’ve had with many vendors out there.

And the reasons for this are simple:

- There’s no additional development on the client or WebRTC media servers. It is mostly DevOps scripts that need to reroute new incoming traffic and some monitoring logic to decide when to kill an empty old WebRTC media server

- There’s no service disruption. Old sessions keep running until they naturally die. New sessions get the upgraded WebRTC media servers to work on

What’s next?

If you are planning on deploying your own infrastructure for WebRTC (or having it outsourced), you should definitely add the upgrade strategy for that infrastructure into the mix.

This is something I overlooked in my WebRTC Requirements How To – so I just added it into that template.

Need to write requirements for your WebRTC project? Make sure you don’t miss out on the upgrade strategy in your requirements.

Hmm, browser upgrades and signaling should have nothing to do with each other. After all, signaling is not specified in WebRTC.

Silvia,

You make a good point. That said, signaling is intertwined between what goes on in the signaling server and the client software running on the device (be it JS code or native on iOS or Android). What I’ve noticed is that any signaling server for WebRTC that hasn’t been updated in the last 12 months will either not work or malfunction in obvious scenarios – probably due to changes in browser behavior.

signaling servers should be pass-through, unless you are a telco engineer… wait… brb 😉

I agree, Tsahi. The movement in browser WebRTC behaviour impacts timing assumptions in the signalling stack, which can’t be taken for granted. This is amplified by high availability signalling architecture.

Interestingly, recent work on supporting Safari shows how adding more browsers to the mix complicates connection timing assumptions, leading to even more signalling upgrades.

Yap. Every new browser that joins adds headaches until things settle down.

Some time in 2018 we will be able to sit and analyze the current state of browsers and their WebRTC support, but at least until then things will stay dynamic and interesting.