WebRTC for M2M? Think backwards.

If you think about it, M2M deals with two things:

- Devices, of various shapes and sizes

- Networking between those devices, with the help of a backend

What should WebRTC’s role be in the Internet of Things? Does it have a place there? Does it make any sense, or is this gut feeling of mine just me getting overexcited about a new toy?

I can’t be sure of the answer, but I think most of us have been looking at WebRTC the wrong way. That is why I took the time to discuss the data channel use cases, and why I am taking a stab at M2M this time.

Here’s what I think:

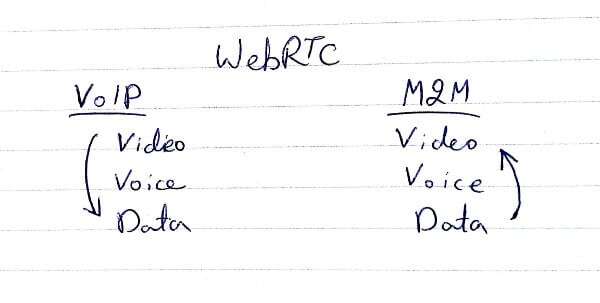

Most of us look at WebRTC and what we see is flashy video (not Flash, just flashy). It has all those complex bells and whistles, and it is so easy to use compared to what we had before that we go and tinker with it, building a video calling app and hosting it somewhere. Only when we get down into the details do we see that it can do voice only as well, and that there’s a data channel somewhere in there. This is VoIP thinking.

If we look at WebRTC through an M2M prism, then first and foremost it provides a data channel, and only then voice and video. That data channel is hard to crack, though, because it doesn’t stand on its own: it requires a web browser.

Back to M2M.

We have devices. We have a network. We have servers. We have a use case to develop.

Backend is easy. We use web tools to build it. Probably a bit of analytics and Big Data would make sense as well, but this is all known territory.

The network is OK. We’ve done that as well, and we know how to do it at web scale if needed.

Devices. We can do devices, but somehow the traditional way of doing them doesn’t look that attractive anymore. You know: taking a proprietary real-time operating system that nobody uses, developing the device drivers on our own, writing code in C (or, god forbid, directly for the DSP) and then dealing with the lack of good IDEs and debugging tools. It just feels so… ’80s. We might as well use punch cards for that part of our project.

But there’s a better way. And it fits with Atwood’s Law from 2007:

> any application that can be written in JavaScript, will eventually be written in JavaScript.

We can already write the backend in JavaScript today, using Node.js.

Why not use JavaScript on embedded devices as well, with or without a visual web browser?

Here’s my suggestion (and I heard it elsewhere as well):

- Have JavaScript run standalone on devices, similar to how Node.js runs it on servers

- Handle networking there using the same paradigms browsers offer today: XHR, SSE and WebSockets

- Add WebRTC to it. At the very least have the data channel implemented

- Add bindings and code to deal with voice and video on the device via WebRTC only if needed
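To make the stack above a bit more concrete, here is a minimal TypeScript sketch of device-side code. It assumes a hypothetical device runtime that exposes browser-style `WebSocket` and `RTCDataChannel` objects; the post does not name such a runtime. `Transport`, `DeviceLink`, `fromDataChannel` and `fromWebSocket` are illustrative names, not part of any real API. The policy of preferring a direct peer-to-peer data channel and falling back to the backend WebSocket is my own illustration of how the data channel could reduce reliance on backend servers, not a design taken from the post. In-memory fakes stand in for the real objects so the sketch runs anywhere.

```typescript
// Hypothetical common shape for anything a device can push messages through.
interface Transport {
  readonly name: string;
  isOpen(): boolean;
  send(payload: string): void;
}

interface SensorReading {
  deviceId: string;
  metric: string;
  value: number;
  timestamp: number;
}

// Hypothetical helper: tries transports in order, so a direct peer
// data channel (listed first) is preferred over the backend WebSocket.
class DeviceLink {
  private readonly transports: Transport[];

  constructor(transports: Transport[]) {
    this.transports = transports;
  }

  // Sends the reading over the first open transport and returns its name.
  publish(reading: SensorReading): string {
    const payload = JSON.stringify(reading);
    for (const t of this.transports) {
      if (t.isOpen()) {
        t.send(payload);
        return t.name;
      }
    }
    throw new Error("no open transport");
  }
}

// Adapter for an RTCDataChannel-like object (its readyState is a string).
function fromDataChannel(dc: { readyState: string; send(data: string): void }): Transport {
  return { name: "datachannel", isOpen: () => dc.readyState === "open", send: (p) => dc.send(p) };
}

// Adapter for a WebSocket-like object (readyState 1 is OPEN).
function fromWebSocket(ws: { readyState: number; send(data: string): void }): Transport {
  return { name: "websocket", isOpen: () => ws.readyState === 1, send: (p) => ws.send(p) };
}

// In-memory stand-ins so the sketch runs outside a browser.
const sentViaPeer: string[] = [];
const sentViaBackend: string[] = [];
const fakeDataChannel = { readyState: "connecting", send: (d: string) => { sentViaPeer.push(d); } };
const fakeWebSocket = { readyState: 1, send: (d: string) => { sentViaBackend.push(d); } };

const link = new DeviceLink([fromDataChannel(fakeDataChannel), fromWebSocket(fakeWebSocket)]);
const reading: SensorReading = { deviceId: "thermo-1", metric: "celsius", value: 21.5, timestamp: 0 };

const firstRoute = link.publish(reading); // peer channel still connecting, so "websocket"
fakeDataChannel.readyState = "open";
const secondRoute = link.publish(reading); // peer channel now open, so "datachannel"
console.log(firstRoute, secondRoute);
```

Once the peer-to-peer channel is up, readings bypass the server entirely. The backend remains a fallback, and is still needed for signaling when the data channel is set up.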

What will happen?

It will make development a lot easier.

It will create a thriving developer ecosystem, in which tools for the Internet of Things start appearing from non-commercial sources.

It will make Chris Matthieu (Twelephone and Skynet) happy.

If we really want to open up the Internet of Things and turn it into the Internet of Everything, then JavaScript is probably the route to go. And within that, WebRTC’s data channel enables so much more, by reducing our reliance on backend servers, that we just can’t pass up the opportunity.

Now we only need someone to bring this vision to life.

Node.js and the Internet of Things: http://thethingsystem.com/dev/supported-things.html