It is on the viewer side.

Live broadcast is all the rage when it comes to WebRTC. In 2015 it grew 3-fold. It is a hard nut to crack, but there are solutions out there already – including the new Spotlight service from TokBox.

WebRTC Live Broadcast Today

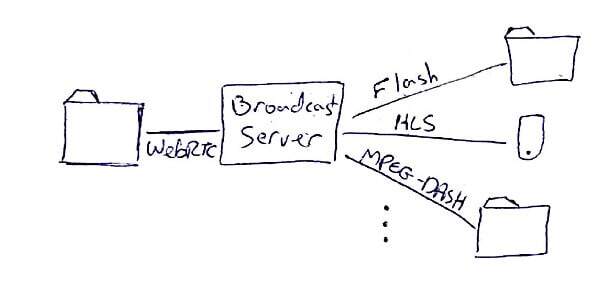

If you look closely, most of the deployments today for live broadcast using WebRTC look somewhat like the following diagram:

What happens today is that WebRTC is used only for the presenter: the initial video is acquired using WebRTC and sent straight to the broadcast server. There, the media gets transcoded into the dialects used for broadcasting: Flash, HLS and/or MPEG-DASH.

The problem is that these broadcast dialects add latency (check this explanation about HLS to understand why).

With our infatuation with real time and the drive to move every type of workload and use case toward real time, it’s no wonder that the above architecture isn’t good enough. From my discussions, many entrepreneurs would love to see this obstacle removed, with live broadcasts having a latency of mere seconds (if not less).

The current approaches won’t work because they rely heavily on buffering content before playing it, and that buffering adds to the latency.
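To put numbers on the buffering argument, here is a back-of-the-envelope model in Python. It assumes latency is roughly the number of segments the player holds back multiplied by the segment duration, plus a fixed pipeline allowance. The default of three segments follows RFC 8216 (a client SHOULD NOT start playback less than three target durations from the end of the playlist); the one-second overhead is a placeholder, not a measurement.

```python
"""Back-of-the-envelope HLS latency model (illustrative, not a measurement)."""


def hls_latency_seconds(segment_duration: float,
                        buffered_segments: int = 3,
                        pipeline_overhead: float = 1.0) -> float:
    """Estimate how far behind real time an HLS viewer plays.

    buffered_segments: how many segments the player stays behind the live
        edge; 3 mirrors the RFC 8216 "three target durations" rule.
    pipeline_overhead: assumed seconds for encoding, packaging and CDN
        delivery; a placeholder value.
    """
    return buffered_segments * segment_duration + pipeline_overhead


def max_segment_for_target(target_latency: float,
                           buffered_segments: int = 3,
                           pipeline_overhead: float = 1.0) -> float:
    """Longest segment duration that still meets a latency target."""
    return (target_latency - pipeline_overhead) / buffered_segments


if __name__ == "__main__":
    for seg in (10.0, 6.0, 2.0):
        print(f"{seg:>4.1f} s segments -> ~{hls_latency_seconds(seg):.1f} s behind live")
    print(f"a 2 s target needs segments of ~{max_segment_for_target(2.0):.2f} s")
```

Under these assumptions, 6-second segments land near the 20-second figure mentioned later in the article, and reaching 2 seconds would need segments of about a third of a second. Since segments normally begin on a keyframe, that also means very frequent keyframes, which is why tuning the buffer alone doesn't get you there.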

WebRTC Live Broadcast Tomorrow

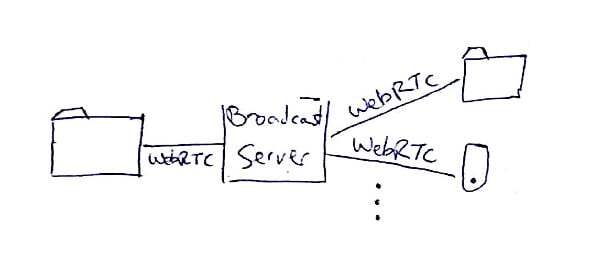

This is why a new architecture is needed – one where low latency and real time are imperatives and not an afterthought.

Since standardization and deployment take time, the best alternative out there today is to use WebRTC, which is already available in most browsers.

The main difference here? The broadcast server needs to be able to send WebRTC at scale, not only receive it on its ingress.

To do this, we need a server-side WebRTC media implementation that is totally different from the alternatives on the market today (both open source and commercial).

What happens today is that server-side WebRTC implementations are designed to work almost back-to-back: they simulate a full WebRTC client per connection. That’s all well and good, but it can’t scale to hundreds, thousands or millions of connections.

To get there, the server will first need to break its dependency on the presenter: it will need to be able to process media by itself, and do so in a way that is optimized for large-scale sessions.

This, in turn, means rethinking how a WebRTC media stack is architected and built. Someone will need to rebuild WebRTC from the ground up with this single use case in mind.
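To make the contrast with "a full WebRTC client per connection" concrete, here is a minimal Python sketch, assuming an SFU-style forwarder. Each packet from the presenter is handled once, and each viewer keeps only a few integers: whether it is waiting for a keyframe and an offset used to rewrite RTP sequence numbers. The `RtpPacket.keyframe` flag and the `FanOut`/`Viewer` names are hypothetical; this illustrates splitting shared work from per-viewer work, not the ground-up design the article calls for.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class RtpPacket:
    seq: int        # 16-bit RTP sequence number as sent by the presenter
    keyframe: bool  # hypothetical flag: this packet starts a keyframe
    payload: bytes  # forwarded untouched: no decoding or re-encoding


@dataclass
class Viewer:
    """Per-viewer state is a few integers, not a full WebRTC client."""
    name: str
    waiting_for_keyframe: bool = True  # a new viewer can't decode mid-GOP
    seq_offset: int = 0
    last_out_seq: int = -1             # -1: nothing sent to this viewer yet
    sent: list = field(default_factory=list)

    def pause(self) -> None:
        # e.g. this viewer's link is congested: drop until the next keyframe
        self.waiting_for_keyframe = True


class FanOut:
    """Handle each ingest packet once, then forward it to every viewer."""

    def __init__(self) -> None:
        self.viewers: list[Viewer] = []

    def add_viewer(self, viewer: Viewer) -> None:
        self.viewers.append(viewer)

    def on_ingest(self, pkt: RtpPacket) -> None:
        # Shared work (parsing, keyframe detection) happens once, here,
        # no matter how many viewers are attached.
        for v in self.viewers:
            if v.waiting_for_keyframe:
                if not pkt.keyframe:
                    continue
                v.waiting_for_keyframe = False
                # Choose the offset so this keyframe continues the viewer's own
                # sequence; skipped packets then don't look like losses (no NACKs).
                # Real stacks start from a random value (RFC 3550); 0 keeps it readable.
                v.seq_offset = (pkt.seq - (v.last_out_seq + 1)) & 0xFFFF
            out_seq = (pkt.seq - v.seq_offset) & 0xFFFF  # 16-bit wraparound
            v.last_out_seq = out_seq
            v.sent.append(out_seq)  # stands in for encrypting and sending pkt.payload
```

A real server still needs per-viewer DTLS-SRTP keys, RTCP handling and congestion control, which this sketch leaves out; it only shows that the media path itself doesn't have to be duplicated per connection.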

I am leaving a lot of the details out of this article for two reasons:

- While I am certain it can be done, I don’t have the whole picture in my mind at the moment

- I have a different purpose here, which we are now getting to

A Skillset Issue

To build such a thing, one cannot just say he wants low latency broadcast capabilities, especially not if he is new to video processing and WebRTC.

The only teams that can get such a thing built are ones who have experience with video streaming, video conferencing and WebRTC – that’s three different domains of expertise. While such people exist, they are scarce.

Is it worth it?

Optimizing down from 20 seconds latency to 2 seconds latency. That’s what we’re talking about.

Is investing in it worth the effort? I don’t have a good answer for this one.


As I see it, the main problem with all this is that low latency (or at least WebRTC) means UDP, and UDP means losses. When you are peer-to-peer you have PLI and FIR, but 1000 people cannot each ask for a full frame every time they lose the picture. So you have to generate keyframes on a timer, which increases bandwidth and the chance of losing keyframe packets. That leaves only RTX to deal with losses, which also adds latency and means striking a balance between RTX and jitter buffer sizes.
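The keyframe problem described above can be sketched in Python. Assuming a server that sits between many viewers and one encoder, it coalesces viewer PLIs so the encoder gets at most one keyframe request per `min_interval`, and it also forces a keyframe every `periodic_interval` seconds. The class name and both interval values are hypothetical.

```python
class KeyframeRequestAggregator:
    """Collapse PLI/FIR requests from many viewers into rare encoder requests."""

    def __init__(self, min_interval: float = 1.0, periodic_interval: float = 5.0):
        self.min_interval = min_interval            # illustrative value, seconds
        self.periodic_interval = periodic_interval  # illustrative value, seconds
        self.last_keyframe_at = float("-inf")
        self.pending = False  # a viewer asked for a keyframe we haven't requested yet

    def on_viewer_pli(self, now: float) -> bool:
        """Record one viewer's PLI; return True if the encoder should be asked now."""
        self.pending = True
        return self._maybe_request(now)

    def on_tick(self, now: float) -> bool:
        """Call periodically: flushes deferred PLIs and drives the keyframe timer."""
        if now - self.last_keyframe_at >= self.periodic_interval:
            self.pending = True
        return self._maybe_request(now)

    def _maybe_request(self, now: float) -> bool:
        if self.pending and now - self.last_keyframe_at >= self.min_interval:
            self.pending = False
            self.last_keyframe_at = now
            return True
        return False


if __name__ == "__main__":
    agg = KeyframeRequestAggregator()
    # 1000 viewers losing the picture within half a second
    requests = sum(agg.on_viewer_pli(i * 0.0005) for i in range(1000))
    print("encoder keyframe requests:", requests)
```

The two intervals are the trade-off the comment describes: a shorter periodic interval lets late joiners and lossy viewers recover sooner, but costs bandwidth, since keyframes are much larger than delta frames.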

Igor – thanks.

That’s part of the story. There’s also FEC to contend with, the ability to use VP9, the fact that broadcast means multiple resolutions and quality levels for different device types, etc.

No one said it was easy.
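The "multiple resolutions and quality levels" point usually translates into simulcast (or the spatial layers of VP9 SVC): the presenter sends several encodings and the server forwards one per viewer. Below is a minimal Python sketch of that per-viewer choice, assuming simulcast; the ladder values and the 0.85 headroom factor are illustrative assumptions, not recommendations.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Layer:
    name: str
    height: int        # vertical resolution in pixels
    bitrate_kbps: int


# Hypothetical three-layer simulcast ladder; real ladders depend on the encoder.
LADDER = (
    Layer("low", 180, 150),
    Layer("mid", 360, 500),
    Layer("high", 720, 1500),
)


def pick_layer(estimated_kbps: float, max_height: int,
               ladder=LADDER, headroom: float = 0.85) -> Layer:
    """Choose the best layer that fits this viewer's bandwidth and screen.

    headroom keeps part of the bandwidth estimate in reserve (for RTX, FEC,
    audio); 0.85 is an illustrative value.
    """
    budget = estimated_kbps * headroom
    fitting = [layer for layer in ladder
               if layer.bitrate_kbps <= budget and layer.height <= max_height]
    if not fitting:
        # Nothing fits: send the cheapest layer rather than nothing at all.
        return min(ladder, key=lambda layer: layer.bitrate_kbps)
    return max(fitting, key=lambda layer: layer.bitrate_kbps)
```

Because the choice is re-evaluated per viewer as bandwidth estimates change, a layer switch is one more place where the forwarder has to wait for a keyframe on the new layer.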

Did you miss https://code.facebook.com/posts/1653074404941839/under-the-hood-broadcasting-live-video-to-millions/ via Chad + Chris Wendt? RTMP is still not dead, though I was surprised that this shares nothing with Messenger. But I do not understand Facebook internals any more than you do 😉

I think you are on the wrong track when it comes to server-side requirements.

Fippo – thanks for the link. I did miss it, and it was well worth the read!

In fact, you can have a scalable low latency video streaming server with the Flash RTMP protocol (FMS, Red5, Wowza…), but Flash is dead…

So we need someone to rebuild such a server with a WebRTC endpoint.

The Red5 team made a WebRTC connector for their commercial solution, Red5 Pro, but I have no idea about its scalability.

For our part, we’re building a solution based on Kurento, but scalability is still an issue.

We actually solve the scale thing with Red5 Pro using a clustering model: https://www.red5pro.com/docs/server/clusters/
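The clustering idea can be reduced to a sizing calculation. This is not Red5 Pro’s implementation (its documentation is at the link above); it is a generic origin/edge tree in Python, assuming each server (origin, relay or edge) can feed a fixed `capacity` of downstream connections, viewers or other servers alike.

```python
import math


def cluster_plan(viewers: int, capacity: int) -> tuple[int, int]:
    """Return (tiers_below_origin, edge_servers) for a simple origin/edge tree.

    Assumes every server can feed `capacity` downstream connections,
    whether those are viewers or other servers.
    """
    if viewers <= capacity:
        return 0, 0  # the origin can serve every viewer directly
    edges = math.ceil(viewers / capacity)  # edge servers facing the viewers
    tiers = 1
    reachable = capacity  # servers the origin can feed directly
    while reachable < edges:
        reachable *= capacity  # each extra relay tier multiplies reach
        tiers += 1
    return tiers, edges


if __name__ == "__main__":
    for n in (1_000, 100_000, 10_000_000):
        print(n, "viewers ->", cluster_plan(n, capacity=2000))
```

Each extra tier multiplies reach by `capacity`, which is why a clustering model scales, but every tier is also one more network hop of latency between the presenter and the viewer.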

We have an idea of how this should be implemented as a service, and will most likely be able to release something for streaming later this year.

Excellent post Tsahi! My thoughts on the subject: https://blog.red5pro.com/webrtc-one-to-many-broadcasting-why-latency-is-important-what-the-future-holds/

A WebRTC player with a WebRTC stream sounds reasonable, but it is still missing a big part of the architecture/design a streaming business needs, such as scaling up traffic and a way/codec to handle the media that brings flexibility and low latency…

Let’s wait and see… BTW, making use of the data channel may bring new ideas and approaches.

Why not just use WebRTC-Scalable-Broadcast (https://github.com/muaz-khan/WebRTC-Scalable-Broadcast)?

You can, and that’s what companies such as Peer5 and Streamroot are doing.
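Relaying through viewers trades server bandwidth for extra hops: each level in the peer tree adds another receive-and-forward delay, and a viewer who leaves cuts off everyone below it. Here is a minimal Python sketch, assuming each peer (including the presenter) can upload to a fixed number of others and new viewers attach to the shallowest peer with a free slot. This is not how WebRTC-Scalable-Broadcast, Peer5 or Streamroot actually assign peers; the `RelayTree` name is hypothetical.

```python
from collections import deque


class RelayTree:
    """Attach each new viewer to the shallowest peer with a free upload slot."""

    def __init__(self, slots_per_peer: int):
        self.slots = slots_per_peer  # assumed upload capacity, in streams per peer
        self.children: dict[str, list[str]] = {"presenter": []}
        self.depth: dict[str, int] = {"presenter": 0}

    def join(self, viewer: str) -> str:
        """Add a viewer and return the peer it will receive the stream from."""
        queue = deque(["presenter"])
        while queue:  # breadth-first search finds the shallowest free peer
            peer = queue.popleft()
            if len(self.children[peer]) < self.slots:
                self.children[peer].append(viewer)
                self.children[viewer] = []
                self.depth[viewer] = self.depth[peer] + 1
                return peer
            queue.extend(self.children[peer])
        # With slots_per_peer >= 1 some leaf always has room, so this only
        # happens when slots_per_peer == 0.
        raise RuntimeError("no peer has a free upload slot")
```

With two slots per peer, the seventh viewer already sits three relay hops away from the presenter; depth grows roughly logarithmically with the audience, and so does the added latency.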

Hi Tsahi! Are there any recent updates regarding live broadcasting using WebRTC? I am about to start a project where I have to build a live video stream broadcast. Any guides will be appreciated.

There’s nothing in the WebRTC code itself, just in the ecosystem and solutions around it. You can base a broadcast service around an SFU.