See also our new article on WebRTC WHIP & WHEP.

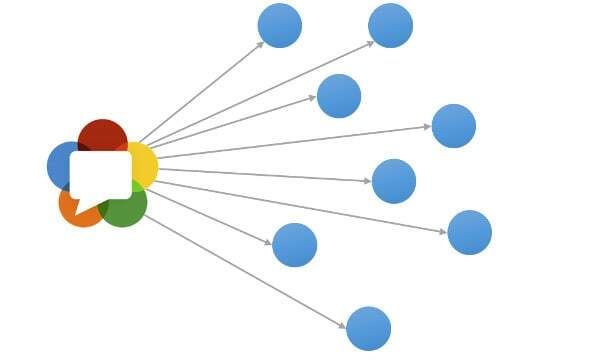

As I work my way through analyzing the various use case categories for WebRTC, I decided to check what was important in 2015. The “winner” in attention was a relatively new category of WebRTC broadcast – one in which WebRTC is used to send a video stream to many viewers. These viewers can be passive, or they can interact with the creator of the broadcast.

Up until 2014, I had 4 such vendors on my list. 2015 brought 15 new vendors to it – call it “the fastest growing category”. And this is predominantly a US phenomenon – only 3 of the new vendors aren’t US-based startups.

Periscope and Meerkat are partly to “blame” here. The noise they made in the market stirred others to join the fray – especially if you consider many of them are based in San Francisco as well.

TokBox just introduced Spotlight – their own live broadcast APIs – for those who need them. At its heart, Spotlight enables the types of interactions we see in the market today for these kinds of solutions:

- Ability to produce video by using WebRTC – either from a browser or a mobile app

- Ability to view the video content as a passive participant – usually through a CDN, using Flash, HLS or MPEG-DASH

- Ability to “join” the producer, creating a 1:1 video chat or a video conference that gets broadcast to others

Here are some of my thoughts on this new emerging category:

- Most of the focus today is on using WebRTC broadcast on the producer’s side. The reasons are clear:

- Flash is dying. HLS and MPEG-DASH are replacing it on the viewer side, but what is going to replace it on the producer side? Some go for specialized broadcasting applications, but WebRTC seems like a good alternative for many

- This is where vendors have more control – they can force producers to use a certain browser, while forcing that on viewers is much harder

- WebRTC plays nicely in both browsers and mobile apps. No other technology can achieve that today

- The producer side is also where most constraints/requirements come from today:

- You may want to “pull in” a viewer for an interview during a session

- Or have a panel of possible speakers

- You may wish to split the producer from the “actor”, facilitating larger crews

- All these fit well with the capabilities that WebRTC brings to the table versus the proprietary alternatives out there

- H.264 is the predominant requirement on the viewer side at the moment. VP9 is interesting. This means:

- Transcoding is necessary in the backend before sending the video to viewers, since Chrome’s WebRTC implementation sends VP8 rather than H.264

- H.264 support in Chrome can improve things for the vendors, as it may remove the need to transcode

- There’s a race towards zero latency. Vendors are looking to reduce the 10-60 seconds of delay inherent in video streaming technologies to a second or less (not sure why)

- This would require replacing the viewer end of the architecture with a WebRTC-based one

- It will also necessitate someone building a backend optimized for this use case – something that hasn’t been researched enough to date

- Peer-assisted delivery vendors such as Peer5 and Streamroot are another aspect. These kinds of technologies sit “on top” of a video CDN and use WebRTC’s data channel to improve performance

- I’ve started noticing a few audio-only vendors joining the game as well. This will grow as a trend. The audio-based solutions tend to be slightly different from the video ones, and the technologies they employ are radically different. The technologies and architectures may converge, but not in 2016
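The codec mismatch behind the transcoding point can be read straight out of the SDP. Below is a minimal TypeScript sketch, assuming the backend has the SDP answer for the producer’s WebRTC connection. The helper names `videoCodecsInSdp` and `needsTranscoding` are hypothetical, and treating the first codec listed on the answer’s `m=video` line as the one actually sent is a simplification.

```typescript
// Hypothetical helpers, not part of any WebRTC library. They read the video
// section of an SDP answer and report whether the producer's codec matches
// what the viewer side (e.g. an HLS/MPEG-DASH packager) requires.

// rtpmap entries that are retransmission/FEC wrappers, not real video codecs.
const AUXILIARY = new Set(["RTX", "RED", "ULPFEC", "FLEXFEC-03"]);

/** Video codec names from the first m=video section, in m-line preference order. */
function videoCodecsInSdp(sdp: string): string[] {
  const lines = sdp.split(/\r?\n/);
  const start = lines.findIndex((l) => l.startsWith("m=video "));
  if (start === -1) return [];

  // m=video <port> <proto> <payload types...>, listed in preference order.
  const payloadTypes = lines[start].trim().split(" ").slice(3);

  // Map payload type -> encoding name, using a=rtpmap lines of this section only.
  const names = new Map<string, string>();
  for (let i = start + 1; i < lines.length && !lines[i].startsWith("m="); i++) {
    const m = /^a=rtpmap:(\d+) ([^/]+)\//.exec(lines[i]);
    if (m) names.set(m[1], m[2].toUpperCase());
  }

  return payloadTypes
    .map((pt) => names.get(pt))
    .filter((n): n is string => n !== undefined && !AUXILIARY.has(n));
}

/**
 * True when the codec the producer sends differs from what viewers need.
 * With no video section at all, we conservatively assume transcoding.
 */
function needsTranscoding(answerSdp: string, viewerCodec = "H264"): boolean {
  const sent = videoCodecsInSdp(answerSdp)[0];
  return sent !== viewerCodec.toUpperCase();
}
```

An answer that lists VP8 first on its `m=video` line returns `true`, meaning the backend has to decode and re-encode to H.264 before packaging for viewers. If the producer’s browser negotiates H.264 instead, the stream could in principle be repackaged without re-encoding.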
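A rough way to see where the 10-60 seconds of delay come from, assuming a segmented HTTP protocol such as HLS, where the player follows the specification’s advice not to start playback less than three target durations from the end of the playlist. Here $T_{\text{seg}}$ is the segment (target) duration, $\delta$ is the time elapsed since the newest segment was published, and $T_{\text{enc}}$ and $T_{\text{net}}$ lump together encoding and CDN delivery. This is a simplified model, not a measurement.

```latex
L_{\text{HLS}} \approx 3\,T_{\text{seg}} + \delta + T_{\text{enc}} + T_{\text{net}},
\qquad 0 \le \delta \le T_{\text{seg}}

T_{\text{seg}} = 10\ \text{s}
\;\Rightarrow\;
30\ \text{s} \le L_{\text{HLS}} - (T_{\text{enc}} + T_{\text{net}}) \le 40\ \text{s}
```

Shorter segments lower the floor, but the latency stays a multiple of $T_{\text{seg}}$, which is why getting to a second or less points toward WebRTC on the viewer side rather than tuning segment sizes.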
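The peer-assisted idea boils down to a scheduling decision: try to get each video segment from another viewer first, and fall back to the CDN only when no peer has it. The TypeScript sketch below models just that decision, assuming segment names and peer inventories are already known. `Peer`, `planDownloads` and the offload ratio are hypothetical, there is no networking (a real system would move the bytes over a WebRTC data channel), and it does not reflect any vendor’s actual implementation.

```typescript
// Hypothetical model of peer-assisted delivery on top of a CDN.

interface Peer {
  id: string;
  segments: Set<string>; // segment names this peer already has cached
}

interface Assignment {
  segment: string;
  peerId: string | null; // null means "fetch this segment from the CDN"
}

/** Assign each segment to the first peer holding it, else to the CDN. */
function planDownloads(
  segments: string[],
  peers: Peer[],
): { plan: Assignment[]; offload: number } {
  const plan: Assignment[] = segments.map((segment) => {
    const holder = peers.find((p) => p.segments.has(segment));
    return { segment, peerId: holder ? holder.id : null };
  });

  // Fraction of segments served by peers instead of the CDN.
  const fromPeers = plan.filter((a) => a.peerId !== null).length;
  const offload = segments.length === 0 ? 0 : fromPeers / segments.length;
  return { plan, offload };
}
```

For example, if peer `a` caches `s1` and `s2` and peer `b` caches `s3`, a request for `s1`, `s3`, `s4`, `s5` is served by `a`, `b`, the CDN and the CDN, an offload of 0.5. The CDN remains the source of truth, which is why these technologies can sit “on top” of an existing video CDN.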

2016 will be a continuation of what we saw during 2015: more companies trying to define what live WebRTC broadcast looks like and aiming for different types of architectures to support it. In most cases, these architectures will incorporate WebRTC.

Do I still have to allow camera/mic access in the browser to receive one-way broadcasts over WebRTC? Because that’d be a no-go for live streaming (radio).

As far as I know, Markus, there is no such need. You can receive video without allowing access to your own camera.

That said, in broadcast cases, you won’t be using WebRTC (at least not now) – you’ll use Flash, HLS or MPEG-DASH.

OK, got it. I currently use Icecast2 (that’s plain HTTP “download” streaming) with Opus/WebM to the audio element for in-browser playback at a college radio station. Works fine, but afaik it is not really considered streaming by the browser.

I’m still planning to look into implementing HLS+DASH streaming with Nginx and https://github.com/arut/nginx-rtmp-module

I have used that module for RTMP to Flash once with great success.

Regarding contribution using WebRTC, we’re looking into that as well. In practice, as the studio computers have multiple audio channels for different uses, afaik we’re waiting for better support for selecting the media device and channel to use, which is still a problem in shipped browsers. Stereo is mandatory (and works in Chrome), but I’m still looking for SIP or WebRTC endpoint software supporting stereo that can be put on Linux servers so we can build headless codec systems.

Add Wowza to the list. Charlie Good, Wowza CTO & co-founder, announced WebRTC support at NAB (http://info.wowza.com/nab2016 at around 1:50) in private preview. Get hold of Wowza sales (sales [at] wowza.com) if interested. This handles both producer and viewer sides and will transmux and transcode to/from WebRTC to other protocols and codecs. We are seeing a lot of low latency use cases where viewer-side WebRTC is very interesting. Having a Flash-less option for viewing is a big plus.

Disclaimer–I am a Wowza guy.

Thanks for the tip, David. I’ve been wondering for quite a while when Wowza would add WebRTC support. Great to see this one coming.