Is it time to change the governance of WebRTC in order to keep it growing and flourishing?

WebRTC started life in 2011 or 2012, depending on when you start counting.

That’s around 13 years now. Time to put things on the table – we might need a change in governance. A different way of thinking about WebRTC.

The concept of WebRTC unbundling

I published the above on LinkedIn last month.

It was a culmination of thoughts I’ve been having for the past several years.

I first made that distinction in 2020, when I coined the term WebRTC unbundling.

The notion was that WebRTC is being broken down into smaller pieces and developers are given more leeway and control over what WebRTC does (=a good thing). The result of all this is the ability to differentiate further, but also that the baseline of what WebRTC is falls further behind what good media quality means.

There’s the popular open source implementation for WebRTC known as libwebrtc. It is maintained and governed by Google. When Google can enact its strategy by implementing their technologies and IP outside and around libwebrtc instead of inside libwebrtc – why wouldn’t they?

Google runs a business. They have commercial objectives. Building its differentiators into libwebrtc, where competitors can use them to outcompete Google, would be a poor decision to make. Giving competitors using proprietary technology the source code of libwebrtc to copy from and improve upon without contributing back isn’t a smart move either.

This means cutting edge technologies and research are now developed outside of libwebrtc (and WebRTC) as much as possible. And the unbundling of WebRTC that started some 4 years ago is now starting to show.

Before we dive into the details

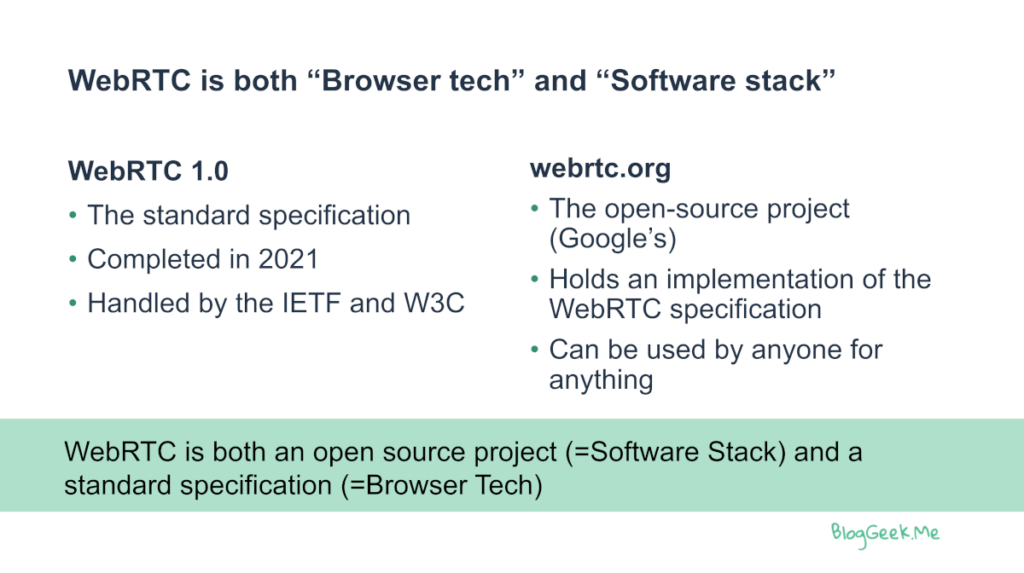

Something I always explain to people new to WebRTC is that WebRTC isn’t a single thing. When someone refers to it, he either thinks of WebRTC as a standard or WebRTC as an open source project:

The above is one of the first slides I’ve ever created about WebRTC.

WebRTC is an open standard. It is being specified by the IETF and W3C. The IETF deals with the network side while the W3C is all about the browser interface (JavaScript APIs).

WebRTC is also viewed as an open source project. That’s actually libwebrtc… the most common and popular implementation of WebRTC which has been created and is maintained by Google.

So remember – when people say WebRTC they can refer to it as either a standard or a package or both at the same time.

What we will do in this article from here on, is jump between these two definitions and see where we are with them today. We will start with the libwebrtc open source library.

The power and importance of libwebrtc

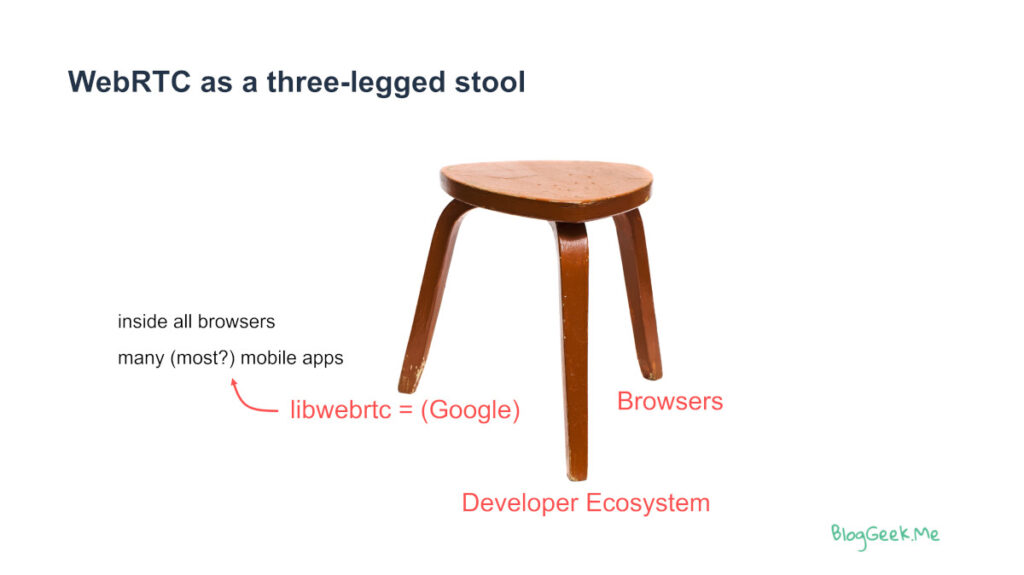

Here’s what I shared in my RTC@Scale 2024 session:

In WebRTC, libwebrtc is the most important library. There are others, but this is by far the most important. Why?

- It is integrated and used by ALL modern browsers (Chrome, Edge, Firefox and Safari)

- So when you interact with any browser in your WebRTC application, you end up working against libwebrtc

- Many mobile applications decided to use libwebrtc natively inside the app. Why? Because it is good enough

The end result is that… well… It is the most important WebRTC library out there.

–

Before libwebrtc, what we had was lame open source libraries that implemented media engines. All good options were commercial ones. In fact, libwebrtc (and WebRTC) started with Google acquiring a company called GIPS, which had a great commercial media engine that it licensed to companies. I know because the company I worked at licensed it, and the moment GIPS got acquired, we got a flood of requests and questions about finding an alternative.

What WebRTC did was make media engines a commodity of sorts. A new era where high quality media can be had from open source. This also meant that the commercial media engine market died at the same time.

This new development of pushing innovations and improvements in the media engine pipeline outside of libwebrtc is what is going to take that advantage from open source and libwebrtc away.

More on that, a bit later. But next, why don’t we look at the standardization of WebRTC?

WebRTC standardization efforts

The standardization of WebRTC was split between two different organizations: the W3C and the IETF. They were always semi-aligned.

The IETF was in charge of what goes on in the network: what a WebRTC session looks like on the wire. For WebRTC, it uses stuff that we all considered quite modern in 2012 – light years ago in tech-time. The IETF Working Group working on WebRTC, RTCWEB, concluded its work and closed down.

The W3C was/is in charge of the API layer in the browser. The JavaScript interface, mostly revolving around the RTCPeerConnection. And yes, they are trying to wrap this one up and call it a day.

In many ways, what brought WebRTC to what it is today is the W3C – the part focused on the interface in the browser that developers use. That is because the browser is our window to the internet (and in many ways to the world as well). And this window includes the ability to use WebRTC through the APIs specified by the W3C.

The catch here is that the standardization work in the W3C for WebRTC is done almost solely by the browser vendors themselves. There aren’t any (or not enough) web developers sitting at the table. The ones who need and end up using the WebRTC APIs have no real voice in the WebRTC spec itself. The cooks in the kitchen are far removed from the restaurant diners who need to enjoy their dish.

And meanwhile, the cooks have different opinions and directions as well:

- Chrome protects its interests, focusing mainly on Google Meet’s requirements. This is what drives many of the contributions Google has been making to the W3C on the spec

- The rest? Mostly trying to block any forward movement so they won’t have to add changes to their own browser implementation. This is especially true for Safari and Firefox

So what do we end up with?

- Google, trying to add things it needs to the WebRTC specification to solve its product needs

- Other browser vendors, trying to delay Google a bit

- And developers who aren’t part of the game at all and are happy with the leftovers from what Google needs

Vendors differentiating outside of (lib)WebRTC

The whole WebRTC ecosystem is enjoying the work of Google in libWebRTC. They do so in various ways:

- Directly by taking libWebRTC codebase, making it their own and compiling it into native applications

- Indirectly by having WebRTC run inside web browsers, and figuring out any bugs and issues they bump into

- By carving bits and pieces of it to use in their own app (like tearing the echo canceller or other algorithms from libWebRTC and using it elsewhere)

The first alternative is the most interesting one here.

When vendors do that, they usually end up forking the original codebase and modifying bits and pieces of it to fit their own needs. These might be minor bug fixes for edge cases or they may be full-blown optimizations (like what Meta has done with their new MLow codec and Beryl echo cancellation algorithm – there were other areas as well. You’ll find them in the RTC@Scale event summary).

Video API vendors are no different. They usually take libWebRTC and compile it as part of their own mobile SDKs. Again, with likely changes in the code. They also get to see and work with a multitude of customers, each with its own unique requirements. In a way, they see a LOT of the market. Having these insights and understanding is great. Passing it to the libWebRTC team can be even better. These Video API vendors can be a great aggregator of customer insights…

Then there’s the fact that not many end up contributing back what they’ve done to libWebRTC. And even that comes with a whole set of reasons why:

1. Assuming (rightly or wrongly) that these changes made are unique, proprietary, a competitive advantage – you name it

2. Being afraid of the legal implications of doing so (exposure or whatever)

3. Too much fuss to do

If you ask me, (1) is just bad manners – you get something for free from another vendor you might even be competing directly with. The least you can do is to share and contribute back, so that you have a level playing field at that low level of the stack.

Looking at (2) means someone needs to sit and talk to the legal team at your company. On one hand, you make use of open source and on the other you’re not giving back anything. I am not even sure if that reduces your exposure in any way. I am not a lawyer, but I do see the problem in this free lunch approach of the industry.

That third one is a big issue. And it is partly Google’s fault. They don’t make it easy enough to contribute back to the codebase. I can easily understand the reasoning – with billions of Chrome installations, having a no-name developer with a weird GitHub alias from *somewhere* in the globe trying to push a piece of arcane/mundane code into libWebRTC that ends up in Chrome is darn dangerous. But the current situation seems almost insufferable.

I just don’t know who’s to blame here – companies who are just too lazy to contribute back and jump through the hoops required to get there, or Google, for adding more blockers and hoops along their way.

Is standardization moving to the next shiny thing(s)?

There are two separate routes in web browsers that are setting themselves up to displace WebRTC: WebTransport + WebCodecs + WebAssembly, and MoQ (Media over QUIC).

WebTransport + WebCodecs + WebAssembly

This trio is the unbundling of WebRTC. Taking it and breaking it into smaller components that cannot really be implemented by a web application on its own and must come from the browser – these are WebTransport and WebCodecs. And adding the glue to them so that developers can cobble together the missing pieces however they like – that’s the WebAssembly piece.

Vendors are already using WebAssembly to intervene in the WebRTC media processing pipeline to differentiate and improve on the user experience in various ways (noise suppression and background replacement being the main examples).

The next step is to skip WebRTC altogether:

- Use WebTransport for sending media over the network

- WebCodecs are there to encode and decode audio and video efficiently

- WebAssembly for the rest (packet loss, retransmission logic, echo cancellation, etc)
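To give a feel for how much "the rest" really is, here is a minimal sketch of just one small piece you would have to own once WebRTC is out of the picture: splitting an encoded frame into datagram-sized packets and putting it back together on the other side. The 17-byte header layout, the `EncodedFrame` type and the `packetize`/`reassemble` names are all made up for illustration. They are not part of WebTransport, WebCodecs or any standard.

```typescript
// Hypothetical frame container; in a browser the bytes would come from an
// EncodedVideoChunk produced by a WebCodecs VideoEncoder.
interface EncodedFrame {
  frameId: number;     // application-assigned counter (assumption)
  timestampUs: number; // presentation timestamp in microseconds
  isKey: boolean;      // true for keyframes
  data: Uint8Array;    // encoded payload
}

// Made-up header layout (big-endian), NOT a standard wire format:
// 0..3 frameId | 4..5 fragment index | 6..7 fragment count |
// 8..15 timestamp (float64) | 16 flags (bit 0 = keyframe)
const HEADER_BYTES = 17;

// Split one encoded frame into packets that each fit in one datagram.
function packetize(frame: EncodedFrame, maxDatagramBytes: number): Uint8Array[] {
  const maxPayload = maxDatagramBytes - HEADER_BYTES;
  if (maxPayload <= 0) throw new RangeError("datagram too small for header");
  const fragCount = Math.max(1, Math.ceil(frame.data.length / maxPayload));
  if (fragCount > 0xffff) throw new RangeError("frame too large");

  const packets: Uint8Array[] = [];
  for (let i = 0; i < fragCount; i++) {
    const payload = frame.data.subarray(i * maxPayload, (i + 1) * maxPayload);
    const packet = new Uint8Array(HEADER_BYTES + payload.length);
    const view = new DataView(packet.buffer);
    view.setUint32(0, frame.frameId);
    view.setUint16(4, i);
    view.setUint16(6, fragCount);
    view.setFloat64(8, frame.timestampUs);
    view.setUint8(16, frame.isKey ? 1 : 0);
    packet.set(payload, HEADER_BYTES);
    packets.push(packet);
  }
  return packets;
}

// Rebuild a frame from packets that arrived in any order.
// Returns null if a fragment is missing or the packets mix several frames.
function reassemble(packets: Uint8Array[]): EncodedFrame | null {
  const first = packets[0];
  if (first === undefined || first.length < HEADER_BYTES) return null;
  const head = new DataView(first.buffer, first.byteOffset, first.byteLength);
  const frameId = head.getUint32(0);
  const fragCount = head.getUint16(6);
  const timestampUs = head.getFloat64(8);
  const isKey = (head.getUint8(16) & 1) === 1;

  const parts = new Map<number, Uint8Array>();
  for (const p of packets) {
    if (p.length < HEADER_BYTES) return null;
    const view = new DataView(p.buffer, p.byteOffset, p.byteLength);
    if (view.getUint32(0) !== frameId) return null;
    parts.set(view.getUint16(4), p.subarray(HEADER_BYTES));
  }
  if (parts.size !== fragCount) return null; // something was lost on the way

  let total = 0;
  parts.forEach((part) => { total += part.length; });
  const data = new Uint8Array(total);
  let offset = 0;
  for (let i = 0; i < fragCount; i++) {
    const part = parts.get(i);
    if (part === undefined) return null;
    data.set(part, offset);
    offset += part.length;
  }
  return { frameId, timestampUs, isKey, data };
}
```

In a browser, each packet could then be written to a WebTransport datagram writer, and a reassembled frame wrapped back into an `EncodedVideoChunk` for decoding. Everything WebRTC normally does around this (retransmissions, jitter buffering, congestion control) would still be yours to build.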

Don’t believe me? Zoom is doing almost that. They are using the WebRTC data channel as transport, and use WebCodecs and WebAssembly for the rest of it. Switching to WebTransport will likely happen for Zoom once it is ubiquitous across browsers (and offers solid performance compared to the data channel in WebRTC).

A new shiny toy for developers? Definitely.

Where will we see it first? In live streaming. I’ve written about it when discussing WHIP and WHEP, calling it the 3 horsemen.

MoQ (Media over QUIC)

The next big thing is likely to be MoQ.

WebTransport makes use of QUIC as its own transport. Around 5 years ago, I thought that QUIC could be a really good solution to replace WebRTC’s transport altogether. And it now has an official name – MoQ.

MoQ is about doing to RTP what WebTransport does to HTTP.

WebTransport takes QUIC and uses it as a modernized transport for web browsers, replacing HTTP and WebSocket.

MoQ takes QUIC and uses it as modernized media streaming for web browsers, replacing HLS and DASH.

There’s an overview for MoQ on the IETF website. Here’s the best part of it, directly from this post:

It includes a single protocol for sending and receiving high-quality media (including audio, video, and timed metadata, such as closed captions and cue points) in a way that provides ultra low latency for the end user.

If that sounds like WebRTC to you, then you’re almost correct. It is why many are going to see it (and use it) as a WebRTC alternative once it gets standardized and implemented by web browsers.

The main differences?

- The timed metadata piece, which WebRTC has sorely missed for many years

- No P2P capability. Sacrificed for improved NAT traversal (by relying on QUIC and servers)

- The definition of media relays (servers) along with their operation

While this is targeted at live streaming services, this can easily trickle into video conferencing.

Just like WebRTC was designed and built for video conferencing, but later adopted by live streaming services – the opposite can and is likely to happen: MoQ is being designed and built first and foremost for live streaming and it will be adopted and used by video conferencing services as well.

–

Will Google stay interested enough in WebRTC? Maybe it will venture to use WebTransport + WebCodecs + WebAssembly instead. Or just go for MoQ and consolidate its protocols across services (think YouTube + Google Meet). What would happen to WebRTC if that took place?

Who contributes to libwebrtc?

Here’s what I showed at RTC@Scale:

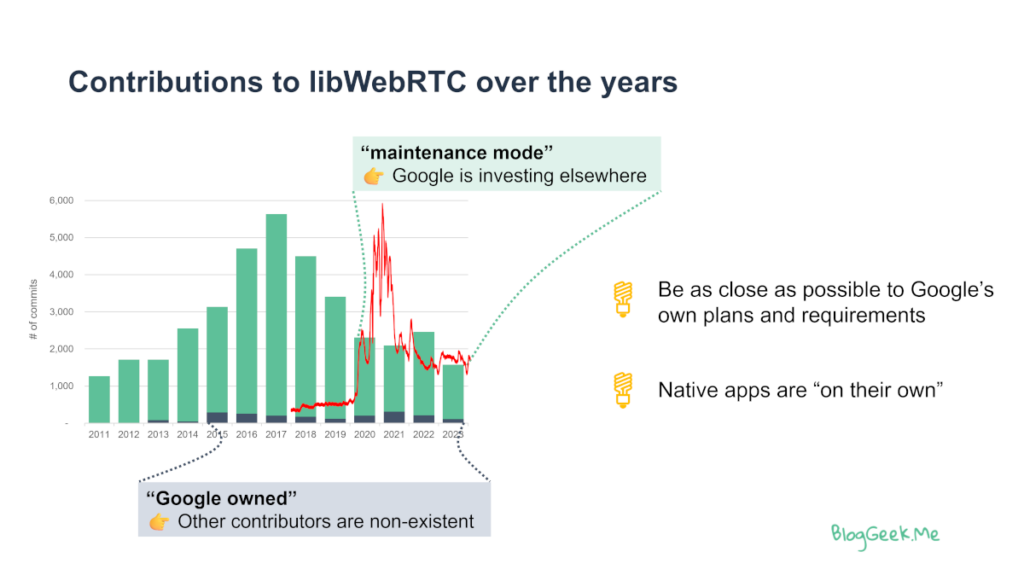

Let’s unpack this a bit.

The bars show the number of commits on a yearly basis. We see the numbers dwindling and winding down just as the use of WebRTC skyrockets (the red line) due to the pandemic. 2024 is likely to be even lower in terms of commits.

The greenish colored bars are Google’s contributions to libwebrtc. The blue? All the rest of the industry who make money using WebRTC – not all of them, mind you – just those that contribute back (there are many others who never contribute back). Google has effectively been subsidizing the rest of the industry, which cannot make Google happy.
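If you want to produce a similar breakdown yourself, here is a rough sketch of one way to do it. It is not necessarily how the chart above was built. It assumes you feed it the output of `git log --date=format:%Y --format="%ad|%ae"` from a libwebrtc checkout, and it treats author emails at `google.com` or `webrtc.org` as "Google". That domain list is my assumption for illustration and will misclassify some contributors. The `tallyCommits` name is hypothetical as well.

```typescript
interface YearTally {
  google: number; // commits attributed to Google (by email domain)
  others: number; // everyone else
}

// Assumption: these author email domains count as "Google".
const GOOGLE_DOMAINS = new Set(["google.com", "webrtc.org"]);

// Input: one "YEAR|author@email" entry per line.
function tallyCommits(gitLogOutput: string): Map<number, YearTally> {
  const tally = new Map<number, YearTally>();
  for (const line of gitLogOutput.split("\n")) {
    const [yearText, email] = line.trim().split("|");
    const year = Number(yearText);
    // Skip blank or malformed lines.
    if (!email || !Number.isInteger(year)) continue;
    const domain = email.slice(email.lastIndexOf("@") + 1).toLowerCase();
    const entry = tally.get(year) ?? { google: 0, others: 0 };
    if (GOOGLE_DOMAINS.has(domain)) entry.google++;
    else entry.others++;
    tally.set(year, entry);
  }
  return tally;
}
```

Email domains are a crude proxy for who employs the author, so treat the result as a trend indicator rather than an exact count.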

Why is that?

Why do so few contributions from outside of Google end up in libwebrtc?

I guess there are two reasons here:

- Google doesn’t make it easy to contribute. In the end, libwebrtc gets embedded into Chrome which goes to billions of users every month with a new release. Not knowing what got integrated (malware or patent-encumbered code for example) is a real issue. Having insecure or not thoroughly tested code is also unacceptable at this scale

- Laziness of those who use libwebrtc but never contribute back

  - In large corporations, the developers need to “fight” with the legal teams to contribute code back (the excuses are usually around liability and protecting IP)

  - Smaller companies can’t be bothered with the friction that Google adds to the process – or just don’t want to spend the needed time

  - Not wanting to make your competitor’s product better by contributing

  - Struggling with the server side parts of WebRTC that in the end are quite tightly coupled with libWebRTC on the client. Google Meet undoubtedly delivers the best experience because the client side is designed for its needs

Many developers the world over enjoy the fruits of libwebrtc, but most aren’t willing to contribute back. This is true for individual engineers as well as companies. Google has even given up on being frustrated with this and resorts to solving its own issues these days. They probably have a very good understanding of the overall usage in Chrome where Google Meet remains the dominant user.

On the one hand, Google isn’t making this easy. On the other hand, companies are too lazy, or too protective of their own forked libwebrtc code, to ever contribute it back.

Can we save libwebrtc & WebRTC?

It is time to rethink WebRTC’s future.

For libwebrtc, we might need some other form of governance. Have more of the bigger vendors pitch in with the engineering effort itself. Meta, Microsoft and a few others who rely heavily on libwebrtc need to step up to that responsibility (the W3C Working Group is not where this kind of discussion happens) while Google needs to let go a bit. I have no clue how things are done in the world of Linux and I am sure libwebrtc isn’t big enough or important enough for that. But I do believe that something can be done here. At the end of the day it will require taking some of the maintenance cost off Google.

Just like Chrome has third party libraries such as libopus and dav1d (AV1 decoder) embedded into it as part of libwebrtc, there is no real reason why libwebrtc itself can’t end up the same way: a third party library that Chrome consumes.

For WebRTC standardization, it is time to ask – is it finished, or are there more things needed?

Do we want to progress and modernize it further or are we happy with it as is?

Should we “migrate” it towards MoQ or a similar approach?

In the W3C, do we need to get more people involved? The web developers themselves maybe? They need to be listened to and made part of the process.

–

Will the above happen? Likely not.