Which signaling should you be using for your WebRTC service? Whatever you feel like.

And if you need assistance with the selection, then read this: Choosing the best signaling protocol for your WebRTC application.

Signaling in WebRTC is an ongoing debate: some say that to be successful, WebRTC must have signaling. Others say that WebRTC needs to be used to… gateway into IMS. And SIP.

And then there are those who say: who cares? I am in this camp. And luckily – I am not alone.

On Ignite Realtime, Dele Olajide published his work on adding multipoint voice chat to their Openfire platform. The interesting tidbit is actually in the comments:

jons: “Is the reason you don’t use Jingle is because of Jingle issues, or because of issues with the websockets plugin dealing with jingle messages?”

Dele: “Nothing wrong with Jingle.

I have used it in OfChat WebRTC implementation to handle signalling of voice calls and establish a session between two or more participants in a chat or groupchat respectively.

What I am saying is that in XMPP chat or groupchat, there is no need to signal an incoming call. Session is already established. All we need to do is exchange media SDP (offer/answer). For that we don’t need Jingle or even SIP. We just pass the SDP as payload in the <message>”

WebRTC is used as a feature within a larger context, and in that sense the signaling protocol depends on that larger context. In this case, it was all about reusing signaling that was already in place.
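To make Dele's point concrete, here is a minimal TypeScript sketch of carrying an SDP offer or answer inside an ordinary XMPP `<message>`, with no Jingle negotiation in front of it. The `<sdp>` child element, its `urn:example:webrtc:sdp` namespace and the helper names are hypothetical (not what OfChat actually sends), and the JIDs are placeholders. One detail worth handling: SDP lines end in CRLF, while XML parsers normalize line endings, so the sketch encodes CR as a character reference.

```typescript
// Hypothetical payload element and namespace, for illustration only;
// they are not taken from Openfire or OfChat.
const SDP_NS = "urn:example:webrtc:sdp";

type SdpKind = "offer" | "answer";

// Escape XML special characters so arbitrary text can sit inside markup.
// "&" is replaced first so the entities added afterwards are not re-escaped.
function escapeXml(text: string): string {
  return text
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&apos;");
}

// SDP requires CRLF line endings, but XML parsers turn a literal CR LF into LF.
// A character reference for CR survives parsing, so the CRLF is preserved.
function encodeSdp(sdp: string): string {
  return escapeXml(sdp).replace(/\r/g, "&#13;");
}

// Wrap an SDP offer or answer in a plain XMPP <message>. The chat session
// already exists, so no separate call-setup signaling is sent first.
function buildSdpMessage(from: string, to: string, kind: SdpKind, sdp: string): string {
  return (
    `<message from="${escapeXml(from)}" to="${escapeXml(to)}" type="chat">` +
    `<sdp xmlns="${SDP_NS}" kind="${kind}">${encodeSdp(sdp)}</sdp>` +
    `</message>`
  );
}

// Usage with placeholder JIDs and a placeholder SDP fragment.
const stanza = buildSdpMessage(
  "alice@example.com/web",
  "bob@example.com/web",
  "offer",
  "v=0\r\no=- 0 0 IN IP4 127.0.0.1\r\ns=-\r\nt=0 0\r\n",
);
console.log(stanza);
```

The receiving side would parse the stanza, read the `kind` attribute and hand the SDP text to its peer connection; everything about who is in the conversation is already known from the chat itself.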

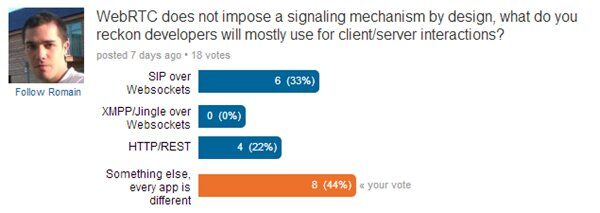

On the WebRTC group on LinkedIn, Romain Testard asked what developers will mostly use for client/server interactions when it comes to WebRTC. While the numbers are relatively low, I think the results speak for themselves.

You either side with the “legacy” world of SIP, or… you do whatever you feel like.

I can find only two reasons to use SIP signaling for WebRTC:

- Your current service runs on SIP and you don’t want to, or simply can’t, replace it all with something else – think of Blue Jeans or Vidtel offering WebRTC as part of an existing enterprise video conferencing service. They need to support H.323 and SIP endpoints, so for them WebRTC is just another entry point into their system.

- You need backend servers to do work like IVR, recording, gatewaying, etc. – and the ecosystem available to you today mainly uses SIP.
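In both cases, what the SIP side ends up handling is the browser's SDP offer carried as the body of a SIP INVITE. The TypeScript sketch below builds a minimal INVITE with the headers RFC 3261 requires of a request. The `buildInvite` helper, its `InviteParams` shape, all URIs and identifiers, and the choice of the `WSS` Via transport (the token used for SIP over WebSocket, RFC 7118) are illustrative assumptions; a real deployment would use a SIP stack rather than hand-built strings.

```typescript
// Hypothetical parameters for a WebRTC client's INVITE toward a SIP backend
// (IVR, recorder, gateway). All URIs and identifiers are placeholders.
interface InviteParams {
  requestUri: string;
  fromUri: string;
  toUri: string;
  contactUri: string;
  viaHost: string;
  branch: string; // RFC 3261 branch IDs start with the magic cookie "z9hG4bK"
  fromTag: string;
  callId: string;
  sdp: string; // the SDP offer produced on the WebRTC side
}

// Build a minimal SIP INVITE whose body is the WebRTC SDP offer.
function buildInvite(p: InviteParams): string {
  if (!p.branch.startsWith("z9hG4bK")) {
    throw new Error('Via branch must start with "z9hG4bK"');
  }
  // Content-Length counts bytes of the body, not UTF-16 code units.
  const bodyLength = new TextEncoder().encode(p.sdp).length;
  const lines = [
    `INVITE ${p.requestUri} SIP/2.0`,
    // "WSS" is the Via transport token for SIP over secure WebSocket.
    `Via: SIP/2.0/WSS ${p.viaHost};branch=${p.branch}`,
    "Max-Forwards: 70",
    `From: <${p.fromUri}>;tag=${p.fromTag}`,
    `To: <${p.toUri}>`,
    `Call-ID: ${p.callId}`,
    "CSeq: 1 INVITE",
    `Contact: <${p.contactUri}>`,
    "Content-Type: application/sdp",
    `Content-Length: ${bodyLength}`,
  ];
  // Each header line ends in CRLF; an empty line separates headers from body.
  return lines.join("\r\n") + "\r\n\r\n" + p.sdp;
}

// Usage with placeholder values.
const params: InviteParams = {
  requestUri: "sip:ivr@pbx.example.com",
  fromUri: "sip:alice@example.com",
  toUri: "sip:ivr@pbx.example.com",
  contactUri: "sip:alice@client.invalid;transport=ws",
  viaHost: "client.invalid",
  branch: "z9hG4bK776asdhds",
  fromTag: "1928301774",
  callId: "a84b4c76e66710",
  sdp: "v=0\r\ns=-\r\n",
};
const invite = buildInvite(params);
console.log(invite);
```

This is the kind of plumbing that makes SIP attractive when the backend ecosystem already speaks it: the SDP passes through untouched, and the SIP headers supply routing and dialog state.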

While the first reason won’t go away in the coming years, I can foresee a set of backend solutions that run purely on WebRTC with some proprietary, web-developer-friendly signaling – REST or otherwise. This fits the mindset of the developers who build these systems better, and probably provides a better long-term solution at the end of the day.
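By contrast, web-developer signaling can be as small as a handful of JSON message types sent over a WebSocket or posted to a REST endpoint. The TypeScript sketch below shows one hypothetical schema and a validating parser. The message names, the `room` field and `parseSignalingMessage` are assumptions rather than any standard; the candidate fields simply mirror `RTCIceCandidateInit`.

```typescript
// Hypothetical JSON signaling schema. Nothing here is standardized, which is
// exactly the freedom described above. Candidate fields mirror RTCIceCandidateInit.
type SignalingMessage =
  | { type: "offer" | "answer"; room: string; sdp: string }
  | {
      type: "candidate";
      room: string;
      candidate: string;
      sdpMid: string | null;
      sdpMLineIndex: number | null;
    }
  | { type: "bye"; room: string };

// Parse and validate one message received over a WebSocket or REST call.
function parseSignalingMessage(raw: string): SignalingMessage {
  const data: unknown = JSON.parse(raw);
  if (typeof data !== "object" || data === null) {
    throw new Error("signaling message must be a JSON object");
  }
  const m = data as Record<string, unknown>;
  const type = m["type"];
  const room = m["room"];
  if (typeof room !== "string") throw new Error("missing room");

  if (type === "offer" || type === "answer") {
    const sdp = m["sdp"];
    if (typeof sdp !== "string") throw new Error("missing sdp");
    return { type: type === "offer" ? "offer" : "answer", room, sdp };
  }
  if (type === "candidate") {
    const candidate = m["candidate"];
    if (typeof candidate !== "string") throw new Error("missing candidate");
    const mid = m["sdpMid"];
    const index = m["sdpMLineIndex"];
    return {
      type: "candidate",
      room,
      candidate,
      sdpMid: typeof mid === "string" ? mid : null,
      sdpMLineIndex: typeof index === "number" ? index : null,
    };
  }
  if (type === "bye") return { type: "bye", room };
  throw new Error(`unknown signaling message type: ${String(type)}`);
}

// Usage: the same type describes what the client sends and what it receives.
const outgoing: SignalingMessage = { type: "offer", room: "demo", sdp: "v=0\r\n" };
const received = parseSignalingMessage(JSON.stringify(outgoing));
console.log(received.type, received.room);
```

A discriminated union on `type` lets the compiler check that every message kind is handled, and the whole protocol stays in the language and tooling the web developer already uses.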

If you are planning on developing a service with WebRTC, take the time to think about your signaling options. It isn’t a trivial decision, as there is no single answer, and it can make or break your architecture moving forward.
