Different ways to do the same thing.

One of the biggest problems is choice. We don’t like having choice. Really. The fewer options you have in front of you, the easier it is to choose. The more options we have, the less inclined we are to make a decision. It might be this thing called FOMO (Fear Of Missing Out), or the fact that we don’t want to make a decision without having all the information (something that is impossible to achieve anyway), or it might just be the fear of committing to something: commitment means owning the decision and its ramifications.

WebRTC comes with a huge set of options to select from if you are a developer. Heck – even as a user of this technology I can no longer say what service I am using:

- I use Intermedia AnyMeeting for my course office hours and workshops

- Google Meet for testRTC meetings with customers

- Whatever a customer wants for my own consultation meetings, which varies between Hangouts, Skype, appear.in, talky, GoToMeeting, WebEx, … or the customer’s own service

In my online course, there’s a lesson discussing NAT traversal. One of the things I share there is the need to place the WebRTC TURN server as close as possible to the edge, to the user with his WebRTC client. Last week, in one of my Office Hour sessions, a question was raised: how do you make that decision? The answer isn’t clear-cut. There are… a few options.

My guess is that in most cases, the idea of taking a problem and scaling it out seems daunting. Taking that same scale-out problem and spreading it across the globe to offer lower latency and geolocation support might seem paralyzing. At the end of the day, though, it isn’t that complex to get a decent solution going.

The idea is that you’ve got a user running a browser or a mobile app. He is trying to reach your infrastructure (probably to talk to another person, but still, through your infrastructure). And since your infrastructure is spread all over the globe, you want him to connect to the box closest to him.

How do we know what’s closest? Here are two ways I’ve seen this go down with WebRTC based services:

Via DNS

When your browser tries to reach a server, be it the STUN or TURN server, the signaling server, or whatever, it ends up using DNS in most cases (you’d better use DNS rather than IP addresses for these things in production; you are aware of that, right?).

Since the DNS knows where the request originated, it can make an informed decision as to which IP address to give back to the browser. That decision is made on the infrastructure side, by the DNS service itself.
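Here is a minimal sketch of that decision, assuming the DNS service has already mapped the query’s source to a country and continent. Keep in mind that the source it sees is usually the user’s recursive resolver rather than the browser itself, unless the resolver forwards the client’s subnet (EDNS Client Subnet). The answer tables, hostname and addresses are hypothetical; the most specific match wins and a default catches everything else.

```python
from typing import Optional

# Hypothetical answer tables for turn.example.com (documentation IP ranges).
BY_COUNTRY = {"IL": "203.0.113.10"}        # e.g. a PoP close to Israel
BY_CONTINENT = {
    "EU": "198.51.100.20",                 # e.g. a PoP in Frankfurt
    "NA": "192.0.2.30",                    # e.g. a PoP in Virginia
}
DEFAULT_ANSWER = "192.0.2.30"              # returned when nothing matches


def resolve_turn_address(country: Optional[str], continent: Optional[str]) -> str:
    """Pick the A record to return, most specific match first."""
    if country in BY_COUNTRY:
        return BY_COUNTRY[country]
    if continent in BY_CONTINENT:
        return BY_CONTINENT[continent]
    return DEFAULT_ANSWER


print(resolve_turn_address("IL", "AS"))  # 203.0.113.10
print(resolve_turn_address("FR", "EU"))  # 198.51.100.20
print(resolve_turn_address("BR", "SA"))  # 192.0.2.30
```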

One of the popular services for this is AWS Route 53. From their website:

Amazon Route 53 Traffic Flow makes it easy for you to manage traffic globally through a variety of routing types, including Latency Based Routing, Geo DNS, and Weighted Round Robin.

This means you can put a policy in place so that the Route 53 DNS will simply route the incoming request to a server based on its location (Latency Based Routing, Geo DNS) or based on load balancing (Weighted Round Robin).
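With Route 53, such a policy is a set of records sharing one name. A sketch, assuming hypothetical hostnames, regions and IPs, that builds latency-based A records (one per region) in the `ChangeBatch` shape that boto3’s `change_resource_record_sets` call expects:

```python
# Hypothetical TURN deployments: AWS region -> public IP of the TURN server there.
TURN_REGIONS = {
    "us-east-1": "192.0.2.30",
    "eu-central-1": "198.51.100.20",
    "ap-southeast-1": "203.0.113.40",
}


def latency_change_batch(name: str, regions: dict, ttl: int = 60) -> dict:
    """One latency-based A record per region, all sharing the same name."""
    changes = []
    for region, ip in sorted(regions.items()):
        changes.append({
            "Action": "UPSERT",
            "ResourceRecordSet": {
                "Name": name,
                "Type": "A",
                # Records that share a name need distinct SetIdentifiers.
                "SetIdentifier": f"turn-{region}",
                # The AWS region this record represents; Route 53 answers with
                # the record whose region it considers lowest-latency.
                "Region": region,
                # A short TTL lets you shift traffic quickly.
                "TTL": ttl,
                "ResourceRecords": [{"Value": ip}],
            },
        })
    return {"Comment": f"Latency-based routing for {name}", "Changes": changes}


batch = latency_change_batch("turn.example.com", TURN_REGIONS)
print(len(batch["Changes"]))  # 3
```

You would send it with `boto3.client("route53").change_resource_record_sets(HostedZoneId=..., ChangeBatch=batch)` for your own hosted zone. Replacing `Region` with `Weight` gives weighted round robin, and `GeoLocation` (for example `{"CountryCode": "IL"}`) gives geo DNS.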

appear.in, for example, uses Route 53.

Amazon Route 53 isn’t the only such service – there are others out there, and depending on the cloud provider you use and your needs, you may end up using something else.

Via Geo IP

Another option is to use a Geo IP service. You give it your public IP address and get your location in return.

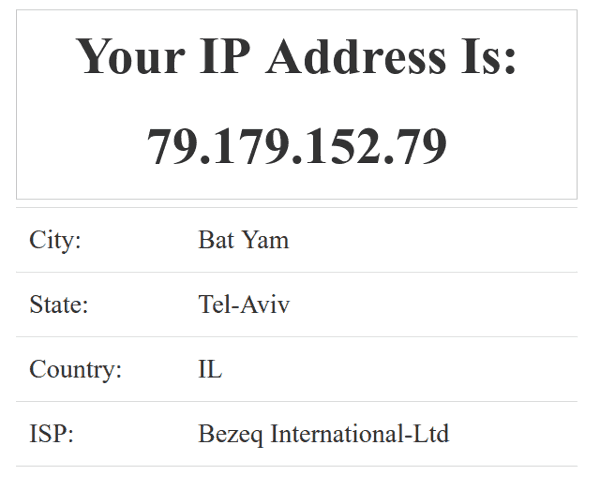

You can use this link for exampleto check out where you are. Here’s what I get:

A few things that immediately show up here:

- Yes. I live in Israel

- Yes. My ISP is Bezeq

- Not really… Tel-Aviv isn’t a state. It is just a city

- And I don’t live in Bat Yam. I live in Kiryat Ono – a 20km drive

That said, this is pretty close!

Now, this is a link, but you can also get this kind of information programmatically, and there are vendors who offer just that. I’ve had the pleasure of using MaxMind’s GeoIP. It comes in two flavors:

- As a service: you send them an API request and get geo IP related information back, priced per query

- As a database – you download their database and query it locally
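The database flavor boils down to a longest-prefix match of the user’s public IP address against a table of network ranges. A toy version, assuming a tiny in-memory table with hypothetical prefixes and locations (this is not MaxMind’s file format or reader API):

```python
import ipaddress
from typing import Optional, Tuple

# Hypothetical table: network prefix -> (country, city).
GEO_TABLE = {
    "203.0.113.0/24": ("IL", "Tel Aviv"),
    "203.0.113.128/25": ("IL", "Bat Yam"),
    "198.51.100.0/24": ("DE", "Frankfurt"),
}
_NETWORKS = [(ipaddress.ip_network(p), loc) for p, loc in GEO_TABLE.items()]


def lookup(ip: str) -> Optional[Tuple[str, str]]:
    """Return (country, city) for the most specific prefix containing ip."""
    addr = ipaddress.ip_address(ip)
    best = None
    for net, loc in _NETWORKS:
        # Membership is False (not an error) when IP versions differ.
        if addr in net and (best is None or net.prefixlen > best[0].prefixlen):
            best = (net, loc)
    return best[1] if best else None


print(lookup("203.0.113.200"))  # ('IL', 'Bat Yam')
print(lookup("192.0.2.1"))      # None
```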

There’s a confidence level to such a service, as the reply you get might not be accurate at all. We had a customer complain that testRTC’s servers jinxed his geolocation feature and added latency: his geo IP service thought the machine was in Europe, while in truth it was located in the US.

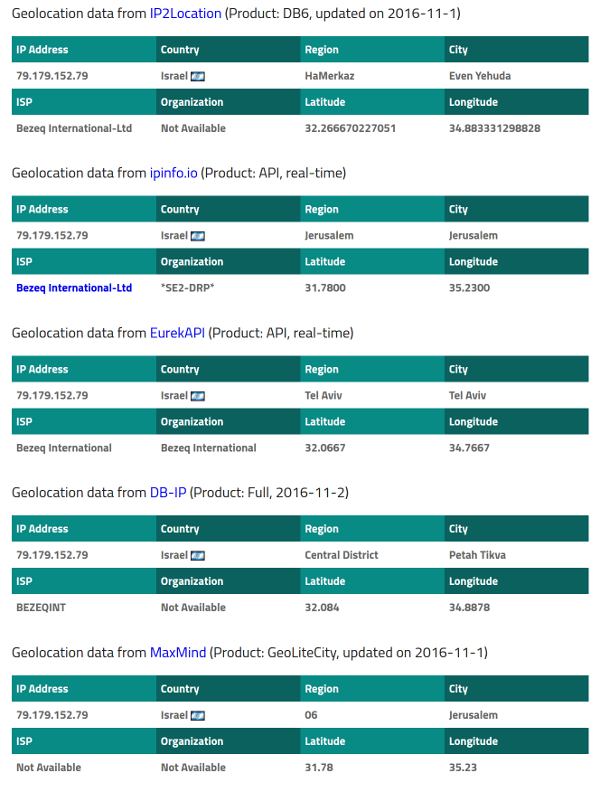

The interesting thing is, that different such services will give you different responses. Here’s where I am located base (see here):

As you can see, there’s a real debate as to my exact whereabouts. They all agree I live in Israel, but the city answers are spread out, and none of them is exact in my case.
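If you query more than one service, one way to live with the disagreement is to take the majority country and keep the city only when most services agree on it. A sketch, assuming each answer is already normalized to a (country, city) pair; the voting rule is an illustrative assumption, not a feature of any of these services:

```python
from collections import Counter
from typing import List, Optional, Tuple

Answer = Tuple[str, Optional[str]]  # (country code, city or None)


def reconcile(answers: List[Answer]) -> Tuple[Optional[str], Optional[str]]:
    """Majority country; keep the city only if most services agree on it."""
    if not answers:
        return None, None
    country = Counter(c for c, _ in answers).most_common(1)[0][0]
    cities = Counter(city for c, city in answers if c == country and city)
    if cities:
        city, votes = cities.most_common(1)[0]
        if votes * 2 > len(answers):  # strict majority of all services
            return country, city
    return country, None  # fall back to country-level precision


# Hypothetical answers from three services that agree only on the country.
print(reconcile([("IL", "Tel Aviv"), ("IL", "Bat Yam"), ("IL", "Ramat Gan")]))  # ('IL', None)
```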

So.

There are many Geo IP services. They will differ in the results they give. They are best used when you need an application-level geolocation solution and a DNS one can’t be used directly.
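Once an application-level lookup (or telemetry) gives you coordinates, picking the closest PoP is a great-circle distance calculation. A sketch using the haversine formula, with hypothetical PoPs and coordinates:

```python
import math

EARTH_RADIUS_KM = 6371.0

# Hypothetical PoPs: name -> (latitude, longitude) in degrees.
POPS = {
    "frankfurt": (50.11, 8.68),
    "virginia": (38.95, -77.45),
    "singapore": (1.35, 103.82),
}


def haversine_km(a, b) -> float:
    """Great-circle distance between two (lat, lon) points, in km."""
    lat1, lon1 = map(math.radians, a)
    lat2, lon2 = map(math.radians, b)
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(h))


def nearest_pop(position, pops=POPS) -> str:
    """Name of the PoP with the smallest great-circle distance."""
    return min(pops, key=lambda name: haversine_km(position, pops[name]))


print(nearest_pop((32.08, 34.78)))  # frankfurt (for a user in Tel Aviv)
```

An error of 20 km, like the Bat Yam vs. Kiryat Ono mix-up above, won’t change the pick. A Europe vs. US mistake, like the one in the testRTC story, will.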

Telemetry

Inside an app, or even in a browser once the user grants permission, you can get better location information.

A mobile device has a GPS, so it will know the position of the device better than anything else most of the time. The browser can do something similar.

The problem with this type of location is that you need permission to use it, and asking for more permissions from the user means adding friction – decide if this is what you want to do or not.

Don’t use WebRTC iceServers to geolocate your users

I’ve seen those who use the Peer Connection configuration to pass multiple TURN servers in the iceServers parameter, thinking that WebRTC will do a good job of figuring out which TURN server (and by extension which region) is best for a session to use.

On paper this might work. In practice – it doesn’t, and for multiple reasons:

- Some browsers simply dislike a lot of servers in the iceServers list. They’ll even warn about it

- The longer the list, the more ICE candidates you will have. The more ICE candidates, the more candidate pairs to test. The more pairs to test, the more messages you will have flying around at the beginning of a call. Not a good thing

- WebRTC will tend to try and figure out which connection is best to which TURN server. In turn, it may oscillate between TURN servers throughout the session which leads to poor media quality – exactly what you’re trying to avoid
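The alternative is to make the location decision on your side and hand the client a single TURN server for the chosen region. A sketch that builds that configuration server-side, assuming hypothetical regional hostnames and placeholder credentials; the JSON goes to the client, which uses it as the `iceServers` member of its `RTCConfiguration`:

```python
import json

# Hypothetical regional TURN hostnames.
TURN_HOSTS = {
    "eu": "turn-eu.example.com",
    "us": "turn-us.example.com",
    "ap": "turn-ap.example.com",
}


def ice_servers_for(region: str, username: str, credential: str) -> list:
    """STUN plus one TURN server, all in the single region chosen for the user."""
    host = TURN_HOSTS[region]
    return [
        {"urls": f"stun:{host}:3478"},
        {
            # Same server over several transports: UDP, plus TCP and TLS on 443
            # for networks that block UDP.
            "urls": [
                f"turn:{host}:3478?transport=udp",
                f"turn:{host}:3478?transport=tcp",
                f"turns:{host}:443?transport=tcp",
            ],
            "username": username,
            "credential": credential,
        },
    ]


def rtc_configuration_json(region: str, username: str, credential: str) -> str:
    """JSON the client can pass to new RTCPeerConnection(...)."""
    return json.dumps({"iceServers": ice_servers_for(region, username, credential)})


print(rtc_configuration_json("eu", "user", "secret"))
```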

What’s next?

I am sure the DNS option is similar in its accuracy level to the geo IP ones, though it might be a bit more up to date, or have some learning algorithm to handle latency-based routing. At the end of the day, you should use one of these options, and it doesn’t really matter which.

Assume that the solution you end up with isn’t bulletproof: it will work most of the time, but sometimes it may fail, in which case latency will suffer a bit. Oh, and if in doubt, just pick a managed WebRTC TURN service instead…

Need to pick a WebRTC media server framework? Why not use my Free Media Server Framework Selection Worksheet when checking your alternatives?

Thanks for a solid overview of the options, Tsahi. We are using Route 53’s latency-based routing for STUN/TURN in Gruveo.

Art – thanks for sharing. Have you considered anything else or was that like a default approach because you’re already on AWS?

Hi Tsahi – great post, thanks for the summary!

You briefly touched on Latency Based Routing in your DNS section, but I personally believe that’s one of the better methods. Just because you’re physically close to a particular PoP doesn’t necessarily mean that PoP is optimal. I may be in Berlin, physically closer to the Copenhagen datacenter, but have a one-hop connection to Munich. In other words, physical proximity isn’t the same as network proximity.

As you say, Amazon Route53 offers Latency Based Routing for DNS, but there are some inherent limitations to how good a job they can do at resolve time. There is a tradeoff between how long you spend determining the closest server and the need to get a response back to the application as fast as possible. Also, who is to say that the route (and therefore latency) experienced by DNS traffic will be the same as for RTP?

A nice solution could actually be a hybrid of a few of these approaches:

1. Identify three potential media server candidates based on geographic proximity (DNS, external database or device location reporting) and server load.

2. Quickly measure the round-trip latency from the client application to all three, using the desired protocol.

3. Pick the server with the lowest measured response time and use it for the call.
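The three steps could be sketched roughly as follows. Assumptions: the PoP table, coordinates, load figures and the 0.8 load cap are hypothetical, and the latency probe is a single STUN Binding request over UDP (RFC 5389 header format). Raw UDP probes like this fit a native client or a test harness; a browser can’t open raw UDP sockets, so there the measurement would need another vehicle.

```python
import math
import os
import socket
import struct
import time

MAGIC_COOKIE = 0x2112A442

# Hypothetical PoPs: public host, (lat, lon) and current load (0..1).
POPS = {
    "copenhagen": {"host": "198.51.100.2", "coords": (55.68, 12.57), "load": 0.2},
    "frankfurt": {"host": "198.51.100.3", "coords": (50.11, 8.68), "load": 0.95},
    "munich": {"host": "198.51.100.1", "coords": (48.14, 11.58), "load": 0.3},
    "amsterdam": {"host": "198.51.100.4", "coords": (52.37, 4.90), "load": 0.4},
    "virginia": {"host": "198.51.100.5", "coords": (38.95, -77.45), "load": 0.1},
}


def distance_km(a, b) -> float:
    """Haversine great-circle distance between two (lat, lon) points."""
    lat1, lon1, lat2, lon2 = map(math.radians, (*a, *b))
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * 6371.0 * math.asin(math.sqrt(h))


def shortlist(pops, position, k=3, max_load=0.8):
    """Step 1: the k geographically closest PoPs that aren't overloaded."""
    usable = [name for name, p in pops.items() if p["load"] <= max_load]
    usable.sort(key=lambda name: distance_km(position, pops[name]["coords"]))
    return usable[:k]


def binding_request(txid: bytes) -> bytes:
    """STUN Binding request: type 0x0001, length 0, magic cookie, 12-byte txid."""
    return struct.pack("!HHI", 0x0001, 0, MAGIC_COOKIE) + txid


def stun_rtt_ms(host, port=3478, timeout=1.0):
    """Step 2: time one STUN Binding round trip; inf if nothing comes back."""
    txid = os.urandom(12)
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.settimeout(timeout)
        try:
            start = time.monotonic()
            sock.sendto(binding_request(txid), (host, port))
            while True:
                data, _ = sock.recvfrom(2048)
                # Only accept a reply carrying our transaction ID.
                if len(data) >= 20 and data[8:20] == txid:
                    return (time.monotonic() - start) * 1000.0
        except OSError:  # timeout or other network error
            return math.inf


def pick_server(pops, position, probe=stun_rtt_ms, k=3, max_load=0.8):
    """Step 3: the shortlisted PoP with the lowest measured RTT, or None."""
    rtts = {n: probe(pops[n]["host"]) for n in shortlist(pops, position, k, max_load)}
    best = min(rtts, key=rtts.get, default=None)
    return best if best is not None and rtts[best] != math.inf else None


# Hypothetical measurements standing in for real probes, for a user in Berlin.
fake_rtts = {"198.51.100.2": 25.0, "198.51.100.1": 12.0, "198.51.100.4": 30.0}
berlin = (52.52, 13.40)
print(shortlist(POPS, berlin))  # ['copenhagen', 'munich', 'amsterdam']
print(pick_server(POPS, berlin, probe=lambda h: fake_rtts.get(h, math.inf)))  # munich
```

Probes run one after another here for clarity; running the three in parallel keeps the added setup time down to a single timeout.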

If the client and infrastructure are sufficiently clever, the system could potentially keep those parallel connections open and actively swap between them during the call as network conditions shift. Naturally, there are lots of caveats around ICE and firewall traversal which could affect how well this might work.

Nicholas,

Thanks. I am a fan of simple approaches, but in this case, I guess I’d go for an elegant one if one is to be found 🙂

I am wondering if anyone has gone to the length of putting such a system in place.

Hi Nicholas,

Maybe anycast, as an alternative to split-horizon DNS discovery, could help find the closest TURN server PoP.

As described in the TURN server discovery draft:

https://tools.ietf.org/html/draft-ietf-tram-turn-server-discovery-10#section-6

What about Nicholas’s approach, but just including the 3 closest in the iceServers array and letting ICE sort out the best? My understanding is that the fastest response is at the head of the queue for negotiation.

Warren, that should work. You’ll still need to configure the backend properly and take care of situations where sessions are made direct or “via” STUN to a media server in your backend.

Can two WebRTC clients use two different TURN servers (cascading) to set up an audio/video session?

Yes they can. It doesn’t occur often, but it can happen.

Apropos Nicholas’s suggestion, can we not use the Multivalue Answer routing option provided by AWS Route 53? Then the browser can gather ICE candidates from each one and let ICE sort out the best candidate.
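One way to read this suggestion: resolve the multivalue name once, then hand each address to the client as its own TURN entry, instead of relying on how each browser treats several A records behind one TURN hostname. A sketch, assuming a hypothetical hostname and placeholder credentials:

```python
import socket

TURN_PORT = 3478


def resolve_all(host: str, port: int = TURN_PORT) -> list:
    """Every distinct IPv4 address the resolver returns for host, in order."""
    infos = socket.getaddrinfo(host, port, socket.AF_INET, socket.SOCK_DGRAM)
    addresses = []
    for *_, sockaddr in infos:
        if sockaddr[0] not in addresses:
            addresses.append(sockaddr[0])
    return addresses


def ice_servers_from(addresses: list, username: str, credential: str,
                     port: int = TURN_PORT) -> list:
    """One TURN entry per address, so ICE can gather a relay candidate from each."""
    return [
        {"urls": f"turn:{ip}:{port}?transport=udp",
         "username": username, "credential": credential}
        for ip in addresses
    ]


# Against a hypothetical multivalue name this would be:
#   ice_servers_from(resolve_all("turn.example.com"), "user", "secret")
print(ice_servers_from(["192.0.2.1", "192.0.2.2"], "user", "secret"))
```

This brings back the long-iceServers concerns from the post, and, as the reply below points out, it only covers TURN relays, not media server allocation.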

Aswath,

How are you going to map the responses? You are essentially opening a single peer connection in front of 3 different media servers in order to run ICE negotiation against all 3 at the same time using the same algorithm which is supposed to take place on a single machine on the server side. Seems somewhat complicated and risky.

I am missing your point about it being complicated and risky. Doesn’t the spec allow multiple ICE servers to be included, with the client getting multiple relay addresses and letting the ICE procedure select one among them? The only difference between that and what I am suggesting is that the multiple servers will be identified by a single domain name, and the DNS query will identify the individual addresses. I don’t think there is any procedural difference between the two.

I probably didn’t finish that thought on the screen 🙂

In the context of this post, it is probably enough and should work. But once you add the media servers into the mix, then this is where things get tricky – relying on ICE is too late in the process to allocate a media server, as you need the media server’s public IP addresses to begin with.

The initial SDP that starts the whole ICE procedure already gets connected to a media server, and that connection is the one determining the server location – over HTTPS and not SRTP.