Explore the world of video codecs and their significance in WebRTC. Understand the advantages and trade-offs of switching between different codec generations.

Technology grinds forward with endless improvements. I remember when I first came to video conferencing, over 20 years ago, the video codecs used were H.261, H.263 and H.263+ with all of its glorious variants. H.264 was starting to be discussed and deployed here and there.

Today? H.264 and VP8 are everywhere. We bump into VP9 in WebRTC applications and we talk about AV1.

What does it mean exactly to move from one video codec generation to another? What do we gain? What do we lose? This is what I want to cover in this article.

Table of contents

- The TL;DR version

- What is a video codec anyway?

- Hardware acceleration and video codecs

- New video codec generation = newer, more sophisticated tools

- A few hard facts about video codecs

- WebRTC MTI – our baseline video codec generation

- The emergence of VP9 and rejection of HEVC

- Our brave new world of AV1

- Things to consider when introducing a new video codec in your application

- Where to find out more about video codecs and WebRTC

The TL;DR version

Don’t have time for my ramblings? This short video should have you mostly covered:

I started recording these videos a few months back. If you like them, then don’t forget to like them.

The TL;DR:

- Each video codec generation compresses better, giving higher video quality for the same bitrate as the previous generation

- But each new video codec generation requires more resources – CPU and memory – to get its job done

- And there are nuances to it, which are not covered in the tl;dr – for them, you’ll have to read on if you’re interested

What is a video codec anyway?

A codec is a piece of software that compresses and decompresses data. A video codec consists of an encoder which compresses a raw video input and a decoder which decompresses the compressed bitstream of a video back to something that can be displayed.

We are dealing here with lossy codecs: codecs that don’t preserve all of the data, but rather discard some information, trying to stay as close to the original as possible while storing as little data as possible.
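To get a feel for why lossy compression is needed at all, here is a small back-of-the-envelope sketch. It assumes 8-bit video with 4:2:0 chroma subsampling (12 bits per pixel), and the 1.5 Mbps target is a hypothetical number I picked only for illustration; the function names are mine.

```typescript
// Raw (uncompressed) bitrate of a video stream, in bits per second.
// bitsPerPixel = 12 assumes 8-bit samples with 4:2:0 chroma subsampling (e.g. I420).
function rawBitrateBps(width: number, height: number, fps: number, bitsPerPixel = 12): number {
  return width * height * bitsPerPixel * fps;
}

// How many times smaller the encoded stream is compared to the raw one.
function compressionRatio(rawBps: number, encodedBps: number): number {
  return rawBps / encodedBps;
}

// 720p at 30 frames per second: 1280 * 720 * 12 * 30 = 331,776,000 bits/s
const raw720p30 = rawBitrateBps(1280, 720, 30);

// 1.5 Mbps is a hypothetical target bitrate, used only as an example.
const ratio = compressionRatio(raw720p30, 1500000);

console.log(`raw: ${(raw720p30 / 1e6).toFixed(1)} Mbps, ratio: ${ratio.toFixed(0)}:1`);
```

Roughly 332 Mbps of raw video has to be squeezed into something a home connection can carry, which is why the codec has to throw information away.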

The way video codecs are defined is by their decoder:

Given a bitstream generated by a video encoder, the video codec specification indicates how to decompress that bitstream back into a viewable format.

What does that mean?

- The decoder is mostly deterministic

  - Our implementation of the decoder will almost always be focused on performance

  - The faster it can decode with as few resources as possible (CPU and memory) the better

  - Remember this for the next section, when we’ll look at hardware acceleration

- Encoders are just a set of tools for us to use

  - Encoders aren’t deterministic. They come in different shapes and sizes

  - They can cater for speed, for latency, for better quality, etc

  - A video codec specification indicates different types of tools that can be used to compress data

  - The encoder decides which tools to use at which point, ending up with an encoded bitstream

  - Different encoder implementations will have different resulting compression bitrate and quality

- In WebRTC, we value low latency

  - Which means that our codecs are going to have to make decisions fast, sometimes sacrificing quality for speed

  - Usually making use of a lot of heuristics and assumptions while doing so

  - Some would say this is part math and part art
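The split between a fixed decoder and free-form encoders can be illustrated with a toy example. This is not how any real video codec works; it is a minimal sketch where the only "tool" is quantization, and every name in it is made up for the illustration.

```typescript
// The "specification": a bitstream format plus the rule for decoding it.
interface Bitstream {
  step: number;     // quantization step chosen by the encoder
  levels: number[]; // quantized sample values
}

// The decoder is fixed by the specification: same bitstream in, same samples out.
function decode(bs: Bitstream): number[] {
  return bs.levels.map((level) => level * bs.step);
}

// Encoders are free to decide how to use the tool (here: which step size to pick).
function encode(samples: number[], step: number): Bitstream {
  return { step, levels: samples.map((s) => Math.round(s / step)) };
}

// Mean absolute error between the original and the decoded samples.
function meanAbsError(original: number[], decoded: number[]): number {
  return original.reduce((sum, v, i) => sum + Math.abs(v - decoded[i]), 0) / original.length;
}

const samples = [12, 200, 37, 90, 143, 255, 8, 64];
const fine = encode(samples, 4);    // favors quality: small step, larger level values
const coarse = encode(samples, 32); // favors bitrate: large step, smaller level values

console.log("fine error:", meanAbsError(samples, decode(fine)));
console.log("coarse error:", meanAbsError(samples, decode(coarse)));
```

Both bitstreams are valid and the same decoder handles both. The two encoders simply made different trade-offs, which is the part that real encoders turn into heuristics and art.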

Hardware acceleration and video codecs

Video codecs require a lot of CPU and memory to operate. This means that in many cases, our preference would be to offload their job from the CPU to hardware acceleration. Most modern devices today have media acceleration components in the form of GPUs or other chipset components that are capable of bearing the brunt of this work. It is why mobile devices can shoot high quality videos with their internal camera for example.

Since video codecs are dictated by the specification of their decoder, defining and implementing hardware acceleration for video decoders is a lot easier than doing the same thing for video encoders. That’s because the decoders are deterministic.

For the video encoder, you need to start asking questions –

- Which tools should the encoder use? (do we need things like SVC or temporal scalability, which only make sense for video conferencing)

- At what speed/latency does it need to operate? (a video camera latency can be a lot higher than what we need for a video conference application for example)

This leads us to the fact that in many cases and scenarios, hardware acceleration of video codecs isn’t suitable for WebRTC at all – it is added to devices so people can watch YouTube videos of cats or create their own TikTok videos. Both of these activities are asynchronous ones – we don’t care how long the process of encoding and decoding takes (we do, but not in the range of milliseconds of latency).

Up until a few years ago, most hardware acceleration out there didn’t work well for WebRTC and video conferencing applications. This started to change with the Covid pandemic, which caused a shift in priorities. Remote work and remote collaboration scenarios climbed the priorities list for device manufacturers and their hardware acceleration components.

Where does that leave us?

- Hard to say

- Sometimes, hardware acceleration won’t be available

- Other times, hardware acceleration will be available for decoding but not for encoding. Or the encoding available in hardware acceleration won’t be suitable for things like WebRTC

- At times, hardware acceleration will be available, but won’t work as advertised

- While in many cases, hardware acceleration will just work for you

The end result? Another headache to deal with… and we didn’t even start to talk about codec generations.
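The cases listed above can be sketched as a small classification step. The `supported`, `smooth` and `powerEfficient` fields mirror what the browser's Media Capabilities API reports; the verdict names and the policy itself are hypothetical, something you would tune for your own application.

```typescript
// Mirrors the result fields reported by the Media Capabilities API in browsers.
interface CapabilityInfo {
  supported: boolean;
  smooth: boolean;
  powerEfficient: boolean;
}

// Hypothetical verdicts, invented for this sketch.
type CodecVerdict = "unsupported" | "decode-only" | "usable" | "preferred";

// Hypothetical policy: decide what a codec is good for on this device.
function classifyCodec(encode: CapabilityInfo, decode: CapabilityInfo): CodecVerdict {
  // Without smooth decoding there is nothing to build on.
  if (!decode.supported || !decode.smooth) return "unsupported";
  // Decoding works, but encoding is missing or too slow for real time.
  if (!encode.supported || !encode.smooth) return "decode-only";
  // powerEfficient is a hint that often, but not always, means hardware acceleration.
  return encode.powerEfficient && decode.powerEfficient ? "preferred" : "usable";
}

const verdict = classifyCodec(
  { supported: true, smooth: false, powerEfficient: false }, // encoder result
  { supported: true, smooth: true, powerEfficient: true }    // decoder result
);
console.log(verdict);
```

In a browser, the two inputs could come from `navigator.mediaCapabilities.encodingInfo()` and `decodingInfo()` called with `type: "webrtc"`, where the browser supports that type. Since hardware acceleration doesn’t always work as advertised, I would treat the verdict as a starting point and still watch runtime statistics.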

New video codec generation = newer, more sophisticated tools

I mentioned the tools that are the basis of a video codec. The decoder knows how to read a bitstream based on these tools. The encoder picks and chooses which tools to use when.

When moving to a newer codec generation what usually happens is that the tools we had are getting more flexible and sophisticated, introducing new features and capabilities. And new tools are also added.

More tools and features mean the encoder now has more decisions to make when it compresses. This usually means the encoder needs to use more memory and CPU to get the job done if what we’re aiming for is better compression.

Switching from one video codec generation to another means we need the devices to be able to carry that additional resource load…

A few hard facts about video codecs

Here are a few things to remember when dealing with video codecs:

- Video codecs are likely the highest consumers of CPU on your device in a video conference (well… at least before we start factoring in the brave new world of AI)

- Encoders require more CPU and memory than decoders

- In a typical scenario, you will have a single encoder and one or more decoders (that’s in group video meetings)

- Hardware acceleration isn’t always available. When it is, it might not be for the video codec you are using and it might be buggy in certain conditions

- Higher resolution and frame rate increase CPU and memory requirements of a video codec

- Some video codecs are royalty bearing (more on that later)

- Encoding a video for the purpose of streaming it live is different than encoding it for a video conference which is different than encoding it to upload it to a photo album. In each we will be focusing on different coding tools available to us

- Video codecs require a large ecosystem around them to thrive. Only a few reached this level of adoption, and most of them are available in WebRTC

- Different tools in different video codecs mean that switching from one codec to another in a commercial application isn’t as simple as just replacing the codec in the edge devices. There’s a lot more to it in order to make real use of a video codec’s capabilities

WebRTC MTI – our baseline video codec generation

It is time to start looking at WebRTC and its video codecs. We will begin with the MTI video codecs – the Mandatory To Implement. This was a big debate back in the day. The standardization organizations couldn’t decide whether VP8 or H.264 should be the MTI codec.

To make a long story short – a decision was made that both are MTI.

What does this mean exactly?

- Browsers implementing WebRTC need to support both of these video codecs (most do, but not all – in some Android devices, you won’t have H.264 support for example)

- Your application can decide which of these video codecs to use – it isn’t mandatory on your end to use both or either of them

These video codecs are rather comparable for their “price/performance”. There are differences though.

If you’re contemplating which one to use, I’ve got a short free video course to guide you through this decision making process: H.264 or VP8 – What Shall it be?
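Since the application gets to decide which codec to use, here is a minimal sketch of how that preference might be expressed. The helper below is my own illustration, not part of WebRTC: it only reorders a codec list, using a small stand-in type so it can run outside the browser.

```typescript
// Minimal stand-in for the browser's codec capability entries; only mimeType is used here.
interface CodecLike {
  mimeType: string; // e.g. "video/VP8", "video/H264", "video/rtx"
}

// Move codecs matching `preferred` (in that order) to the front,
// keeping everything else (rtx, red, other codecs) in its original order.
function preferCodecs<T extends CodecLike>(codecs: T[], preferred: string[]): T[] {
  const rank = (c: T): number => {
    const i = preferred.findIndex((p) => p.toLowerCase() === c.mimeType.toLowerCase());
    return i === -1 ? preferred.length : i;
  };
  // Array.prototype.sort is stable, so ties keep their original relative order.
  return [...codecs].sort((a, b) => rank(a) - rank(b));
}

const available: CodecLike[] = [
  { mimeType: "video/VP8" },
  { mimeType: "video/rtx" },
  { mimeType: "video/VP9" },
  { mimeType: "video/H264" },
  { mimeType: "video/red" },
];

const ordered = preferCodecs(available, ["video/H264", "video/VP8"]);
console.log(ordered.map((c) => c.mimeType).join(", "));

// In a browser, assuming an existing RTCRtpTransceiver named `transceiver`:
//   const caps = RTCRtpReceiver.getCapabilities("video");
//   if (caps) transceiver.setCodecPreferences(preferCodecs(caps.codecs, ["video/H264", "video/VP8"]));
```

The original list is left untouched, and entries such as `video/rtx` stay in the list because the browser still needs them for retransmissions.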

The emergence of VP9 and rejection of HEVC

The descendants of VP8 and H.264 are VP9 and HEVC.

H.264 is a royalty bearing codec and so is HEVC. VP8 and VP9 are both royalty free codecs.

HEVC being newer and considerably more expensive made things harder for it to be adopted for something like WebRTC. That’s because WebRTC requires a large ecosystem of vendors and agreements around how things are done. With a video codec, not knowing who needs to pay the royalties stifles its adoption.

And here, should the ones paying be the chipset vendor? Device manufacturer? The browser vendor? The application developer? No easy answer, so no decision.

This is why HEVC ended up being left out of WebRTC for the time being.

VP9 was an easy decision in comparison.

Today, you can find VP9 in applications such as Google Meet and Jitsi Meet among many others who decided to go for this video codec generation and not stay in the VP8/H.264 generation.

The big promise of VP9 was its SVC support.

Our brave new world of AV1

AV1 is our next gen of video codecs. The promise of a better world. Peace upon the earth. Well… no.

Just a fork in the road that puts the focus on a future that is mostly royalty free for video codecs (maybe).

What do we get from AV1 as a new video codec generation compared to VP9? Mainly what we got from VP9 compared to VP8: better quality for the same bitrate, at the price of CPU and memory.

Where VP9 brought us the promise of SVC, AV1 is bringing with it the promise of better screen sharing of text. Why? Because its compression tools are better equipped for text, something that was/is lacking in previous video codecs.

AV1 has most of the industry behind it. Somehow, at a magical moment in the past, they got together and came to the conclusion that a royalty free video codec would benefit everyone, creating the Alliance for Open Media and with it the AV1 specification. This gave the codec the push it needed to become the most dominant video coding technology of our near future.

For WebRTC, it marks the third video codec generation that we can now use:

- Not everywhere

- It still lacks hardware acceleration

- Performance is horrendous for high resolutions

Here’s an update of what Meta is doing with AV1 on mobile from their RTC@Scale event earlier this year.

This is a start. And a good one. You see experiments taking place as well as first steps towards productizing it (think Google Meet and Jitsi Meet here among others) in the following areas:

- Decoding only scenarios, where the encoder runs in the cloud

- Low bitrates, where we have enough CPU available for it

- When screen sharing at low frame rate is needed for text data

Things to consider when introducing a new video codec in your application

First things first. If you’re going to use a video codec of a newer generation than what you currently have, then this is what you’ll need to decide:

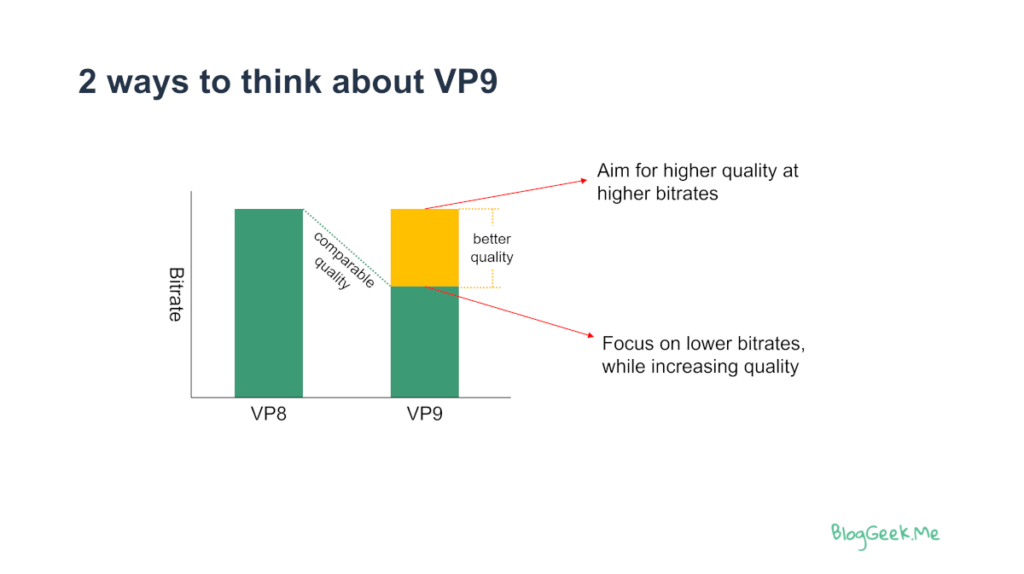

Do you focus on keeping the same bitrate you had in the past, effectively increasing the media quality of the session? Or alternatively, are you going to lower the bitrate from where it was, reducing your bandwidth requirements?

Obviously, you can also pick anything in between the two, reducing the bitrate used a bit and increasing the quality a bit.
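The choice between the two extremes can be written down as simple arithmetic. In this sketch, `savings` is the fraction of bitrate the newer codec saves at equal quality and `blend` is where you land between the two options. Both are assumptions you would have to measure and decide on yourself; the 0.25 used below is a hypothetical value, not a benchmark result.

```typescript
// savings: fraction of bitrate the new codec saves at equal quality (assumed; measure your own).
// blend:   0 = keep the old bitrate (spend the gain on quality),
//          1 = drop to the equal-quality bitrate (spend the gain on bandwidth).
function targetBitrateKbps(currentKbps: number, savings: number, blend: number): number {
  if (savings < 0 || savings >= 1) throw new RangeError("savings must be in [0, 1)");
  if (blend < 0 || blend > 1) throw new RangeError("blend must be in [0, 1]");
  const equalQualityKbps = currentKbps * (1 - savings);
  return currentKbps - blend * (currentKbps - equalQualityKbps);
}

// Hypothetical numbers: 1000 kbps today, 25% assumed savings.
console.log(targetBitrateKbps(1000, 0.25, 0));   // same bitrate, better quality
console.log(targetBitrateKbps(1000, 0.25, 1));   // same quality, lower bitrate
console.log(targetBitrateKbps(1000, 0.25, 0.5)); // a bit of both
```

With these made-up inputs the three options come out at 1000, 750 and 875 kbps.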

Starting to use another video codec though isn’t only about bitrate and quality. It is about understanding its tooling and availability as well:

- Where is the video codec supported? In which browsers? Operating systems? Devices?

- Is there hardware acceleration available for it? How common is it out there? How buggy might it be?

- Are there special encoding tools that we can/should adopt and use? Think temporal scalability, SVC, resilience, specific coding technique for specific content types, etc.

- In which specific scenarios and use cases do we plan on using the codec?

- Do we have the processing power needed on the devices to use this codec?

- Will we need transcoding to other video codec formats for our use case to work? Where will that operation take place?
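A few of the questions above can be folded into a simple fallback chain. This is a minimal sketch under my own assumptions: the candidate fields, the pixels-per-second budget and all the numbers are hypothetical placeholders for whatever you measure on your own devices.

```typescript
interface CodecCandidate {
  name: string;               // e.g. "AV1", "VP9", "VP8"
  supportedLocally: boolean;  // can this device encode and decode it?
  supportedByPeers: boolean;  // can every other participant (or the media server) handle it?
  maxPixelsPerSecond: number; // hypothetical per-device budget, measured or configured by you
}

// Candidates are listed newest generation first; the first one that fits wins.
// Returns undefined when nothing fits (e.g. lower the resolution or frame rate and retry).
function pickCodec(
  candidates: CodecCandidate[],
  width: number,
  height: number,
  fps: number
): string | undefined {
  const load = width * height * fps;
  const match = candidates.find(
    (c) => c.supportedLocally && c.supportedByPeers && load <= c.maxPixelsPerSecond
  );
  return match?.name;
}

// Hypothetical budgets: AV1 up to 360p30, VP9 up to 720p30, VP8 up to 1080p30.
const candidates: CodecCandidate[] = [
  { name: "AV1", supportedLocally: true, supportedByPeers: true, maxPixelsPerSecond: 640 * 360 * 30 },
  { name: "VP9", supportedLocally: true, supportedByPeers: true, maxPixelsPerSecond: 1280 * 720 * 30 },
  { name: "VP8", supportedLocally: true, supportedByPeers: true, maxPixelsPerSecond: 1920 * 1080 * 30 },
];

console.log(pickCodec(candidates, 640, 360, 15));
console.log(pickCodec(candidates, 1280, 720, 30));
```

If no codec fits every participant, that is the point where the transcoding question from the list above comes into play.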

Where to find out more about video codecs and WebRTC

There’s a lot more to be said about video codecs and how they get used in WebRTC.

For more, you can always enroll in my WebRTC courses.