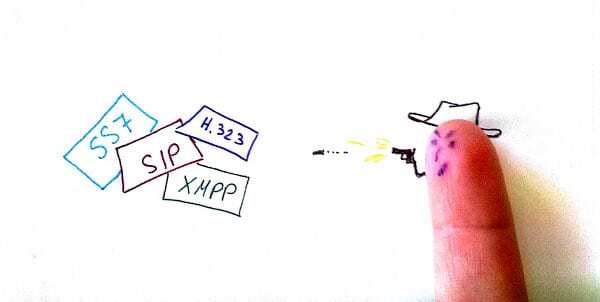

Signaling? Standardizing it? For what purpose exactly?

I used to work in the signaling business. Developing protocol stacks, marketing them – explaining their value to customers. The need to follow a certain spec. To implement a well-known set of features. To follow industry use cases.

Those days are long gone.

You can look at 2013 as the year signaling died.

It was a slow death, so there’s no exact date, but I think we’re there.

The notions of interconnectivity, federation and interoperability used to be important. They were laws of nature. Things you just had to have in a VoIP product. And now? They are tools to be used when needed, and they are needed either by architects, to tap a specific ecosystem of products and developers, or by business people, deciding how to wield them on the battlefield.

The decision not to add signaling to WebRTC might have been an innocent one – I can envision engineers sitting around a table in a Google facility some two years ago, having an interesting conversation:

“Guys, let’s add SIP to what we’re doing with WebRTC”

“But we don’t have anything we developed. We will need to use some of that open source stuff”

“And besides – why not pack XMPP with it? Our own GTalk uses XMPP”

“Go for it. Let’s do XMPP. We’ve got that libjingle lying around here somewhere”

“Never did like it, and there are other XMPP libraries floating around – you remember the one we used for that project back in the day? It is way better than libjingle”

“Hmm… thinking about it, it doesn’t seem like we’re ready for signaling. And besides, what we’re trying to do is open source a media engine for the web – we already have JavaScript XMPP – no need to package it now – it will just slow us down”

And here we are, some time later, debating why they did not include signaling. It can be as simple as the reality of meeting a deadline, a decision not to decide, or an unwillingness to fight another religious battle over which protocol to use – or it could have been a guided decision.

It was one of many similar decisions that have been taking place lately.

A few other notable decisions that are killing signaling?

- Apple, selecting SIP for FaceTime; and then including their own proprietary push notifications, not standardizing them, and locking the service down

- The rise of WhatsApp, Line, iMessage and a slew of other IM apps – all with no notion of what exact signaling protocol they use (the fact that I don’t know or care…)

- Tango? Viber? Do they use a proprietary solution or a standardized one? Would that make a real business difference to them?

- Skype… which now has connectivity to Lync. Skype – proprietary. Lync – “SIP”ish in nature

- And last, but not least, Google dropping XMPP support from their Hangouts service

Notice a pattern?

Signaling is now a commodity. Something you use as needed. It also means that islands are now the norm for communication services, and openness on the protocol level is the exception.

You’d be surprised at how close you are to the actual discussion that happened.

Two more arguments: “XX: I have been involved in H.323, SIP, XMPP… can we please make it stop? I don’t want to spend my career watching all this fighting about protocols.”

I think the other argument was… “this is for the web, and we have this thing called HTTP that is fairly well adopted.” 🙂

A fly on the wall. I’d give a lot to be one in such closed meetings.

What is misleading here is that you talk entirely, from start to end, about closed silos or about services preparing to become silos. There was never a need for a standard for such services; see Skype – they didn’t need WebRTC, and they didn’t need any standard to become large. WebRTC has less relevance to closed silos using proprietary protocols, which existed long before WebRTC.

Either you have never dealt with an enterprise that needs to keep its communications private (but with the option to interconnect with the world), or the post was written just to create buzz. Has anyone seen a relevant percentage of enterprises relying on Skype for their main telephony system? Not even home users do.

As a basic rule that anyone who has dealt a bit with networking should know, there is no need for a standard protocol in systems that don’t want to interconnect. You don’t need IP between a fridge and a toaster if you don’t want to connect them to other IP nodes (sonar with Morse code can be enough between them).

Whatever it turns out to be, a standard signaling protocol is going to stick around forever. Silos come and go: ICQ, MySpace, and more will get in line sooner or later. Closed services are a matter of ephemeral trends, and after a while different trends take over.

Maybe this post is about predicting the death of signaling in WebRTC, where it has not even been born yet.

Signaling as an all-encompassing, catch-all solution that sweeps the market and makes everything interoperable is dead. Actually – since the PSTN – it never really had any life.

Signaling exists in every communication solution – but for most, it no longer needs to be standardized to make a difference. Enterprises need to connect to the PSTN, but even they rely internally on VoIP solutions that are either proprietary or only marginally adhere to standards – this is one of the reasons why you will always see the phones and the PBX provided by a single vendor in an enterprise.

IIRC, Tango is a SIP application.

tl;dr We need standardized signalling

You can’t outright say that WebRTC doesn’t use signalling. The scope of WebRTC is to create browser APIs to set up real-time data channels.

An HTML5 page can call the WebRTC APIs from JavaScript, but what values does the JavaScript use? What IP address does my browser send the media packets to? (By the way, that’s handled by the related RTCWeb standard.)

In the context of the web, this means that WebRTC connections will be set up using HTTP (or WebSockets) as the transport protocol, with some kind of signalling protocol that is entirely unspecified.

For closed silos, and for easy setup, a webserver might just push the values as JSON to the browser. But then we have a problem if two webservers want their users to talk to each other. Worse still if two domains with different implementations want to share users.
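To make the “push the values as JSON” option concrete, here is a minimal sketch of a closed-silo relay, assuming a single server that forwards JSON messages between users it already knows. The message shape (`type`, `from`, `to`, `sdp`/`candidate`) and the `InMemoryRelay` class are hypothetical, not part of any WebRTC specification; a real service would carry these over WebSocket or XHR. It also shows the limitation described above: the relay can only deliver to users registered on the same server.

```typescript
// Hypothetical JSON signaling messages for a single-domain ("closed silo")
// service. WebRTC does not define these; each service invents its own shape.
type SignalMessage =
  | { type: "offer"; from: string; to: string; sdp: string }
  | { type: "answer"; from: string; to: string; sdp: string }
  | { type: "candidate"; from: string; to: string; candidate: string };

type Deliver = (json: string) => void;

// A toy relay: the server can only reach users registered with it.
class InMemoryRelay {
  private users: { [id: string]: Deliver } = {};

  register(userId: string, deliver: Deliver): void {
    this.users[userId] = deliver;
  }

  // Forwards a JSON-encoded message. Returns false when the recipient is
  // unknown here, e.g. a user of another domain with its own implementation.
  send(json: string): boolean {
    const msg = JSON.parse(json) as SignalMessage;
    if (!Object.prototype.hasOwnProperty.call(this.users, msg.to)) {
      return false;
    }
    this.users[msg.to](json);
    return true;
  }
}

// Usage: Alice's browser sends Bob an offer through the shared server.
// "v=0\r\n" stands in for a real SDP offer.
const relay = new InMemoryRelay();
const bobInbox: string[] = [];
relay.register("bob", (json) => {
  bobInbox.push(json);
});
const offer: SignalMessage = { type: "offer", from: "alice", to: "bob", sdp: "v=0\r\n" };
relay.send(JSON.stringify(offer)); // true: bob is registered here
relay.send(JSON.stringify({ type: "offer", from: "alice", to: "carol@other.example", sdp: "v=0\r\n" })); // false
```

Nothing in this design lets the relay hand Carol’s message to another domain’s server; that would need an agreed protocol between the two.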

We need standardized signalling!

Here’s what I think.

The problem with almost all of the traditional signalling protocols is that they are coupled to the transport layer du jour.

ISDN likes circuit-switched networks; its addressing and bandwidth negotiation work well in that environment but suffer in the unpredictability of the packet-switched Internet. H.323, SCCP and other IP-PBX-oriented protocols solved the Internet problems, but are useless in the context of the Web because they don’t adapt to change quickly enough.

XMPP and SIP are well standardised and very extensible. XMPP has a lot of machinery for governing its transport that works in a different mode from HTTP (persistent connections, BOSH, etc.).

Probably the most likely method of WebRTC signalling will be SIP using the WebSocket transport.

There are already Internet-Drafts that define the modifications to SIP needed for clients to work, and at least two public implementations in JavaScript.
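A minimal sketch of what “SIP using the WebSocket transport” puts on the wire: a function that formats an INVITE with the `WSS` Via transport and `transport=ws` Contact parameter that the SIP-over-WebSocket work defines (later published as RFC 7118). The `buildInvite` function, the `.invalid` host, and the tag, branch and Call-ID values are illustrative assumptions; a JavaScript SIP library would generate these for you. Only the SIP text is built here; in a browser it would be sent over a `WebSocket` opened with the `sip` subprotocol.

```typescript
interface InviteParams {
  from: string;    // e.g. "sip:alice@example.com"
  to: string;      // e.g. "sip:bob@example.com"
  callId: string;
  fromTag: string;
  branch: string;  // must start with the RFC 3261 magic cookie "z9hG4bK"
  viaHost: string; // browser clients often use a random ".invalid" host
  sdp: string;     // the offer produced by the browser
}

// Byte length of a string encoded as UTF-8 (Content-Length counts bytes).
function utf8Length(s: string): number {
  let bytes = 0;
  for (let i = 0; i < s.length; i++) {
    const c = s.charCodeAt(i);
    if (c < 0x80) bytes += 1;
    else if (c < 0x800) bytes += 2;
    else if (c >= 0xd800 && c <= 0xdbff) {
      bytes += 4; // high surrogate: the pair is one 4-byte code point
      i++;
    } else bytes += 3;
  }
  return bytes;
}

function buildInvite(p: InviteParams): string {
  const userPart = p.from.replace(/^sip:/, "").split("@")[0];
  const headers = [
    `INVITE ${p.to} SIP/2.0`,
    `Via: SIP/2.0/WSS ${p.viaHost};branch=${p.branch}`,
    `Max-Forwards: 70`,
    `From: <${p.from}>;tag=${p.fromTag}`,
    `To: <${p.to}>`,
    `Call-ID: ${p.callId}`,
    `CSeq: 1 INVITE`,
    `Contact: <sip:${userPart}@${p.viaHost};transport=ws>`,
    `Content-Type: application/sdp`,
    `Content-Length: ${utf8Length(p.sdp)}`,
  ];
  // Header lines end in CRLF; an empty line separates them from the body.
  return headers.join("\r\n") + "\r\n\r\n" + p.sdp;
}

const invite = buildInvite({
  from: "sip:alice@example.com",
  to: "sip:bob@example.com",
  callId: "a84b4c76e66710",
  fromTag: "1928301774",
  branch: "z9hG4bK776asdhds",
  viaHost: "df7jal23ls0d.invalid",
  sdp: "v=0\r\n", // placeholder for the browser's real SDP offer
});
```

The appeal of this route is that a SIP proxy behind the WebSocket server can route `invite` like any other SIP request.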

RTCWeb defines how to send and receive media, WebRTC defines how browsers interact with the media, but we still need something to send to and receive from. That is the role of signalling, and I would hate to be throwing around XHR and JSON blobs.

Ben,

Signaling will stay with us forever. The question is – how fundamental is it going to be moving forward? I think it is getting commoditized to the point of irrelevancy.

Signaling is very important to me. Part of my job involves watching Wireshark traces of SIP and watching the behaviors of products from different vendors interacting with each other.

XMPP and SIP (and SMTP) are everywhere.

The PSTN may be the most common “standard” that audio phones use to interoperate, but it is usual to see operators use SIP for routing calls inside their networks.

For homes, some operators are even offering so-called “HD Audio” these days. I bet that if you check, they’re really using SIP to upgrade from G.711 without you knowing about it.

If you work in an office, it is probable that there’s a deskphone nearby. Does it have an RJ11 or an RJ45 cable in it?

If it is an RJ45, then admit it, you’re using SIP too. If RJ11, then it is probably interworked to SIP at the first server, then routed over IP.

It’s standardized signalling that dies. And no, this will not inevitably lead to silos: just use 3rd party authentication to tell a webrtc service who you are and under what URL you may be reached for calling back. A URL is as universal as an E.164.

True.

A URL might be as universal as an E.164, but what payload do I send to it?

With an E.164, I can start doing ENUM and SRV lookups and let my network determine what to do with it. Part of the lookup process gives me back a protocol and connection information.
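The first step of that lookup is purely mechanical, which is what makes a bare E.164 number actionable. A sketch of the RFC 6116 mapping from a number to the DNS name queried for NAPTR records; the function name is illustrative, and the DNS queries themselves (NAPTR at this name, then SRV on the domain of the returned URI) are left out.

```typescript
// Maps an E.164 number to the domain queried for NAPTR records in ENUM
// (RFC 6116): keep the digits, reverse them, dot-separate, append "e164.arpa".
function e164ToEnumDomain(e164: string): string {
  const digits = e164.replace(/[^0-9]/g, "");
  // E.164 numbers are written with a leading "+" and carry at most 15 digits.
  if (e164.trim().charAt(0) !== "+" || digits.length === 0 || digits.length > 15) {
    throw new Error(`not an E.164 number: ${e164}`);
  }
  return digits.split("").reverse().join(".") + ".e164.arpa";
}

// e164ToEnumDomain("+44 20 7946 0000") returns "0.0.0.0.6.4.9.7.0.2.4.4.e164.arpa"
```

A NAPTR record at that name with the service `E2U+sip` would yield a `sip:` URI, and that URI’s domain is where the SRV lookup starts. Every step is defined in advance, which is the contrast being drawn with a bare URL.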

With standard signaling, I know what to do next to complete the call.

With a URL, what do you do with it?

Do you send GET? POST? What payload do you send? query args or multipart MIME?

Do I encode it with XML? JSON? What parameters do I need to use?

Is it authenticated using OAuth2 or OpenID? Do I have an OAuth token that the server accepts, or shall I try HTTP Basic instead?
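To make those questions concrete, the sketch below builds the same SDP offer request for two made-up vendors whose HTTP signaling contracts differ in method, URL, encoding and authentication. Both vendors, their endpoints and their field names are hypothetical; the point is only that every one of these choices has to be known by the caller in advance, which is what a standard would pin down.

```typescript
// What a caller must know before it can send anything over HTTP.
interface HttpRequestSpec {
  method: "GET" | "POST";
  url: string;
  headers: { [name: string]: string };
  body: string | null;
}

// Hypothetical vendor A: POST a JSON body, OAuth 2.0 bearer token.
function vendorAOffer(sdp: string, accessToken: string): HttpRequestSpec {
  return {
    method: "POST",
    url: "https://a.example/api/v1/calls",
    headers: {
      "Authorization": `Bearer ${accessToken}`,
      "Content-Type": "application/json",
    },
    body: JSON.stringify({ offer: { sdp: sdp } }),
  };
}

// Hypothetical vendor B: GET with query arguments, HTTP Basic auth.
// basicCredentials is the already base64-encoded "user:password" string.
function vendorBOffer(sdp: string, basicCredentials: string): HttpRequestSpec {
  return {
    method: "GET",
    url: "https://b.example/call?action=offer&sdp=" + encodeURIComponent(sdp),
    headers: { "Authorization": `Basic ${basicCredentials}` },
    body: null,
  };
}
```

Neither request means anything to the other vendor’s server, so a client written for one cannot call the other without vendor-specific code.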

Without standardized signaling only the vendor who wrote the implementation can use that system. It’s a real problem that exists before we even start discussing the effects of WebRTC.

—

My hope, and the way it appears to be heading, is that vendors will implement web sites and clients that use WebRTC to handle all of the hard stuff for the client, plus a JS library (my current favourite is sipML5.org) that talks SIP to a proxy for further routing.

This way, we’re not throwing away what we have learned over the past decade. The last thing I want to see is web designers reinventing wheels and re-solving solved problems.

When you access a WebRTC URL, the browser downloads JavaScript from it that tells it what to do. It’s really simple.

An example from the past: usenet vs the web

Usenet used standardised protocols to exchange forum discussions between servers. It had ‘interconnection agreements’, it had a universally accepted feature set. Then came the web. Many web sites have forum discussions. Some require authentication of senders, some don’t (like this one). Some use 3rd party authentication (e.g. stackoverflow.com). Some require a ‘silo’ account, like Facebook. Sure, I can’t use my highly customized NNTP Usenet reader to access all those forums. But the forum developers had much more freedom to innovate than in the Usenet world, where everything had to be standardized. And the average user preferred the web forums, too.

A browser downloading its client for a particular service is how I expect it to happen.

The Google Hangouts client will be part of, and know, the Google procedures and conventions. The Cisco WebEx client will know and use the Cisco procedures and conventions.

That is “simple”. That model works right now, even without WebRTC, albeit with lots of restrictions on which platform/browser/network combinations it works with.

With standard signaling, I could extend my phonebook to include federated services (the email convention of @domain.com works well here).

At the moment, there is no “WebRTC” URI. I can’t click on a webrtc: link (cf. mailto:) and trust that my browser knows what to do with it. That would require conventions, protocols… or dare I even say… Standard Signaling.
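As a thought experiment, here is what one such convention could look like. No `webrtc:` URI scheme exists, so the scheme, the `parseWebrtcUri` and `srvQueryNames` functions, and the choice to reuse SIP’s DNS SRV service names (as in RFC 3263) for discovery are all assumptions. The sketch only shows that once a convention is agreed, a `user@domain` address becomes something a browser could act on.

```typescript
interface WebrtcAddress {
  user: string;
  domain: string;
}

// Parses a hypothetical "webrtc:user@domain" URI (no such scheme is registered).
function parseWebrtcUri(uri: string): WebrtcAddress {
  const m = /^webrtc:([^@\s]+)@([A-Za-z0-9.-]+)$/i.exec(uri);
  if (m === null) {
    throw new Error(`unrecognized URI: ${uri}`);
  }
  return { user: m[1], domain: m[2].toLowerCase() };
}

// Assumption: the convention borrows the SRV names a SIP client might try
// for a domain; a real convention could define its own service label instead.
function srvQueryNames(addr: WebrtcAddress): string[] {
  return [
    `_sips._tcp.${addr.domain}`,
    `_sip._tcp.${addr.domain}`,
    `_sip._udp.${addr.domain}`,
  ];
}
```

The parsing is the easy half; agreeing on what the discovered server speaks is the part that needs a standard.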

You actually can…

Twelephone, Speek, UberConference and a lot of other WebRTC services provide a URL, either personal to the user or tied to a specific interaction, that you can use or share with others.

Same as email, only in a URL form.

Speek.com looks like a silo to me.

Twelephone uses Phono, a JS library for the Phono service, which provides a SIP backend.

UberConference uses a gateway to connect calls to other protocols. They offer a rich media experience to their users, then interwork with other protocols in their cloud.

The use of a protocol gateway is something that I expect will be fairly common in the future. Products like Digium’s Asterisk and Acme Packet can already do SIP/WebRTC translation. I have even seen some gateways do the SAVPF/AVP conversion for the media too.
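On the signaling side, part of what such a gateway does is rewrite the SDP so that each leg sees a profile it understands. A deliberately simplified sketch, assuming the legacy leg is plain RTP: it swaps `RTP/SAVPF` (or `UDP/TLS/RTP/SAVPF`) for `RTP/AVP` on `m=` lines and drops the ICE and DTLS attributes the legacy side would not use. The function and the attribute list are illustrative; this is text rewriting only, and the gateway must still terminate ICE and DTLS-SRTP and relay decrypted media. Real products handle many more cases (for example `a=rtcp-fb` and bundled media).

```typescript
// Attribute prefixes that only make sense on the WebRTC (ICE + DTLS-SRTP) leg.
const WEBRTC_ONLY_ATTRS = [
  "a=ice-ufrag:",
  "a=ice-pwd:",
  "a=candidate:",
  "a=fingerprint:",
  "a=setup:",
  "a=rtcp-mux",
];

// Simplified SAVPF -> AVP rewrite of an SDP body (no media handling).
function savpfToAvp(sdp: string): string {
  const out: string[] = [];
  for (const line of sdp.split(/\r?\n/)) {
    if (line === "") continue;
    if (WEBRTC_ONLY_ATTRS.some((prefix) => line.indexOf(prefix) === 0)) continue;
    if (line.indexOf("m=") === 0) {
      // Only the transport profile token changes; port and payload types stay.
      out.push(line.replace(/ (?:UDP\/TLS\/)?RTP\/SAVPF /, " RTP/AVP "));
    } else {
      out.push(line);
    }
  }
  return out.join("\r\n") + "\r\n";
}
```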

Tsahi’s right – “signaling” in the “everyone must conform to a single, universal signaling protocol” sense is on its way out.

Historically, communications has been a balancing act, with interoperability on one side and innovation on the other. The PSTN is the ultimate standards-based and interoperable network but that comes at the price of utter inflexibility. On the other hand, you have walled-garden services like Skype and Viber. They support all kinds of innovative features, but even Skype is far from universal. The best thing about WebRTC is that it manages to be interoperable while at the same time enabling innovation.

Why doesn’t signaling matter in the WebRTC world? Because WebRTC endpoints are dynamic – they are created out of JavaScript, HTML and CSS when you visit a website. Your browser is essentially building a phone based on instructions from the web developer, and these instructions include some means of signaling. SIP over WebSocket? Jingle over BOSH? Some kind of home-grown JSON messaging over Comet? It doesn’t matter. The mechanism of interoperability isn’t the signaling protocol, it’s the Web. All you need is a standards-compliant browser and you can communicate with anyone.
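The “it doesn’t matter” argument can be sketched as an interface: the page’s call logic talks to a `SignalingChannel`, and whichever site served the page decides what actually goes on the wire. The interface, both channel classes and `startCall` are hypothetical and greatly simplified: the JSON one uses an ad-hoc shape, and the SIP one emits only a skeleton request. Transports such as WebSocket, BOSH or Comet would sit behind the `send` callback.

```typescript
// What the page's call logic needs from signaling, regardless of protocol.
interface SignalingChannel {
  sendOffer(to: string, sdp: string): void;
}

// Site A (hypothetical): home-grown JSON messages.
class JsonChannel implements SignalingChannel {
  private readonly send: (wire: string) => void;
  constructor(send: (wire: string) => void) {
    this.send = send;
  }
  sendOffer(to: string, sdp: string): void {
    this.send(JSON.stringify({ kind: "offer", to: to, sdp: sdp }));
  }
}

// Site B (hypothetical): a skeleton SIP INVITE. Mandatory headers such as
// Via, From, Call-ID, CSeq and Content-Length are omitted for brevity.
class SipChannel implements SignalingChannel {
  private readonly send: (wire: string) => void;
  constructor(send: (wire: string) => void) {
    this.send = send;
  }
  sendOffer(to: string, sdp: string): void {
    this.send(`INVITE sip:${to} SIP/2.0\r\nContent-Type: application/sdp\r\n\r\n${sdp}`);
  }
}

// Application code: identical no matter which channel the site plugged in.
function startCall(channel: SignalingChannel, to: string, sdp: string): void {
  channel.sendOffer(to, sdp);
}

// Usage: the same call logic, two different wire formats.
const wire: string[] = [];
const record = (w: string): void => {
  wire.push(w);
};
startCall(new JsonChannel(record), "bob@example.com", "v=0\r\n");
startCall(new SipChannel(record), "bob@example.com", "v=0\r\n");
```

Because the browser downloads the channel implementation along with the page, the site can switch protocols without the user installing anything.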

In a WebRTC world, every site is a “fenced garden” — it has its own internal (and potentially proprietary) mode of operation, but anyone with a browser can come in and play. Each site may have a completely different user experience and underlying technology but you, as the end user, won’t care.