Three important news items published in the past couple of weeks are shaping the Video API market, and all of them have an AI aspect.

Our Programmable Communications industry (CPaaS) is shifting, and the vendors focused on video are the ones who matter at the moment. In the past, such innovations came from Twilio, which defined and redefined the CPaaS market. In recent years, not so much. These days? You need to look at the video players to understand the trends.

Here are 3 big news items that got my attention this month and why they matter:

- Daily announced Pipecat Cloud

- LiveKit series B funding and… LiveKit Cloud Agents

- Cloudflare acquires Dyte and partners with Hugging Face

Let’s see where all this leads us.


Why video is leading the way in AI for CPaaS

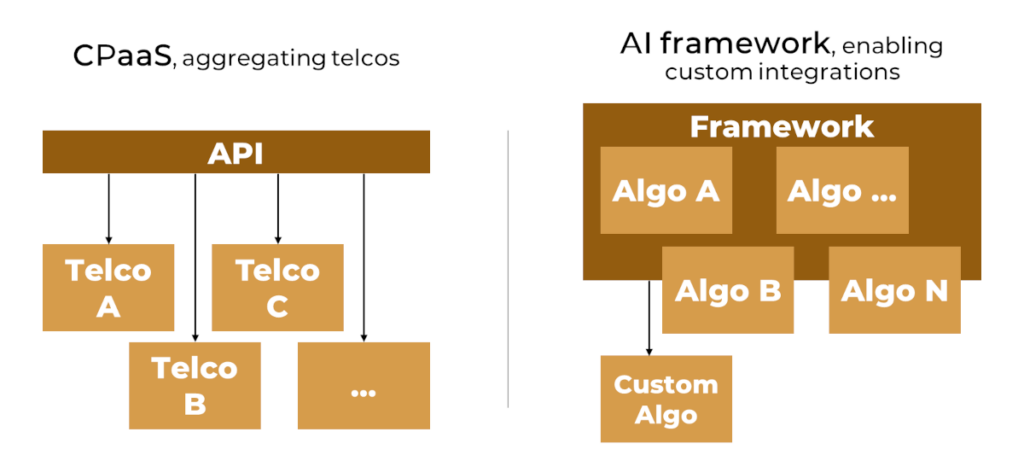

CPaaS started off around SMS and voice. The concept around it was to aggregate telecom providers and place a single, sane API on top of them.

The barrier (or moat) for vendors here was negotiating contracts and integrating with the interfaces of 100+ telecom providers around the globe. Not fun at all.

That meant a customer could purchase a phone number, send a message and answer calls without having to think about whether the underlying provider was Verizon, AT&T or Globe Telecom in the Philippines. The customer didn’t really care who the underlying provider was, as long as the service was good. And that service was uniform in nature: you want calls to get connected and messages to be delivered at a high rate. Nothing less and nothing more.

Fast forward to today and nothing has changed in voice-land.

But AI is different.

When you look at how the voice-focused vendors are adding AI, some do so by deciding which algorithms/vendors to use and placing an API layer on top of them, taking their sweet time about it. The notion is that customers don’t care much about this anyway, and/or that the algorithms/vendors are few in number. So these vendors can do it all themselves.

The video-focused vendors at the forefront with their vision here are Daily, LiveKit and Agora. Each of them created an AI framework and made it open source. Gustavo already wrote about these.

The concept behind all these frameworks is simple:

- Make it easy to connect the Programmable Communications media stream to the framework

- Have the framework flexible enough to deal with a variety of use cases, some of which are still unknown to us

- Integrate with as many algorithms/vendors as possible

- Make it open source, so that others can integrate more algorithms/vendors (because the world is infinite here and not finite)

And it worked for them, at least judging by the engagement numbers we see on GitHub for these projects.

From an AI interface to an AI framework

The naive solution I was promoting and aiming for was simple. If you are dealing with CPaaS, what you need to offer is a way to extract real-time audio and video streams from your platform, or inject them into it, in a backend-to-backend manner.

Such an approach just means that you have a WebSocket, RTP or some other transport mechanism from your media servers that can then be connected to external AI services. Think of STT (Speech-To-Text) for a call as an example. Users connect to your SFU. Developers can take the audio from that meeting, send it to whatever STT service they want and continue from there.

That enabler is an AI interface for CPaaS. Some services have had one for years on their voice channels, while those doing video started introducing them more recently. It gives developers the raw capabilities, but little else. In a way, it leaves a lot to be desired, especially now that LLMs are so popular and mostly text-based.
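To make the backend-to-backend interface above a bit more concrete, here is a minimal sketch of the first step most such integrations need. It slices raw call audio into fixed-duration frames before shipping them over a WebSocket or RTP to an external STT service. It assumes 16 kHz, 16-bit, mono PCM and 20 ms frames. These are common choices for speech AI, not something any specific CPaaS vendor mandates. `frame_size_bytes` and `frame_pcm` are hypothetical helpers, and the actual transport is left out.

```python
from typing import Iterator


def frame_size_bytes(sample_rate_hz: int, frame_ms: int,
                     bytes_per_sample: int = 2, channels: int = 1) -> int:
    """Number of bytes in one frame of interleaved PCM audio."""
    samples_per_frame = sample_rate_hz * frame_ms // 1000
    return samples_per_frame * bytes_per_sample * channels


def frame_pcm(pcm: bytes, sample_rate_hz: int = 16_000,
              frame_ms: int = 20) -> Iterator[bytes]:
    """Yield fixed-size frames of mono 16-bit PCM (assumed format).

    A trailing partial frame is zero-padded (silence) so the receiving
    AI service always gets uniformly sized chunks.
    """
    size = frame_size_bytes(sample_rate_hz, frame_ms)
    for start in range(0, len(pcm), size):
        chunk = pcm[start:start + size]
        if len(chunk) < size:
            chunk += b"\x00" * (size - len(chunk))
        yield chunk


if __name__ == "__main__":
    one_second = b"\x01\x00" * 16_000  # 1 s of 16 kHz, 16-bit mono samples
    frames = list(frame_pcm(one_second))
    print(len(frames), len(frames[0]))  # 50 640
```

On a real media server, each yielded frame would be written to the WebSocket or packetized into RTP. The 20 ms choice mirrors common speech codec packetization, such as Opus' usual 20 ms frames.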

These days, we usually need some kind of processing pipeline: a way to ship media from the media server through one or more external components and then back into the media server. That requires an AI framework.
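The pipeline idea can be sketched in a few lines of asyncio. This is not Pipecat’s or LiveKit Agents’ API. It is a hypothetical, minimal illustration of the shape such frameworks share: independent stages connected by queues. Media comes in from the media server, passes through STT, an LLM and TTS, and goes back out. The `fake_stt`, `fake_llm` and `fake_tts` stages are placeholders standing in for calls to external AI vendors.

```python
import asyncio
from typing import Any, Awaitable, Callable, List

# A stage turns one item into another; real ones would call external AI vendors.
Stage = Callable[[Any], Awaitable[Any]]

_END = object()  # sentinel marking the end of the media stream


async def fake_stt(audio: bytes) -> str:
    return audio.decode("utf-8")  # placeholder: pretend the audio is already text


async def fake_llm(text: str) -> str:
    return f"You said: {text}"  # placeholder for an LLM call


async def fake_tts(text: str) -> bytes:
    return text.encode("utf-8")  # placeholder: pretend these bytes are synthesized audio


async def run_stage(stage: Stage, inbox: asyncio.Queue, outbox: asyncio.Queue) -> None:
    """Pull items from inbox, process them and push results to outbox."""
    while True:
        item = await inbox.get()
        if item is _END:
            await outbox.put(_END)  # propagate end-of-stream downstream
            return
        await outbox.put(await stage(item))


async def run_pipeline(stages: List[Stage], inputs: List[bytes]) -> List[Any]:
    # One queue between each pair of stages, plus an input and an output queue.
    queues = [asyncio.Queue() for _ in range(len(stages) + 1)]
    workers = [asyncio.create_task(run_stage(s, queues[i], queues[i + 1]))
               for i, s in enumerate(stages)]
    for item in inputs:  # media coming "from the media server"
        await queues[0].put(item)
    await queues[0].put(_END)
    results = []
    while (item := await queues[-1].get()) is not _END:
        results.append(item)  # media going "back into the media server"
    await asyncio.gather(*workers)
    return results


if __name__ == "__main__":
    out = asyncio.run(run_pipeline([fake_stt, fake_llm, fake_tts], [b"hello"]))
    print(out)  # [b'You said: hello']
```

The queues let each stage run concurrently with the others. Swapping an AI vendor then means swapping a single stage function, which is the "integrate with as many algorithms/vendors as possible" point made earlier.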

Something akin to… well… Daily’s Pipecat and LiveKit Agents.

I believe such frameworks, whether connected to a Video API or an integral part of it, will be critical moving forward.

Daily and Pipecat Cloud

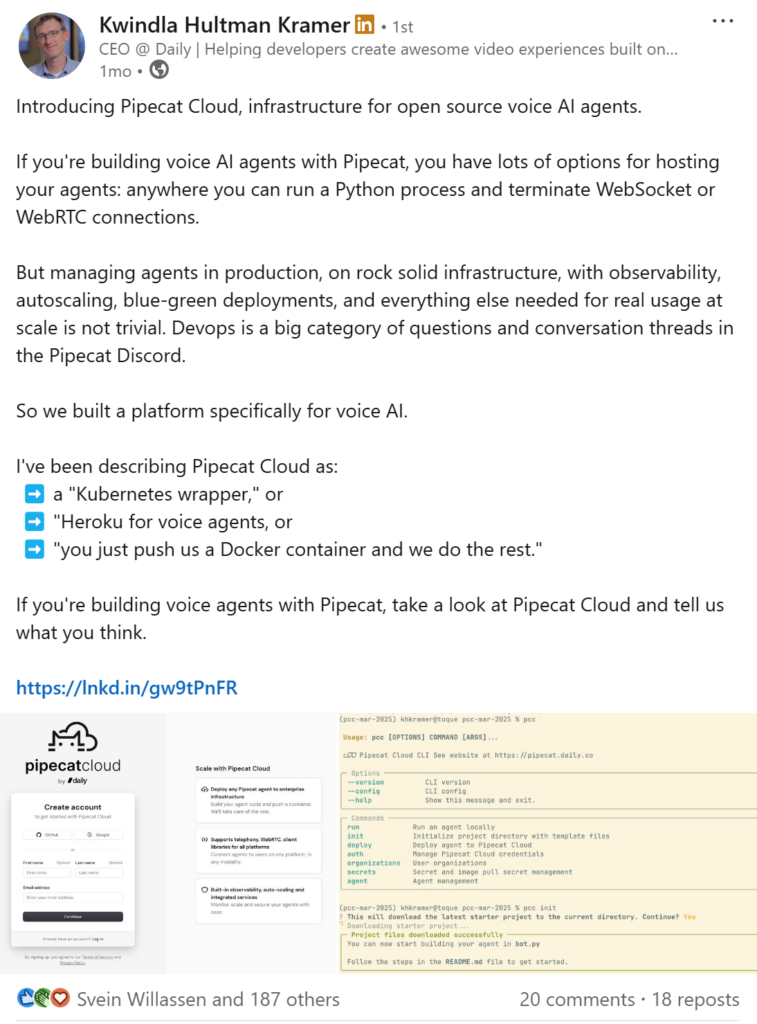

Daily had a hosted AI solution called Daily Bots. It decided to sunset it and introduce Pipecat Cloud instead. The announcement was made by its CEO, Kwindla Hultman Kramer, on LinkedIn.

(you should follow Kwindla on LinkedIn – he shares a ton of insights and resources there regularly)

The main change?

Up until now, Daily developers could use one of two approaches:

- Adopt Pipecat as their AI framework, build their logic with it, and then deploy it on their own wherever they wanted – just like any other open source component

- Use Daily Bots, which was a hosted service by Daily, built on top of Pipecat. It was great but limited in nature (it didn’t allow running custom Python code as part of the bot)

Daily decided to sunset Daily Bots and migrate its customers to a new platform called Pipecat Cloud. This is a managed Pipecat service, where developers build their own Pipecat pipelines in local Docker containers and then upload them to Pipecat Cloud, where they run in production. Daily takes care of scaling, monitoring and everything else.

It was the natural next step:

- It widens Daily’s moat against competitors who would simply adopt Pipecat: they would now need to offer a cloud-based service as well to make it compelling to begin with

- It enables, and encourages, an easy migration path between the open source framework and the cloud offering

In a way, Daily took a page from LiveKit’s playbook: start by offering an open source framework (Pipecat), get developers hooked on it, and then introduce a paid cloud service for it. Which is a natural segue to… LiveKit.

LiveKit and Cloud Agents

LiveKit had a big announcement this month, celebrating its new $45M series B funding round. The post is interesting in the way it is written, going from the least important item to the most important (at least for me):

- LiveKit Agents 1.0 is released, in a way stating that this isn’t a beta or an MVP anymore without really saying it

- Workflows are introduced, for better support of conversation flows with known steps in them (mainly for contact centers)

- Multilingual semantic turn detection, which is neat

- Telephony support, which was there before but is mentioned here, I believe, for emphasis

- Wrapped under Agents 1.0 is also Cloud Agents, which I believe deserves to be mentioned separately

  - LiveKit Cloud Agents is the same as Pipecat Cloud in the sense that you build your own LiveKit Agents logic and code, and then host it on LiveKit’s cloud

  - Unlike Pipecat Cloud, Cloud Agents is in closed beta, with a Google Form gating access

  - This might mean that LiveKit wasn’t ready for this announcement, but had to push it through because of Daily’s announcement AND because of its series B funding

- Series B funding

  - $45M is serious money in 2025, especially in the Video API domain where funding is scarce. It is low compared to what pure AI players raise, but in a way it shows where the focus of our industry is now: AI (not surprising)

  - Total funding LiveKit has raised so far is $83M, which is considerable and shows the trust of its investors

  - LiveKit plans to use this new funding towards “growing our team and furthering our progress towards offering an all-in-one platform for building AI agents that can see, hear, and speak like we do.”

This was great news for LiveKit and it gives them what they need to push through and grow their offering in ways that are hard to achieve in the current economic climate.

Cloudflare closing its gaps

I must admit: for me, Cloudflare in WebRTC has been a bright shining light and a huge disappointment at the same time.

On one hand:

- Cloudflare is likely the 4th IaaS vendor after AWS, Azure and GCP

- Their spread of 200+ data centers and use of Anycast brought something fresh and new to the WebRTC market

- A no-frills hosted SFU was also something new and interesting

On the other hand though:

- There was no client SDK to speak of

- Cloudflare assumed developers would just connect to its SFU and things would magically work, which is far from reality, especially if you want to optimize for media quality

- Since the initial announcements, no further news came out of Cloudflare officially

It seems Cloudflare didn’t lose interest in WebRTC. It was figuring out what the next big step should be, and it is now trying to close the gaps with two different deals, wrapped into a single announcement.

It starts with a new name for the offering. Cloudflare Calls is now Cloudflare Realtime, which includes three products: RealtimeKit (new and in beta), TURN Server (once an almost hidden part of Calls) and Serverless SFU (what was Calls).

- Cloudflare acquired Dyte, a Video API vendor from India, and wrapped it into RealtimeKit

  - Dyte will be moving its own API and SDKs to Cloudflare’s infrastructure (IaaS, and most likely also TURN and SFU). At some future point, they might just close Dyte as a product/company and have it all under RealtimeKit

  - RealtimeKit now fills the biggest missing part for Cloudflare: client SDKs. The announced platforms these SDKs will support are Kotlin, React Native, Swift, JavaScript and Flutter

  - Recording and Voice AI (in partnership with ElevenLabs) will be part of the platform as well

  - As with LiveKit Cloud Agents, access to RealtimeKit is in private beta behind a signup form

  - There is also a promise that all this comes with a robust AI offering, but that feels more like lip service or a roadmap item than anything else at the moment

- Partnership with Hugging Face

  - Hugging Face is a large and important player in the generative AI and machine learning domain

  - Recently, it launched its own FastRTC framework. FastRTC is all about connecting WebRTC and WebSockets to AI models, which is essentially what we need to build media pipelines in Video APIs. In a way, it is somewhat similar to Pipecat and LiveKit Agents

  - To make sure FastRTC users end up on Cloudflare’s WebRTC infrastructure and RealtimeKit, Cloudflare’s initial step was to offer Hugging Face users 10 GB of free TURN bandwidth each month. It sounds like a lot, but it is only $0.5/month based on Cloudflare’s TURN pricing

  - What’s important here is the partnership and the intent. I am sure this is a first step, considering the acquisition of Dyte in parallel to this
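For reference, the $0.5/month value of the Hugging Face TURN allowance is just the monthly allowance multiplied by the per-GB TURN price. The calculation below assumes a rate of $0.05 per GB, which is the rate the $0.5 figure implies. Treat it as an assumption and check Cloudflare’s current pricing.

```latex
10\,\text{GB/month} \times \$0.05/\text{GB} = \$0.50/\text{month}
```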

All in all, a positive announcement for Cloudflare, showing its intent to invest further in WebRTC and Video APIs.

Upcoming update of the Video API report

These market changes, along with a few previous ones, made me decide to update my Video APIs report.

The report needs a better explanation of the market now that Twilio has decided to keep its Programmable Video service. In light of the trends mentioned here, it also needs a beefed-up section on AI frameworks.

I am again reaching out to the vendors, to see what I missed from the work they put into their platforms this past year, and also looking for vendors who weren’t covered by the report so far and should be there. If you know of one, or work in one, just ping me to let me know.

And if you are interested in learning more about this report, or any of my other services, just reach out to me.

You can also check out the TEN Framework (https://github.com/TEN-framework/ten-framework), which is another real-time voice AI agent framework.