Google in 2018 is all about AI. But not only…

In November 2015, Google released TensorFlow, an open source machine learning framework. While we’ve had machine learning before that, at Google and elsewhere, this probably marks the date when machine learning, and by extension AI, got its current spurt of growth.

Some time between that day and the recent Google I/O event, Sundar Pichai, CEO of Google, probably gathered his management team, knocked on the table and told them: “We are now an AI company. I don’t care what it is that you are doing, come back next week and make sure you show me a roadmap of your product that has AI in it.”

I don’t know if that meeting happened in such a form or another, but I’d bet that’s what has been going on at Google for over a year now, culminating at Google I/O 2018.

After the obligatory icebreaker about the burger emoji crisis, Pichai immediately went to the heart of the keynote – AI.

Google announced its AI-first direction at last year’s Google I/O event, and it was time to show what came out of it a year later. Throughout the 106-minute keynote, AI was mentioned time and time again.

That said, there was more to that Google I/O 2018 keynote than just AI.

Google touched on 3 main themes in its keynote:

- AI

- Wellbeing

- Fake news

I’d like to expand on each of these, as well as discuss the Smart Displays, Android P and Google Maps parts of the keynote.

I’ll try in each section to highlight my own understanding and insights.

Before we begin

Many of the features announced are not released yet. Most of them will be available only closer to the end of the year.

Google’s goal was to show its AI power versus its competition more than anything else they wanted to share in this I/O event.

This is telling in a few ways:

- Google weren’t ready with real product announcements for I/O that were interesting enough to fill 100 minutes of content. Or more accurately, they were more interested in showing off the upcoming AI stuff NOW and not wait for next year or release it later

- Google either knows its competitors are aware of all the progress it is making, or doesn’t care if they know in advance. They are comfortable enough in their dominance in AI to announce work-in-progress as they feel the technology gap is wide enough

AI

When it comes to AI, Google is most probably the undisputed king today. Runners up include Amazon, Microsoft, IBM, Apple and Facebook (probably in that order, though I am not sure about that part).

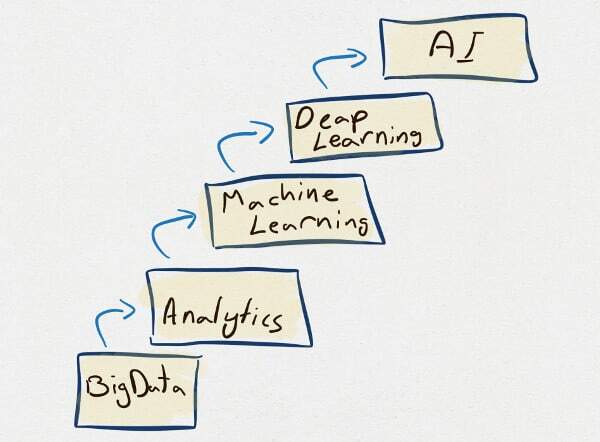

If I try to put into a diagram the shift that is happening in the industry, it is probably this one:

Not many companies can claim AI. I’ll be using ML (Machine Learning) and AI (Artificial Intelligence) interchangeably throughout the rest of this article. I leave it to you to decide which of the two I mean 🙂

AI was featured in 4 different ways during the keynote:

- Feature enhancer

- Google Assistant (=voice/speech)

- Google Lens (=vision)

- HWaaS (=hardware as a service)

Feature Enhancer

In every single thing that Google does today, there’s attention to how AI can improve it. During the keynote, AI-related features in Gmail, Google Photos and Android were announced.

It started off with four warm-up feel-good type use cases that weren’t exactly product announcements, but were setting the stage on how positive this AI theme is:

- Diagnosing diseases by analyzing human retina images in healthcare

- Predicting probability of rehospitalization of a patient in the next 24 hours

- Producing speaker based transcription by “watching” a video’s content

- Predictive Morse code typing for accessibility

From here on, most sections of the keynote had an AI theme to them.

Moving forward, product managers should think long and hard about what AI-related capabilities and requirements they need to add to the features of their products. What are you adding to your product that is making it SMARTER?

Google Assistant (=voice and speech)

Google Assistant took center stage at I/O 2018. This is how Google shines and differentiates itself from its main 3 competitors: Apple, Amazon and Facebook.

In March, Forbes broke some interesting news: at the time, Amazon was hiring more developers for Alexa than Google was hiring altogether. Alexa is Amazon’s successful voice assistant. And while Google didn’t talk at all about Google Home, Alexa’s main competitor, it did emphasize its technology differentiation. This emphasis at I/O was important not only for Google’s customers but also for its potential future workforce. AI developers are super hard to come by these days. Expertise is scarce and competition between companies for talent is fierce. Google needs to make itself attractive to such developers, and showing it is ahead of the competition helps greatly here.

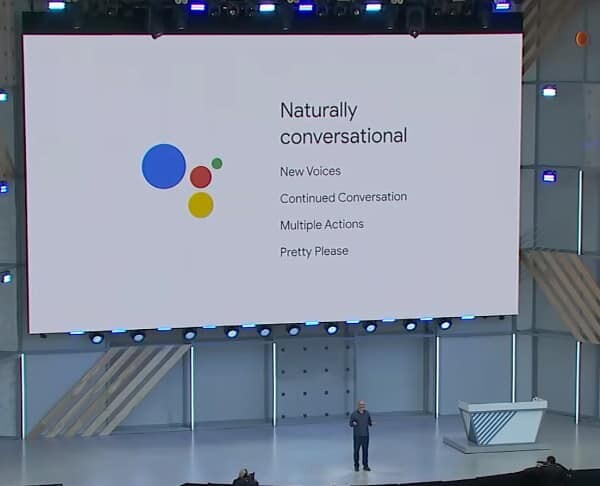

Google Assistant got some major upgrades this time around:

- WaveNet. Google now offers an improved text-to-speech engine that makes its generated speech feel more natural. This means:

  - Getting new “voices” now requires fewer samples of a person speaking

  - Which allowed Google to introduce 6 new voices to its Assistant (at a lower effort and cost)

  - To make a point of it, they started working with John Legend to get his voice into Assistant – his time is more expensive, and his voice “brand” is important to him, so letting Google use it shows his endorsement of Google’s text-to-speech technology

  - This is the first step towards the ability to mimic the user’s own voice. More on that later, when I get to Google Duplex

- Additional languages and countries. Google promised support for 30 languages and 80 countries for Assistant by year end

- Naturally Conversational. Google’s speech-to-text engine now understands subtleties in conversations based not only on what is said but also on how it is said, taking into account pitch, pace and pauses when people speak to it

- Continued conversation. You no longer need to say the “Hey Google” action words before every request in a back-and-forth conversation, and Assistant maintains context between the questions you ask

- Multiple actions. You can now ask the assistant to do multiple things at once. The assistant will now parse them properly

Besides these additions, where each can be seen as a huge step forward in its own right, Google came out with a demo of Google Duplex, something that is best explained with an audio recording straight from the keynote:

If you haven’t watched anything from the keynote, be sure to watch this short 4-minute video clip.

There are a few things here that are interesting:

- This isn’t a general purpose “chatbot”/AI. It won’t pass a Turing test. It won’t do anything but handle appointments

- And yet. It is better than anything we’ve seen before in doing this specific task

- It does that so naturally, that people can’t distinguish it from a real person, at least not easily

- It is also only a demo. There’s no release date for it. It stays in the domain of “we’ve got the best AI and we’re so sure of it that we don’t mind telling our competitors about it”

- People were interested in the ethical parts of it, which caused Google to backtrack somewhat later and indicate Duplex will announce itself as such at the beginning of an interaction

  - Since we’re still in concept stage, I don’t see the problem

  - I wouldn’t say Google were unethical – their main plan on this one was to: 1. Show supremacy; 2. Get feedback

  - Now they got feedback and are acting based on it

- Duplex takes WaveNet to the next level, adding vocal cues to make the chatbot sound more natural when in a conversation. The result is uncanny, as you can tell by the laughter of the crowd at I/O

- Duplex is a reversal of the contact center paradigm

  - Contact center software, chatbots, ML and AI are all designed to help a business talk better with its customers. Usually through context and automation

  - Duplex is all about getting a person to better talk to businesses. First use case is scheduling, but if it succeeds, it won’t be limited to that

  - What’s there to stop Google from reversing it back and putting this in the hands of small businesses, allowing them to field calls from customers more efficiently?

  - And what happens once you put Duplex on both ends of the call? An AI assistant for a user trying to schedule an appointment with an AI assistant of a business

- When this thing goes to market, Google will have access to many more calls, which will end up improving their own services:

  - An improvement to the accuracy and scenarios Duplex will be relevant for

  - Ability to dynamically modify information based on the content of these calls (it showed an example of how it does that for opening hours on Google Maps during the keynote)

  - Can Google sell back a service to businesses for insights about their contact centers based on people’s requests and the answers they get? Maybe even offer a unique workforce optimization tool that no one else can

- I’d LOVE to see cases where Duplex botches these calls in Google’s field trials. Should be hilarious

You may also want to read what Chad Hart has to write about Duplex.

For me, Duplex and Assistant are paving the way to where we are headed with voice assistants, chatbots and AI. Siri, Cortana and Lex seem like laggards here. It will be interesting to see how they respond to these advancements.

Current advancements in speech recognition and understanding make it easier than ever to adopt these capabilities into your own products. If you plan on doing anything conversational in nature, look first at the cloud vendors and what they offer. As this topic is wide, no single vendor covers all use cases and capabilities.

While at it, make sure you have access to a data set to be able to train your models when the time comes.

Google Lens (=vision)

Where Google Assistant is all (or mostly) about voice, Google Lens is all about vision.

Google Lens is progressing in its classification capabilities. Google announced the following:

- Lens now recognizes and understands words it “sees”, allowing use cases where you can copy+paste text from a photo – definitely a cool trick

- Lens now handles style matching for clothing, and can bring up suggestions of similar styles

- Lens offers points of interest and real time results by offering on-device ML, coupled with cloud ML

That last one is interesting, and it is where Google has taken the same approach as Amazon did with DeepLens, one that should be rather obvious based on the requirements here:

- You collect and train datasets in the cloud

- You run the classification itself on the edge device – or in the cloud

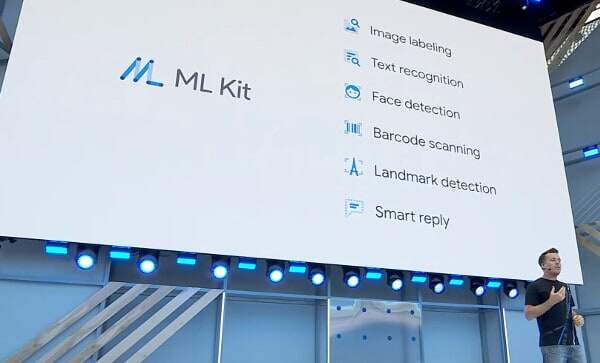
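The split between on-device and cloud classification can be sketched in a few lines of Python. This is only an illustration of the general pattern, not how Lens or ML Kit is implemented: `HybridClassifier`, `tiny_device_model`, `big_cloud_model` and the 0.8 confidence threshold are all hypothetical names and values made up for this example.

```python
from dataclasses import dataclass
from typing import Callable, Tuple

# A prediction is a (label, confidence) pair, confidence in [0, 1].
Prediction = Tuple[str, float]


@dataclass
class HybridClassifier:
    """Try the small on-device model first, fall back to the cloud model."""
    on_device: Callable[[bytes], Prediction]
    cloud: Callable[[bytes], Prediction]
    min_confidence: float = 0.8  # hypothetical threshold

    def classify(self, image: bytes, online: bool = True) -> Tuple[str, float, str]:
        label, confidence = self.on_device(image)
        # Keep the fast local answer if it is good enough, or if we are offline.
        if confidence >= self.min_confidence or not online:
            return label, confidence, "device"
        # Otherwise pay the network round trip for the larger cloud model.
        cloud_label, cloud_confidence = self.cloud(image)
        return cloud_label, cloud_confidence, "cloud"


# Stand-ins for real models (assumptions): they only inspect the first bytes.
def tiny_device_model(image: bytes) -> Prediction:
    return ("dog", 0.95) if image.startswith(b"dog") else ("unknown", 0.30)


def big_cloud_model(image: bytes) -> Prediction:
    return ("golden retriever", 0.99) if image.startswith(b"dog") else ("landmark", 0.90)


classifier = HybridClassifier(tiny_device_model, big_cloud_model)
print(classifier.classify(b"dog-photo"))
print(classifier.classify(b"tower-photo"))
print(classifier.classify(b"tower-photo", online=False))
```

The point of the design is that the device answers immediately when it can, and the cloud is only consulted when the local model is unsure and a connection is available.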

Google took it a step further, also offering this programmatically through ML Kit – Google’s answer to Apple’s Core ML and Amazon’s SageMaker.

Here’s a table summarizing the differences between these three offerings:

| | Google | Apple | Amazon |
|---|---|---|---|
| ML Framework | TensorFlow | Core ML + converters | MXNet & TensorFlow |
| Cloud component | Google Firebase | none | AWS SageMaker |
| Edge component | ML Kit | Core ML | AWS DeepLens |
| Edge device types | Android & iOS | iOS | DeepLens |
| Base use cases | | Handpicked samples from open source repositories | Samples |
| Proprietary parts | Cloud TPUs and productized use cases | iOS only | AWS ecosystem only |
| Open parts | Devices supported | Machine learning frameworks | Machine learning frameworks |

Apple Core ML is a machine learning SDK available and optimized for iOS devices by Apple. You feed it your trained model, and it runs on the device.

- It is optimized for iOS and exists nowhere else

- It has converters to all popular machine learning frameworks out there

- It comes with samples from across the internet, pre-converted to Core ML for developers to play with

- It requires the developers to figure out the whole cloud backend on their own

AWS DeepLens is the first ML enabled Amazon device. It is built on top of Amazon’s Rekognition and SageMaker cloud offerings.

- It is a specific device that has ML capabilities in it

- It connects to the AWS cloud backend along with its ML capabilities

- It is open to whatever AWS has to offer, but focused on the AWS ecosystem

- It comes with several baked samples for developers to use

Google ML Kit is Google’s machine learning solution for mobile devices, and has now launched in beta.

- It runs on both iOS and Android

- It makes use of TensorFlow Lite on the device side and TensorFlow on the backend

- It is tied into Google Firebase to rely on Google’s cloud for all backend ML requirements

- It comes with real productized use cases and not only samples

- It runs its models both on the device and in the cloud

This started as Google Lens and escalated to an ML Kit explanation.

HWaaS

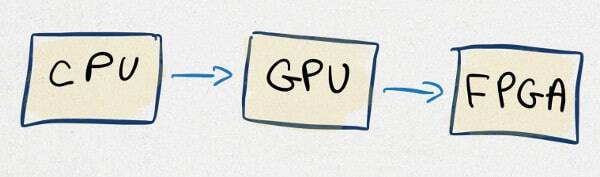

With everything moving towards the cloud, so does hardware in some sense. While the cloud started from hardware hosting of virtualized Linux machines, we’ve been seeing a migration towards different types of hardware recently:

We’re shifting from general purpose computing done by CPUs towards specialized hardware that fits specific workloads, such as FPGAs and custom chips (ASICs).

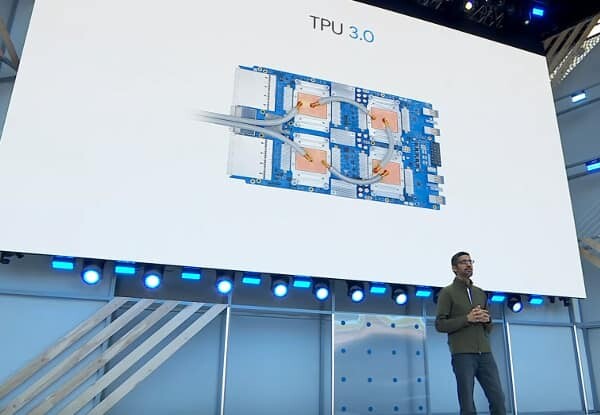

What the illustration above labels as an FPGA is Google’s TPU. TPU stands for Tensor Processing Unit. Strictly speaking, these are custom ASICs rather than FPGAs, designed and optimized to handle the mathematical functions that TensorFlow workloads rely on.
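To make “optimized for the math” a bit more concrete: most of the work in a neural network boils down to multiplying matrices and adding things up, and that is the kind of operation this hardware is built to accelerate. Here is a minimal pure Python sketch of a single dense layer, just to show the shape of the computation. It is not TensorFlow code, and the function names and numbers are made up for the example.

```python
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Multiply two matrices given as lists of rows."""
    inner = len(b)
    assert all(len(row) == inner for row in a), "shape mismatch"
    cols = len(b[0])
    return [
        [sum(row[k] * b[k][j] for k in range(inner)) for j in range(cols)]
        for row in a
    ]


def relu(m: Matrix) -> Matrix:
    """Replace negative values with zero, a common activation function."""
    return [[max(0.0, value) for value in row] for row in m]


def dense_layer(inputs: Matrix, weights: Matrix, bias: List[float]) -> Matrix:
    """One fully connected layer: relu(inputs x weights + bias)."""
    product = matmul(inputs, weights)
    shifted = [[value + b for value, b in zip(row, bias)] for row in product]
    return relu(shifted)


# One input sample with two features, going through a layer with two outputs.
inputs = [[1.0, 2.0]]
weights = [[0.5, -1.0], [0.25, 1.0]]
bias = [0.0, -2.0]
outputs = dense_layer(inputs, weights, bias)
print(outputs)  # [[1.0, 0.0]]
```

A real model repeats this step across many layers with millions of weights, which is why dedicated matrix hardware pays off.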

TensorFlow is said to be slow on CPUs and GPUs compared to other alternatives, and somehow Google is using it to its advantage:

- It open sourced TensorFlow, making it the most popular machine learning framework out there in a span of less than 3 years

- It is now in its third generation of TPUs on Google Cloud for those who need to train models on large datasets quickly

- TPUs are out of the reach of Amazon and other cloud providers. They are proprietary hardware designed, hosted and managed by Google, so any performance gains coming from them stay in the hands of Google for its customers to enjoy

Google’s TPUs got their fair share of time at the keynote in the beginning and were stitched throughout the keynote at strategic points:

- Google Lens uses TPUs to offer the real time capabilities that it does

- Waymo makes use of these TPUs to get to autonomous cars

Pichai even spent time boasting with big terms like liquid cooling…

It is a miracle that these TPUs aren’t plastered all over the ML Kit landing page.

Wellbeing

I am assuming you are just as addicted to your smartphone as I am. There are so many jokes, memes, articles and complaints about it that we can no longer ignore it. There are talks about responsibility and its place in large corporations.

Apple and Google are being placed in the spotlight on this one in 2018, and Google took the first step towards a solution. They are doing it in a long term project/theme named “Wellbeing”.

Wellbeing is similar to the AI initiative at Google in my mind. Someone came to the managers and told them one day something like this: “Our products are highly addictive. Apple are getting skewered in the news due to it and we’re next in line. Let’s do something about it to show some leadership and a differentiation versus Apple. Bring me ideas of how we can help our Android users with their addiction. We will take the good ideas and start implementing them”.

Here are a few things that came under Wellbeing, and one that didn’t but should have:

- Dashboard – Google is adding to Android P an activity dashboard to surface insights to the users on what they do on their smartphones

- YouTube includes a new feature to remind you to take a break when a configured amount of time passes. You can apply the same to other third party apps as well

- Smarter do not disturb feature, coupled with Shush – all in an effort to reduce notification load and user anxiety

- Wind down – switching to grayscale mode when a predetermined time of day arrives

- Pretty Please – Google Assistant can be configured to respond “better” and offer positive reinforcements when asked nicely. This one should help parents make their kids more polite (I know I need it with my kids at home)

In a way, this is the beginning of a long road that I am sure will improve over time. It shows the maturity of mobile platforms.

Fake News

Under responsibility there’s also the whole Fake News issue of recent years.

While Wellbeing targets mainly Apple, the Google News treatment in the keynote was all about addressing Facebook’s weakness. I am not talking about the recent debacle with Cambridge Analytica – this one and anything else related to users’ data privacy was carefully kept away from the keynote. What was addressed is Fake News, where Google gets way more favorable attention than Facebook (just search Google for “google fake news” and “facebook fake news” and look at the titles of the articles that bubble up – check it also on Bing out of curiosity).

What Google did here is create a new Google News experience. And what is interesting is that it tried to bring something to market that balances nicely between objectivity and personalization – things that don’t often go together when it comes to opinion and politics. It comes with a new layer of visualization that is more inviting, but most of what it does is rooted in AI (like everything else in this I/O keynote).

Here’s what I took out of it:

- AI is used to decide which sources are quality sources for certain news topics. This is designed to build trust in the news and to remove the “fake” part from it

- Personalized news is offered at the “category” level. Google will surface topics that interest you

- Next to personalized news, there’s local news as well as trending news, which gets surfaced, probably without personalization though the choice of topics is most probably machine learning driven

- Introduced Newscast – a presentation layer of a topic, enabling readers to get the gist of a topic and later drill down if they wish into what Google calls Full Coverage – an unfiltered, unpersonalized view of an event

One more thing Google did? Emphasized that they are working with publishers on subscriptions, being publisher-friendly, where Facebook is… er… not. Will this hold water and help publishers enough? Time will tell.

Smart Displays

Smart displays are a rather new category. Besides Android as an operating system for smartphones and the Waymo AI piece, there was no other device featured in the keynote.

Google Home wasn’t mentioned, but Smart Displays actually got their fair share of minutes in the keynote. The only reason I see for it is that they couple nicely with the Google Assistant.

The two features mentioned that are relevant?

- It can now show visuals that relate to what goes on in the voice channel

  - This is similar in a way to what MindMeld tried doing years back, before its Cisco acquisition

  - The main difference is that this involves a person and a chatbot. Adding a visual element makes a lot of sense and can be used to enhance the experience

- It offers rich and interactive responses, which goes hand in hand with the visuals part of it

I am unsure why Google gave smart displays the prominence they got at Google I/O. I really have no good explanation for it, besides this being a new device category where Apple isn’t operating at all yet – and where Amazon Alexa poses a threat to Google Home.

Android P

10 years in, and Android P was introduced.

There were two types of changes mentioned here: smarts and polish.

Smarts was all about AI (but you knew that already). It included:

- Adaptive Battery

- Adaptive Brightness

- ML Kit (see the Lens section above)

Polish included:

- App Actions and Slices, both offering faster and better opportunities for apps to interact with users outside of the app itself

- UI/UX changes all around that are just part of the gradual evolution of Android

There was really not much to say about Android P. At least not after counting all the AI work that Google has been doing everywhere anyway.

Google Maps

Google Maps was given the stage at the keynote. It is an important application and getting more so as time goes by.

Google Maps is probably our 4th search destination:

- Google Search

- Google Assistant

- YouTube

- Google Maps

This is where people look for information these days.

In Search, Google has been second to none for years. It wasn’t even part of the keynote.

Google Assistant was front and center in this keynote, most probably superior to its competitors (Siri, Cortana and Lex).

YouTube is THE destination for videos, with Facebook also there, but busy with other worries at this point in time. It is also safe to say that younger generations and more visual audiences search YouTube more often than they do anything else.

Maps is where people search for how to get from one place to another, and these days probably for much more than that, including more abstract, open-ended searches.

In a recent trip to the US, I made quite a few searches that were open ended on Google Maps and was quite impressed with the results. Google is taking this a step further, adding four important pillars to it:

- Smarts

- Personalization

- Collaboration

- Augmented Reality

Smarts comes from its ML work. Things like estimating arrival times, more commute alternatives (they’ve added motorcycle routes and estimates for example), etc.

Personalization was added by the introduction of a recommendation engine to Maps. Mostly around restaurants and points of interest. Google Maps can now actively recommend places you are more likely to like based on your past preferences.

On the collaboration front, Google is taking its first steps by adding the ability to share locations with friends so you can reach a decision together on a place to go to.

AR was about improving walking directions and “fixing” the small gripes with maps around orienting yourself with that blue arrow shown on the map when you start navigating.

Where are we headed?

That’s the big question I guess.

More machine learning and AI. Expect Google I/O 2019 to be on the same theme.

If you don’t have it in your roadmap, time to see how to fit it in.