Media in WebRTC.

What makes it so challenging?

I guess it can be attributed to the many disciplines and different areas of knowledge that you are expected to grok.

My last two articles? They were about the differences between VoIP, WebRTC and the web.

By now, you probably recognize this:

If you’ve got some VoIP background, then you should know how WebRTC is different than VoIP.

If you’ve got a solid web background, then you should know why WebRTC development is different than web development.

When it comes to media, media flows and media-related architectures, there seems to be an even bigger gap. People with a VoIP background might have some understanding of voice, but little in the way of video. People with a web background are usually clueless about real time media processing.

The result is that in too many cases, I see WebRTC architectures that are a poor fit for what the vendor set out to build.

Want to learn more about media in WebRTC? Join this free webinar to see an analysis of a real case study I came across recently: what the company had in mind to build, and how they botched their architecture along the way.

Here are 4 reasons why media is so challenging:

#1 – Media is as Real Time as it Gets

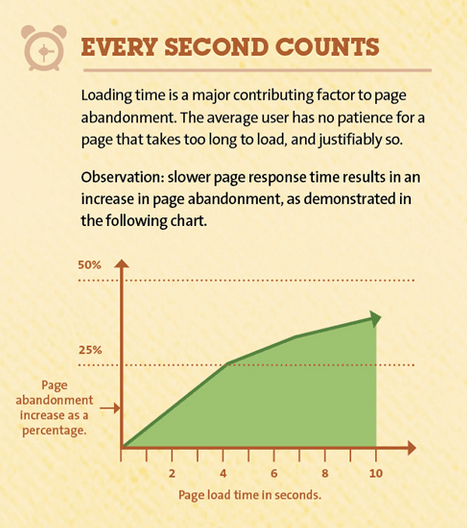

Page load speed is important. People leave if your site doesn’t load fast. Google incorporates it as an SEO ranking parameter.

This is how it is depicted today:

So… every second counts. And the post slug is “your-website-design-should-load-in-4-seconds”.

From a WebRTC point of view, here’s what I have to say about that:

If I were given a full second to get things done with WebRTC I’d be… (fill in the blank)

Seriously though, we’re talking about real time conversations between people.

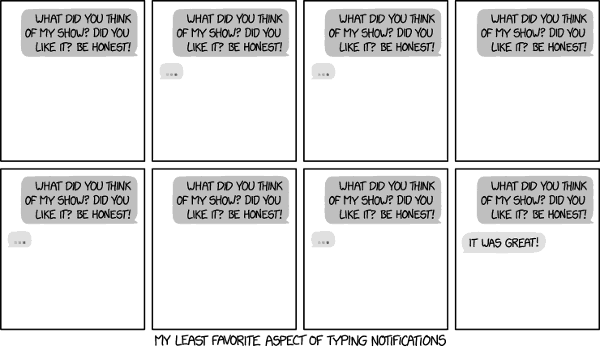

Not this conversation:

But the one that requires me to be able to hold a real, live one. With a person that needs to listen to me with his ears, see me with his eyes, and react back by talking to me directly.

A round trip of 400 milliseconds or less (that’s 200 milliseconds to get media from your camera to the display on the other side) is what we’re aiming for. A full second would be disastrous and not really usable.
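To make that 200 millisecond one-way target concrete, here is a minimal sketch of a latency budget. The stage names and every delay value are hypothetical placeholders for illustration, not measurements; only the 200 ms budget comes from the text above.

```typescript
// One-way latency budget check.
// All stage names and delays are hypothetical placeholders, not measurements.
interface Stage {
  label: string;
  delayMs: number;
}

const ONE_WAY_BUDGET_MS = 200; // half of the 400 ms round trip

const stages: Stage[] = [
  { label: "capture", delayMs: 20 },
  { label: "encode", delayMs: 15 },
  { label: "network", delayMs: 80 },
  { label: "jitter buffer", delayMs: 40 },
  { label: "decode", delayMs: 10 },
  { label: "render", delayMs: 15 },
];

// Sum the delay contributed by every stage in the pipeline.
function totalDelayMs(pipeline: Stage[]): number {
  return pipeline.reduce((sum, stage) => sum + stage.delayMs, 0);
}

// Positive result: headroom left. Negative result: budget blown.
function remainingBudgetMs(pipeline: Stage[], budgetMs: number): number {
  return budgetMs - totalDelayMs(pipeline);
}

const totalMs = totalDelayMs(stages);
const headroomMs = remainingBudgetMs(stages, ONE_WAY_BUDGET_MS);
console.log(`one-way total: ${totalMs} ms, headroom: ${headroomMs} ms`);
```

With these made-up numbers the network alone takes close to half the budget, which is why a full second for the whole thing is a luxury we don’t have.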

Real time.

For real.

#2 – Media Requires Bandwidth. Lots and Lots of Bandwidth

This one seems obvious but it isn’t.

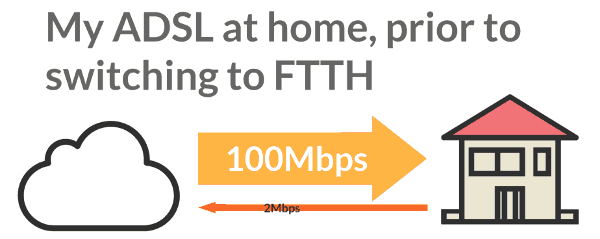

Here’s a typical ADSL line:

Most people live in countries where this is the type of connection you have into your home. You’ll have 20, 40 or maybe 100Mbps downlink – that’s the maximum bitrate you can receive. And then you’ll have 1, 2 or god forbid 3Mbps uplink – that’s the maximum bitrate you can send.

You see, most of the home use of the internet is based on the premise that you consume more than you generate. But with WebRTC, you’re generating media at all times (if it isn’t a live streaming type of use case). And that media generation is going to eat into your bandwidth.

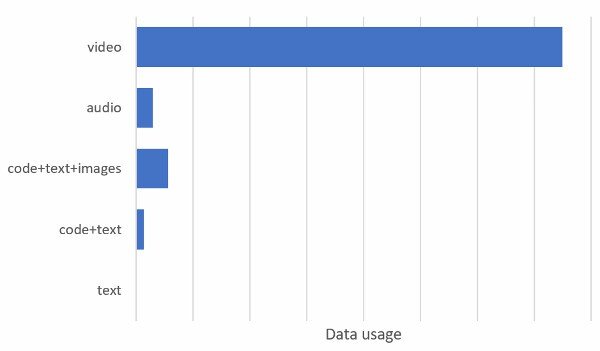

Here’s how much it takes to deliver this page to your browser (text+code, text+code+images) versus running 5 minutes of audio (I went for 40kbps) and 5 minutes of video (I went for 1Mbps). I made sure the browser wasn’t caching any page elements.

There’s no competition here.

Especially if you remember that with the page it is you who is downloading it, while with audio and video you’re both sending and receiving. It is relentless: as long as the conversation goes on, the data use will keep growing.
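The arithmetic behind that comparison is simple enough to sketch. This assumes a 1:1 call where you send and receive at the same constant bitrates (40kbps audio, 1Mbps video, as above) and ignores packet overhead, so treat the result as a lower bound.

```typescript
// Bytes transferred by a constant-bitrate stream over a given duration.
function streamBytes(bitrateBps: number, durationSeconds: number): number {
  return (bitrateBps * durationSeconds) / 8; // 8 bits per byte
}

const FIVE_MINUTES_S = 5 * 60;
const AUDIO_BPS = 40000;   // 40kbps, the audio bitrate used in the comparison
const VIDEO_BPS = 1000000; // 1Mbps, the video bitrate used in the comparison

const audioBytes = streamBytes(AUDIO_BPS, FIVE_MINUTES_S);
const videoBytes = streamBytes(VIDEO_BPS, FIVE_MINUTES_S);

// Decimal megabytes, one direction only.
const oneWayMB = (audioBytes + videoBytes) / 1000000;
// Assumption: the other side sends you the same bitrates.
const bothWaysMB = oneWayMB * 2;

console.log(`audio: ${audioBytes / 1000000} MB, video: ${videoBytes / 1000000} MB`);
console.log(`one way: ${oneWayMB} MB, both ways: ${bothWaysMB} MB`);
```

That works out to 1.5 MB of audio and 37.5 MB of video in each direction for just five minutes.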

Three more things to consider here:

- Usually, the assumption is that you need twice as much available bandwidth as what you’re going to effectively send or receive (overheads, congestion and pure magic)

- You’re not alone on your network. There are more activities running on your devices competing over the same bandwidth. There can be more people in your house competing over the same bandwidth

- If you’re connecting over WiFi, you need to factor in stupid issues such as reception, radio interference, etc. These affect the effective bandwidth you’ll have as well as the quality of the network
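The first rule of thumb in that list can be turned into a quick check. This is a rough sketch: it treats the uplink figures mentioned earlier as 1, 2 and 3 megabits per second, uses the 1Mbps video plus 40kbps audio from the comparison, and ignores the other two bullets (competing traffic and WiFi quality), which only make things worse.

```typescript
// Rule of thumb from the list above: have twice the bandwidth you plan to use.
const HEADROOM_FACTOR = 2;

// Link capacity needed to comfortably carry a given media bitrate.
function requiredLinkKbps(mediaKbps: number, headroom: number = HEADROOM_FACTOR): number {
  return mediaKbps * headroom;
}

// True when the uplink satisfies the rule of thumb for this media bitrate.
function fitsUplink(mediaKbps: number, uplinkKbps: number): boolean {
  return requiredLinkKbps(mediaKbps) <= uplinkKbps;
}

const sendKbps = 1000 + 40; // 1Mbps video + 40kbps audio

for (const uplinkKbps of [1000, 2000, 3000]) {
  const verdict = fitsUplink(sendKbps, uplinkKbps) ? "fits" : "too tight";
  console.log(`${uplinkKbps} kbps uplink: ${verdict}`);
}
```

Under these assumptions only the 3Mbps uplink passes, and that is for a single outgoing video stream.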

#3 – Media is a Resource Hog

So it’s real time and it eats bandwidth. But that’s only half the story.

The second half involves anything else running on your device.

To encode and decode you’re going to need resources on that device.

CPU. Something capable. Usable hardware acceleration for the codecs is welcome.

Memory. Encoding and decoding are taxing processes. They need lots and lots of memory to work well. And also remember that the higher the resolution and frame rate of the video you’re pumping out, the more memory you’ll need to process it.

Bus. Usually neglected, there’s the device’s bus. Data needs to flow through your device. And video processing takes its toll.
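To get a feel for the memory and bus numbers, here is a back-of-the-envelope sketch for uncompressed video. It assumes raw frames are held in I420 (YUV 4:2:0), which takes 1.5 bytes per pixel; the actual pixel formats, and how many frames are buffered at once, vary by browser and device.

```typescript
// I420 (YUV 4:2:0): one full-resolution luma plane plus two chroma planes
// at quarter resolution each, so 1 + 0.25 + 0.25 = 1.5 bytes per pixel.
const I420_BYTES_PER_PIXEL = 1.5;

// Size of a single uncompressed frame (assumes even width and height).
function i420FrameBytes(width: number, height: number): number {
  return width * height * I420_BYTES_PER_PIXEL;
}

// Raw data that has to move through the device every second for one stream.
function rawBytesPerSecond(width: number, height: number, fps: number): number {
  return i420FrameBytes(width, height) * fps;
}

const hdFrame = i420FrameBytes(1280, 720);
const hdPerSecond = rawBytesPerSecond(1280, 720, 30);
const fullHdPerSecond = rawBytesPerSecond(1920, 1080, 30);

console.log(`720p frame: ${hdFrame} bytes`);
console.log(`720p at 30fps: ${hdPerSecond / 1000000} MB/s raw`);
console.log(`1080p at 30fps: ${fullHdPerSecond / 1000000} MB/s raw`);
```

So a 1Mbps stream on the wire is roughly 41 MB per second of raw 720p pixels inside the device, per stream and per direction, before any scaling or rendering copies.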

Doing this in real time means opening dedicated threads, running algorithms that are time sensitive (acoustic echo cancellation, for example) and synchronizing devices (lip syncing). This is hard. And doing it while maintaining a sleek UI and letting other unrelated processes run in the background as well makes it a tad harder.

So if you’re thinking of running multiple encoders and decoders on the device, working in mesh topologies in front of a large number of other users, or any other tricks, your plans need to account for these challenges. Keep in mind, too, that browser vendors need to be aware of these topologies and use cases, and take their time to optimize WebRTC to support them.
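A rough sketch of how a mesh topology scales shows why this matters. It assumes a full mesh where every participant keeps a separate peer connection to every other participant, each connection runs its own encoder and decoder, and every stream uses the same bitrate. The 1040 kbps figure (1Mbps video plus 40kbps audio) is just the earlier example reused.

```typescript
interface MeshLoad {
  encoders: number;     // one per outgoing stream (assumption: no encoder sharing)
  decoders: number;     // one per incoming stream
  uplinkKbps: number;   // total bitrate this participant sends
  downlinkKbps: number; // total bitrate this participant receives
}

// Load carried by a single participant in a full mesh.
function meshLoadPerParticipant(participants: number, perStreamKbps: number): MeshLoad {
  const peers = Math.max(participants - 1, 0);
  return {
    encoders: peers,
    decoders: peers,
    uplinkKbps: peers * perStreamKbps,
    downlinkKbps: peers * perStreamKbps,
  };
}

// Number of peer connections across the whole call.
function meshConnections(participants: number): number {
  return (participants * (participants - 1)) / 2;
}

const fiveWay = meshLoadPerParticipant(5, 1040);
console.log(`5 participants: ${meshConnections(5)} connections in total`);
console.log(`each sends ${fiveWay.uplinkKbps} kbps using ${fiveWay.encoders} encoders`);
```

Five people in a mesh already means more than 4Mbps of uplink per participant under these assumptions, well past the home uplinks discussed earlier.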

#4 – Media is Just… Different

Then there’s this minor fact of media just being so darn different.

It isn’t TCP, like HTTP and WebSocket.

It requires 3 (!) different servers to just get a peer to peer session going (and they dare call it peer to peer).

Here’s how most websites would indicate their interaction with the browser:

And this is what a basic one would look like for WebRTC:

We’ve got here two browsers to make it interesting. Then there’s the web server and a STUN/TURN server.
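On the browser side, the STUN/TURN part of that picture boils down to a small configuration object. The sketch below builds one; the server URLs and credentials are made-up placeholders, and the two interfaces simply mirror the shape of the browser’s `RTCConfiguration` and `RTCIceServer` dictionaries so the example compiles without DOM typings. The web server’s role (signaling) isn’t shown, since WebRTC leaves that part to the application.

```typescript
// Local mirrors of the browser's RTCIceServer / RTCConfiguration shapes,
// redeclared so this sketch is self-contained.
interface IceServer {
  urls: string | string[];
  username?: string;
  credential?: string;
}

interface PeerConfig {
  iceServers: IceServer[];
}

// Build the configuration a browser needs to reach STUN and TURN servers.
function buildPeerConfig(
  stunUrl: string,
  turnUrl: string,
  username: string,
  credential: string
): PeerConfig {
  return {
    iceServers: [
      { urls: stunUrl },                       // STUN: discover the public address
      { urls: turnUrl, username, credential }, // TURN: relay media when direct paths fail
    ],
  };
}

// Hypothetical servers and credentials, for illustration only.
const peerConfig = buildPeerConfig(
  "stun:stun.example.com:3478",
  "turn:turn.example.com:3478",
  "demo-user",
  "demo-secret"
);

console.log(JSON.stringify(peerConfig, null, 2));
// In a browser, this object would be passed to: new RTCPeerConnection(peerConfig)
```

None of this has an equivalent in a plain page load, which is part of what makes the WebRTC picture look so different.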

It gets more complicated when we want to add some media servers into the mix.

In essence, it is just different from what we’re used to on the web – or in VoIP (who decided to do signaling with HTTP anyway? Or rely on STUN and TURN instead of placing an SBC?).

What’s Next?

These reasons why media is challenging? Real time, bandwidth-hungry, a resource hog and just different. And that’s on the browser/client side only. Servers that need to process media suffer from the same challenges and a few more. One that comes to mind is handling scale.

So we’ve only touched the tip of the iceberg here.

This is why I created my Advanced WebRTC Architecture Course a bit over a year ago. It is a WebRTC training that aims at improving the WebRTC understanding of developers (and the semi-technical people around them).

In the coming weeks, I’ll be relaunching the office hours that run alongside the course for its third round. Towards that goal, I’ll be hosting a free webinar about media in WebRTC.

I’ll be doing something different this time.

I had an interesting call recently with a company moving away from CPaaS towards self development. The mistake they made was that they made that decision with little understanding of WebRTC.

Here’s what we’ll do during the webinar:

- Introduce the requirements they had

- Explain the architecture and technology stack they selected

- Show what went wrong

- Suggest an alternate route

Similar to my last launch, there will be a couple of time-limited bonuses available to those who decide to enroll in the course.

Want to learn more about media in WebRTC? Join this free webinar to see an analysis of a real case study I came across recently: what the company had in mind to build, and how they botched their architecture along the way.

And if you’re really serious, enroll in my Advanced WebRTC Architecture Course.
