What Does Machine Learning Have to do with MOS Scores?

Human subjectivity in MOS calculations doesn’t hold water when it comes to heterogeneous environments. That’s where machine learning comes into play.

MOS score. That Mean Opinion Score. You get a voice call. You want to know its quality. So you use MOS. It gives you a number between 1 and 5. 1 being bad. 5 being great. If you get 3 or above – be happy and move on, they say. If you get 4.something – you’re a god. If you don’t agree with my classification of the numbers then read on – there’s probably a good reason why we don’t agree.

Anyways, if you go down the rabbit hole of how MOS gets calculated, you’ll find out that there isn’t a single way of doing that. You can go now and define your own MOS scoring algorithm if you want, based on tests you’ll conduct. From the Wikipedia article about MOS:

“a MOS value should only be reported if the context in which the values have been collected in is known and reported as well”

Phrased differently – MOS is highly subjective and you can’t really compare MOS scores produced in one device to MOS scores produced in another device.
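To make that concrete: in its original, subjective form, a MOS is just the arithmetic mean of the ratings a group of listeners gives on the 1 to 5 scale. Here is a minimal sketch of that. The function name and the two sets of ratings are made up for illustration; they are not measurements from any real test.

```typescript
// Mean Opinion Score in its subjective form: the arithmetic mean of
// individual listener ratings, each on a 1-5 scale.
function meanOpinionScore(ratings: number[]): number {
  if (ratings.length === 0) {
    throw new Error("need at least one rating");
  }
  for (const rating of ratings) {
    if (rating < 1 || rating > 5) {
      throw new Error(`rating out of range: ${rating}`);
    }
  }
  return ratings.reduce((sum, rating) => sum + rating, 0) / ratings.length;
}

// Hypothetical ratings of the SAME call, collected in two different contexts.
const deskPhonePanel: number[] = [4, 5, 4, 4, 3];
const mobilePanel: number[] = [3, 3, 4, 2, 3];

const deskPhoneMos = meanOpinionScore(deskPhonePanel);
const mobileMos = meanOpinionScore(mobilePanel);
```

Same call, two contexts, two different numbers. Neither one is wrong, which is why a bare MOS value without its context tells you so little.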

This is why I really truly hate delving into these globally-accepted-but-somewhat-useless quality metrics (and why we ended up with a slightly different scoring system in testRTC for our monitoring and testing services).

What Goes into MOS Scoring Calculations?

Easy. Everything.

Or at least everything you have access to:

- RTCP sender and receiver reports

- Received RTP packets

- Knowing the voice codec used

- Actually decoding the audio stream and “listening” to it

- Understanding what the end user is really going to hear

Here are a few examples:

Physical desk phone

A physical IP phone has access to EVERYTHING. All the software and all the hardware.

It even knows how the headset works and what quality it offers.

Theoretically then, it can provide an accurate MOS that factors in everything there is.

Android native app

Android apps have access to all the software. Almost. Mostly.

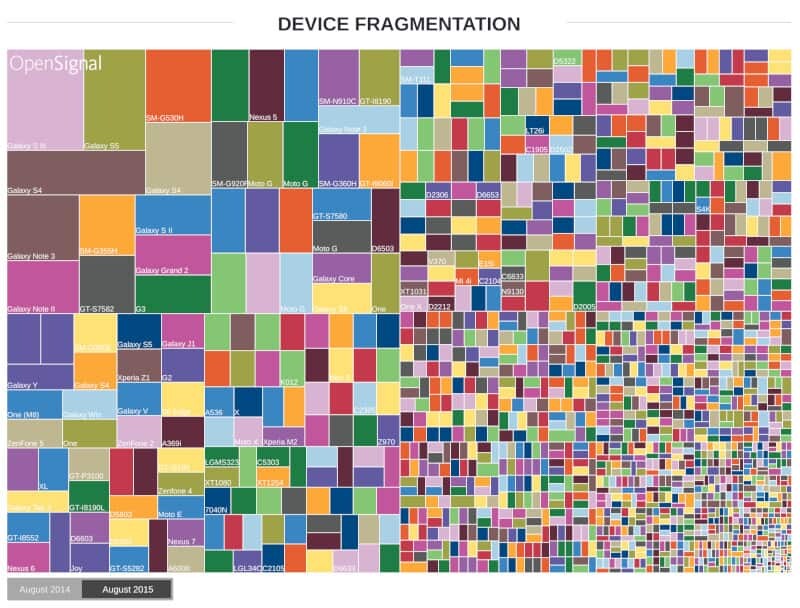

The low level device drivers are as known as the hardware that app is running on. The only problem is the number of potential devices. A few years back, these types of visualizations of the Android fragmentation were in fashion:

This one’s from OpenSignal. Different devices have different location for their mics and speakers. They use different device drivers. Have different “flavors” of the Android OS. They act differently and offer slightly different voice quality as well.

What does measuring what an objective person thinks about the quality of a played audio stream mean in such a case? Do we need to test this objectivity per device?

Media server that routes voice around

Then we have the media server. It sends and receives voice. It might not even decode the audio (it could, and sometimes it does).

How does it measure MOS? What would it decide is good audio versus bad audio? It has access to all packets… so it can still be rather accurate. Maybe.

WebRTC inside a browser

And we have WebRTC. Can’t write an article without mentioning WebRTC.

Here though, it is quite the challenge.

How would a browser measure MOS of its audio? It can probably do as good a job as an Android device. But for some reason, MOS scoring isn’t part of the WebRTC bundle. At least not today.

So how would a JavaScript web application calculate MOS of the incoming audio? By using getStats? That has access to an abstraction on top of the RTCP sender and receiver reports, and its values correlate with them to some extent. But that’s about as much as it has at its disposal for such calculations, which doesn’t amount to much.
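To show how little there is to work with, here is a rough sketch of the kind of network-only estimate a web application could derive from those numbers. The interface and its field names are hypothetical; in a browser the inputs would come from values such as `roundTripTime`, `jitter`, `packetsLost` and `packetsReceived` in the getStats report. The latency, jitter and loss penalties are rule-of-thumb constants that I am assuming here, not codec-specific values and not how any particular product scores calls. Only the final R-factor to MOS mapping follows the E-model from ITU-T G.107.

```typescript
// Inputs as they might be read from getStats(). This interface and its
// field names are hypothetical, chosen for this sketch.
interface VoiceNetworkSample {
  roundTripTimeMs: number; // e.g. roundTripTime (seconds) * 1000
  jitterMs: number;        // e.g. jitter (seconds) * 1000
  packetsLost: number;
  packetsReceived: number;
}

// E-model mapping from an R-factor to an estimated MOS (ITU-T G.107),
// clamped so the result never drops below 1.
function rFactorToMos(r: number): number {
  if (r <= 0) return 1;
  if (r >= 100) return 4.5;
  const mos = 1 + 0.035 * r + 7e-6 * r * (r - 60) * (100 - r);
  return Math.max(1, mos);
}

// Heuristic R-factor: start from 93.2 and subtract penalties for delay
// and loss. The constants below are assumptions, not measured values.
function estimateMos(sample: VoiceNetworkSample): number {
  const total = sample.packetsLost + sample.packetsReceived;
  const lossPercent = total > 0 ? (100 * sample.packetsLost) / total : 0;

  // Approximate one-way delay as half the round trip, pad it for jitter
  // buffering (2x jitter) and add a fixed 10 ms processing allowance.
  const effectiveLatencyMs =
    sample.roundTripTimeMs / 2 + 2 * sample.jitterMs + 10;

  let r =
    effectiveLatencyMs < 160
      ? 93.2 - effectiveLatencyMs / 40
      : 93.2 - (effectiveLatencyMs - 120) / 10;
  r -= 2.5 * lossPercent;

  return rFactorToMos(r);
}

const cleanNetwork = estimateMos({
  roundTripTimeMs: 0, jitterMs: 0, packetsLost: 0, packetsReceived: 1000,
});
const poorNetwork = estimateMos({
  roundTripTimeMs: 400, jitterMs: 50, packetsLost: 200, packetsReceived: 800,
});
```

With these constants a perfectly clean network lands at about 4.4, and the lossy, high-latency sample falls well below 2. Notice what is missing: the microphone, the codec, the jitter buffer, the speakers. The number only describes the network as seen through a handful of counters.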

Back to MOS calculations

But what does MOS really calculate?

The quality of the voice I hear in a session?

Maybe the quality of voice the network is capable of supporting?

Or is it the quality of the software stack I use?

What about the issue with voice quality when the person I am speaking with is just standing in a crowded room? Would that affect MOS? Does the actual original content need to be factored into MOS scores to begin with?

I’ll leave these questions open, but say that in my opinion, whatever quality measurement you look at, it should offer some information about the things that are in your power to change – at least as a developer or product owner. Otherwise, what can you do with that information?

What Affects Audio Quality in Communications?

Everything.

- The quality of the microphone used to record the original audio (though this usually gets neglected in discussions around MOS)

- The location of the person speaking – a crowded room, airport, next to a working vacuum cleaner – or in a silent recording studio

- The voice codec used, its configuration and the level and aggressiveness of the compression it is using for this session

- The network conditions – in the last mile from both the sender and the receiver, of every hop along the way and the routers and servers it has to pass through

- The media servers – and every possible aspect about them

- The receiver’s software. Especially the jitter buffer and packet loss concealment algorithms

- The sender’s acoustic echo cancellation implementation quality

- The receiver’s voice decoder implementation

- The receiver’s speakers

I am sure I missed a bullet or two. Feel free to add them in the comments.

The thing is, there are a lot of things that end up affecting audio quality when you make the decision of sending it through a network.

Is Machine Learning Killing MOS Scoring or Saving It?

So what did we have so far?

A scoring system – MOS, which is subjective and inaccurate. It is also widely used and accepted as THE quality measure of voice calls. Most of the time, it looks at network traffic to decide on the quality level.

At Kranky Geek 2018, one of the interesting sessions for me was the one given by Curtis Peterson of RingCentral.

He discussed the problem of having different MOS scores for the SAME call in each device the call passes through in the network. The solution was to use machine learning to normalize MOS scoring across the network.
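I don’t know the details of the model used there, so treat the following as nothing more than a toy illustration of what “normalizing” could mean: fit a mapping from one device’s scores to a reference scale, using calls that both have scored. The sketch uses plain least-squares linear regression as the simplest possible stand-in for machine learning. All names and sample scores are hypothetical.

```typescript
// A linear mapping from one device's MOS scale to a reference scale.
interface LinearMap {
  slope: number;
  intercept: number;
}

// Ordinary least-squares fit over calls scored by both the device and
// the reference. A stand-in for a real ML model, for illustration only.
function fitLinearMap(deviceScores: number[], referenceScores: number[]): LinearMap {
  const n = deviceScores.length;
  if (n < 2 || n !== referenceScores.length) {
    throw new Error("need at least two paired scores");
  }
  const meanX = deviceScores.reduce((a, b) => a + b, 0) / n;
  const meanY = referenceScores.reduce((a, b) => a + b, 0) / n;

  let sxy = 0;
  let sxx = 0;
  for (let i = 0; i < n; i++) {
    const dx = deviceScores[i] - meanX;
    sxy += dx * (referenceScores[i] - meanY);
    sxx += dx * dx;
  }
  if (sxx === 0) {
    throw new Error("device scores must not all be identical");
  }
  const slope = sxy / sxx;
  return { slope, intercept: meanY - slope * meanX };
}

// Map a device score onto the reference scale, kept within 1-5.
function normalizeMos(deviceScore: number, map: LinearMap): number {
  const mapped = map.slope * deviceScore + map.intercept;
  return Math.min(5, Math.max(1, mapped));
}

// Hypothetical scores for the same three calls, as reported by a media
// server and by a reference endpoint.
const mediaServerScores: number[] = [2, 3, 4];
const referenceScores: number[] = [2.5, 3.5, 4.5];

const mediaServerMap = fitLinearMap(mediaServerScores, referenceScores);
const normalized = normalizeMos(3, mediaServerMap);
```

In this made-up data the media server consistently scores half a point lower than the reference, so the fit simply shifts its scores up. Real devices won’t disagree that neatly, which is presumably where actual machine learning earns its keep.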

This got me thinking further.

Let’s say one of these devices provides machine learning based noise suppression. It is SO good, that it is even employed on the incoming stream, as opposed to placing it traditionally on the outgoing stream. This means that after passing through the network, and getting scored for MOS by some entity along the way, the device magically “improves” the audio simply by reducing the noise.

Does that help or hurt MOS scoring? Or at least the ability to provide a score that can be easily normalized or referenced?

Machine Learning and Media Optimization

We’ve had multiple vendors at Kranky Geek touching the domain of media optimizations. This year, their focus was mainly on video – both Agora.io and Houseparty gave eye-opening presentations on using machine learning to improve the quality of a received video stream, each taking a different approach to tackling the problem.

While researching for the AI in RTC report, we’ve seen other types of optimizations being employed. The idea is always to “silently” improve the quality of the call, offering a better experience to the users.

In the next couple of years, we will see this area growing fast, with proprietary algorithms and techniques based on machine learning being added to the arms race of the various communication vendors.


“the device magically “improves” the audio simply by reducing the noise.”

In other words, it’s some type of noise canceling headset where ML is used for providing typical noise patterns (‘office’, ‘street’, ‘subway’ etc.) instead of traditional noise canceling techniques. Does noise reduction always mean a higher MOS? MOS, for example, depends on language. If a UK caller calls a callee in India, which call leg defines the final MOS – the UK one or the Indian one? How do you normalize MOS in a network for different languages (accents, pronunciations etc.)?

Ilya,

I have no clue 🙂

Which is why I hate MOS so much as a predictor of audio quality. There are just too many factors to place into a single number between 1-5.

Hmm – “opinion” – it is about the interaction between 2 humans, and the technical quality tends to be only one of the factors that cause the “positive” or “negative” feeling about the dialogue. So we use a different approach in the O2 CZ contact center. The user has an option to “reward” the operator with a thumb up or down when the session finishes. About 12% of the conversations are “rewarded” – most of them positively. But if we include AI in the game (we are using .NET ML), we are able to guess the “thumb” with an accuracy of 90-95%, because we feed the AI not only with technical parameters, but with “all we know” about the session. That means time, duration, scope, agent name, the customer’s past experience and some of the technical data mentioned in Tsahi’s article. This approach can improve the performance of a call center significantly – we are able to find “not rewarded” but potentially negative sessions and anticipate the negative reaction according to past (computed) experience.