TL;DR – YES.

Do I need a media server for a one-to-many WebRTC broadcast?

That’s the question I was asked on my chat widget this week. The answer was simple enough – yes.

Then I received a follow up question that I didn’t expect:

Why?

That caught me off-guard. Not because I don’t know the answer. Because I didn’t know how to explain it in a single sentence that fits nicely in the chat widget. I guess it isn’t such a simple question either.

The simple answer is a limit in resources, along with the fact that we don’t control most of these resources.

First Things First

Before I go into an explanation, you need to understand that there are 4 types of WebRTC servers:

- Application server

- Signaling server

- NAT traversal server (STUN & TURN)

- Media server

This question is specifically about the fourth one, the media server. The other three servers are needed here whether this is a 1:1 or a 1:many session.

The Hard Upper Limit

Whenever we want to connect one browser to another with a direct stream, we need to create and use a peer connection.

Chrome 65 includes a hard upper limit on these, which exists for garbage collection purposes: Chrome will not allow more than 500 concurrent peer connections to exist.

500 is a really large number. If you plan on more than 10 concurrent peer connections, you should be one of those who know what they are doing (and don’t need this blog). Going above 50 seems like a bad idea for every use case that I can remember taking part in.

Understand that resources are limited. Free and implemented in the browser doesn’t mean that there aren’t any costs associated with it or a need for you to implement stuff and sweat while doing so.

Bitrates, Speeds and Feeds

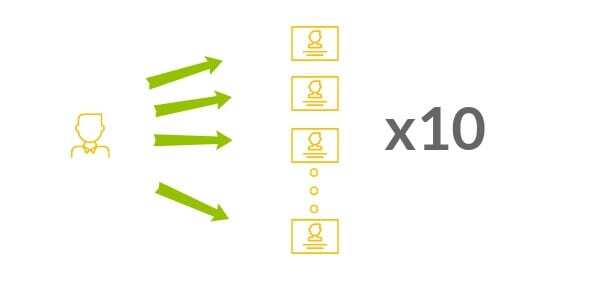

This is probably the main reason why you can’t broadcast straight from the browser, with WebRTC or with any other technology.

We are looking at a challenging domain with WebRTC. Media processing is hard. Real time media processing is harder.

Assume we want to broadcast a video at a low VGA resolution. We checked and decided that 500kbps of bitrate offers good results for our needs.

What happens if we want to broadcast our stream to 10 people?

Broadcasting our stream to 10 people requires 5Mbps of uplink bitrate (10 × 500kbps).

If we’re on an ADSL connection, we may find ourselves with only 1-3Mbps of uplink, so we won’t be able to broadcast the stream to our 10 viewers.

For the most part, we don’t control where our broadcasters are going to be. Over ADSL? WiFi? 3G network with poor connectivity? The moment we start dealing with broadcast we will need to make such assumptions.

That’s for 10 viewers. What if we’re looking for 100 viewers? 1,000? A million?
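The arithmetic above can be sketched in a few lines. This is only an illustration: it assumes the broadcaster sends one full copy of the 500kbps stream per viewer, and the function names are made up for this example.

```python
def required_uplink_kbps(stream_kbps: int, viewers: int) -> int:
    """Uplink needed when the broadcaster sends one copy of the stream per viewer."""
    return stream_kbps * viewers


def max_direct_viewers(uplink_kbps: int, stream_kbps: int) -> int:
    """How many viewers a given uplink can feed directly, without a media server."""
    return uplink_kbps // stream_kbps


if __name__ == "__main__":
    # 500 kbps is the VGA bitrate assumed in the text above.
    for viewers in (10, 100, 1_000, 1_000_000):
        mbps = required_uplink_kbps(500, viewers) / 1000
        print(f"{viewers:>9,} viewers -> {mbps:,.0f} Mbps uplink")

    # An ADSL uplink of 1-3 Mbps feeds only a handful of viewers.
    print(max_direct_viewers(1_000, 500), "to", max_direct_viewers(3_000, 500), "viewers on ADSL")
```

Even the optimistic 3Mbps ADSL uplink tops out at 6 direct viewers under these assumptions, well short of the 10 in the example.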

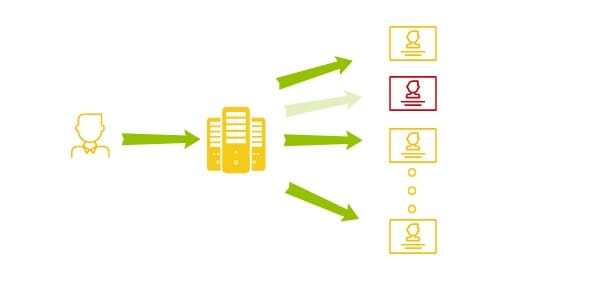

With a media server, we decide the network connectivity, the machine type of the server, etc. We can decide to cascade media servers to grow our scale of the broadcast. We have more control over the situation.
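To get a feel for what cascading means in numbers, here is a rough sizing sketch. Everything in it is an assumption for illustration: the 1Gbps of usable egress per server, the idea that bandwidth is the only limit, and the function names. Real servers hit CPU and packet-rate limits as well.

```python
import math


def viewers_per_server(server_egress_kbps: int, stream_kbps: int) -> int:
    """Viewers one server can feed if egress bandwidth is the only constraint."""
    capacity = server_egress_kbps // stream_kbps
    if capacity < 1:
        raise ValueError("server egress is smaller than a single stream")
    return capacity


def edge_servers_needed(viewers: int, server_egress_kbps: int, stream_kbps: int) -> int:
    """Number of viewer-facing servers needed for the whole audience."""
    return math.ceil(viewers / viewers_per_server(server_egress_kbps, stream_kbps))


def cascade_levels(viewers: int, fanout: int) -> int:
    """Tiers of servers needed when each server feeds `fanout` outputs
    (either viewers or servers in the next tier)."""
    if fanout < 2:
        raise ValueError("fanout must be at least 2")
    levels, reach = 1, fanout
    while reach < viewers:
        reach *= fanout
        levels += 1
    return levels


if __name__ == "__main__":
    egress_kbps = 1_000_000  # hypothetical 1 Gbps of usable egress per server
    fanout = viewers_per_server(egress_kbps, 500)
    print(fanout, "viewers per server")
    print(edge_servers_needed(100_000, egress_kbps, 500), "edge servers for 100,000 viewers")
    print(cascade_levels(100_000, fanout), "tiers for 100,000 viewers")
```

The point is not the specific numbers but that they are now ours to choose: we pick the server's uplink, and we add tiers when a single machine runs out.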

Broadcasting a WebRTC stream requires a media server.

Sender Uniformity

I see this one a lot in the context of a mesh group call, but it is just as relevant towards broadcast.

When we use WebRTC for a broadcast type of service, a lot of decisions end up taking place in the media server. If a viewer has a bad network, this will result in packet loss being reported to the media server. What should the media server do in such a case?

While there’s no simple answer to this question, the alternatives here include:

- Asking the broadcaster to send a new I-frame, which will affect all viewers and increase bandwidth use for the near future (you don’t want to do it too much as a media server)

- Asking the broadcaster to reduce bitrate and media quality to accommodate for the packet losses, affecting all viewers and not only the one on the bad network

- Ignoring the issue of packet loss, sacrificing the user for the “greater good” of the other viewers

- Using Simulcast or SVC, and moving the viewer to a lower “layer” with lower media quality, without affecting other users

You can’t do most of these in a browser. The browser will tend to send the same single encoded stream as-is to everyone, and it won’t do a good job of estimating bandwidth across multiple users. It is just not designed or implemented to do that.
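The last alternative in the list, moving a single viewer to a lower simulcast layer, boils down to a per-viewer decision inside the media server. Here is a minimal sketch of that decision. The layer names and bitrates are hypothetical, and a real media server would also smooth its bandwidth estimates and wait for a keyframe before switching.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Layer:
    name: str
    kbps: int


# Hypothetical simulcast layers sent by the broadcaster, in ascending bitrate order.
LAYERS = (Layer("low", 150), Layer("mid", 500), Layer("high", 1500))


def pick_layer(estimated_kbps: int, layers: tuple = LAYERS) -> Layer:
    """Pick the highest layer that fits the viewer's estimated bandwidth.

    Falls back to the lowest layer when even that one does not fit.
    """
    best = layers[0]
    for layer in layers:
        if layer.kbps <= estimated_kbps:
            best = layer
    return best


if __name__ == "__main__":
    # Each viewer gets an independent decision; nobody else is affected.
    viewers = {"fiber": 8000, "adsl": 900, "bad-3g": 120}
    for viewer, estimate in viewers.items():
        print(viewer, "->", pick_layer(estimate).name)
```

The broadcaster still uploads each layer only once; the choice of which layer to forward happens on the server, per viewer.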

You Need a Media Server

In most scenarios, you will need a media server in your implementation at some point.

If you are broadcasting, then a media server is mandatory. And no. Google doesn’t offer such a free service or even open source code that is geared towards that use case.

It doesn’t mean it is impossible – just that you’ll need to work harder to get there.

Great article, Tsahi, and right when I was looking for an answer.

I came across Kurento Media Server. What do you think of this for a 1 to many broadcast?

Kranthi,

I wouldn’t use Kurento at all. It hasn’t been seriously updated in the past year or so which makes it risky to use.

Look at Jitsi and Janus for alternatives.

Kurento iOS support is finished. Lots of bugs in the code. The server side is so glitchy. After being sold to Twilio, they have stopped maintaining it.

Hello!

I am a web developer.

My name is Wen Chang.

Excuse me, can I ask you a question?

I want to make a web app (with video, audio and message chatting: one to many).

Can I make this app using a Kurento server?

Kurento seems to be picking up a bit. Still not sure how eager I’d be to use it though.

Tsahi,

We are using licode as our media server. What will be your comments on licode for this scenario?

Sreelekshmi,

I know less about Licode than other vendors, mainly because most of those I interacted with are not using it. You will need to check with the Licode team directly.

Do you think that I can use licode if I want to do an app like periscope?

You should try. I haven’t seen many running Licode in production, but that just might be the people I talk to.

Check out Jitsi and Janus as well.

Hi All,

I’m getting an issue when I try to make more than 16 concurrent calls. Can anyone tell me a proper way to investigate this issue?

Error Message by sip.js 0.7.8

DOMException: Could not start source

sip.invitecontext.mediahandler | unable to acquire streams

Bharath,

The place to look would be the SIP.js forum – https://groups.google.com/forum/#!forum/sip_js

Hi Tsahi,

Our use case is one broadcaster -> many viewers, possibly 100k users.

Will Jitsi meet our needs? Is it scalable?

We hosted and checked Jitsi Meet, but it seems to be like Google Hangouts, with many peers in 2-way communication. Is there a library like Kurento, i.e. one broadcaster and many viewers?

Support for super large conferences is on the way with Jitsi Videobridge but if you are looking for 100K users then maybe you want to use it in a combination with an HLS server. The Jitsi project that does this is Jibri: https://github.com/jitsi/jibri

Thanks for your reply. Can we also achieve low latency (~1s) using Jibri (with HLS)?

Also, is there any methodology available in Jitsi for scaling up and down depending on outbound streams and port availability in the AWS cloud?

I think clustering will work. Is there any documentation in Jitsi for scaling with clusters?

Hello Tzahi,

First of all great article!

I was wondering what your thoughts are on hybrid solutions, i.e. mixing WebRTC with RTMP or HLS, to have maximum compatibility with different browsers/OSes.

What should guide me when I’m building such a solution? How should I choose the media server technology (RTMP\WebRTC\HLS)? What is the best encoding direction: WebRTC -> RTMP\HLS or vice versa?

Thanks a lot

Ziv,

That’s a lot more than a single question with no clear answer, so can’t really comment much about it here.

It will greatly depend on what your service is doing and what your requirements are. These will dictate the broadcaster’s and the viewer’s protocols.

Hybrid models are possible and achievable but they are more complex to develop, deploy and maintain, so I usually try to refrain from them if possible.

Thank you for the article.

I’m building (as a prototype) an ultra low latency video streaming channel where I need to broadcast RTP streams from a few (5..10) cameras to, say, hundreds or thousands of clients in web browsers, who can choose which of those cameras to watch (or maybe a few of them together).

As a media server I got it working with Kurento, but I’m not happy with its complexity and the effort needed to configure it, etc. I’m also looking at Janus and Jitsi, but maybe you could point me in another direction? Say, Streamedian or the like?

Appreciate your guidance.

Andrew,

I like the way Janus and Jitsi are built for such a purpose. There are others as well, such as Wowza and Red5Pro.

I believe you were referring to Red5 Pro. Thanks for the plug!

Yap – fixed 🙂

Hi Tsahi,

I have read your post, and it seems like you are talking about problems with a mesh topology. When we use an SFU, a new client only sets up one PeerConnection to the server. If we have a new viewer, there is only a new media stream from server to client, not from client to server. So the only problem may be the server bandwidth, right? Or am I missing something?

Thanks.

Tuan,

This one focuses on mesh topologies. When it comes to routed SFUs you will not have the performance issues on the browser side, but rather the varying bitrates available to each viewer as well as the total bandwidth available on the server end.

Thank you for your clarification. By the way, I really love your posts about WebRTC. So keep up your good work :))

Hello, I am thinking to build an application like periscope using react native, what media server do you recommend me?

For live broadcasting like HQ Trivia, would you recommend WebRTC over other protocols? What are some pros and cons?

That would depend a lot on your requirements Richard.

HQ Trivia is a kind of a template that gets used today in other domains to try and copy its success. The copy process usually involves minor tweaks to differentiate the new service. These tweaks may require the use of WebRTC.

– Janus has a special solution for streaming called SOLEIL. It’s based on Lorenzo’s PhD (http://www.fedoa.unina.it/10403/1/miniero_lorenzo_27.pdf) and they published some more details about it in July under “SOLEIL: Streaming Of Large-scale Events over Internet cLouds” at IEEE. It’s not open source yet, but I’m sure one can contact them about it.

– Without multiple media servers in cluster / cascade / tree / …, you won’t reach much more than 1,000 viewers.

– Beyond the upload bandwidth limitations for the publisher, the main problem to crack is handling network variations (both quantity: bandwidth, and quality: jitter, packet loss) between viewers, a.k.a. the noisy neighbour problem, when you have multiple hops between publisher and viewer. It’s a known limitation of RTP / RTCP (see V. Novotny and D. Komosny, “Large-scale rtcp feedback optimization,” Journal of Networks, Vol. 3, No. 3, March 2008), that has been solved by some (vidyo), but never in an open source implementation.

– Medooze.com is another open source WebRTC media server (toolkit) that has been used to solve this problem. It is currently used by SpankChain (spank.live) for live adult entertainment where latency is crucial for user experience (ever tried to make love with 5 seconds delay?). It has been tested up to 1 million viewers thanks to Google’s WebRTC testing tool KITE. It is at the core of millicast.com, a WebRTC-based streaming platform operated by Xirsys.

– Tsahi does a very good job, as usual, at giving the big picture. For those interested in more in-depth technical details, which compare rtmp, flash and webrtc, we wrote two blog posts:

* http://webrtcbydralex.com/index.php/2018/05/15/streaming-protocols-and-ultra-low-latency-including-webrtc/

* http://webrtcbydralex.com/index.php/2018/06/08/using-webrtc-as-a-replacement-for-rtmp/

– There will be a session at Live Streaming Summit (Huntington Beach, CA, Nov. 13~14) dedicated to those questions: “VES203: WebRTC: The Future Champion of Low Latency Video”.

Thanks for sharing this Alex.

Hi, if I take your course, will I learn how to implement a media server for one-to-many RTC?

Yes. The course explains the challenges in one-to-many scenarios and the differences between them. It also goes into the different available alternatives out there (I don’t expect you to develop a media server from scratch, but you will learn to decide which one to use and what to look for when you select a media server).

I can recommend Ant Media as a media server. It’s open source as well: https://github.com/ant-media/Ant-Media-Server

Which product to use as a WebRTC relaying server? Can be commercial.

Hello, we are looking for a WebRTC server (hosted on some paid server) for video chat (or sometimes audio only) for at least 30-5 users, that would overcome the limitations of current free solutions. I suspect that the free solutions just do peer-to-peer, so with 30 participants each participant would have to maintain 30-to-30 connections, which would saturate at least the upload bandwidth of each user’s internet connection.

So I suspect that sending ALL traffic through a central server could solve the problem. I mean that each of the 30 users sends his audio/video only once to the server, and the server sends it on to all other 29 users, so each user sends 1 stream and receives 29 streams. This would usually fit the bandwidth of home and office internet connections, as they typically have an upload bandwidth that is around 10% of the download bandwidth…

Do you think this approach could be a solution compared to free products like Skype or Discord?

If yes then would this mode be supported by any product that can be hosted on our own or some paid server?

We tried Wowza Streaming Engine. It works perfectly in the above scenario, but it loses stability (hangs, choking, wrong states) with a few dozen concurrent connections (e.g. 50 is totally too much).

Jitsi Meet is free and doesn’t do peer-to-peer. There are many other free services out there today that use media servers.

Peer-to-peer won’t scale beyond 2-4 participants, so none of the services do that.

Janus, Jitsi and mediasoup are all open source media servers for WebRTC that can be used to build such services.
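The difference between the two topologies in the question above can be put into numbers. This is a back-of-the-envelope sketch only: the 500kbps per stream is an assumption borrowed from the article, the function names are made up, and an SFU will usually forward lower-quality layers to shrink the downlink further.

```python
def mesh_load(participants: int, stream_kbps: int) -> dict:
    """Per-participant load when everyone connects directly to everyone else."""
    peers = participants - 1
    return {
        "peer_connections": peers,
        "uplink_kbps": peers * stream_kbps,    # one copy sent to each peer
        "downlink_kbps": peers * stream_kbps,  # one stream received from each peer
    }


def sfu_load(participants: int, stream_kbps: int) -> dict:
    """Per-participant load when all media is routed through a media server (SFU)."""
    return {
        "peer_connections": 1,
        "uplink_kbps": stream_kbps,                         # sent once, to the server
        "downlink_kbps": (participants - 1) * stream_kbps,  # everyone else's streams
    }


if __name__ == "__main__":
    print("mesh:", mesh_load(30, 500))
    print("sfu: ", sfu_load(30, 500))
```

With 30 participants the mesh needs 14.5Mbps of uplink per user under these assumptions, while the routed setup needs 0.5Mbps of uplink and moves the heavy lifting to the downlink and to the server.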

Dear Tsahi,

Thanks for your great post.

I have read a bunch of your posts, and every one of them is very good, from the general to the detailed.

And because there isn’t much documentation on the official WebRTC site, you’re one of my great tutors 🙂

Nice article! I am building a one-to-many broadcast type system and at first was going to just use WebRTC for the back end participants.. one or more people.. And have other peers join the “call”, but don’t use their audio or video (they are just viewers). AWS has a good offering for the STUN, TURN, and Media server.

For viewers to see the stream on their desktop, I was going to relay it and pay for the bandwidth. But now I think I can add them as fake peers, up to 500? I don’t understand where the multiplier is. One 5Mb stream is being shared by everyone.. so x number of people join, but shouldn’t it stay at 5Mb per person? I understand the slow user situation but hopefully the AWS media server can deal with him.

Is it possible to use the 500 limit of peer to peer and send overage to direct HLS link?

Tim, not sure what the scenario here is exactly.

Is it WebRTC –> WebRTC or is it WebRTC –> HLS?

First of all this is a nice article and place to discuss about WebRTC things, I have few questions and hope someone can direct me to the right direction.

I need to implement a way to broadcast video to many people and also get them all into single audio conference. Currently doing video using RTMP and have more than 15 seconds delay.

With the current configuration if I somehow manage to create audio conference that’ll not sync with the broadcaster’s audio.

Broadcasting with WebRTC isn’t simple. Depending on the size of the conference, different alternatives exist – from using FreeSWITCH as a conferencing bridge that mixes audio, through using open source SFUs, to using CPaaS vendors.

Hi Tsahi,

It has been a while now… Can Kurento be used for 1 to many live streaming purposes? If not, what do you suggest in that scenario?

Depends how much “live” it needs to be.

For broadcast, I’d probably go with Janus or mediasoup in open source. Red5 Pro, Ant Media, millicast and others offer a commercial solution – sometimes self hosted and sometimes cloud managed. Agora is also in this space as a CPaaS vendor, as is LiveSwitch Cloud.

If you’re fine with a bit of latency then just send it via CDN and use HLS and, in the near future, LL-HLS.

Hi Tsahi – I’ve been reading through all your WebRTC blogs and working through various codelabs. I’m a musician trying to set up a WebRTC live stream 1-to-many from my studio to my website where the audience can watch via the browser. I’ve gotten it working with OpenTok until we ran into a config error (likely for a Nancy engine that isn’t currently maintained, but 52 tech support emails back and forth couldn’t resolve it) and also on a start-up, Frozen Mountain, but had repeated audio quality issues and some audio-video lag due to differences in codec application (Millicast is WAY beyond my budget and I’ve not been able to get it working with either Red5 or Ant). So now I am simply trying to run a Node.js server on my local machine and use Heroku/Janus to distribute to the website itself. Can you recommend a code tutorial for the specifics of the Janus configuration, specifically in terms of pushing the stream from my machine to the Janus network and subsequently to the website html/js? I’m in some deep water relative to my coding ability.

Thanks so much!

Nothing specific I am afraid.

Try looking at the Janus community group. I am afraid that at the end of the day what you lack is WebRTC knowledge and experience… I can recommend my courses on webrtccourse.com – they should give you the baseline of understanding but I get a feeling that you’re looking for a quick fix and a specific solution and less the background and building blocks knowhow.

Hello Tsahi

Using Ant Media Server with WebRTC, is it possible to publish a stream while playing another stream?

Vishal, best to direct such questions to Ant Media.