Another disruptive WebRTC experiment in Chrome to become reality.

I’ve had clients approaching me in the past month or two with questions about a new type of address cropping up as ICE candidates. As it so happens, these new candidates have caused some broken experiences.

In this article, I’ll try to untangle how local ICE candidates work, what mDNS is, how it is used in WebRTC, why it breaks WebRTC and how this could have been handled better.

How local ICE candidates work in WebRTC?

Before we go into mDNS, let’s start with understanding why we’re headed there with WebRTC.

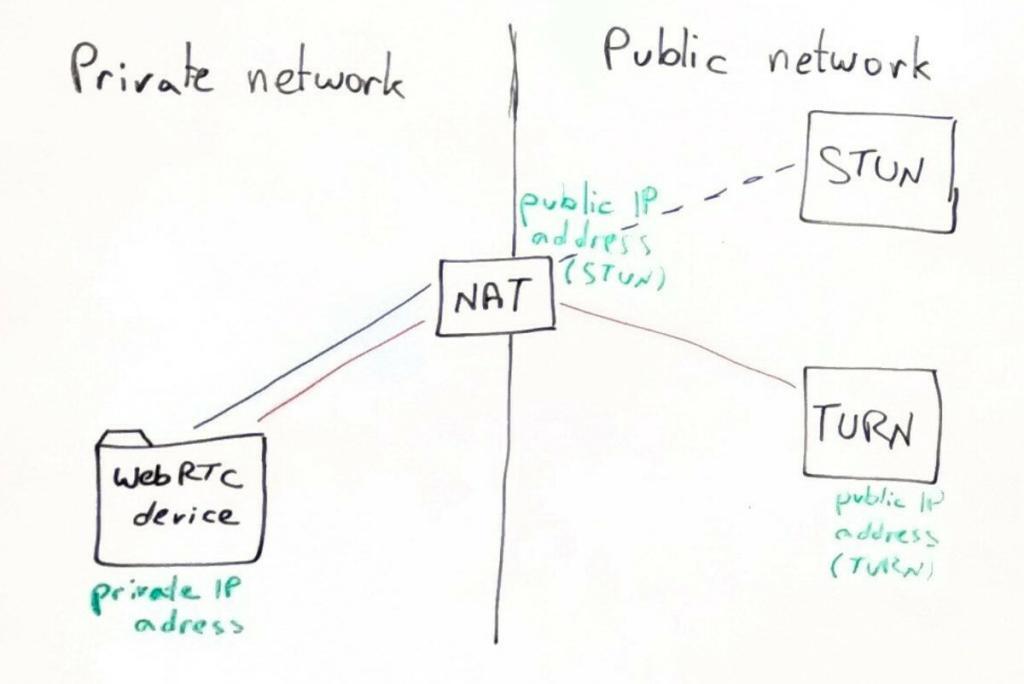

When trying to connect a session over WebRTC, there are 3 types of addresses that a WebRTC client tries to negotiate:

- Local IP addresses

- Public IP addresses, found through STUN servers

- Public IP addresses, allocated on TURN servers

During the ICE negotiation process, your browser (or app) will contact its configured STUN and TURN servers, asking them for addresses. It will also check with the operating system what local IP addresses it has at its disposal.

Why do we need a local IP address?

If both machines that need to connect to each other using WebRTC sit within the same private network, then there’s no need for the communication to leave the local network either.

Why do we need a public IP address through STUN?

If the machines are on different networks, then by punching a hole through the NAT/firewall, we might be able to use the public IP address that gets allocated to our machine to communicate with the remote peer.

Why do we need a public IP address on a TURN server?

If all else fails, then we need to relay our media through a “third party”. That third party is a TURN server.
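To make the three address types concrete: in SDP they show up as candidate lines whose `typ` field is `host` (local address), `srflx` (public address learned through STUN) or `relay` (address allocated on a TURN server). Below is a minimal TypeScript sketch that reads that field. The function and type names are my own for illustration, and the parser only looks at the leading fields of the line, so treat it as a sketch rather than a complete ICE parser.

```typescript
// Candidate types as they appear after the "typ" keyword in a candidate line.
// "prflx" (peer reflexive) is discovered during connectivity checks.
type CandidateType = "host" | "srflx" | "prflx" | "relay";

const CANDIDATE_TYPES: readonly CandidateType[] = ["host", "srflx", "prflx", "relay"];

interface ParsedCandidate {
  address: string;
  port: number;
  type: CandidateType;
}

// Parses "a=candidate:..." (SDP) or "candidate:..." (RTCIceCandidate.candidate).
// Field order: foundation component transport priority address port "typ" type
function parseCandidateLine(line: string): ParsedCandidate | null {
  const trimmed = line.trim().replace(/^a=/, "");
  if (!trimmed.startsWith("candidate:")) return null;

  const [, , , , address, portText, typKeyword, typeText] = trimmed.split(/\s+/);
  if (address === undefined || portText === undefined || typKeyword !== "typ") return null;

  const type = CANDIDATE_TYPES.find((t) => t === typeText);
  const port = Number(portText);
  if (type === undefined || !Number.isInteger(port)) return null;

  // The address is kept as an opaque string on purpose: it is not always an IP.
  return { address, port, type };
}
```

Feeding it `a=candidate:1 1 udp 2122260223 192.168.1.10 54321 typ host` yields a `host` candidate with address `192.168.1.10`, which is exactly the local IP address this article is about.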

Local IP addresses as a privacy risk

That part of sharing local IP addresses? Can really improve things in getting calls connected.

It is also something that is widely used and common in VoIP services. The difference though is that VoIP services that aren’t WebRTC and don’t run in the browsers are a bit harder to hack or abuse. They need to be installed first.

WebRTC gives web developers “superpowers” in knowing your local IP address. That scares privacy advocates who see this as a breach of privacy and even gave it the name “WebRTC Leak”.

A few things about that:

- Any application running on your device knows your IP address and can report it back to someone

- Only WebRTC (as far as I know) gives the ability to know your local IP addresses in the JavaScript code running inside the browser (Flash had that also, but it is now quite dead)

- People using VPNs assume the VPN takes care of that (browsers do offer mechanisms to remove local IP addresses), but VPNs sometimes fail to add WebRTC support properly

- Local IP addresses can be used by JavaScript developers for things like fingerprinting users or deciding if there’s a browser bot or a real human looking at the page, though there are better ways of doing these things

- There is no security risk here. Just privacy risk – leaking a local IP address. How much risk does that entail? I don’t really know

Is WebRTC being abused to harvest local IP addresses?

Yes, we have known that problem ever since the NY Times used a WebRTC-based script to gather IP addresses back in 2015. “WebRTC IP leak” is one of the most common search terms (SEO hacking at its best).

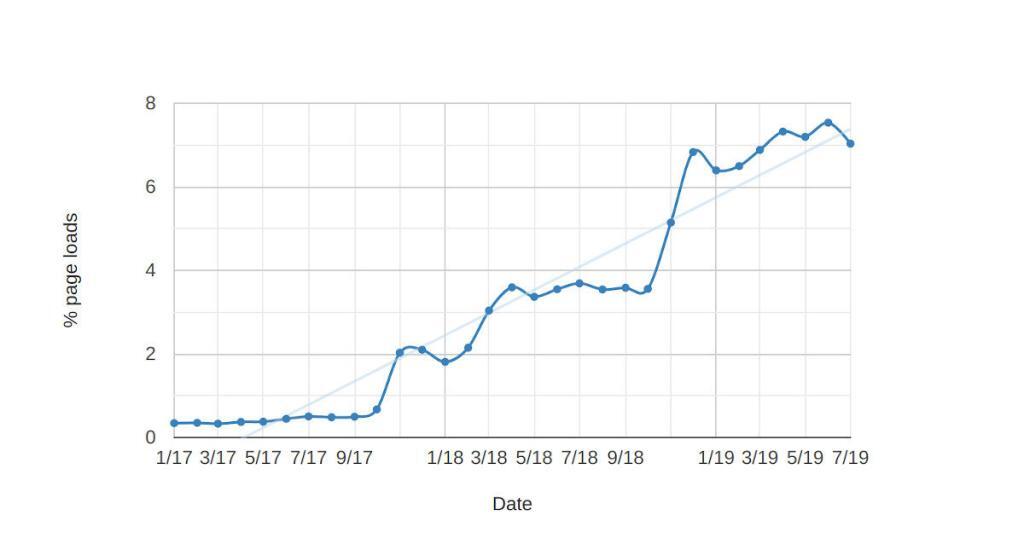

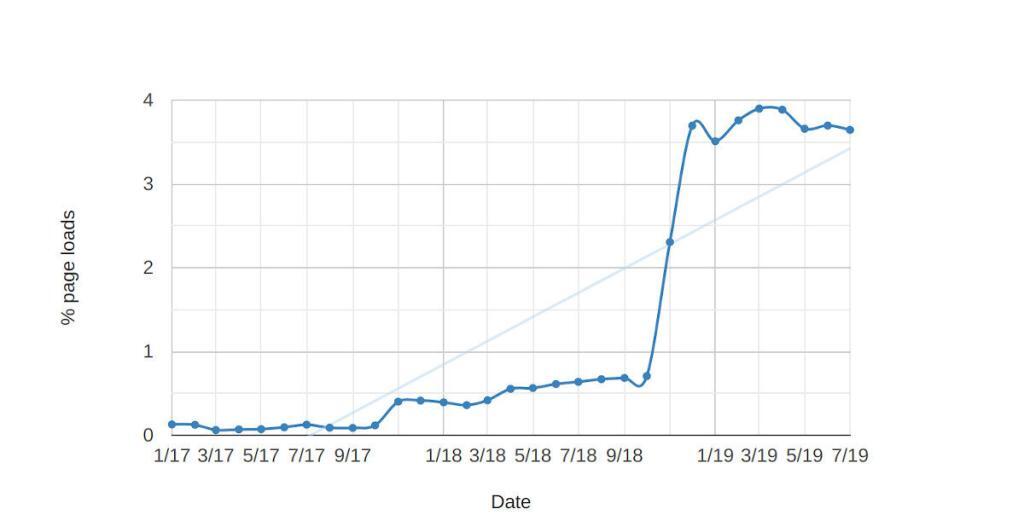

Luckily for us, Google is collecting anonymous usage statistics from Chrome, making the information available through a public chromestatus metrics site. We can use that to see what percentage of the page loads WebRTC is used. The numbers are quite… big:

RTCPeerConnection calls on % of Chrome page loads (see here)

Currently, 8% of page loads create an RTCPeerConnection. 8%. That is quite a bit. We can see two large increases, one in early 2018 when 4% of pageloads used RTCPeerConnection and then another jump in November to 8%.

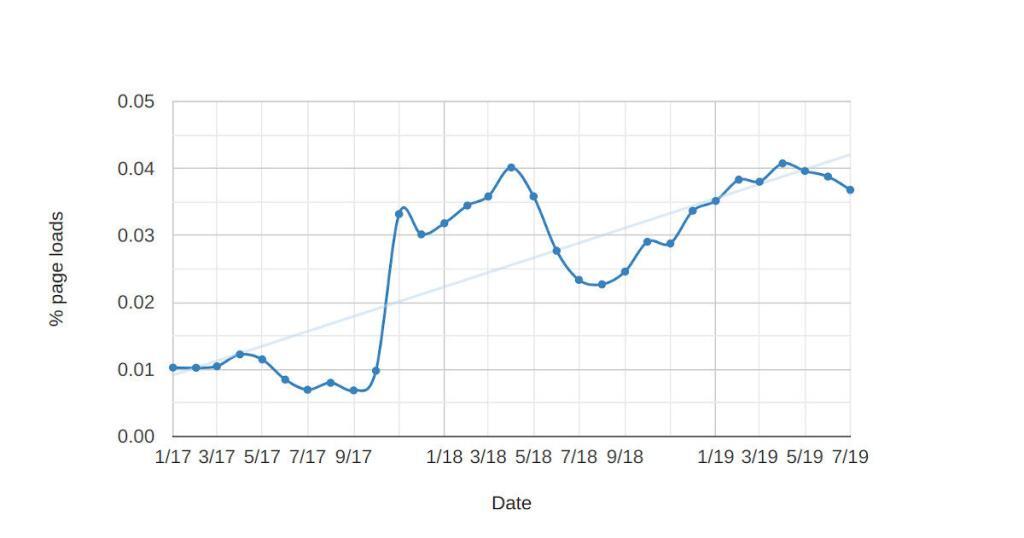

Now that just means RTCPeerConnection is used. In order to gather local IPs the setLocalDescription call is required. There are statistics for this one as well:

setLocalDescription calls on % of Chrome page loads (see here)

The numbers here are significantly lower than for the constructor. This means a lot of peer connections are constructed but not used. It is somewhat unclear why this happens. We can see a really big increase in November 2018 to 4%, at about the same time that RTCPeerConnection calls jumped to 7-8%. While it makes no sense, this is what we have to work with.

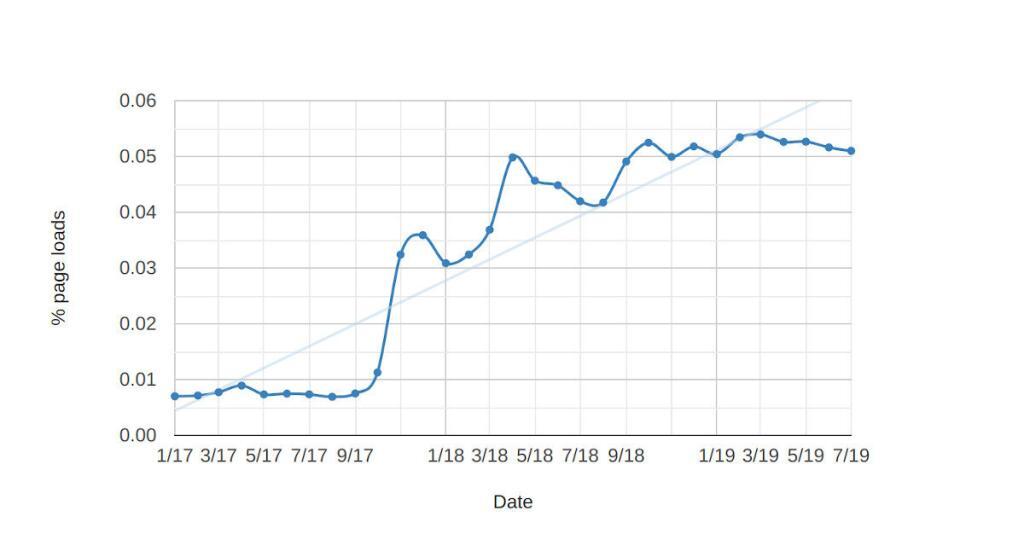

Now, WebRTC could be used legitimately to establish a peer-to-peer connection. For that we need both setLocalDescription and setRemoteDescription and we have statistics for the latter as well:

setRemoteDescription calls on % of Chrome page loads (see here)

Since the big jump in late 2017 (which is explained by a different way of gathering data) the usage of setRemoteDescription hovers between 0.03% and 0.04% of pageloads. That’s close to 1% of the pages a peer connection is actually created on.

We can get another idea about how popular WebRTC is from the getUserMedia statistics:

getUserMedia calls on % of Chrome page loads (see here)

This is consistently around 0.05% of pageloads. A bit more than RTCPeerConnection being used to actually open a session (that setRemoteDescription graph) but there are use-cases such as taking a photo which do not require WebRTC.

Here’s what we’ve arrived at, assuming the metrics collection of chromestats reflects real usage behavior. We have 0.04% of pageloads compared to 4%. Assuming the numbers and the math are correct, a considerable percentage of RTCPeerConnections are potentially used for a purpose other than what WebRTC was designed for. That is a problem that needs to be solved.
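The arithmetic behind that comparison is short enough to spell out. This is a back-of-the-envelope sketch using the approximate chart readings quoted above (4% for setLocalDescription, 0.04% for setRemoteDescription). It assumes both figures are measured over the same set of page loads, and the function name is mine.

```typescript
// Approximate chart readings quoted in the text, as % of Chrome page loads.
const SET_LOCAL_DESCRIPTION_PCT = 4;
const SET_REMOTE_DESCRIPTION_PCT = 0.04;

// Fraction of page loads calling setLocalDescription that also call
// setRemoteDescription, i.e. that could actually be setting up a session.
function sessionShare(localPct: number, remotePct: number): number {
  if (localPct <= 0) throw new RangeError("localPct must be positive");
  return remotePct / localPct;
}

// Roughly 0.01: about 1 in 100 of those page loads goes on to open a session.
const share = sessionShare(SET_LOCAL_DESCRIPTION_PCT, SET_REMOTE_DESCRIPTION_PCT);
```

Put differently, under these assumptions about 99 out of every 100 page loads that gather local candidates never go on to open a session.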

* credits and thanks to Philipp Hancke for assisting in collecting and analyzing the chromestats metrics

What is mDNS?

Switching to a different topic before we go back to WebRTC leaks and local IP addresses.

mDNS stands for Multicast DNS. It is defined in IETF RFC 6762.

mDNS is meant to deal with having names for machines on local networks without needing to register them on DNS servers. This is especially useful when there are no DNS servers you can control – think of a home with a couple of devices that need to interact locally without going to the internet – Chromecast and network printers are some good examples. What we want is something lightweight that requires no administration to make that magic work.

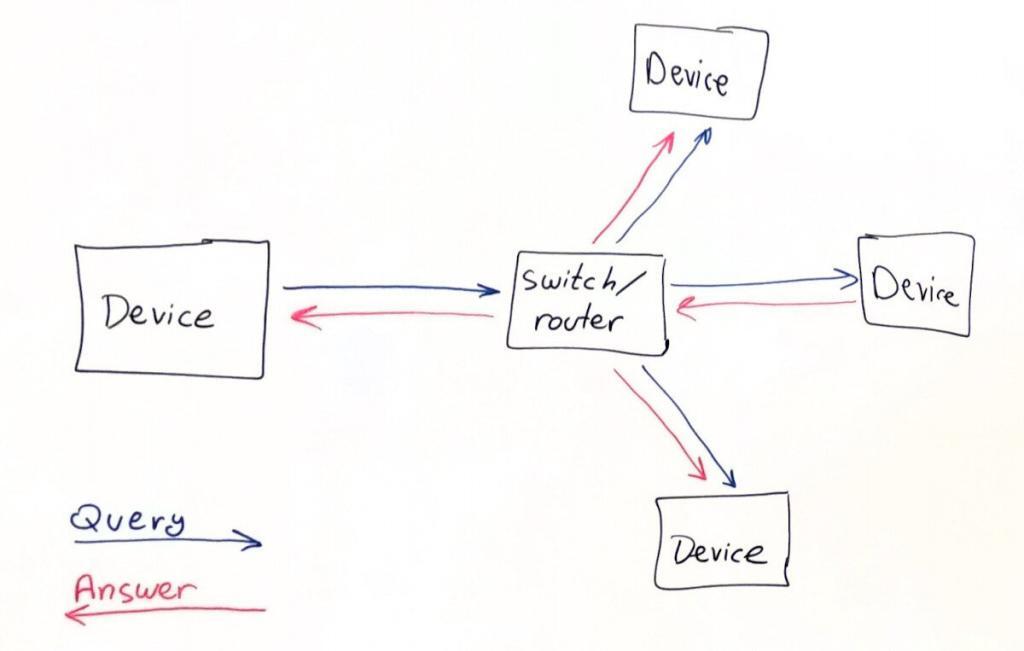

And how does it work exactly? In a similar fashion to DNS itself, just without any global registration – no DNS server.

At its most basic, when a machine wants to know the IP address within the local network of a device with a given name (let’s say tsahi-laptop), it will send out an mDNS query on a known multicast IP address (exact address and stuff can be found in the spec) with a request to find “tsahi-laptop.local”. There’s a separate registration mechanism whereby devices can register their mDNS names by announcing them within the local network.

Since the request is sent over a multicast address, all machines within the local network receive it. The machine with that name (probably my laptop, assuming it supports mDNS and is discoverable in the local network) will respond with its IP address, doing that also over multicast.

That means that all machines in the local network heard the response and can now cache that fact – what is the IP address on the local network for a machine called tsahi-laptop.
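The query, response and caching flow can be modelled in a few lines. What follows is a toy in-memory simulation in TypeScript, not a real mDNS implementation: nothing is sent on the wire (real mDNS uses UDP port 5353 on the multicast group 224.0.0.251 for IPv4), and the class names `ToyHost` and `ToyLocalNetwork` are invented for this illustration.

```typescript
class ToyHost {
  readonly name: string;
  readonly ip: string;
  // Names this host has learned from responses it overheard.
  readonly cache = new Map<string, string>();

  constructor(name: string, ip: string) {
    this.name = name;
    this.ip = ip;
  }
}

class ToyLocalNetwork {
  private readonly hosts: ToyHost[] = [];

  // Joining stands in for a device announcing its name on the local network.
  join(host: ToyHost): void {
    this.hosts.push(host);
  }

  // A "multicast" query: every host sees it and only the owner of the name
  // answers. The answer is multicast too, so every host caches it.
  query(name: string): string | undefined {
    const owner = this.hosts.find((h) => h.name === name);
    if (owner === undefined) return undefined; // nobody answers

    for (const h of this.hosts) {
      h.cache.set(name, owner.ip);
    }
    return owner.ip;
  }
}
```

After one host asks for `tsahi-laptop.local`, every host in the toy network, not only the one that asked, ends up with the answer in its cache. A name that nobody owns simply gets no answer.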

How is mDNS used in WebRTC?

Back to that WebRTC leak and how mDNS can help us.

Why do we need local IP addresses? So that sessions that need to take place in a local network don’t need to use public IP addresses. This makes routing a lot simpler and more efficient in such cases.

But we also don’t want to share these local IP addresses with the JavaScript application running in the browser. That would be considered a breach of privacy.

Which is why mDNS was suggested as a solution. There is a new IETF draft known as draft-ietf-rtcweb-mdns-ice-candidates-03. The authors behind it? Developers at both Apple and Google.

The reason for it? Fixing the longstanding complaint about WebRTC leaking out IP addresses. From its abstract:

WebRTC applications collect ICE candidates as part of the process of creating peer-to-peer connections. To maximize the probability of a direct peer-to-peer connection, client private IP addresses are included in this candidate collection. However, disclosure of these addresses has privacy implications. This document describes a way to share local IP addresses with other clients while preserving client privacy. This is achieved by concealing IP addresses with dynamically generated Multicast DNS (mDNS) names.

How does this work?

Assuming WebRTC needs to share a local IP address which it deduces is private, it will use an mDNS address for it instead. If there is no mDNS address for it, it will generate and register a random one with the local network. That random mDNS name will then be used as a replacement of the local IP address in all SDP and ICE message negotiations.

The result?

- The local IP address isn’t exposed to the JavaScript code of the application. The receiver of such an mDNS address can perform a lookup on their local network and deduce the local IP address from there, but only if the device is within the same local network

- A positive side effect is that now, the local IP address isn’t exposed to media, signaling and other servers either. Just the mDNS name is known to them. This reduces the level of trust needed to connect two devices via WebRTC even further
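The replacement step described above can be sketched as follows. This is a rough TypeScript illustration, not Chrome’s implementation: the names `HostAddressConcealer`, `isPrivateIpv4` and `randomMdnsName` are hypothetical, only IPv4 host candidates are handled, the random label is generated with `Math.random` (not suitable for real use), and nothing is actually registered on the network.

```typescript
// RFC 1918 private IPv4 ranges: 10/8, 172.16/12 and 192.168/16.
function isPrivateIpv4(address: string): boolean {
  const m = /^(\d{1,3})\.(\d{1,3})\.(\d{1,3})\.(\d{1,3})$/.exec(address);
  if (m === null) return false;
  const a = Number(m[1]);
  const b = Number(m[2]);
  return a === 10 || (a === 172 && b >= 16 && b <= 31) || (a === 192 && b === 168);
}

// Illustrative only: a UUID-shaped random label ending in ".local".
function randomMdnsName(): string {
  const hex = (n: number): string =>
    Array.from({ length: n }, () => Math.floor(Math.random() * 16).toString(16)).join("");
  return `${hex(8)}-${hex(4)}-${hex(4)}-${hex(4)}-${hex(12)}.local`;
}

class HostAddressConcealer {
  private readonly names = new Map<string, string>();

  // Reuse the name already generated for an address, otherwise create one.
  nameFor(address: string): string {
    let name = this.names.get(address);
    if (name === undefined) {
      name = randomMdnsName();
      this.names.set(address, name); // a real browser would also register it via mDNS
    }
    return name;
  }

  // Swap the address field of a private host candidate for its mDNS name.
  // Field order: foundation component transport priority address port "typ" type
  conceal(candidateLine: string): string {
    const fields = candidateLine.split(" ");
    const address = fields[4];
    if (address !== undefined && fields[7] === "host" && isPrivateIpv4(address)) {
      fields[4] = this.nameFor(address);
    }
    return fields.join(" ");
  }
}
```

A host candidate for `192.168.1.10` comes out with a random `.local` name in the address field, while server reflexive and relay candidates pass through untouched because their addresses are public anyway.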

Why this breaks WebRTC applications?

Here’s the rub though. mDNS breaks some WebRTC implementations.

mDNS is supposed to be innocuous:

- It uses a top-level domain name of its own (.local) that shouldn’t be used elsewhere anyway

- mDNS is sent over multicast, on its own dedicated IP and port, so it is limited to its own closed world

- If the mDNS name (tsahi-laptop.local) is processed by a DNS server, it just won’t find it and that will be the end of it

- It doesn’t leave the world of the local network

- It is shared in places where one wants to share DNS names

With WebRTC though, mDNS names are shared instead of IP addresses. And they are sent over the public network, inside a protocol that expects to receive only IP addresses and not DNS names.

The result? Questions like this recent one on discuss-webrtc:

Weird address format in c= line from browser

I am getting an offer SDP from browser with a connection line as such:

c=IN IP4 3db1cebd-e606-4dc1-b561-e0af5b4fd327.local

This is causing trouble in a webrtc server that we have since the parser is bad (it is expecting a normal ipv4 address format)

[…]

This isn’t a singular occurrence. I’ve had multiple clients approach me with similar complaints.

What happens here, and in many other cases, is that the IP addresses that are expected to be in SDP messages are replaced with mDNS names – instead of x.x.x.x:yyyy the servers receive <random-ugly-something>.local and the parsing of that information is totally different.

This applies to all types of media servers – the common SFU media server used for group video calls, gateways to other systems, PBX products, recording servers, etc.

Some of these have already been updated to support mDNS addresses inside ICE candidates. Others probably haven’t, like the recent one above. More importantly, many deployments run by teams that don’t want, need or care to upgrade their server software frequently are now broken as well, and will need to be upgraded.
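For servers with a home-grown SDP parser, the first step is usually to stop assuming that the address field is a dotted IPv4 address. Here is a minimal TypeScript sketch of a more tolerant approach. The names `classifyAddress` and `connectionAddress` are hypothetical, and the IPv6 check is deliberately coarse. Adapt it to whatever your own stack expects.

```typescript
type AddressKind = "ipv4" | "ipv6" | "mdns" | "hostname";

// Decide what kind of address we were handed instead of assuming IPv4.
function classifyAddress(address: string): AddressKind {
  const parts = address.split(".");
  const looksLikeIpv4 =
    parts.length === 4 && parts.every((p) => /^\d{1,3}$/.test(p) && Number(p) <= 255);
  if (looksLikeIpv4) return "ipv4";
  if (address.includes(":")) return "ipv6"; // coarse check, good enough for routing the decision
  if (address.toLowerCase().endsWith(".local")) return "mdns";
  return "hostname";
}

// Pull the address out of an SDP connection line such as "c=IN IP4 <address>".
function connectionAddress(line: string): string | null {
  const m = /^c=IN IP[46] (\S+)/.exec(line.trim());
  return m === null ? null : (m[1] ?? null);
}
```

With this in place, the `c=` line quoted above classifies as `mdns` instead of raising a parse error. A server that cannot resolve `.local` names can then choose to skip that address and carry on with the rest of the negotiation, rather than rejecting the whole session description.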

Could Google have handled this better?

In January, Google announced on discuss-webrtc this new experiment. More importantly, it stated that:

No application code is affected by this feature, so there are no actions for developers with regard to this experiment.

Within a week, it got this in a reply:

As it stands right now, most ICE libraries will fail to parse a session description with FQDN in the candidate address and will fail to negotiate.

More importantly, current experiment does not work with anything except Chrome due to c= line population error. It would break on the basic session setup with Firefox. I would assume at least some testing should be attempted before releasing something as “experiment” to the public. I understand the purpose of this experiment, but since it was released without testing, all we got as a result are guaranteed failures whenever it is enabled.

The interesting discussion that ensued for some reason focused on how people interpret the various DNS and ICE related standards and whether libnice (an open source implementation of ICE) breaks or doesn’t break due to mDNS.

But it failed to encompass the much bigger issue – developers were somehow expected to write their code in a way that won’t break the introduction of mDNS in WebRTC – without even being aware that this is going to happen at some point in the future.

Ignoring that fact, Google has been running mDNS as an experiment for a few Chrome releases already. As an experiment, two things were decided:

- It runs almost “randomly” on Chrome browsers of users without any real control of the user or the service that this is happening (not something automated and obvious at least)

- It was added only when local IP addresses had to be shared and no permissions for the camera or microphone were asked for (receive only scenarios)

The bigger issue here is that many view only solutions of WebRTC are developed and deployed by people who aren’t “in the know” when it comes to WebRTC. They know the standard, they may know how to implement with it, but most times, they don’t roam the discuss-webrtc mailing list and their names and faces aren’t known within the tight knit of the WebRTC community. They have no voice in front of those that make such decisions.

In that same thread discussion, Google also shared the following statement:

FWIW, we are also considering to add an option to let user force this feature on regardless of getUserMedia permissions.

Mind you – that statement was a one liner inside a forum discussion thread, from a person who didn’t identify in his message with a title or the fact that he speaks for Google and is a decision maker.

Which is the reason I sat down to write this article.

mDNS is GREAT. AWESOME. Really. It is simple, elegant and gets the job done better than any other solution people would come up with. But it is a breaking change. And that is a fact that seems to be lost on Google for some reason.

By enforcing mDNS addresses on all local IP addresses (which is a very good thing to do), Chrome will undoubtedly break a lot of services out there. Most of them might be small, and not part of the small minority that makes up the billion-minutes club.

Google needs to be a lot more transparent and public about such a change. This is by no means a singular case.

Just digging into what mDNS is, how it affects WebRTC negotiation and what might break took me time. The initial messages about an mDNS experiment are just not enough to get people to do anything about it. Google did a way better job with their explanation about the migration from Plan B to Unified Plan as well as the ensuing changes in getStats().

My main worry is that this type of transparency doesn’t happen as part of a planned rollout program. It is done ad-hoc with each initiative finding its own creative solution to convey the changes to the ecosystem.

This just isn’t enough.

WebRTC is huge today. Many businesses rely on it. It should be treated as the mission critical system that the developers who use it consider it to be.

It is time for Google to step up its game here and put the mechanisms in place for that.

What should you do as a developer?

First? Go check if mDNS breaks your app. You can enable this functionality on chrome://flags/#enable-webrtc-hide-local-ips-with-mdns

Next? Understand WebRTC security and privacy.

In the long run? My best suggestion would be to follow messages coming out of Google in discuss-webrtc about their implementation of WebRTC. To actively read them. Read the replies and discussions that take place around them. To understand what they mean. And to engage in that conversation instead of silently reading the threads.

Test your applications on the beta and Canary releases of Chrome. Collect WebRTC behavior related metrics from your deployment to find unexpected changes there.

Apart from that? Nothing much you can do.

As for mDNS, it is a great improvement. I’ll be adding a snippet explanation about it to my WebRTC Tools course, something new that will be added next month to the WebRTC Course. Stay tuned!

I think It’s indeed a bug in implementing by parser developers or may be Google team.

c=IN IP4 X.X.X.X

This expect IP4 only.

Otherwise it has to be replaced with c= IN IP6 I guess, or mDNS.

What do you think?

Let me clarify first: Non-getUserMedia is not only “receive only media” but also “data channels only”.

I see another issue with the current approach: mDNS does not work in segmented networks, which is absolutely normal even in small offices. This means that there are scenarios where you formerly would have been able to establish a connection within the same network, where you now fall back to server reflexive and relay candidates. Even worse, NAT hairpinning support is not that common in which case data is being routed via a TURN server. This will almost always have significant bandwidth and latency impairments. And use cases that cannot use getUserMedia have no way to break out of it.

Thus, the current way mDNS is being introduced by Google is not only an interop issue but also weakens the user experience for some use cases. In addition, it further discriminates against receive only media and data only use cases, which were already discriminated against by being limited to IP handling mode 2.

Lennart – as always, thanks for the correction and insights 🙂

I presume mDNS value does not change from one session to another. If that is so why can’t that be used for fingerprinting? It looks like it may be even unique among a large group of devices.

Or does the browser use a session specific value and broadcasts that?

I am not sure if it doesn’t change or when it changes. As for fingerprinting, there are so many other better and easier ways to fingerprint today that I don’t see this as a real issue. The main concern that was addressed here was the ability to find a local IP address of the device in JS code.

I was confused here.

Will an application that uses only DataChannel now have to successfully request access to a camera or microphone (or both)?

And if an application that uses only DataChannel does not request media access, would it now be “broken” outside of Chrome?

How would DataChannels that do not request media access be treated with this change? Is there an official position on this?

Data Channels are affected in the same way as anything else (assuming Google decides to roll out mDNS everywhere in WebRTC).

You don’t need to call getUserMedia, but the local IP address that gets shared will be translated to an mDNS name before being shared with the application code or over the network.

Hi Tsahi,

nice article.

Do you have any estimation when will mDNS local candidates be adopted by browsers?

Nope. Don’t think there is a time estimation for this one yet.

I see that there is no estimate for mDNS local candidates to be the default in Chrome. Any gut feel whether the change is this year or the next?

My gut feel? It happens this year.

Does it matter? No it doesn’t.

Google is already running this as an experiment. Companies running their services in production over the internet already see the .local candidates in their SDP messages (and sometimes this causes them failures as well).

My suggestion – look at it as if it already shipped and go to crisis mode to fix it. Not tomorrow. Yesterday.

Browser actually is supposed to allocate and broadcast a new mDNS name for each browser session. This means new random name is used for each session which should prevent fingerprinting.

P.S. Thank you for quoting me in the article.

🙂

Thanks for the clarification, Roman

You know what is interesting? Even after this blog post there is absolutely no “official” update that this is rolling out in M75 (even though the interop problem is now labelled as a release blocker)

Business as usual sadly.

It’s true that mDNS would now fail on segmented networks. However, many deployments that use segmented local networks also enable an mDNS proxy (such as Avahi), so that printers, AppleTVs/Chromecast and other link-local services are available outside the local subnet. In this scenario, even with segmented networks, local connections will still work. mDNS proxies have become quite widespread: most WiFi systems have one (because sometimes a WiFi client is assigned a different subnet than services like printers, so it is basically necessary to have this feature), and many firewalls do too.

This is something Google should definitely handle better:

Here is what I experienced in the past few days that I really cannot relate to “No application code is affected by this feature, so there are no actions for developers with regard to this experiment.”:

publisher only (chrome) —> SFU (ICE stack based on libnice)

publisher send offer, SFU send answer. SFU is faster on sending candidates back to chrome.

Chrome tries to connect to our SFU and succeeded in that, so it only send a mdns.local candidate to SFU.

this candidate is not already supported by libnice, which drops that candidate.

Chrome sees the peerconnection in Connected state, so it doesn’t bother to send other candidates to our SFU.

But for libnice, no remote candidates are received since the only mdns.local was dropped.

This prevents libnice from starting its own connectivity check (by design; there are a few proposals to handle these mdns candidates), so for our SFU, no clients are currently “connected”, even if Chrome is indeed in connected state.

So my current workaround is to “fake” a remote candidate when a .local is received and I wasn’t able to resolve it, to force libnice to start connectivity check and let him “know” that a remote peer is already connected, so it calls a callback and our codebase can start working.

For sure there is some work to do by libnice to handle this, but I think chrome should always send at least one “stun” or “relay” candidate alongside with the local one to ensure nothing breaks on current SFU implementations.

And this came out of the blue. Not nice Google

Francesco, this is why I wrote this article.

That said, the best place to write such comments is the discuss-webrtc mailing list – it is where Google engineers will actually be looking for such things.

* It was a subscriber only, so no GUM was accepted, sorry

What do you mean by: “It runs almost “randomly” on Chrome browsers”? Is there any way to know when this feature is enabled? I am making some tests and it seems to be absolutely unpredictable, even in the same session.

Daniel,

I am not sure about predictability within the same session or same browser. The experiment runs in a way that a small population of those running a Chrome browser would use mDNS while others won’t. While you can force a browser to act as if he is part of the experiment, doing the opposite isn’t possible as far as I am aware – and it is definitely impossible from the side of the server.

Hey! Thanks for sharing this. It was very helpful. I have a question. In recent versions of Firefox we have the same problem, but I couldn’t find a setting like the one that exists in Chrome. Do you have any idea of what we can do please?

What do you mean by “the same problem”? It shares mDNS addresses for local candidates?

The control in Firefox which re-enables including LAN IP addresses in SDPs is:

1. In a Firefox tab, enter URL “about:config”.

2. Search for field “media.peerconnection.ice.obfuscate_host_addresses”.

3. Change from TRUE to FALSE.

BlogGeek,

WebRTC question. How do I initiate a video chat using WebRTC on my phone if I don’t know the recipient’s IP address? I only have his phone number.

It seems that not knowing the recipient’s private IP addresses will limit WebRTC adoption.

Bob

Bob,

Signaling is out of scope of WebRTC. If you only have a phone number then you can’t use WebRTC. Usually services have their users either registered or access specific designated “rooms” where they can reach out to each other or meet. It is up to the application using WebRTC to figure this part out.

Unusable "SEO" article. Million words for sale course… Meh