Water and oil? WebRTC and VoIP.

Let’s start with this:

WebRTC is VoIP

That said, it is different from VoIP in the most important of ways:

- In the ways entrepreneurs make use of it to bring their ideas to life

- In the ways developers wield it to build applications

Why is that?

Because WebRTC lends itself to two very different worlds, all running over the Internet: The World Wide Web. And VoIP.

And these two worlds? They don’t mix much. Besides the fact that they both run over IP, there’s not a lot of resemblance between them. Well, that and the fact that both SIP and HTTP have a 200 OK message.

If you have ever developed anything in the world of VoIP, then you know how calls get connected. You’re all about ring tones and the many features that comprise a Class 5 softswitch. The truth of the matter is that this kind of knowledge can often be your undoing when it comes to WebRTC.

Here are 10 major differences between developing with WebRTC and developing with VoIP:

#1 – You are No Longer in Control

With VoIP, life was simple. All pieces of the solution were yours.

The server, the clients, whatever.

When something didn’t work, you’d go in, analyze it, fix the relevant piece of software, and be done with it.

WebRTC is different.

You’ve got this nagging thing known as the “browser”.

4 of them.

And they change. And update. A lot.

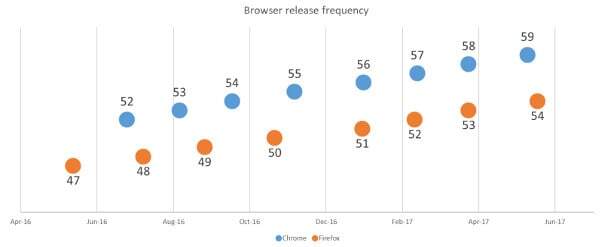

Here’s what happened in the past year with Chrome and Firefox:

A version every 6-8 weeks. For each of them.

And these versions? They tend to change how the browsers behave when it comes to WebRTC. These changes may cause services to falter.

These changes mean that:

- You are not in control of all the software running your service

- You are not in control of when pieces of your deployment get upgraded (browsers will upgrade without you having a say in it)

VoIP doesn’t work this way.

You develop, integrate, deploy and then you decide when to upgrade or modify things. With WebRTC that isn’t the case any longer.

You must continuously test against future browser versions (beta, unstable, Canary and nightly should become part of your vocabulary). You need to have the means to easily and quickly upgrade a production service – at runtime. And be prepared to do it rather frequently.

#2 – JavaScript is King in WebRTC

My pedigree comes from VoIP.

I am a VoIP developer.

I did development, project management and product management, and then served as the CTO of a business unit that developed VoIP software SDKs that were used (and are still used) in many communication products.

I am a great developer. Really. One of the best I know. At least when it comes to coding in C.

VoIP was traditionally developed in C/C++ and Java.

I know my way around JavaScript, but I am by no means even an average developer in it. My guess is that a lot of VoIP engineers have a background similar to mine.

WebRTC is all about JavaScript.

In WebRTC, JavaScript is King

Yes. WebRTC has a JavaScript API. But that’s half the story. Many of the backend systems written for use with WebRTC end up using Node.js. Which uses JavaScript.

WebRTC isn’t limited to JavaScript. There are systems written in C, Java, Python, C#, Erlang, Dart and even PHP that are used. There are .NET systems. On mobile, native apps use Objective-C, Swift or Java in their implementations of client-side WebRTC SDKs.

But the majority? That’s JavaScript.

Three main reasons I can see for it:

- Fashion. Node.js is fashionable and new. WebRTC is also new, so there’s a fit

- Asynchronous. The signaling in WebRTC needs to be snappy and interactive. It needs to have a backend that can fit nicely with its model of asynchronous interactions and interfaces. Node.js offers just that and makes it easier to think of signaling on the frontend and backend at the same time. Which leads us to the third and probably most important reason –

- JavaScript. You use it in the frontend and backend. It is easier for developers to use a single language for both, and easier to shift bits and pieces of code from one side to the other if and when needed

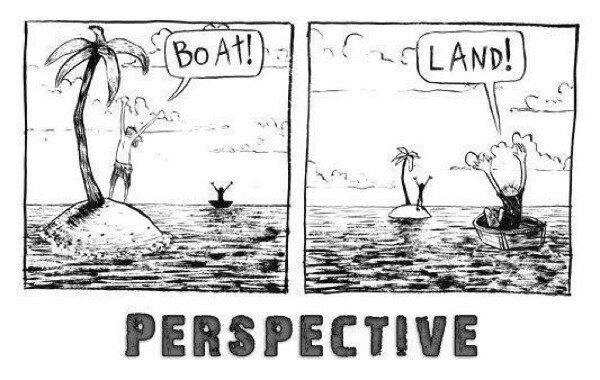

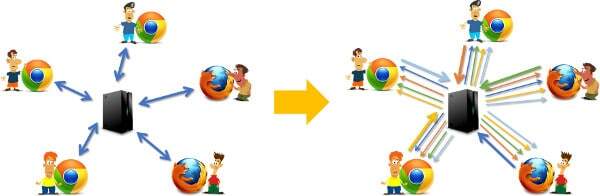

#3 – A Big Island: WebRTC vs. VoIP

VoIP is all about interoperability. A big happy family of vendors. All collaborating and cooperating. The idea is that if you purchase a phone from one vendor, you *should* be able to dial another vendor’s phone with it via a third vendor’s PBX. It works. Sometimes. And it requires a lot of effort in interoperability testing and tweaking. An ongoing arduous task. The end result though is a system where you end up testing a small set of vendors that are approved to work within a certain deployment.

VoIP and interoperability abhor the idea of islands: different communication services that can’t connect to each other.

WebRTC is rather different. You no longer build one VoIP product or device that is designed to communicate with VoIP devices of other vendors. You build the whole shebang.

An island of sorts, but a rather big one. One where you can offer access through all browsers, operating systems and mobile devices.

You no longer care about interoperability with other vendors – just about the interoperability of your service with the browsers you are relying on. It simplifies things somewhat while complicating the whole issue of being in control (see #1 above).

#4 – It is Cloudy in the VoIP land

It seems like VoIP was always meant to run in local deployments. There are a few cases where you see it deployed globally, but they aren’t many. Usually, there’s a geography that goes into the process.

This is probably rooted in the origins of VoIP – as a replacement / digital copy of what you did in telecom before. It also relates to the fact that the world was bigger in the past – the cloud as we know it today (AWS and the many other cloud providers that followed) didn’t really exist.

Skype is said to have succeeded as much as it did because it had a great speech codec for its time, one that was error resilient (it had FEC built in at a time when companies were only starting to bicker in the IETF and ITU standards bodies about adding FEC to the RTP layer). It also had NAT traversal that just worked (again, when STUN and TURN were just ideas). The rest of the world? We were all happy enough to instruct customers to install their gatekeepers and B2BUAs in the DMZ.

Since then VoIP has evolved a lot. It turned towards the SBC (more on this in #10).

WebRTC has bigger challenges and requirements ahead of it.

For the most part, and with most deployments of WebRTC, there are three things that are almost always apparent:

- Deployments are global. You never know where users will be joining from: neither their location nor their type of network

- Networks are unmanaged. This is similar to the above. You have zero control over the networks, but your users will still complain about the quality (just check out any of Fippo’s analysis posts)

- We deploy them on AWS. All the time. On virtual machines. Inside Docker containers. Layers and layers of abstraction. For a real time service. It seems to work.

#5 – Bring Your Own Signaling in WebRTC

VoIP is easy. It is standardized. Be it SIP, H.323, XMPP or whatever you bring to the table. You are meant to use a signaling protocol. Something someone else has thought of in the far dark rooms in some standards organization. It is meant to keep you safe. To support the notion and model of interoperability. To allow for vendor agnostic deployments.

WebRTC did away with all this, opting to not have a signaling protocol at all out of the box.

Some complain about it (mostly VoIP people). I wrote about it some 4 years ago – about the death of signaling.

With WebRTC you make the decision on what signaling protocol you will be using. You can decide to go for a standards based solution such as SIP over WebSocket, XMPP over BOSH or WebSocket – or you can use a newly created signaling protocol invented only for your specific scenario – or use whatever you already have in your app to signal people.

As with anything in WebRTC, it opens up a few immediate questions:

- Should you use a standards based signaling protocol or a proprietary one?

- Should you build it on your own from scratch or use a third party framework for it?

- Should you host and manage it on your own or use it as a service instead?

All answers are now valid.
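
To make the proprietary option concrete, here is a minimal sketch of what a home-grown signaling message could look like. Everything in it is an assumption made for illustration: the field names (`type`, `room`, `sender`, `payload`), the three message types and the JSON encoding are not defined by WebRTC, which only requires that the offer, answer and ICE candidates reach the other side somehow.

```python
import json

# Hypothetical message types for a home-grown signaling protocol.
ALLOWED_TYPES = {"offer", "answer", "candidate"}
REQUIRED_FIELDS = {"type", "room", "sender", "payload"}


def encode_signal(msg_type, room, sender, payload):
    """Serialize one signaling message to a JSON string."""
    if msg_type not in ALLOWED_TYPES:
        raise ValueError(f"unknown signaling message type: {msg_type}")
    return json.dumps(
        {"type": msg_type, "room": room, "sender": sender, "payload": payload}
    )


def decode_signal(raw):
    """Parse and validate a signaling message received from the wire."""
    msg = json.loads(raw)
    if not isinstance(msg, dict):
        raise ValueError("signaling message must be a JSON object")
    missing = REQUIRED_FIELDS - set(msg)
    if missing:
        raise ValueError(f"missing fields: {sorted(missing)}")
    if msg["type"] not in ALLOWED_TYPES:
        raise ValueError(f"unknown signaling message type: {msg['type']}")
    return msg


# The SDP below is truncated placeholder text, not a usable offer.
wire = encode_signal("offer", "demo-room", "alice", {"sdp": "v=0\r\n..."})
message = decode_signal(wire)
print(message["type"], message["sender"])
```

The same envelope could travel over a WebSocket, long polling or whatever channel your app already uses to reach its users, which is exactly the freedom (and the burden) described above.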

#6 – Encryption and Privacy are MANDATORY

With VoIP, encryption was always optional. Seldom used.

I remember going to these interoperability events as a developer. The tests that almost never really succeeded were the ones that used security. Why? You got to them last during the week long event, and nobody got that part quite the same as others.

That has definitely changed over the years, but the attitude towards encryption hasn’t. VoIP products are shipped to customers and deployed without encryption. The encryption piece is an optional configuration that many skip. Encryption makes it hard to use Wireshark to understand what goes on in the network, it takes up CPU (not much anymore, but conceptually it still does), and it complicates things.

WebRTC, on the other hand, has encryption built in and always on. There is no way to use it with clear RTP, even if you really, really want to. Even if you swear all browsers and their communications run inside a secure network. Nope. You can’t take security out of WebRTC.

You can learn more about WebRTC security.

#7 – If it is New, WebRTC Will be Using it

When WebRTC came out, it made use of the most recent VoIP-related RFCs in the media domain.

Ability to multiplex RTP and RTCP on the same port? Check.

Ability to bundle audio and video on the same transport? Check.

Ability to send FIR commands over RTCP and not signaling? Check.

Ability to negotiate keys over DTLS-SRTP instead of SDES? Check.

There are many other examples of it.
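
These choices are visible in the SDP that a WebRTC endpoint produces. The sketch below, offered as an illustration rather than a validator, scans an SDP blob for the attributes that correspond to the items above: `a=group:BUNDLE` (audio and video on one transport), `a=rtcp-mux` (RTP and RTCP on one port), `a=fingerprint` (DTLS-SRTP) and `a=crypto` (SDES). The function name and the sample SDP fragment are made up for this example, and the fragment is far from a complete session description.

```python
def describe_sdp(sdp):
    """Report which media-plane features an SDP blob advertises."""
    lines = [line.strip() for line in sdp.splitlines() if line.strip()]
    return {
        "bundle": any(l.startswith("a=group:BUNDLE") for l in lines),
        "rtcp_mux": any(l == "a=rtcp-mux" for l in lines),
        "dtls_srtp": any(l.startswith("a=fingerprint:") for l in lines),
        "sdes": any(l.startswith("a=crypto:") for l in lines),
    }


# Hand-written fragment; the fingerprint value is a placeholder, not a real hash.
SAMPLE_SDP = """v=0
o=- 0 0 IN IP4 127.0.0.1
s=-
t=0 0
a=group:BUNDLE 0 1
m=audio 9 UDP/TLS/RTP/SAVPF 111
a=mid:0
a=rtcp-mux
a=fingerprint:sha-256 AA:BB:CC
m=video 9 UDP/TLS/RTP/SAVPF 96
a=mid:1
a=rtcp-mux
"""

features = describe_sdp(SAMPLE_SDP)
print(features)
```

An offer coming from an older VoIP endpoint would typically show the opposite picture: no bundle group, no `a=rtcp-mux`, and either `a=crypto` lines or no keying at all.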

And in many cases, WebRTC went to the extreme of banning the other, more common, older mechanisms of doing things.

VoIP was always made with options in mind. You have at least 10 different ways in the standard to do something. And all are acceptable.

WebRTC takes what makes sense to it, throwing the rest out the window, leaving the standard slightly cleaner at the end of it.

Just recently, a decision was made to stop supporting non-multiplexed streams. This forced Asterisk and all of its users to upgrade.

VoIP and SIP were never really that important to WebRTC. Live with it.

#8 – Identity Management and Authorization are Tricky

There’s no identity management in WebRTC.

There’s also no clear authorization model to speak of.

Here’s a simple one:

With SIP, the way you handle users is giving them usernames and passwords.

The user keys them into the client, and they get used to sign in to the service.

With regular apps, it is easy to set that username/password as your TURN credentials as well. But doing it with WebRTC inside a browser opens up a world of pain with the potential of harvesting that information to piggyback on your TURN servers, costing you money.

So instead you end up using ephemeral passwords in TURN with WebRTC. Here’s an explanation how to do just that.
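
One common way to do this is the time-limited credential scheme that TURN servers such as coturn support through a shared secret: the username carries an expiry timestamp, and the password is a base64-encoded HMAC-SHA1 of that username. The sketch below assumes that scheme. The function name, the secret, the user ID and the one-hour lifetime are placeholder choices, and your TURN server has to be configured with the same secret.

```python
import base64
import hashlib
import hmac
import time


def make_turn_credentials(shared_secret, user_id, ttl_seconds=3600, now=None):
    """Build a short-lived TURN username/password pair.

    username = "<expiry unix time>:<user id>"
    password = base64(HMAC-SHA1(shared_secret, username))
    """
    if now is None:
        now = int(time.time())
    username = f"{now + ttl_seconds}:{user_id}"
    digest = hmac.new(
        shared_secret.encode("utf-8"), username.encode("utf-8"), hashlib.sha1
    ).digest()
    password = base64.b64encode(digest).decode("ascii")
    return username, password


# Placeholder secret and user ID, for illustration only.
username, password = make_turn_credentials("not-a-real-secret", "alice")
print(username, password)
```

Your backend generates the pair after the user has authenticated and hands it to the page, which passes it along in its ICE server configuration. If someone harvests it from the browser, it stops working once the timestamp expires.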

In many other cases, you simply don’t care. If the user has already logged into the page, and has identified and authenticated himself with your service, then why have an additional set of credentials for him? You can just as easily piggyback on a mechanism such as Facebook Connect, Twitter, LinkedIn or Google accounts to get the authentication part going for you.

#9 – Route. Don’t Mix

If you come from VoIP, then you know that for more than two participants in a call you mix the media. You do it usually for audio, but also for the video. That’s just how things are (were) done.

But for WebRTC, routing media through an SFU is how you do things.

It makes the most sense for a multitude of reasons:

- For many use cases, this is the only thing that can work when it comes to meeting your business model. It strikes that balance between usability and costs

- This in turn, brings a lot of developers and researchers to this domain, improving media routing and SFU related technologies, making it even better as time goes by

- In WebRTC, the client belongs to the server – the server sends the client as HTML/JS code. With the added flexibility of getting multiple media streams, comes an added flexibility to the UI’s look and feel as well as behavior

There are those who are still resistant to the routing model. When these people have a VoIP pedigree, they’ll lean towards the mixing model of an MCU, calling it superior. It will usually cost 10 times or more to deploy an MCU instead of an SFU.
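
A rough way to see where the cost gap comes from is to count what the server has to do per conference. The sketch below is a back-of-the-envelope model, not a benchmark. It assumes every participant sends one stream, that an SFU forwards each stream to every other participant without touching the media, and that an MCU decodes every incoming stream and encodes one mixed stream per participant. It does not try to reproduce the 10x figure above, which depends on real hardware and codecs.

```python
def sfu_load(participants):
    """Server-side work for a routed (SFU) conference."""
    n = participants
    return {
        "streams_in": n,
        "streams_out": n * (n - 1),  # each stream is forwarded to everyone else
        "decodes": 0,                # media is routed, never decoded
        "encodes": 0,
    }


def mcu_load(participants):
    """Server-side work for a mixed (MCU) conference."""
    n = participants
    return {
        "streams_in": n,
        "streams_out": n,  # one mixed stream back to each participant
        "decodes": n,      # every incoming stream is decoded for mixing
        "encodes": n,      # assumes a separate mix is encoded per participant
    }


for n in (3, 10):
    print(n, "SFU:", sfu_load(n), "MCU:", mcu_load(n))
```

In this model the SFU trades bandwidth for CPU: its outbound stream count grows quadratically, but it never decodes or encodes media, while the MCU's decode and encode work grows with every participant it adds.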

Be sure to know and understand SFUs if you plan on using WebRTC.

#10 – SBCs are Useless

Or at least not mandatory anymore.

Every. SBC. vendor. out. there. is. adding. WebRTC.

And I get it. If you’re building an SBC – a Session Border Controller – then you should also make sure it supports WebRTC so all these pesky people looking to get access through the browser can actually get it.

An SBC was an abomination added to VoIP. It was a necessary evil.

It served the purpose of sitting in the DMZ, making sure your internal network is protected against malicious VoIP access. A firewall for VoIP traffic.

Later, people bolted onto that SBC the ability to handle interoperability, because different vendors’ products never really worked well with one another (we’ve already seen that in #3). Then transcoding was added, because we could. And then other functions.

And at some point, it was just obvious to place SBCs in VoIP infrastructure. Well… WebRTC doesn’t need an SBC.

VoIP needs an SBC that handles WebRTC. But if you’re planning on doing a WebRTC based application that doesn’t have much of VoIP in it, you can skip the SBC.

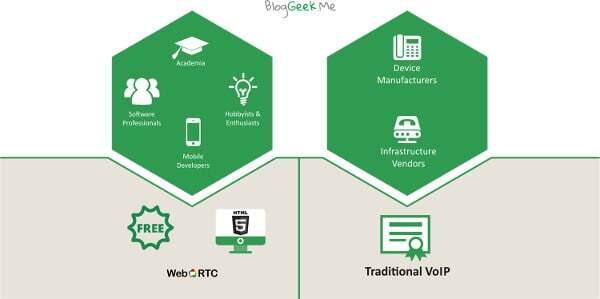

#11 – Ecosystem Created by the API and Not the Specification

Did I say 10 differences? So here’s a bonus difference.

Ecosystems in VoIP are created around the network protocol.

You get people to understand the standard specification of the network protocol, and from there you build products.

In WebRTC, the center is not the network protocol (yes, it is important and everything) – it is the WebRTC APIs. The ones implemented in the browsers that enable you to build a client on top. One that theoretically should run across all browsers.

That’s a huge distinction.

Many of the developers in WebRTC are clueless about the network, which is a shame. On the other hand, many VoIP developers think they understand the network but fail to understand the nuanced differences between how the network works in VoIP and in WebRTC.

What’s Next in the WebRTC and VoIP World?

If you’re on the web side of things, then be sure to read this article as well.

If you have a VoIP background, then there are things for you to learn when shifting your focus towards WebRTC. And you need to come at it with an open mind.

WebRTC seems very similar to VoIP – and it is – because it is VoIP. But it is also very different. In the ways it is designed, thought of and used.

Knowing VoIP, you should have a head start on others. But only if you grok the differences.

Need to warm up to WebRTC? Try my free WebRTC server side mini course.

And if you’re really serious, enroll in my Advanced WebRTC Architecture Course.