WHIP & WHEP are specifications to get WebRTC into live streaming. But is this really what is needed moving forward?

WebRTC is great for real time. Anything else – not as much. Recently two new protocols came into being – WHIP and WHEP. They act as signaling for WebRTC to better support live streaming use cases.

In recent months, more and more vendors have implemented these protocols (how much actual use they get isn’t something I am privy to, so I can’t attest either way). This progress is positive, but I can’t ignore the feeling that this is only a temporary solution.

What are WHIP and WHEP?

WHIP stands for WebRTC-HTTP Ingestion Protocol. WHEP stands for WebRTC-HTTP Egress Protocol. They are both relatively new IETF drafts that define a signaling protocol for WebRTC.

👉 WebRTC explicitly decided NOT to have any signaling protocol so that developers will be able to pick and choose any existing signaling protocol of their choice – be it SIP, XMPP or any other alternative. For the media streaming industry, this wasn’t a good thing – they needed a well known protocol with ready-made implementations. Which led to WHIP and WHEP.

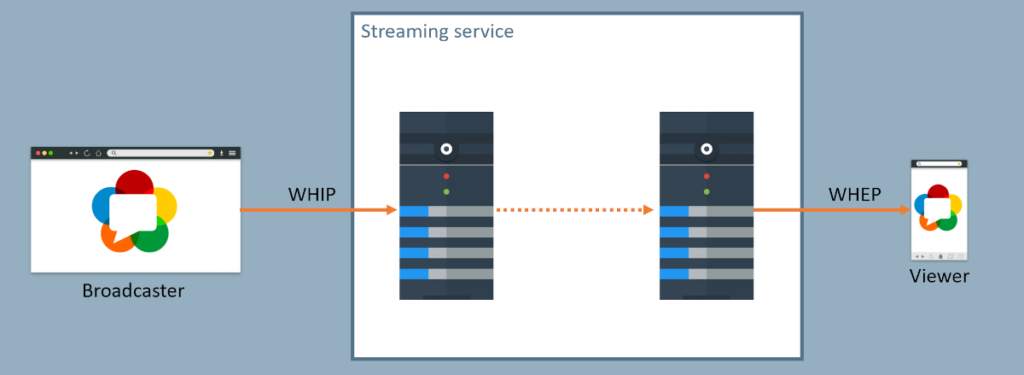

To understand how they fit into a solution, we can use the diagram below:

In a live streaming use case, we have one or more broadcasters who “Ingest” their media to a media server. That’s where WHIP comes in. The viewers, on the other side, get their media streams on the egress side of the media server infrastructure. That’s where WHEP comes in.

For a technical overview of WHIP & WHEP, check out this Kranky Geek session by Sergio Garcia Murillo from Dolby:

In video conferencing, WebRTC transformed the market and how it thought of meetings and interoperability. It practically killed the notion of interoperability across vendors on the protocol level, shifting it to the application level and letting users install their own apps on devices or just load web pages on demand.

The streaming industry is different – it relies on 3 components, which can easily come from 3 different vendors:

- Media servers – the cloud or on premise infrastructure that processes the media and routes it around the globe

- Ingress/Ingestion – the media source. In many cases these are internet cameras connected via RTP/RTSP or OBS and GStreamer-based sources

- Egress/Viewers – those who receive the media, often doing so on media players

When a broadcaster implements his application, he picks and chooses the media servers and media players. Sometimes he will also pick the ingestion part, but not always. And none of the vendors in these 3 categories can really force the others to use its own components.

This posed a real issue for WebRTC. It has no signaling protocol, since that part is left to the implementers. But how do you develop a solution that works across vendors without a suitable signaling protocol?

The answer for that was WHIP and WHEP –

- WHIP connects Ingress/Ingestion to Media servers

- WHEP connects Media servers to Egress/Viewers

These are really simple protocols built around the notion of a single HTTP request – in an attempt to get the streaming industry to use them and not shy away from the complexities hidden in WebRTC.
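
To make the "single HTTP request" idea concrete, here is a minimal Python sketch of the ingest exchange as I understand it from the WHIP specification: the client POSTs its SDP offer with an `application/sdp` content type, and the server replies with `201 Created`, the SDP answer in the body and a `Location` header pointing at the session resource (which is later removed with an HTTP DELETE). The endpoint URL, token and all function names are hypothetical, and the SDP itself would come from whatever WebRTC stack you use. WHEP follows the same pattern on the egress side.

```python
from dataclasses import dataclass
from typing import Optional
from urllib.parse import urljoin
from urllib.request import Request


def build_offer_request(endpoint: str, sdp_offer: str, token: Optional[str] = None) -> Request:
    """Build the one HTTP POST that carries the SDP offer to the endpoint."""
    headers = {"Content-Type": "application/sdp"}
    if token:
        # Bearer token authentication; the token value is an assumption here.
        headers["Authorization"] = f"Bearer {token}"
    return Request(endpoint, data=sdp_offer.encode("utf-8"), headers=headers, method="POST")


@dataclass
class Session:
    resource_url: str  # URL to DELETE when the stream ends
    sdp_answer: str    # SDP answer to hand back to the WebRTC stack


def parse_answer(endpoint: str, status: int, location: Optional[str], body: bytes) -> Session:
    """Interpret the response: 201 Created, a Location header, SDP answer in the body."""
    if status != 201:
        raise ValueError(f"expected 201 Created, got {status}")
    if not location:
        raise ValueError("response is missing the Location header")
    # The Location header may be relative to the endpoint URL.
    return Session(urljoin(endpoint, location), body.decode("utf-8"))


def build_teardown_request(session: Session, token: Optional[str] = None) -> Request:
    """Build the HTTP DELETE that ends the session."""
    headers = {"Authorization": f"Bearer {token}"} if token else {}
    return Request(session.resource_url, headers=headers, method="DELETE")
```

Sending the requests (for example with `urllib.request.urlopen`) and wiring the answer into a peer connection are left out on purpose. The point is how little signaling there is compared to a typical WebRTC application.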

Strengths 💪

Here’s what’s working well for WHIP and WHEP:

- Simple to implement

  - For the main flow, these protocols require a single round trip: a request and a response

  - Some of the features of WebRTC were removed or made lenient in order to enable that, which is a good thing in this case

- Operates similarly to other streaming protocols

  - The purpose of it all is to be used in an industry that already exists, with existing solutions and vendors

  - The closer we can get to them, the easier it is for them and the more likely they are to adopt it

- Adoption

  - The above two got it adopted by many players in the industry already

  - This adoption is in the form of demos, POCs and actual products

- WebRTC

  - It has been here for over 10 years now and is proven as a technology

  - Connecting it to video conferences to stream them live or to add external streams into them using WHIP is not that hard – there are quite a few playing with these use cases already

Weaknesses 🤒

There’s the challenging side of things as well:

- Too simple

  - Edge cases aren’t clearly managed and handled

  - Things like renegotiation when required, ICE restarts, etc.

- WebRTC

  - While WebRTC is great, it wasn’t originally designed for streaming

  - We’re now using it for live streaming, but live isn’t the only thing streaming needs to solve

This last weakness – WebRTC – leads me to the next issue at hand.

Streaming, latency and WebRTC

Streaming comes in different shapes and sizes.

The scenario might have different broadcasters:viewers count – 1:1, 1:many, few:1, few:many – each has its own requirements and nuances as to what I’d prefer using on the sending side, receiving end and on the media server itself.

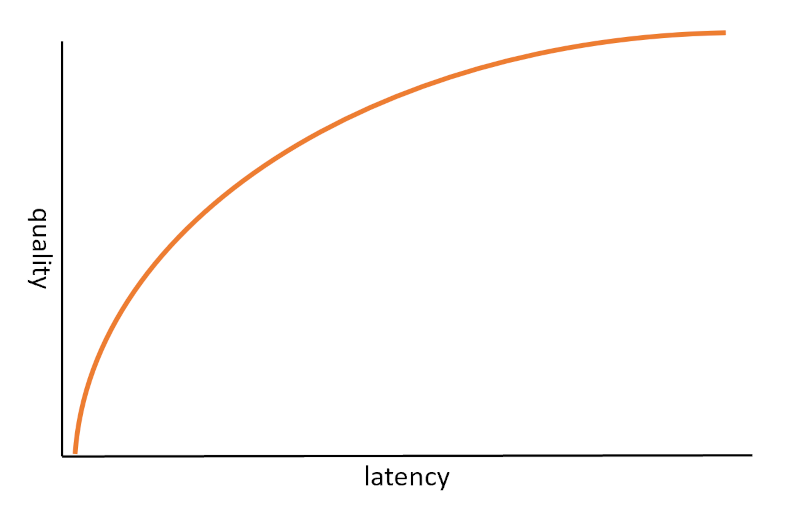

What really changes everything here is latency. How much latency are we willing to accept?

The lower the latency we want the more challenging the implementation is. The closer to live/real time we wish to get, the more sacrifices we will need to make in terms of quality. I’ve written about the need to choose either quality or latency.

WebRTC is razor focused on real time and live. So much so that it can’t really handle something that has latency in it. It can – but it will sacrifice too much for it at a high complexity cost – something you don’t really want or need.

What does that mean exactly?

- WebRTC runs over UDP and falls back to TCP if it must

- The reason behind it is that having the generic retransmissions built into TCP is mostly counterproductive to WebRTC – if a packet is lost, then resending it is going to be too late in many cases to make use of it 👉 live – remember?

- So WebRTC relies on UDP and uses RTP, enabling it to decide how to handle packet losses, bitrate fluctuations and other network issues affecting real time communications

- If we have a few seconds of latency, then we can use retransmissions on every packet to deal with packet losses. This is exactly what Netflix and YouTube do for example. With its focus on low latency, WebRTC doesn’t really allow that for us
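
The tradeoff in the list above can be put into numbers. Below is a deliberately simplified Python sketch (my own model, not something taken from WebRTC or any player): a retransmission only helps if the repaired packet arrives before the playout deadline, and each attempt costs roughly one round trip. The function names and the idea of a single "latency budget" are assumptions made for illustration.

```python
def max_retransmission_attempts(loss_detected_ms: float, rtt_ms: float,
                                latency_budget_ms: float) -> int:
    """How many full request/resend round trips fit before the playout deadline.

    loss_detected_ms: time since the packet was originally sent when the
        receiver notices it is missing.
    latency_budget_ms: how long after sending the media must be played.
    """
    remaining_ms = latency_budget_ms - loss_detected_ms
    if remaining_ms <= 0 or rtt_ms <= 0:
        return 0
    # Each attempt costs about one RTT: the loss report goes to the sender
    # and the repaired packet comes back.
    return int(remaining_ms // rtt_ms)


def retransmission_is_useful(loss_detected_ms: float, rtt_ms: float,
                             latency_budget_ms: float) -> bool:
    """True if at least one retransmission can arrive in time to be played."""
    return max_retransmission_attempts(loss_detected_ms, rtt_ms, latency_budget_ms) >= 1


# Interactive budget of 200 ms, loss noticed after 100 ms, RTT of 150 ms:
print(retransmission_is_useful(100, 150, 200))      # too late to help
# Same network, but a 5 second budget:
print(max_retransmission_attempts(100, 150, 5000))  # many attempts fit
```

With a few seconds of budget there is room for many attempts, which is why a reliable transport works fine there. With a sub-second budget on a long path, there may not be room for even one.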

This is when a few tough questions need to be asked – what exactly does your streaming service need?

- Sub-second latency because it is real time and interactive?

- If the viewer receives the media two seconds after it has been broadcast, is that a huge problem or is it OK?

- What about 5 seconds?

- And 30 seconds?

- Is the stream even live to begin with or is it pre-recorded?

If you need things to be conducted in sub-second latency only, then WebRTC is probably the way to go. But if you have in your use case other latencies as well, then think twice before choosing WebRTC as your go-to solution.

A hybrid WebRTC approach to “live” streaming

An important aspect that needs to be mentioned here is that in many cases, WebRTC is used in a hybrid model in media streaming.

Oftentimes, we want to ingest media using WebRTC and view the media elsewhere using other protocols. This is usually because we don’t care as much about latency or because we already have the viewing component solved and deployed. Here, WebRTC ingest is added to an existing service.

Adding the WHIP protocol here, and ingesting WebRTC media to the streaming service means we can acquire the media from a web browser without installing anything. Real time is nice, but not always needed. Browser ingest though is mostly about reducing friction and enabling web applications.

The 3 horsemen: WebTransport, WebCodecs and WebAssembly

That last suggestion would have looked different just two years ago, when for real time the only game in town for browsers was WebRTC. Today though, it isn’t the case.

In 2020 I pointed to the unbundling of WebRTC: the trend in which WebRTC is being split into its core components so that developers will be able to use each one independently and, in a way, build their own solution that is similar to WebRTC but isn’t WebRTC. These components are:

- WebTransport – means to send anything over UDP at low latency between a server and a client – with or without retransmissions

- WebCodecs – the codecs used in WebRTC, decoupled from WebRTC, with their own frame by frame encoding and decoding interface

- WebAssembly – the glue that can implement things with high performance inside a browser

Theoretically, using these 3 components one can build a real time communication solution, which is exactly what Zoom is trying to do inside web browsers.

In the past several months I’ve seen more and more companies adopting these interfaces. It started with vendors using WebAssembly for background blurring and replacement. It moved on to companies toying around with WebTransport and/or WebCodecs for streaming, and recently a lot of vendors are doing noise suppression with WebAssembly.

Here’s what Intel showcased during Kranky Geek 2021:

This trend is only going to grow.

How does this relate to streaming?

Good that you asked!

These 3 enable us to implement our own live streaming solution, one that is not based on WebRTC and can still achieve sub-second latency in web browsers. It is also flexible enough for us to add mechanisms and tools that handle higher latencies as needed, where at higher latencies we improve the quality of the media.

Strengths 💪

Here’s what I like about this approach:

- I haven’t read about it or seen it anywhere, so I like to think of it as one I came up with on my own 😜 but seriously…

- With a single set of protocols and technologies, it is able to support any latency requirement we have in our service

- Support for web browsers (not all yet, but we will be getting there)

- No need for TURN or STUN servers – less server footprint and headaches and better firewall penetration (that’s assuming WebTransport becomes as common as WebSocket and gets automatically whitelisted by firewalls)

Weaknesses 🤒

It isn’t all shiny though:

- Still new and nascent. We don’t know what doesn’t work and what the limitations are

- Not all modern browsers support it properly yet

- We’re back to square one – there’s no streaming protocol to support it that way, which means we don’t support the media streaming ecosystem as a whole

- Connecting it to WebRTC when needed might not be straightforward

- You need to build your own spec at the moment, which means more work for you
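
To give a feel for what "build your own spec" means in practice, here is a small Python sketch of one decision such a spec has to make: how an encoded video chunk is framed before it goes over the transport. The header layout is entirely hypothetical and not part of any standard. The fields loosely mirror what WebCodecs exposes per encoded chunk (key or delta type, a timestamp in microseconds), plus a sequence number for detecting loss and reordering.

```python
import struct
from typing import NamedTuple

# Hypothetical header, network byte order, no padding:
# sequence number (uint32), timestamp in microseconds (uint64),
# flags (uint8), payload length (uint32)
HEADER = struct.Struct("!IQBI")
FLAG_KEYFRAME = 0x01


class Chunk(NamedTuple):
    sequence: int
    timestamp_us: int
    is_keyframe: bool
    payload: bytes


def pack_chunk(chunk: Chunk) -> bytes:
    """Serialize one encoded chunk as header + payload."""
    flags = FLAG_KEYFRAME if chunk.is_keyframe else 0
    header = HEADER.pack(chunk.sequence, chunk.timestamp_us, flags, len(chunk.payload))
    return header + chunk.payload


def unpack_chunk(data: bytes) -> Chunk:
    """Parse header + payload back into a Chunk, rejecting truncated input."""
    if len(data) < HEADER.size:
        raise ValueError("truncated header")
    sequence, timestamp_us, flags, length = HEADER.unpack_from(data)
    payload = data[HEADER.size:HEADER.size + length]
    if len(payload) != length:
        raise ValueError("truncated payload")
    return Chunk(sequence, timestamp_us, bool(flags & FLAG_KEYFRAME), payload)
```

Every vendor making its own choices like this one is exactly why such a solution doesn't interoperate across the streaming ecosystem until someone standardizes it.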

Is WebRTC the future of live streaming?

I don’t know.

WHIP and WHEP are here. They are gaining traction and have vendors behind them pushing them.

On the other hand, they don’t solve the whole problem – only the live aspect of streaming.

WebRTC is used at the moment because it was the only game in town. Soon that will change, as solutions based on WebTransport+WebCodecs+WebAssembly get adopted and offer an alternative to WebRTC for live streaming in browsers.

Can this replace WebRTC? For media streaming – yes.

Is this the way the industry will go? This is yet to be seen, but definitely something to track.

There was some exploration of using WebRTC within DASH for real time streaming:

https://dashif.org/news/webrtc/

https://dashif.org/webRTC/report.html

A WHIP/WHEP server written in Rust: https://github.com/harlanc/xiu

WHIP was just declared as an official standard: https://datatracker.ietf.org/doc/rfc9725/