When using WebRTC you should always strive to send media over UDP instead of TCP, at least if you care about media quality.

Every once in a while I bump into a person (or a company) that for some unknown reason made a decision to use TCP for its WebRTC sessions. By that I mean prioritizing TURN/TCP or ICE-TCP connections over everything else – many times even barring or ignoring the existence of UDP. The ensuing conversation is usually long and arduous – and not always productive I am afraid.

So I decided to write this article, to explain why for the most part, WebRTC over UDP is far superior to WebRTC over TCP.

UDP and TCP

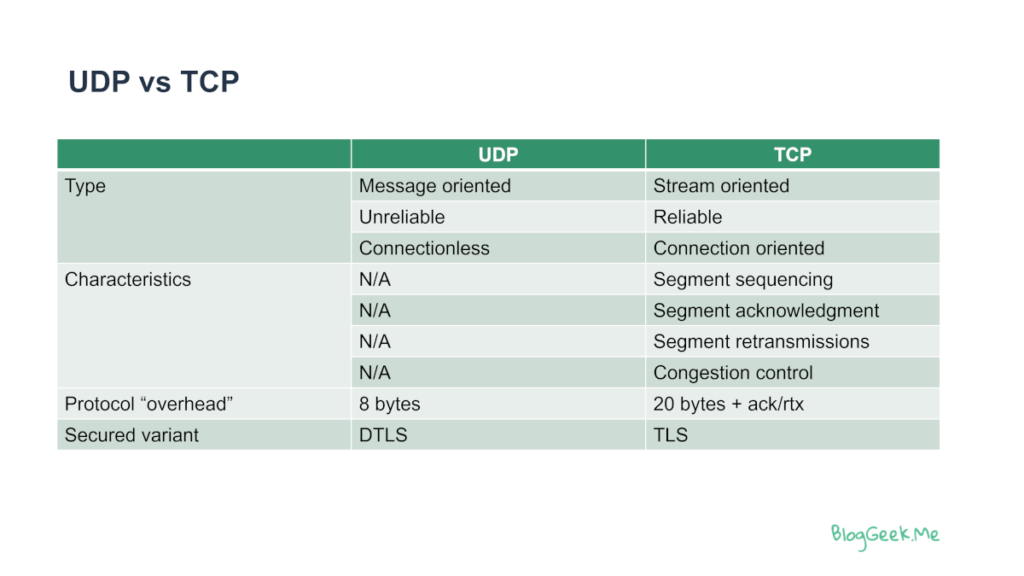

Since the dawn of the internet, we have had UDP and TCP as the underlying transport protocols that carry data across the network. While there are other transports, these are by far the most common ones.

And they are different from one another in every way.

UDP is the bare minimum that a transport protocol can offer (you can go lower than that, but what would be the point?).

With UDP you get the ability to send data packets from one point to another over the network. There are no guarantees whatsoever:

- Your data packets might get “lost” along the way

- They might get reordered

- Or duplicated

No guarantees. Did I mention that part?

With TCP you get the ability to send a stream of data from one point to another over a “connection”. And it comes with everything:

- Guaranteed delivery of the data

- The data is received in the exact order that it is sent

- No duplication or other such crap

That guaranteed delivery requires the concept of retransmissions – what gets lost along the way needs to be retransmitted. More on that fact later on.

We end up with two extremes of the same continuum. But we need to choose one or the other.

TCP rules the web

Reading this page? You’re doin’ that over HTTPS.

HTTPS runs over a TLS connection (I know, there’s HTTP/3 but bear with me here).

And TLS is just TCP with security.

And if you are using a WebSocket instead, then that’s also TCP (or TLS if it is a secure WebSocket).

No escaping that fact, at least not until HTTP/3 becomes commonplace (which is slightly different than running on top of TCP, but that’s for another article).

Up until WebRTC came into our lives, everything you did inside a web browser was based on TCP in one way or another.

UDP rules VoIP

VoIP or Voice over IP or Video over IP or Real Time Communications (RTC) or… well… WebRTC – that takes place over UDP.

Why? Because this whole thing around guaranteed delivery isn’t good for the health of something that needs to be real time.

Let’s assume a latency of 50 milliseconds in each direction over the network, which is rather good. This translates to a round trip time of 100 milliseconds.

If a packet is lost, then it will take at least 100 milliseconds until the one who sent that packet knows about it – anything lower than that won’t allow the receiver to complain. Usually, it will take a bit more than 100 milliseconds.

For VoIP, we are looking to lower the latency. Otherwise, the call will sound unnatural – people will talk over each other (happens from time to time in long distance calls for example). Which means we can’t really wait for these retransmissions to take place.

Which is why VoIP in general, and WebRTC in particular, chose to use UDP to send its media streams. The reasoning is that waiting for retransmissions adds delay for the whole duration of the session and degrades the experience altogether, while dealing with lost packets by concealing them causes only minor issues for the most part.
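To make the cost of waiting concrete, here is a minimal sketch of the arithmetic above. It is a toy model, not a network simulator: the function name, the 20 millisecond packet interval and the single lost packet are assumptions made for illustration, and the retransmission timing is the optimistic lower bound described earlier (the sender learns about the loss one round trip after sending).

```typescript
// Toy model (hypothetical names): packets leave every `intervalMs`, the network
// adds `oneWayMs` in each direction, and exactly one packet is lost once.
interface Delivery {
  seq: number;
  udpMs: number | null; // when the app gets the packet over UDP (null = never)
  tcpMs: number;        // when the app gets the packet over TCP (in order)
}

function simulateSingleLoss(
  count: number,
  intervalMs: number,
  oneWayMs: number,
  lostSeq: number,
): Delivery[] {
  const rttMs = 2 * oneWayMs;
  // Optimistic lower bound: the sender learns about the loss one round trip
  // after sending, and the retransmitted copy needs one more one-way trip.
  const retransmitArrivalMs = lostSeq * intervalMs + rttMs + oneWayMs;

  const deliveries: Delivery[] = [];
  let tcpReleasedAtMs = 0;
  for (let seq = 0; seq < count; seq++) {
    const arrivalMs = seq * intervalMs + oneWayMs;
    // UDP hands over whatever arrives; the lost packet simply never shows up.
    const udpMs = seq === lostSeq ? null : arrivalMs;
    // TCP delivers in order, so later packets wait behind the retransmission.
    const tcpArrivalMs = seq === lostSeq ? retransmitArrivalMs : arrivalMs;
    tcpReleasedAtMs = Math.max(tcpReleasedAtMs, tcpArrivalMs);
    deliveries.push({ seq, udpMs, tcpMs: tcpReleasedAtMs });
  }
  return deliveries;
}

// 50 ms each way, one packet every 20 ms, packet number 2 is lost.
const deliveries = simulateSingleLoss(6, 20, 50, 2);
for (const d of deliveries) {
  console.log(`seq ${d.seq}: udp=${d.udpMs ?? "lost"} tcp=${d.tcpMs}`);
}
```

In this model, packet 3 reaches the application at 110 milliseconds over UDP but only at 190 milliseconds over TCP, because it has to wait behind the retransmission of packet 2. Over UDP the receiver is missing one packet and can try to conceal it; over TCP everything behind the lost packet arrives late.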

With WebRTC, you want and PREFER to use UDP for media traffic over TCP or TLS.

WebRTC ICE: Preferences and best effort

We don’t always get what we want. Which is why sometimes our sessions won’t open with WebRTC over UDP. Not because we don’t want them to. But because they can’t. Something is blocking that alternative from us.

That something is called a firewall. One with nasty rules that… well… don’t allow UDP traffic. The reasons for that are varied:

- The smart IT person 30 years ago decided that UDP is bad and not used over the internet, so better to just block it

- Another IT person didn’t like people at work bittorrenting the latest shows on the corporate network, so he blocked UDP traffic of the encrypted kind (which is essentially what WebRTC media traffic looks like)

This means that you’ll be needing TCP or TLS to be able to connect your users on that WebRTC session.
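In practice, this usually means offering the TCP and TLS options alongside UDP in your ICE server list, so that they are available when needed. Below is a minimal sketch of such a list. The helper name, the host `turn.example.com`, the ports and the credentials are all placeholders I made up for illustration; the `IceServerEntry` type is a local mirror of the `iceServers` entry fields used here, so the block also compiles outside a browser.

```typescript
// Local mirror of the `iceServers` entry fields used in this sketch.
interface IceServerEntry {
  urls: string[];
  username?: string;
  credential?: string;
}

// Hypothetical helper: host, ports and credentials are placeholders.
function buildIceServers(
  host: string,
  username: string,
  credential: string,
): IceServerEntry[] {
  return [
    { urls: [`stun:${host}:3478`] },
    {
      urls: [
        `turn:${host}:3478?transport=udp`, // relay over UDP
        `turn:${host}:3478?transport=tcp`, // relay over TCP
        `turns:${host}:443?transport=tcp`, // relay over TLS
      ],
      username,
      credential,
    },
  ];
}

const iceServers = buildIceServers("turn.example.com", "user", "secret");
console.log(JSON.stringify(iceServers, null, 2));
// In a browser this would be passed along as:
//   new RTCPeerConnection({ iceServers });
```

Listing TCP and TLS here does not mean they will be used. To my understanding, the order of the URLs is not what decides the outcome; that is left to the ICE connectivity checks and candidate priorities.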

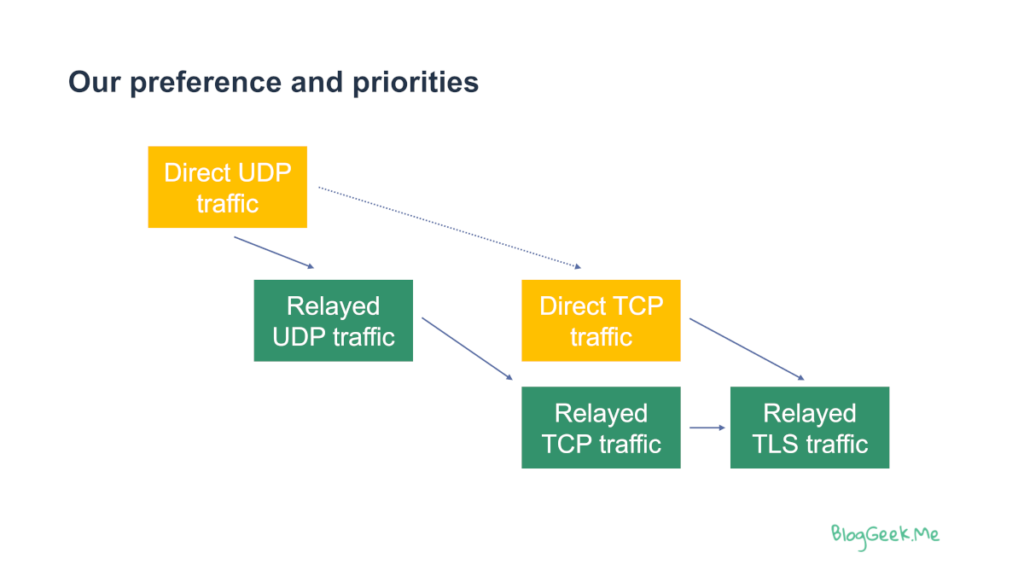

But – and that’s a big BUT. You don’t always want to use TCP or TLS. Just when it is necessary. Which brings us to ICE.

ICE is a procedure that enables WebRTC to negotiate the best way to connect a session by conducting connectivity checks.

In broad strokes, this is the type of logic we will be using (or strive to use).

The diagram above shows the type of preferences we’d have while negotiating a session with ICE.

- We’d love to use direct UDP

- If that is impossible, then a relay via a TURN/UDP server would be just fine

- Then a direct TCP connection would be nice

- Otherwise relay via a TURN/TCP or a TURN/TLS server

UDP comes first.
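The preference order in the list above can be sketched as a simple ranking function. This is only an illustration of the ordering, not how ICE is implemented: real ICE assigns numeric priorities to candidates and runs connectivity checks on candidate pairs. The `Route` type and the function names are hypothetical.

```typescript
type Transport = "udp" | "tcp" | "tls";

// Hypothetical description of one way to connect a session.
interface Route {
  relayed: boolean; // true when going through a TURN server
  transport: Transport;
}

// Lower rank means more preferred, following the four bullets above:
// direct UDP, TURN/UDP, direct TCP, then TURN/TCP or TURN/TLS.
function rank(route: Route): number {
  if (route.transport === "udp") {
    return route.relayed ? 1 : 0;
  }
  return route.relayed ? 3 : 2;
}

// Picks the most preferred route out of those that passed connectivity checks.
function pickRoute(working: Route[]): Route | undefined {
  return [...working].sort((a, b) => rank(a) - rank(b))[0];
}

// Behind a firewall that blocks direct UDP, but where TURN/UDP still works:
const chosen = pickRoute([
  { relayed: true, transport: "tls" },
  { relayed: false, transport: "tcp" },
  { relayed: true, transport: "udp" },
]);
console.log(chosen);
```

Here TURN/UDP wins over the direct TCP connection, because any UDP option ranks above any TCP or TLS option.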

When is TCP (or TLS) good for WebRTC media?

The one and only reason to use TCP or TLS as your transport for WebRTC media is because UDP isn’t available.

There. Is. No. Other. Reason. Whatsoever.

And yes. It deserved a whole section of its own here so you don’t miss it.

TCP for me is a last resort for WebRTC. When all else fails.
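If you want to know how often your sessions actually end up on that last resort, you can look at the local candidate of the selected candidate pair. The sketch below classifies such a candidate. It assumes an object carrying the `candidateType`, `protocol` and `relayProtocol` fields that the WebRTC statistics API reports for local candidates; the type name, function name and labels are mine, and pulling the candidate out of `getStats()` is left out.

```typescript
// Subset of the local candidate fields this sketch relies on (assumed shape).
interface LocalCandidateInfo {
  candidateType: "host" | "srflx" | "prflx" | "relay";
  protocol: "udp" | "tcp";
  relayProtocol?: "udp" | "tcp" | "tls"; // client-to-TURN leg, relay only
}

interface TransportSummary {
  label: string;
  lastResort: boolean; // true when media ends up over TCP or TLS
}

// Hypothetical helper: turns a local candidate into a readable summary.
function describeTransport(c: LocalCandidateInfo): TransportSummary {
  if (c.candidateType === "relay") {
    // For relayed candidates, the leg between the client and the TURN server
    // is the one a restrictive firewall forces onto TCP or TLS.
    const leg = c.relayProtocol ?? c.protocol;
    return { label: `TURN/${leg.toUpperCase()}`, lastResort: leg !== "udp" };
  }
  return {
    label: `direct ${c.protocol.toUpperCase()}`,
    lastResort: c.protocol === "tcp",
  };
}

console.log(
  describeTransport({ candidateType: "relay", protocol: "udp", relayProtocol: "tls" }),
);
```

Counting how many sessions come back with `lastResort` set to true is one rough way to see how much of your traffic cannot use UDP.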

When will TCP break as a media transport for WebRTC?

The moment you have packet loss on the network, TCP will break. By breaking I don’t mean the connection will be lost, but the media quality you’ll experience will degrade a lot further than it would with UDP.

Packet loss due to congestion is going to be the worst. Why? Because it occurs when a switch or router along the route of your data gets clogged and starts dropping packets it needs to handle.

Here are all the things that will go wrong at such a point:

- TCP will retransmit packets – since they weren’t acknowledged and are deemed lost

- Retransmitting them will take time. Time we don’t have

- For a video stream, in all likelihood, the packet loss will be translated to a request for a new I-frame

- So the sender will then generate a new I-frame, which is bigger than other frames

- This in turn will cause more data to be sent over the network

- And since the network is already congested… this will just worsen the situation

- Here’s the “nice” thing though. TCP is retransmitting the lost data

- So we now have data we don’t need being sent through the network

- Which is congested already. Causing more congestion. For things we’re not going to use anyways

- It is actually hurting our ability to send out that I-frame the receiver is trying to ask for

- We’re also running on top of TCP, so there’s no easy way for us to know that things are being lost and retransmitted since TCP is hiding all that important data

- So the moment we know about packet loss in WebRTC is way too late

- No ability to use logic like inter-packet delay (that’s smart-talk for saying figuring out potential near-future congestion, which also feels like smart-talk)

- And no way to employ algorithms to correct congestion and packet loss scenarios quickly enough
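The delay-based signal mentioned in the list above can be sketched roughly as follows. For each pair of consecutive packets, compare the gap between their arrival times with the gap between their send times; when packets keep arriving further apart than they were sent, a queue is probably building up somewhere along the route. This is a deliberately naive sketch: the type and function names and the 5 millisecond threshold are assumptions for illustration, and real bandwidth estimators filter and smooth these values rather than summing them.

```typescript
// Hypothetical per-packet timing, in milliseconds.
interface PacketTiming {
  sendMs: number;
  arriveMs: number;
}

// d(i) = (arrive_i - arrive_{i-1}) - (send_i - send_{i-1})
// Positive values mean packets are arriving further apart than they were sent.
function delayVariations(packets: PacketTiming[]): number[] {
  const variations: number[] = [];
  for (let i = 1; i < packets.length; i++) {
    const arrivalGap = packets[i].arriveMs - packets[i - 1].arriveMs;
    const sendGap = packets[i].sendMs - packets[i - 1].sendMs;
    variations.push(arrivalGap - sendGap);
  }
  return variations;
}

// Naive detector: flags congestion when the accumulated delay growth over the
// window exceeds an arbitrary threshold.
function queueSeemsToGrow(packets: PacketTiming[], thresholdMs = 5): boolean {
  const total = delayVariations(packets).reduce((sum, d) => sum + d, 0);
  return total > thresholdMs;
}

// Packets sent every 20 ms, each arriving a little later than the previous one.
const window: PacketTiming[] = [
  { sendMs: 0, arriveMs: 50 },
  { sendMs: 20, arriveMs: 72 },
  { sendMs: 40, arriveMs: 96 },
  { sendMs: 60, arriveMs: 122 },
];
console.log(delayVariations(window), queueSeemsToGrow(window));
```

Note that nothing was lost in this example, yet the growing delay is visible at the application level over UDP. Over TCP, the retransmissions and in-order delivery hide the per-packet timing this relies on.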

If you don’t believe me, then check out the test we conducted a few years back at testRTC, seeing how TURN/TCP affects WebRTC streams with and without packet losses.

Bottom line – TCP makes packet loss issues a lot worse than they already are, with a lot less leeway to solve them than we have when running on top of UDP.

The assumptions TCP makes about the data being sent are all wrong for the real time communications requirements that we have in protocols like WebRTC.

Time to learn WebRTC

I’ve had my fair share of discussions lately with vendors who were working with WebRTC but didn’t have enough of an understanding of WebRTC. Often that ends up badly – with solutions that don’t work at all or seem to work until they hit the realities of real networks, real users and real devices.

I just completed a massive update to my Advanced WebRTC Architecture training course for developers. In this round, I also introduced a new lesson about bandwidth estimation in WebRTC.

Next week, we will start another round of office hours as part of the course, letting those taking this WebRTC training ask questions openly as well as join live lessons on top of all the recorded and written materials found in the course.

If you are planning to use WebRTC, or are already using it, there isn’t going to be a better time to join than this week.

"Prefer UDP over TCP for WebRTC" – this would be really practical if WebRTC would use a special video codec resilient to frame-loss.

But MPEG-based codecs are NOT resilient to frame loss. So, with the H264 codec, if you lose a couple of P-frames, you see ugly artifacts, and if you lose an I-frame, you are totally dead. Therefore, with the H264 codec, if you have considerable packet loss, nothing can help you – neither TCP nor UDP; the quality will not be acceptable either way.

With the quality of US and European public networks in the 2020s, TCP is not much different from UDP; packet loss and retransmissions are rare. Therefore, it's preferable to use TCP for WebRTC, just to deliver that rare packet that may occasionally be lost. If it's just one or two packets per minute, browsers will play these packets and you will not notice any degradation, so TCP will help.

But if it's 1-2% packet loss or higher, like in Asian public networks, then, like I said before, nothing can help WebRTC – it will not work right.

With UDP you will see a lot of artifacts and freezes; with TCP you will see increasing latency and gaps in video.

I've rarely seen artifacts with WebRTC – the implementation in Chrome does not even pass incomplete frames to the decoder, which avoids the artifacts you're talking about. NACK-based recovery works great. For higher losses FlexFEC helps.

A couple of the things that make VoIP frameworks resilient are jitter buffers, audio codecs, video codecs, FEC, etc. With the right codecs and media frameworks, users can't perceive the loss of a few voice packets or a few unimportant video frames.

UDP is the perfect choice, and as of now the only one, for real time communications. As mentioned in the article, in some rare cases TCP is an option forced on you by a firewall.

WebRTC is one of the best VoIP media frameworks available, with an easy-to-use interface on the web and on native devices.

I'd argue that if frame skipping matters to you then TCP is still a must (it depends on whether you just talk, or you are actually transmitting high quality content). Think about Streamyard/Teams where the host is sharing a screen with a video on it (a 60 fps trailer or anything else where quality does matter). It will appear laggy if you use UDP.

Then, the more users you have in a WebRTC conference, the greater the chance of packet loss.

And then there's also the use case where only a host speaks, and interaction is rare or not happening at all. There's no reason to prefer UDP when interaction is limited, as latency doesn't matter.

All of that doesn't make UDP as obvious as described here.

Chen,

For WebRTC, TCP is bad no matter what. WebRTC is built for low latency – it can’t really do “high latency” use cases at all.

As such, the suggestions you make and the validity of TCP for them are all true, but in a way, they have nothing to do with WebRTC, since I wouldn’t be using or recommending WebRTC for scenarios where latency is less important than quality.