Here’s how you handle multipoint for small groups using WebRTC.

This is the third post dealing with multipoint:

- Introduction

- Broadcast

- Small groups (this post)

- Large groups

–

Let’s first define small groups: I’ll put the magical number at 4 participants. Why 4? Because it’s a nice round number for a geek like me.

Running a conference with multiple participants isn’t easy – I can say that both from participating in quite a few of them and from implementing them. When you handle multiple endpoints in a conference and ditch the idea of a central server, there are usually two ways to implement it:

- Someone becomes the server to all the rest:
  - All endpoints send their media to that central endpoint
  - That central endpoint mixes all inputs and sends out a single media stream for all
- Each to his own
  - All endpoints send their media to all other endpoints
  - All endpoints now need to make sense of all incoming media streams

When it came to traditional VoIP systems, the preference was usually the first option. Unfortunately, it isn’t that easy to implement with WebRTC (i.e. – impossible as far as I can tell), which leaves us with the second option.
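To put rough numbers on the two options, here is a hypothetical helper (`topologyLoad` is an illustrative name, not part of any WebRTC API) that counts the direct links in the call and the streams each regular participant has to send and decode. It is a sketch that assumes one media stream per direction on every link.

```typescript
// Hypothetical helper: link and stream counts for the two options above.
// Assumption: one media stream per direction on every direct link.

type Topology = "central-endpoint" | "mesh";

interface Load {
  links: number;              // direct peer connections in the whole call
  sentPerEndpoint: number;    // streams a regular participant uploads
  decodedPerEndpoint: number; // streams a regular participant must decode
}

function topologyLoad(topology: Topology, n: number): Load {
  if (!Number.isInteger(n) || n < 2) {
    throw new RangeError("a conference needs at least 2 participants");
  }
  if (topology === "central-endpoint") {
    // Everyone links only to the hub endpoint, sends it one stream and gets
    // one mixed stream back; the hub itself carries the decoding and mixing.
    return { links: n - 1, sentPerEndpoint: 1, decodedPerEndpoint: 1 };
  }
  // Full mesh: every pair of endpoints is linked, and each endpoint sends its
  // stream to, and decodes a stream from, each of the n - 1 others.
  return { links: (n * (n - 1)) / 2, sentPerEndpoint: n - 1, decodedPerEndpoint: n - 1 };
}

for (const t of ["central-endpoint", "mesh"] as const) {
  console.log(t, topologyLoad(t, 4));
}
```

With 4 participants the mesh needs 6 links and has every participant sending and decoding 3 streams, while in the first option only the hub endpoint does the heavy lifting.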

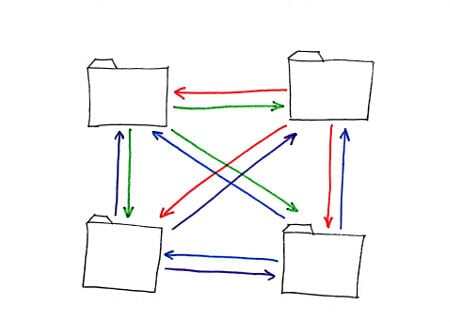

Each to his own, with our magical number 4 will look something like this:

Some assumptions before we continue:

- Each participant sends the exact same stream to all other participants.

- Each participant sends a resolution that is acceptable – no need to scale or process the video on the receiving end other than displaying it

You might notice that these assumptions should make our lives easier…

Let’s analyze what this requires from us:

- Bandwidth. Reasonable amount…
  - If all participants need to send out 500Kbps for their video, then each sender needs 1.5Mbps of uplink (one 500Kbps copy for each of the other 3 participants), which gets us to 6Mbps for the whole conference.
  - If we had a centralized MCU, we would be in about the same ballpark: 500Kbps towards the MCU from each participant, and then probably 1Mbps or more back to each participant.
  - Any larger number of participants changes the calculation: in a mesh, each participant’s uplink grows linearly with the group size and the conference total grows roughly with its square, so a centralized server ends up consuming less bandwidth overall.

- Decoding multiple streams
  - There’s this nasty need now to decode multiple video streams
  - I bet there is no mobile chipset today that can decode multiple H.264 video streams in hardware
  - And if there is, then I bet the driver developed and used for it can’t
  - And if there is, then I bet the OS on top of it doesn’t provide access to that level
  - And if there is, then it is probably a single device. The other 100% of the market has no access to such capabilities
  - So we’re down to software video decoding, which is fine, as we are talking VP8 for now
  - But then it requires the browsers to support it. And some of them won’t. Probably most of them won’t

- CPU and rendering
  - There’s a need to deal with the incoming video data from multiple sources, which means aligning the inputs, taking care of the different latencies of the different streams, etc.
  - Non-trivial stuff that can be skipped, but skipping it will affect quality
  - You can assume that the CPU works harder for 4 video streams of 500Kbps than it does for a single video stream of 2Mbps

- Geographic location
  - We are dealing with multiple locations, each of which needs to communicate directly with all the others
  - A cloud-based solution might have multiple locations with faster/better networks between its regional servers
  - This can lead to a better-performing solution even with the additional lag of the server
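The bandwidth numbers above can be reproduced with a few lines of arithmetic. This is a back-of-the-envelope sketch, not a measurement: the 500Kbps per stream and the 1Mbps mixed stream coming back from the MCU are the figures from the bandwidth bullet, and keeping the mixed stream at 1Mbps for 8 participants is an assumption made only for illustration. The function names are hypothetical.

```typescript
// Back-of-the-envelope bandwidth model for an n-party call (sketch only).
// Assumption: every participant sends one video stream of `videoKbps`.

interface Bandwidth {
  uplinkKbps: number;   // what each participant must send
  downlinkKbps: number; // what each participant must receive
  totalKbps: number;    // all media in the conference, each stream counted once
}

// Mesh: every participant sends a copy to, and receives one from, each of the n - 1 others.
function meshBandwidth(n: number, videoKbps: number): Bandwidth {
  const perParticipant = (n - 1) * videoKbps;
  return {
    uplinkKbps: perParticipant,
    downlinkKbps: perParticipant,
    totalKbps: n * perParticipant,
  };
}

// MCU: one stream up to the server, one mixed stream of `mixedKbps` back down.
function mcuBandwidth(n: number, videoKbps: number, mixedKbps: number): Bandwidth {
  return {
    uplinkKbps: videoKbps,
    downlinkKbps: mixedKbps,
    totalKbps: n * (videoKbps + mixedKbps),
  };
}

for (const n of [4, 8]) {
  const mesh = meshBandwidth(n, 500);
  const mcu = mcuBandwidth(n, 500, 1000);
  console.log(`${n} participants: mesh ${mesh.totalKbps}Kbps, MCU ${mcu.totalKbps}Kbps`);
}
// 4 participants: mesh 6000Kbps, MCU 6000Kbps
// 8 participants: mesh 28000Kbps, MCU 12000Kbps
```

At 4 participants the two totals coincide, which is the "same ballpark" above. At 8, the mesh total is more than double the MCU total, and each mesh participant also has to upload 3.5Mbps instead of 500Kbps.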

When I started this post, I actually wanted to show how multipoint can be done on the client side in WebRTC, but now I don’t think that can work that well…

It might get there, but I wouldn’t count on it anytime soon. Prepare for a server side solution…

–

If you know of anyone who does such magic well using WebRTC – send him my way – I REALLY want to understand how he made it happen.

OneKlikStreet.com is a bridge service for WebRTC clients. The server architecture is a video mixing service. We can do a four-party conference at less than 300 kbps today. Do try out the service and let me know your thoughts. If you have any queries regarding our service architecture, send us an email at info@turtleyogi.com

One more company to add to my watch list 🙂 Thanks Rishi!

Hi,

Do you think peer5 can be used to broadcast/stream video and sound from multiple clients, i.e. one-to-many or many-to-many? I’m thinking it would work similarly to how BitTorrent works for file sharing… Right now it’s only possible to have about four participants all using WebRTC to communicate with each other in a video conference.

I don’t think it would fit. Peer-assisted delivery solutions today require adding a bit of latency – a few seconds to tens of seconds – in order to bring any gains. Also, when you want to build a full mesh, you still end up sending and receiving the same amount over the network between clients, which doesn’t solve your initial problem with mesh, which is uplink and CPU.

thanks for the reply!

I figured as much. What I’m trying to do is provide video conferencing/chat for multiple users (maybe dozens) with WebRTC. I was hoping there would be a way to build such a mesh without relying on or relaying all connections through some central server (which brings the costs up significantly).

At this point in time, for dozens of users your only option would be using central servers. Meshes will break due to the cpu and network load on the browsers. The server architecture may vary.

What about services like TokBox, which you mentioned in the next post? They obviously cost money but seem to provide those kinds of services.

thanks again

Well… they do a lot more than multipoint. And yes – TokBox and other WebRTC API Platforms usually have multipoint support.

Hello, do you know of any services or companies that can restream RTMP (RTSP) to WebRTC?

Look at http://flashphoner.com/ they might have what you’re looking for

Thank you! Are you familiar with any alternatives?

Most will shy away from using Flash these days, opting for a WebRTC solution with no Flash unless they must.

The mesh idea is good technically; however, you need to have permission before using someone else’s bandwidth. Remember that not all users will consent to someone using their bandwidth to send content, because the cost of retransmitting the stream is taken up by them. Viewing content is one thing; being part of its distribution is a completely different issue. If I can send another person a stream that is resent from that node, I could just as easily send you two streams. The first won’t get you into trouble, but the second one sure will.

Michael,

I think you misunderstood the mesh here. The idea is that every participant in the call sends his own media to all other participants, creating a mesh.

Are there any tutorials on how to implement this?

Avishka,

Most open source signaling frameworks for WebRTC that support multiparty without a media server will end up using mesh. That said, I have yet to see a good mesh solution that actually works and is battle tested – most are just proofs of concept. If you ask me, you should focus on using a media server for your use case – whatever it may be.

My idea: on the caller’s computer (desktop only, no mobile), use a canvas to render the group’s videos, then captureStream() from it into a single stream source and send that to each peer. That way we save a lot of peer connections and bandwidth.

Good luck with that approach… it probably won’t get you far.

Here are some challenges with this approach:

* Uplink bitrate is something you don’t control. The larger the group, the more diverse the users will be, and the harder it will be to decide on a single bitrate to send out (=impossible)

* Encoding from a canvas comes with its own performance challenges. This definitely isn’t the main focus of Google in Chrome and most definitely not in other browsers

* You probably don’t control the performance envelope of the machines, so selecting who is the mixer is going to be challenging to impossible as well