Discover the hidden dangers of packet loss and its impact on your WebRTC application. Find out how to optimize your network performance and minimize packet loss.

If there’s one thing that can give you better media quality in WebRTC it is going to be the reduction (or elimination?) of packet loss. Nothing else will be as effective as this.

What I want to do here is explain packet loss, why it is inevitable, and the many ways we have at our disposal to increase the resilience and quality of our media in WebRTC in the face of packet losses.

Why do we have packet loss in WebRTC?

There are many reasons for packet losses to occur on modern networks and with WebRTC. To name a few:

- Wireless and cellular networks may suffer due to the distance between the device and the access point, as well as other obstructions (physical or just aerial interference)

- Routers and switches can get congested, causing delays as well as dropped packets

- Ethernet cables can be faulty at times

- Connections between switches are not always as clean as they could be

- Media servers not doing their job correctly or just getting overtaxed with traffic

- Entropy. The more we miniaturize and condense things, the more entropy will kick in (I added this one just to sound smart)

- Devices might not be faring too well at times either

We think of the internet as a reliable network. You direct a browser to a web page. And magically the page loads. If it doesn’t, then the network or server is down. End of story. That’s because packet losses there are handled by retransmitting what is lost. The cost? You wait a wee bit longer for your page to load.

With WebRTC we are dealing with real time communications. So if something gets lost there is little time to fix that.

Packet losses are a huge headache for WebRTC applications

What to do to overcome packet losses?

Packet loss is an inevitability when it comes to WebRTC and VoIP in general. You can’t really avoid it. The question then becomes what can we do about this?

There are four different approaches here that can be combined for a better user experience:

- Have less packet losses – the fewer of these we have, the better the user experience will be

- Conceal packet losses (PLC) – once we have packet losses, we need to try and figure out what to do to conceal that fact from the user

- Retransmit lost packets (RTX) – we might want to try and retransmit what was lost, assuming there’s enough time for it

- Correct packet losses in advance (FEC) – when we know there’s high probability of packet losses, we might want to send packets more than once or add some error correction mechanism to deal with the potential packet losses

From here on, let’s review each one of these four approaches.

Have less packet losses

This is the most important solution.

Because I don’t want you to miss this, I’ll write this again:

This is the most important solution.

If there is less packet loss, there is going to be less headache to deal with when trying to “fix” this situation. So reducing packet loss should be your primary objective. Since you can’t fully eradicate packet loss, we will still need to use other techniques. But it starts with reducing the amount of packet losses.

Location of infrastructure elements in WebRTC

Where you place your media servers and TURN servers and how you route traffic for your WebRTC service will have a huge impact on packet loss.

Best practice today is having the first server that WebRTC media hits as close to the user as possible. The understanding behind that is that this reduces the number of hops and network infrastructure components that the media packets need to traverse over the open internet. Once on your server, you have a lot more control over how that data gets processed and forwarded between the servers.

Having a single data center in the US cater for all your traffic is great, assuming your users are from that region. Once users start joining from across the pond (say, France or India), you will start seeing higher latencies and with them higher levels of packet loss.

A few things here:

- Where you place your servers highly depends on your users and their behavior

- TURN servers are important to spread globally, but at the end of the day, check how much of your actual traffic gets relayed through TURN servers

- Media servers are something I’d try to spread globally more, assuming these are needed in all meetings. I’d also focus on cascaded/distributed architectures where users join the closest media server (versus allocating a specific server for all users in the same meeting)

Where to start?

- Know the latency (RTT) of your users. Monitor it. Strive towards improving it. Learn more about reducing latency

- Check if there are locations and users that are routed across regions. Beef up your infrastructure in the relevant regions based on this data

- Since we want to reduce packet loss, you should also monitor… packet loss
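To make the monitoring part concrete, here is a minimal sketch of how a loss percentage can be derived from two consecutive statistics snapshots. It assumes cumulative counters shaped like the `packetsReceived` and `packetsLost` fields that WebRTC's `getStats()` reports for inbound RTP streams; the `InboundSnapshot` class and the `loss_fraction` helper are names made up for this illustration.

```python
from dataclasses import dataclass


@dataclass
class InboundSnapshot:
    """Cumulative counters, shaped like inbound-rtp stats (hypothetical container)."""
    packets_received: int
    packets_lost: int


def loss_fraction(prev: InboundSnapshot, curr: InboundSnapshot) -> float:
    """Fraction of packets lost between two snapshots (0.0 if nothing was expected)."""
    lost = curr.packets_lost - prev.packets_lost
    received = curr.packets_received - prev.packets_received
    expected = lost + received
    if expected <= 0:
        return 0.0
    # The cumulative lost counter can go down when late or duplicate
    # packets show up, so clamp the interval value at zero.
    return max(lost, 0) / expected


prev = InboundSnapshot(packets_received=1000, packets_lost=10)
curr = InboundSnapshot(packets_received=1480, packets_lost=30)
print(f"loss over interval: {loss_fraction(prev, curr):.1%}")
```

Sampling this every second or so, per user and per region, is usually enough to see where the problem areas are.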

Better bandwidth estimation

I should have called this better bandwidth management, but for SEO reasons, kept it bandwidth estimation

Here’s the thing:

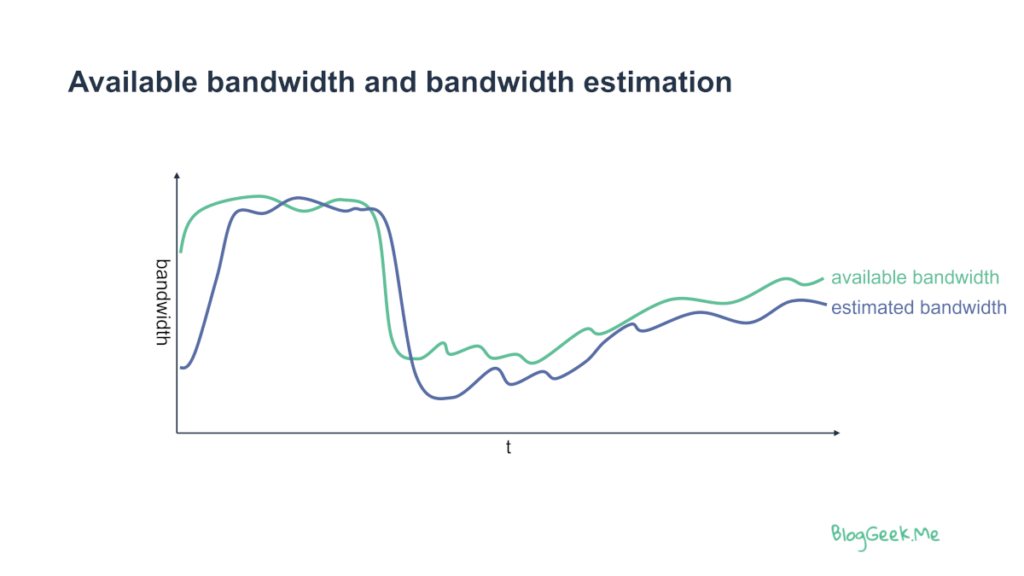

Sending more than the network can handle, more than the sender can send, or more than the receiver can receive leads to packet loss and packet drops.

Fixing that boils down to bandwidth management – you don’t want to send too little since media quality will be lower than what you can achieve. And you don’t want to send too much since… well… packet loss.

Your service needs to be able to estimate bandwidth. That needs to happen on both the uplink and the downlink for each user.

The challenge is that available bandwidth is dynamic in nature. At each point in time, we need to estimate it. If we overshoot – packets are going to be delayed or lost. If we undershoot, we are going to reduce media quality below what we can achieve.
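A toy version of that overshoot/undershoot balancing act could look like the sketch below. It is loosely modeled on the loss-based controller described in the Google Congestion Control IETF draft (back off above roughly 10% loss, probe upwards below roughly 2%). Real implementations also use delay signals, and the function name and the clamping limits here are assumptions for illustration only.

```python
def update_estimate(current_bps: float, loss: float,
                    min_bps: float = 30_000, max_bps: float = 2_500_000) -> float:
    """Return a new send-rate estimate given the loss fraction of the last interval."""
    if loss > 0.10:
        # Heavy loss: we are overshooting, back off in proportion to the loss.
        new_bps = current_bps * (1 - 0.5 * loss)
    elif loss < 0.02:
        # Almost no loss: we may be undershooting, probe a little higher.
        new_bps = current_bps * 1.05
    else:
        # In between: hold the current rate.
        new_bps = current_bps
    return min(max(new_bps, min_bps), max_bps)


rate = 1_000_000
for observed_loss in (0.0, 0.0, 0.15, 0.05):
    rate = update_estimate(rate, observed_loss)
    print(f"loss={observed_loss:.0%} -> estimate={rate:,.0f} bps")
```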

Web browser implementations of WebRTC have their own bandwidth management algorithms and they are rather good. Media servers have different implementations and their quality varies.

For media servers, we also need to remember that we aren’t dealing only with bandwidth estimation but rather with bandwidth management. Once we approximately know the available bandwidth, we need to decide which of the streams to send over the connection and at which bitrates; doing that while seeing the bigger picture of the session (hence bandwidth management and not estimation).
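Here is a rough sketch of what that management decision can look like on a media server, assuming simulcast-style streams that each offer a few fixed bitrates. The greedy two-pass policy, the `allocate_layers` name, and the example bitrates are all assumptions for illustration, not how any specific media server does it.

```python
from typing import Dict, List, Optional


def allocate_layers(budget_bps: int,
                    streams: Dict[str, List[int]]) -> Dict[str, Optional[int]]:
    """Pick a bitrate per stream so the total fits the estimated bandwidth.

    streams maps a name to its available layer bitrates in ascending order;
    dict order is treated as priority order. None means the stream is paused.
    """
    chosen: Dict[str, Optional[int]] = {}
    remaining = budget_bps
    # Pass 1: try to give every stream its lowest layer so nobody disappears first.
    for name, layers in streams.items():
        if layers and layers[0] <= remaining:
            chosen[name] = layers[0]
            remaining -= layers[0]
        else:
            chosen[name] = None
    # Pass 2: upgrade streams in priority order while budget remains.
    for name, layers in streams.items():
        current = chosen[name]
        if current is None:
            continue
        for bitrate in layers[1:]:
            extra = bitrate - current
            if extra > remaining:
                break
            remaining -= extra
            current = bitrate
        chosen[name] = current
    return chosen


streams = {
    "speaker": [150_000, 500_000, 1_500_000],
    "alice": [150_000, 500_000],
    "bob": [150_000, 500_000],
}
print(allocate_layers(1_200_000, streams))
```

The point is less the specific policy and more that the decision is made per receiver, with the whole session in view.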

Conceal packet losses (PLC)

Packet loss concealment is what we do after the fact. We lost packets, but we need to play out something for the user. What should we do to conceal the problem of packet loss?

This may seem like the last thing to deal with, but it is the first we need to tackle. There are two reasons why:

- No matter what kind of techniques and resiliency mechanisms you use, at the end of the day, some level of packet loss is bound to occur

- Other techniques we have are more sophisticated. Usually we will get to implement them later on. We NEED to have a rock solid concealment strategy before adding more techniques

Audio and video are different, which is why from here on, we will distinguish between the two in the techniques we are going to use.

Audio and packet loss concealment

With audio, a loss of an audio packet almost always translates immediately to a loss of one or more audio frames (and we usually have 50 audio frames per second).

“Skipping” them doesn’t work so well, as it leads to robotic audio when there’s packet loss.

Other naive approaches here include things like playing back the last frame received – either as is or with a reduction in its volume.
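The naive approach is simple enough to sketch in a few lines. This is only an illustration of the idea (frames as lists of float samples, `None` marking a lost frame), not what any browser actually ships; the `conceal` name and the 0.5 attenuation factor are assumptions.

```python
from typing import List, Optional


def conceal(frames: List[Optional[List[float]]], frame_size: int,
            attenuation: float = 0.5) -> List[List[float]]:
    """Replace lost frames (None) with a quieter copy of the last played frame."""
    played: List[List[float]] = []
    last: Optional[List[float]] = None
    for frame in frames:
        if frame is None:
            if last is None:
                # Nothing to repeat yet, so play silence.
                frame = [0.0] * frame_size
            else:
                # Repeat the previous frame at reduced volume. Consecutive
                # losses keep fading because `last` is already attenuated.
                frame = [sample * attenuation for sample in last]
        played.append(frame)
        last = frame
    return played


print(conceal([[1.0, -1.0], None, None, [0.2, 0.2]], frame_size=2))
```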

More sophisticated approaches try to estimate what should have been received by way of machine learning (or what we love calling it these days – generative AI). Google has such a capability in-house (though not inside the open source implementation of WebRTC that they have). If you are interested in learning more about this, you can check out Google’s explanation of WaveNetEQ.

A few things to remember here:

- For the most part, this isn’t something in your control, unless you own/compile your WebRTC stack on the device side

- Knowing how browsers behave here enables you to be slightly smarter with the other techniques you are going to use (by deciding when to use them and how aggressively)

- In your own native application? You can improve on things, but you need to know what you’re doing and you need to have a compelling reason to take this route

Video and packet loss concealment: frame dropping

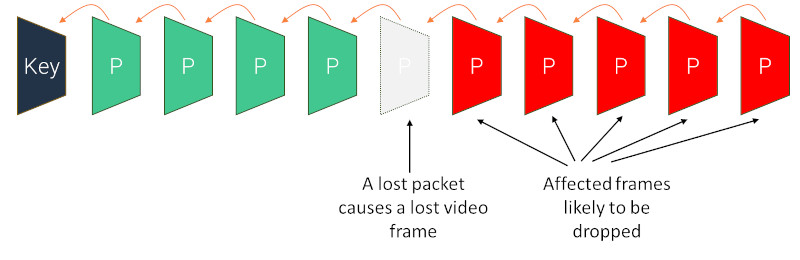

Video is trickier with packet losses:

- With video coding, each frame is usually dependent on past frames (to improve upon compression rates)

- A video frame is almost always composed of multiple packets

One lost packet translates into a lost frame, which can easily cause the loss of the whole video sequence that follows it.

Packet loss concealment in video means dropping a frame, and oftentimes freezing the video until the next keyframe arrives.

What can the receiver do in case of such a loss? If it believes it won’t recover quickly (which is most commonly the case), it can send out a FIR or PLI message over RTCP to the sender. These messages indicate to the sender that there’s a loss that needs to be addressed, where the usual solution is to reset the encoder and send a new keyframe.

In the past, systems used to try and overcome packet losses by continuing to decode without the missing packets. The end result was smearing artifacts on the video until a new keyframe arrived. Today, best practice is to freeze the video until a keyframe arrives (which is what all browser implementations do).
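The freeze-until-keyframe behavior can be sketched as a tiny state machine. This is a simplification under my own assumptions (a `Frame` record with a `complete` flag, one keyframe request per freeze, no timeouts or re-requests), not the actual browser logic.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Frame:
    frame_id: int
    is_keyframe: bool
    complete: bool  # False when at least one packet of the frame was lost


def play(frames: List[Frame]) -> Tuple[List[int], int]:
    """Return (ids of rendered frames, number of keyframe requests sent)."""
    rendered: List[int] = []
    keyframe_requests = 0
    frozen = False
    for frame in frames:
        if not frame.complete:
            if not frozen:
                keyframe_requests += 1  # this is where a PLI would go out
            frozen = True
            continue
        if frozen and not frame.is_keyframe:
            # Depends on a frame we never decoded: keep the video frozen.
            continue
        frozen = False
        rendered.append(frame.frame_id)
    return rendered, keyframe_requests


sequence = [
    Frame(1, True, True), Frame(2, False, True), Frame(3, False, False),
    Frame(4, False, True), Frame(5, False, True), Frame(6, True, True),
    Frame(7, False, True),
]
print(play(sequence))
```

Frames 4 and 5 arrive intact but are still dropped, which is exactly the freeze the user sees.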

A few things to remember here:

- You have more control here than in audio. That’s because a lost packet means you will receive a FIR or PLI message on the other end. If that’s your media server receiving these messages, you can decide how to respond

- Sending a keyframe means investing more bitrate in that frame. If there’s congestion over the network, then this will just add to the burden. Most media servers would avoid sending too many of these in larger group meetings

- There are video coding techniques that reduce the dependencies between frames. These include temporal scalability and SVC
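One common way for a media server to avoid a keyframe storm is to rate-limit how often it passes keyframe requests on to the sender. A minimal sketch, assuming a per-sender throttle with a fixed minimum interval (the class name and the one second default are my assumptions):

```python
from typing import Optional


class KeyframeThrottle:
    """Forward at most one keyframe request per `min_interval_s` seconds."""

    def __init__(self, min_interval_s: float = 1.0) -> None:
        self.min_interval_s = min_interval_s
        self._last_forwarded_s: Optional[float] = None

    def should_forward(self, now_s: float) -> bool:
        """Call on every incoming PLI/FIR; True means pass it on to the sender."""
        if (self._last_forwarded_s is not None
                and now_s - self._last_forwarded_s < self.min_interval_s):
            # A keyframe was requested very recently; it will serve this receiver too.
            return False
        self._last_forwarded_s = now_s
        return True


throttle = KeyframeThrottle(min_interval_s=1.0)
for t in (0.0, 0.2, 0.9, 1.0, 1.5, 2.1):
    print(t, throttle.should_forward(t))
```

In a large meeting, many receivers tend to lose packets at about the same time, so one keyframe often answers several requests.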

Retransmitting lost packets (RTX)

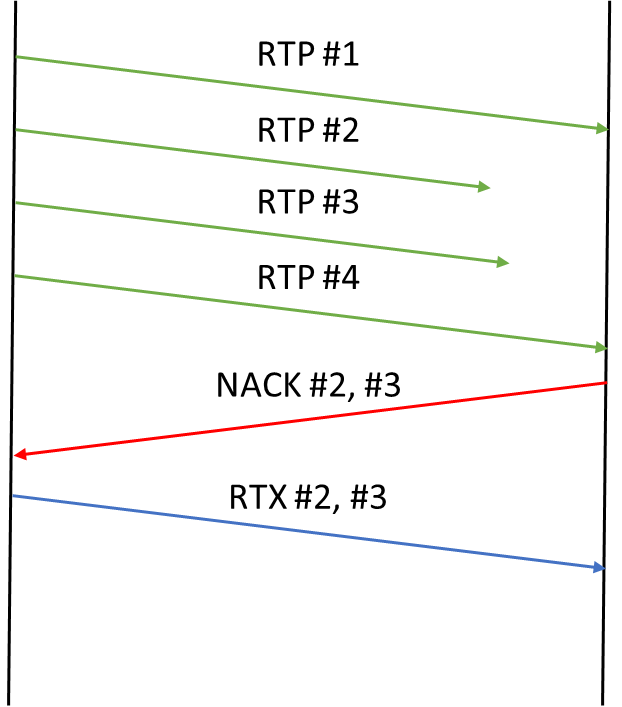

If a packet is missing, then the first solution we can go for is to retransmit it.

The receiver knows what packets it is missing. Once the sender knows about the missing packets (via NACK messages), it can resend them as RTX packets.

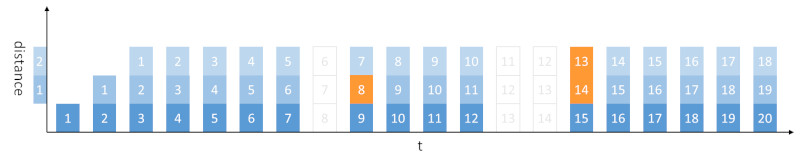

Retransmission is the most economical solution in terms of network resources. It is the least wasteful solution. It is also the hardest to make use of. That’s because of the sequence of steps it requires.

In order to retransmit, we need to:

- Know there are missing packets (by receiving a newer packet)

- Decide that the older ones won’t be arriving and are lost

- Let the sender know they are lost

- Have the sender retransmit them

This takes time. A long time.

The question then becomes: is it going to be too late to retransmit them?
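The first step and the "too late" question can be sketched like this. It assumes 16-bit RTP sequence numbers and a receiver that knows roughly how long it has until the frame must be played; the function names and the 10 ms safety margin are assumptions for illustration.

```python
from typing import List


def missing_sequence_numbers(last_seq: int, new_seq: int) -> List[int]:
    """Sequence numbers skipped between the last packet seen and a newer one.

    Handles the 16-bit wrap-around of RTP sequence numbers.
    """
    gap = (new_seq - last_seq) & 0xFFFF
    if gap == 0 or gap > 0x8000:
        return []  # duplicate, or an old/reordered packet rather than a jump ahead
    return [(last_seq + i) & 0xFFFF for i in range(1, gap)]


def worth_requesting(rtt_ms: float, time_until_playout_ms: float,
                     margin_ms: float = 10.0) -> bool:
    """A NACK plus the retransmission costs roughly one round trip."""
    return rtt_ms + margin_ms <= time_until_playout_ms


print(missing_sequence_numbers(100, 104))
print(missing_sequence_numbers(65534, 1))
print(worth_requesting(rtt_ms=40, time_until_playout_ms=120))
print(worth_requesting(rtt_ms=200, time_until_playout_ms=120))
```

With a 40 ms round trip there is room to retransmit; at 200 ms the packet would show up after its playout time.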

Video and RTX

Video can make real use of retransmissions (and it does in WebRTC).

With video compression, we have a kind of hierarchy of frames. Some frames are more important than others:

- Keyframes (or I-frames) are the most important. They are “standalone” frames that aren’t reliant on any past frames

- In SVC and temporal scalability, some frames are a kind of a dead-end, with nothing reliant on them, while others have frames reliant on them

The above illustration, for example, shows how keyframes and temporal scalability build dependency chains. Key denotes the keyframe while L0 has higher usability than L1 frames (L1 frames are dependent on L0 frames and nothing depends on them).

When we have such a dependency tree of frames, we can do some interesting things with resiliency. One of them is deciding if it is worthwhile to ask for a retransmission:

- If the missing packets are from a keyframe, then asking for a retransmission is useful even if the keyframe itself won’t be displayed due to the time that passed

- Similarly, we can decide to do this for L0 frames (these being quite important)

- And we can just skip packets of L1 frames that are lost – we might not have time to playback this frame once the retransmission arrives, and that data will be useless anyway
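The three rules above boil down to a small filter over the list of lost packets. A minimal sketch, assuming the receiver already knows which kind of frame each lost packet belongs to (the `FrameKind` enum and `packets_to_nack` are hypothetical names):

```python
from enum import Enum
from typing import List, Tuple


class FrameKind(Enum):
    KEY = "key"  # keyframe, everything after it depends on it
    L0 = "l0"    # base temporal layer, other frames depend on it
    L1 = "l1"    # top temporal layer, nothing depends on it


def packets_to_nack(lost: List[Tuple[int, FrameKind]]) -> List[int]:
    """Keep only the lost sequence numbers that are worth a retransmission."""
    return [seq for seq, kind in lost if kind is not FrameKind.L1]


lost_packets = [(501, FrameKind.KEY), (517, FrameKind.L1),
                (520, FrameKind.L0), (523, FrameKind.L1)]
print(packets_to_nack(lost_packets))
```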

Audio and RTX

Audio compression doesn’t enjoy the same dependency tree that video compression does. Which is why libwebrtc doesn’t have code to deal with audio RTX.

Would having RTX for audio be useful? It can. Audio packets usually wait for video packets to arrive for lip synchronization purposes. If we can use that wait time to retransmit, then we can improve upon audio quality. Google likely deemed this not important enough.

Correct packet losses in advance (FEC)

We could ask for a retransmission after the fact, but what about making sure there’s no need? This is what FEC (Forward Error Correction) is all about.

Think of it this way – if we had one shot at what we want to send and it was super important – would it make sense to send 100 copies of it, knowing that the chances that one of these copies would reach its destination is high?

FEC is about sending more packets that can be used to reconstruct or replace lost packets.

There are different FEC schemes that can be used, with the main 3 of them being:

- Duplication (send the same thing over and over again)

- XOR (add packets that XOR the ones we wish to protect)

- Reed Solomon (similar to XOR just more complex and more resilient)

WebRTC supports duplication and XOR out of the box.

The biggest hurdle of FEC is its use of bitrate – it is quite network hungry in that regard.
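To show why XOR works as a recovery mechanism, here is a minimal sketch: one parity packet protects a group of media packets, and any single lost packet in that group can be rebuilt. This is only the core idea. Real RTP FEC formats also protect header fields and carry the length of the protected packets, whereas here the length of the lost packet is passed in by hand, which is an assumption to keep the example short.

```python
from typing import List


def xor_parity(packets: List[bytes]) -> bytes:
    """XOR all packets together, padding shorter ones with zeros."""
    parity = bytearray(max(len(p) for p in packets))
    for packet in packets:
        for i, byte in enumerate(packet):
            parity[i] ^= byte
    return bytes(parity)


def recover(received: List[bytes], parity: bytes, lost_length: int) -> bytes:
    """Rebuild the single missing packet from the ones that arrived plus the parity."""
    return xor_parity(received + [parity])[:lost_length]


group = [b"hello", b"webrtc", b"fec"]
parity = xor_parity(group)

# Pretend the second packet was lost in transit.
rebuilt = recover([group[0], group[2]], parity, lost_length=6)
print(rebuilt)
```

Three media packets plus one parity packet is 33% more packets on the wire, which is the bitrate cost in a nutshell.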

Audio FEC

Audio FEC comes in two different flavors:

- In-codec FEC (such as Opus in-band FEC), where the FEC mechanism is part of the codec implementation itself

- RTP-based FEC, where the FEC mechanism is part of the RTP protocol

In-band FEC is implemented as part of the Opus codec library. It is ok’ish at best – nothing to write home about.

Then there’s RED – Redundancy Encoding – where each audio packet holds more than a single audio frame. And the ones it holds are just slightly older frames, so that if a packet is lost, we get it in another packet.

RED is implemented in libwebrtc. Support is limited to 1 level of redundancy for RED (meaning recovering up to one sequential lost packet). You can use WebRTC’s Insertable Streams mechanism to generate RED packets at higher redundancy or dynamic redundancy in the browser though.
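The redundancy idea itself is easy to sketch. The code below is not the RED wire format and not libwebrtc's implementation. It only models packets as lists of (frame index, frame) pairs to show what one or two levels of redundancy buy you; the function names are made up.

```python
from typing import List, Optional, Tuple

Packet = List[Tuple[int, str]]


def red_packetize(frames: List[str], redundancy: int = 1) -> List[Packet]:
    """Packet i carries frame i plus up to `redundancy` older frames."""
    packets: List[Packet] = []
    for i in range(len(frames)):
        start = max(0, i - redundancy)
        packets.append([(j, frames[j]) for j in range(start, i + 1)])
    return packets


def red_depacketize(packets: List[Optional[Packet]],
                    frame_count: int) -> List[Optional[str]]:
    """Collect every frame found in any packet that arrived (None = lost packet)."""
    frames: List[Optional[str]] = [None] * frame_count
    for packet in packets:
        if packet is None:
            continue
        for index, frame in packet:
            frames[index] = frame
    return frames


frames = ["f0", "f1", "f2", "f3", "f4"]

one_level = red_packetize(frames, redundancy=1)
one_level[2] = None  # a single lost packet is fully recovered
print(red_depacketize(one_level, len(frames)))

one_level[3] = None  # two lost packets in a row: f2 is gone
print(red_depacketize(one_level, len(frames)))

two_levels = red_packetize(frames, redundancy=2)
two_levels[2] = two_levels[3] = None  # higher redundancy survives the burst
print(red_depacketize(two_levels, len(frames)))
```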

In the above, Philipp Hancke explains RED (along with other resiliency features for audio in WebRTC).

Video FEC

FEC for video is considered wasteful. If we need to increase bitrate by 20% or more to introduce robustness using FEC, then it comes at a cost of video quality that we could increase by using higher video bitrate.

For the most part, WebRTC ignores FEC for video, which is a shame. When using temporal scalability or SVC, the same way that we can decide to retransmit only important packets, we can also decide to add FEC protection only to more important frames.
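A quick back-of-the-envelope calculation shows why selective protection is attractive. The 60/40 split of bitrate between L0 and L1 below is a made-up number for illustration, as is the helper name; the 20% FEC rate is the figure mentioned above.

```python
from typing import Dict, Set


def fec_overhead(layer_share: Dict[str, float], protected: Set[str],
                 fec_rate: float) -> float:
    """Extra bitrate, as a fraction of the media bitrate, when only some layers get FEC."""
    protected_share = sum(share for layer, share in layer_share.items()
                          if layer in protected)
    return protected_share * fec_rate


shares = {"L0": 0.6, "L1": 0.4}  # hypothetical split of the video bitrate
print(f"protect everything: {fec_overhead(shares, {'L0', 'L1'}, 0.20):.0%}")
print(f"protect L0 only:    {fec_overhead(shares, {'L0'}, 0.20):.0%}")
```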

Wrapping it all up

Dealing with packet loss in WebRTC isn’t a simple task. It gets more complex over time, as more techniques and optimizations are bolted on to the implementation. What I want to do here is to list the various tools at our disposal to deal with packet losses. When and how we decide to use them would determine the resulting robustness and media quality of the implementation.

Here’s a quick table to sum things up a bit:

| | PLC | RTX | FEC |
| --- | --- | --- | --- |
| Focus | What to playback to the user | When to ask for missing packets | When to send duplicated packets |
| Advantages | None. You must have this logic implemented | Low network footprint | Low latency overhead |
| Challenges | Audio may sound robotic; video will freeze | Increases latency. Might not be usable due to it | High network footprint. Can be quite wasteful |
| Audio | Duplicate last frames or reduce volume; use Gen AI to estimate what was lost | Not commonly used for audio in WebRTC | FlexFEC used by WebRTC; can use RED if you want to |
| Video | Skip video frames; ask for a fresh keyframe to reset the video stream | Can be optimized to retransmit packets of important frames only | Not commonly used for video in WebRTC |

Oh – and make sure you first put in the effort to reduce the amount of packet losses before starting to deal with how to overcome packet losses that occur…

Learn more about WebRTC (and everything about it)

Packet loss is one of the topics you need to deal with when writing WebRTC applications. There are many aspects affecting media quality – packet loss is but one of them. This time, we looked into the tools available in WebRTC for dealing with packet losses.

And if what you want is to test, monitor, optimize and improve the performance of your WebRTC application, then I’d suggest checking out testRTC.