At Google I/O 2019, the advances Google made in AI and machine learning were put to use for improving privacy and accessibility.

I’ve attended Google I/O in person only once. It was in 2014. I’ve been following this event from afar ever since, making it a point to watch the keynote each year, trying to figure out where Google is headed – and how that will affect the industry.

This weekend I spent some time going over the Google I/O 2019 keynote. If you haven’t seen it, you can watch it over on YouTube – I’ve embedded it here as well.

The main theme of Google I/O 2019

Here’s how I ended my review of Google I/O 2018:

Where are we headed?

That’s the big question I guess.

More machine learning and AI. Expect Google I/O 2019 to be on the same theme.

If you don’t have it in your roadmap, time to see how to fit it in.

In many ways, this can easily be the end of this article as well – the tl;dr version.

Google got to the heart of their keynote only at around the 36-minute mark. Sundar Pichai, CEO of Google, talked about the “For Everyone” theme of this event and where Google is headed. For Everyone – not only for the rich (Apple?) or the people in developed countries, but For Everyone.

The first thing he talked about in this For Everyone context? AI:

From there, everything Google does is about how the AI research work and breakthroughs that they are doing at their scale can fit into the direction they want to take.

This year, that direction was defined by the words privacy, security and accessibility.

Privacy because they are being scrutinized over their data collection, which is directly linked to their business model. But more so because of a recent breakthrough that enables them to run accurate speech to text on devices (more on that later).

Security because of the growing number of hacking and malware attacks we hear about all the time. But more so because the work Google has put into Android from all aspects is placing them ahead of the competition (think Apple) based on third party reports (Gartner in this case).

Interestingly, Apple is attacking Google around both privacy and security.

Accessibility because that’s the next billion users. The bigger market. The way to grow by reaching ever larger audiences. But also because it fits well with that breakthrough in speech to text and with machine learning as a whole. And somewhat because of diversity and inclusion, which are big words and concepts in tech and Silicon Valley these days (and you need to appease the crowds and your own employees). And also because it films well and it really does benefit the world and people – though that’s secondary for companies.

The big reveal for me at Google I/O 2019? Definitely its advances in speech analytics by getting speech to text minimized enough to fit into a mobile device. It was the main pillar of this show and for things to come in the future if you ask me.

A lot of the AI innovations Google is talking about are around real time communications. Check out the recent report I’ve written with Chad Hart on the subject:

Event Timeline

I wanted to understand what is important to Google this year, so I took a rough timeline of the event, breaking it down into the minutes spent on each topic. In each and every topic discussed, machine learning and AI were apparent.

| Time spent | Topic |
| --- | --- |
| 10 min | Search; introduction of new feature(s) |
| 8 min | Google Lens; introduction of new feature(s) – related to speech to text |
| 16 min | Google assistant (Duplex on the web, assistant, driving mode) |
| 19 min | For Everyone (AI, bias, privacy+security, accessibility) |
| 14 min | Android Q enhancements and innovations (software) |
| 9 min | Nest (home) |
| 9 min | Pixel (smartphone hardware) |
| 16 min | Google AI |

Let’s put this in perspective: out of roughly 100 minutes, 51 were spent directly on AI (assistant, for everyone and AI) and the rest of the time was spent on… AI, though indirectly.
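For anyone who wants to check the math, here is a small sketch that adds up the rough timeline from the table. The shortened topic labels and the choice of which segments count as "directly on AI" are mine, taken from the paragraph above.

```python
# Rough keynote timeline, in minutes, copied from the table above.
timeline = {
    "Search": 10,
    "Google Lens": 8,
    "Google assistant": 16,
    "For Everyone": 19,
    "Android Q": 14,
    "Nest (home)": 9,
    "Pixel": 9,
    "Google AI": 16,
}

# Segments this article counts as being directly about AI.
direct_ai = ["Google assistant", "For Everyone", "Google AI"]

total_minutes = sum(timeline.values())
direct_ai_minutes = sum(timeline[topic] for topic in direct_ai)
direct_ai_share = direct_ai_minutes / total_minutes

print(f"Total: {total_minutes} min")
print(f"Directly on AI: {direct_ai_minutes} min ({direct_ai_share:.0%})")
```

The segments add up to 101 minutes, which is where the "roughly 100" comes from, and about half of that time went directly to AI.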

Watching the event, I must say it got me thinking of my time at the university. I had a neighbor at the dorms who was a professional juggler. Maybe not professional, but he did get paid for juggling from time to time. He was able to juggle 5 torches or clubs, 5 apples (while eating one) and anywhere between 7-11 balls (I didn’t keep track).

One evening he comes storming into our room, asking us all to watch a new trick he was working on and just perfected. We all looked. And found it boring. Not because it wasn’t hard or impressive, but because we all knew that this was most definitely within his comfort zone and the things he can do. Funny thing is – he visited us here in Israel a few weeks back. My wife asked him if he juggles anymore. He said a bit, and said his kids aren’t impressed. How could they when it is obvious to them that he can?

Anyways, there’s no wow factor in what Google is doing with machine learning anymore. It is obvious that each year, in every Google I/O event, some new innovation around this topic will be introduced.

This time, it was all about voice and text.

Time to dive into what went on at the Google I/O 2019 keynote.

Speech to text on device

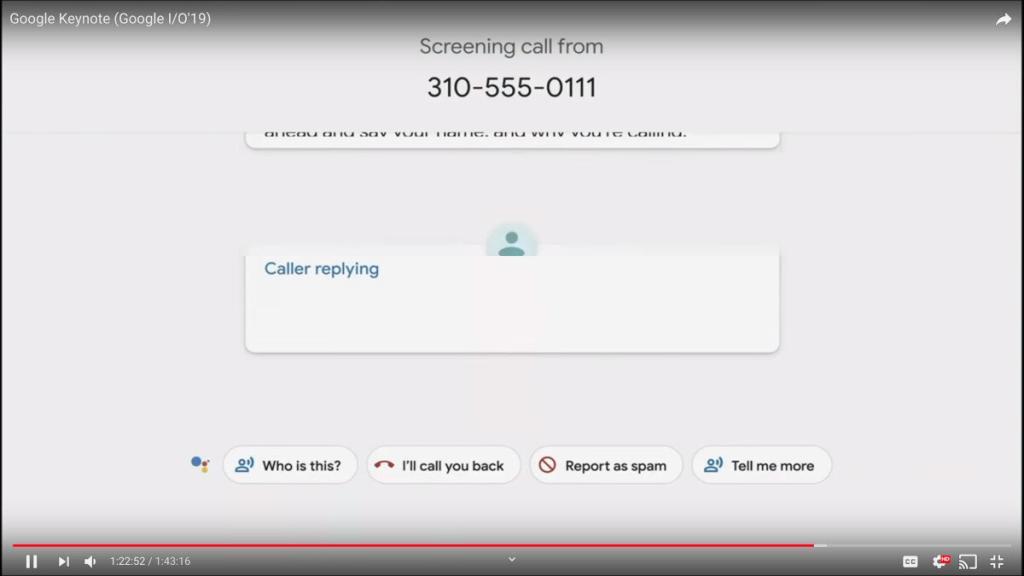

We had a glimpse of this piece of technology late last year when Google introduced call screening to its Pixel 3 devices. This capability allows people to let the Pixel answer calls on their behalf, see what people are saying using live transcription and decide how to act.

This was all done on device. At Google I/O 2019, this technology was just added across the board on Android 10 to anything and everything.

On stage, the explanation given was that the model used for speech to text in the cloud is 2.5 GB in size, and Google was able to squeeze it down to 80 MB, which meant being able to run it on devices. It was not indicated if this is for any language other than English, which probably means this is an English only capability for now.

What does Google gain from this capability?

- Faster speech to text. There’s no need to send audio to the cloud and get text back from it

- Ability to run it with no network or with poor network conditions

- Privacy of what’s being said

For now, Google will be rolling this out to Android devices and not just Google Pixel devices. No mention of if or when this gets to iOS devices.

What have they done with it?

- Made the Google assistant more responsive (due to faster speech to text)

- Created system-wide automatic captioning for everything that runs on Android. Anywhere, on any app

Search

The origins of Google came from Search, and Google decided to start the keynote with search.

Nothing super interesting there in the announcements made, besides the continuous improvements. What was showcased was news and podcasts.

How Google decided to handle Fake News and news coverage is now coming to search directly. Podcasts are now made searchable and better accessible directly from search.

Other than that?

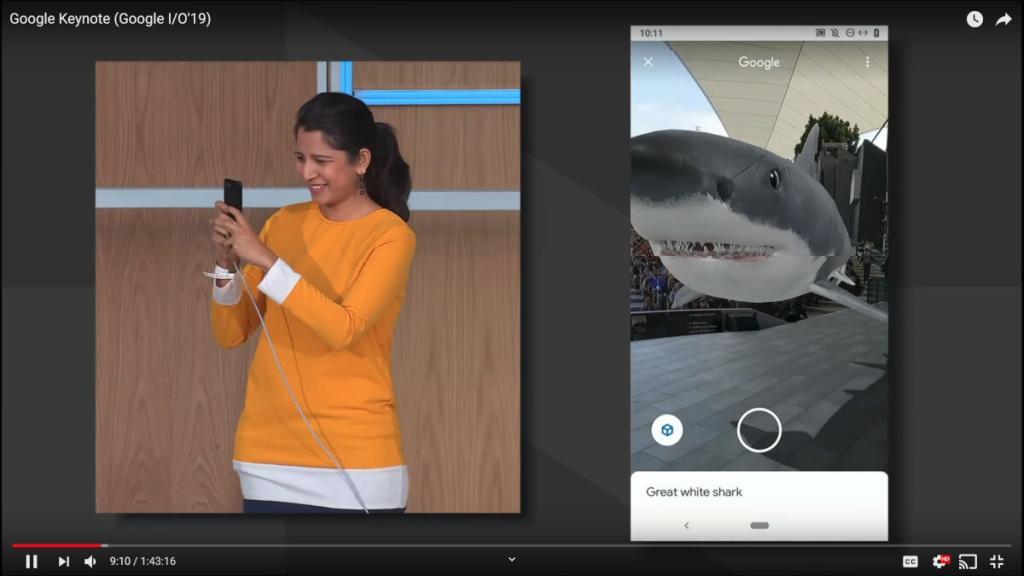

A new shiny object – the ability to show 3D models in search results and in augmented reality.

Nice, but not earth shattering. At least not yet.

Google Lens

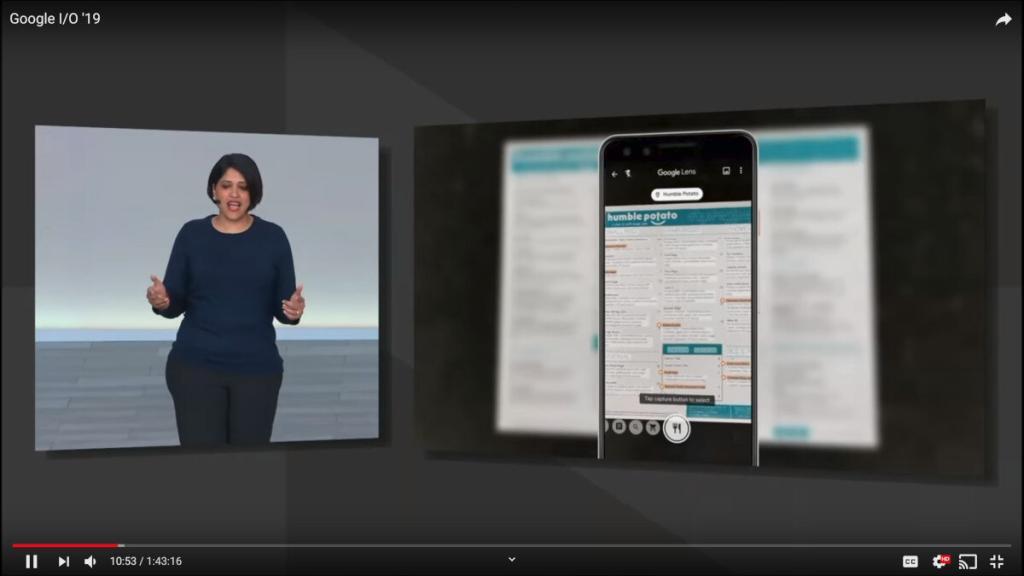

After Search, Google Lens was showcased.

The main theme around it? The ability to capture text in real time on images and do stuff with it. Usually either text to speech or translation.

In the screenshot above, Google Lens marks the recommended dishes off a menu. While nice, this probably requires each and every such feature to be baked into Lens, much like new actions need to be baked into the Google Assistant (or skills in Amazon Alexa).

This falls nicely into the For Everyone / Accessibility theme of the keynote. Aparna Chennapragada, Head of Product for Lens, had the following to say (after an emotional video of a woman who can’t read using the new Lens):

“The power to read is the power to buy a train ticket. To shop in a store. To follow the news. It is the power to get things done. So we want to make this feature to be as accessible to as many people as possible, so it already works in a dozen of languages.”

It actually is. People can’t really be part of our world without the power to read.

It is also the only announcement I remember in which the number of languages covered was mentioned (which is why I believe speech to text on device is English only).

Google made the case here and in almost every part of the keynote in favor of using AI for the greater good – for accessibility and inclusion.

Google assistant

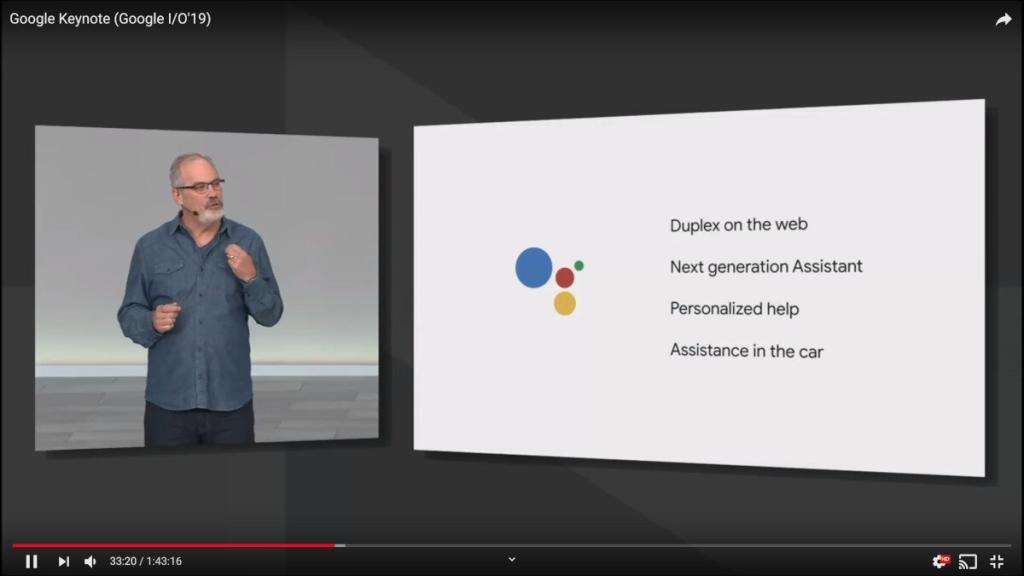

Google assistant had its share of the keynote with 4 main announcements:

Duplex on the web is a smarter auto fill feature for web forms.

Next generation Assistant is faster and smarter than its predecessor. There were two main aspects of it that were really interesting to me:

- It is “10 times faster”, most probably due to speech to text on the phone which doesn’t necessitate the cloud for many tasks

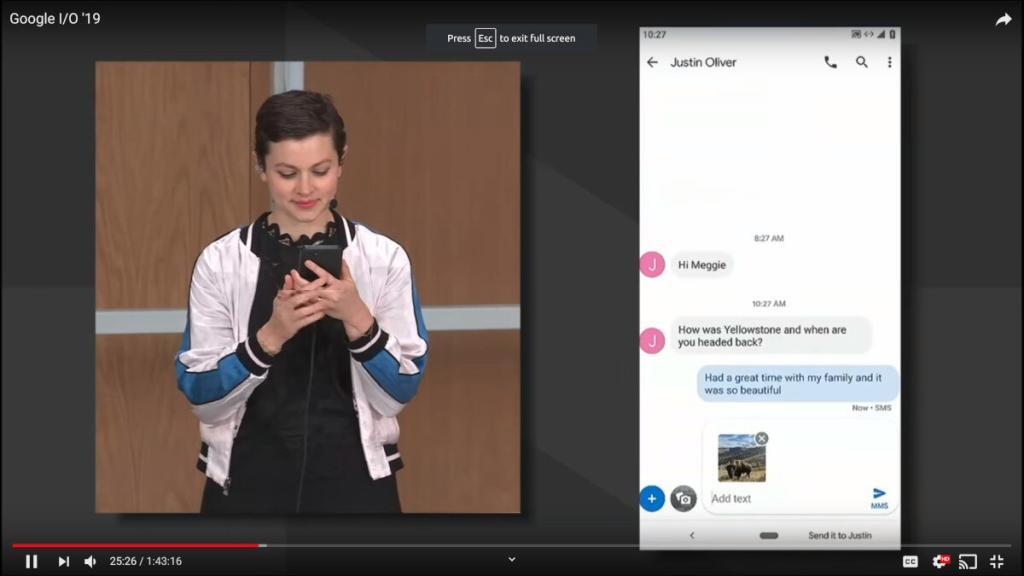

- It works across tabs and apps. A demo was shown, where a woman instructed the Assistant to search for a photo, picked one out and then asked the phone to send it in an ongoing chat conversation just by saying “send it to Justin”

Every year Google seems to be making Assistant more conversational, able to handle more intents and actions – and understand a lot more of the context necessary for complex tasks.

For Everyone

I’ve written about For Everyone earlier in this article.

I want to cover two more aspects of it: federated learning and Project Euphonia.

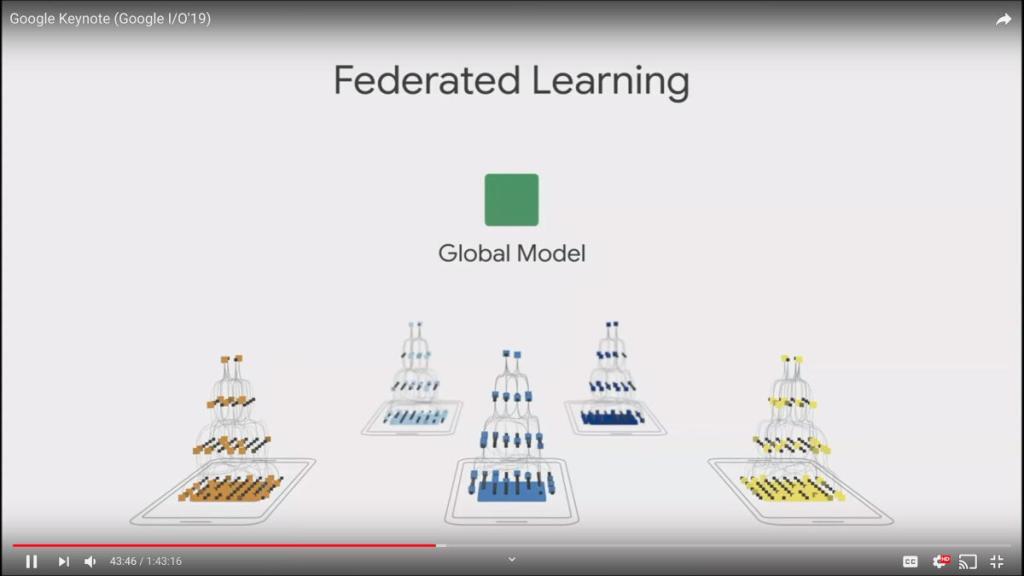

Federated Learning

Machine learning requires tons of data. The more data, the better the resulting model is at predicting new inputs. Google is often criticized for collecting that data, but it needs it not only for monetization but also, to a large extent, for improving its AI models.

Enter federated learning, a way to learn a bit at the edge of the network, directly inside the devices, and share what gets learned in a secure fashion with the central model that is being created in the cloud.
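To make the idea a bit more concrete, here is a toy sketch of one common formulation, federated averaging. It is not Google's production system: the one-parameter model, the made-up device data and the helper names (`local_update`, `federated_round`, `make_device`) are all assumptions for illustration, and the secure sharing step is left out entirely.

```python
import random


def local_update(weight, samples, learning_rate=0.1, epochs=5):
    """Run a few gradient-descent steps on one device's private data."""
    w = weight
    for _ in range(epochs):
        # Gradient of the mean squared error for the toy model y = w * x.
        grad = sum(2 * (w * x - y) * x for x, y in samples) / len(samples)
        w -= learning_rate * grad
    return w


def federated_round(global_weight, devices):
    """One round: each device trains locally, the server averages the results."""
    total = sum(len(samples) for samples in devices)
    # Only updated weights leave the devices here, never the raw samples.
    # Each device's result is weighted by how many samples it holds.
    return sum(
        local_update(global_weight, samples) * len(samples) / total
        for samples in devices
    )


def make_device(rng, n_samples, true_weight=3.0):
    """Hypothetical private dataset for one device: y = 3x plus small noise."""
    samples = []
    for _ in range(n_samples):
        x = rng.uniform(-1.0, 1.0)
        samples.append((x, true_weight * x + rng.gauss(0.0, 0.05)))
    return samples


rng = random.Random(0)
devices = [make_device(rng, n) for n in (20, 35, 50)]

global_weight = 0.0
for _ in range(50):
    global_weight = federated_round(global_weight, devices)

print(f"Learned weight: {global_weight:.2f}")
```

The central model ends up close to the pattern hidden in the devices' data without the server ever seeing a single raw sample. A real deployment would also need to protect the updates themselves, which this sketch does not attempt.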

This was so important for Google to show and explain that Sundar Pichai himself gave that spiel, instead of leaving it to the final part of the keynote where Google AI was discussed almost separately.

At Google, this feels like an initiative that is only getting started, with its first public implementation embedded in Google’s predictive keyboard on Android, where it helps that keyboard learn new words and trends.

Project Euphonia

Project Euphonia was also introduced here. This project is about enhancing speech recognition models towards hard to understand speech.

Here Google stressed the work and effort it is putting into collecting recorded phrases from people with such problems. The main issue here is the creation or improvement of a model more than anything else.

Android Q

Or Android 10 – pick your name for it.

This one was more than anything else a shopping list of features.

Statistics were given at the beginning:

- 2.5 billion active devices

- Over 180 device makers

Live captions was again explained and introduced, along with on-device learning capabilities. AI at its best baked into the OS itself.

For some reason, the Android Q segment wasn’t followed with the Pixel one but rather with the Nest one.

Nest (helpful home)

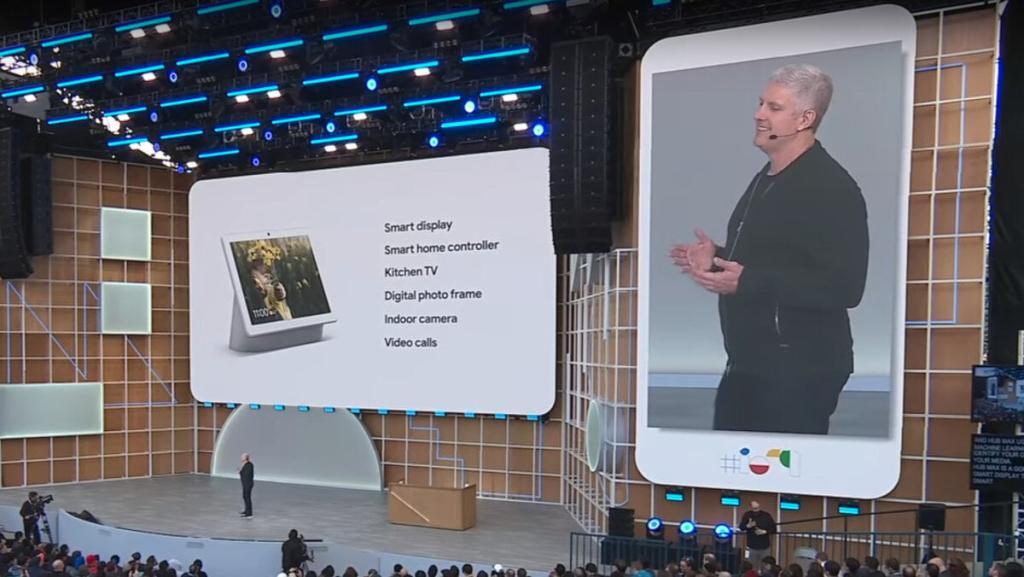

Google rebranded all of its smart home devices under Nest.

While at it, they decided to try and differentiate from the rest of the pack by coining their solution the “helpful home” as opposed to the “smart home”.

As with everything else, AI and the assistant took center stage, as well as a new device, the Nest Hub Max, which is Google’s answer to the Facebook Portal.

The solution for video calling on the Nest Hub Max was built around Google Duo (obviously), with a similar ability to auto zoom that Facebook Portal has, at least on paper – it wasn’t really demoed or showcased on stage.

The reason no demo was really given is that this device will ship “later this summer”, which means it wasn’t really ready for prime time – or Google just didn’t want to spend more precious minutes on it during the keynote.

Interestingly, Google Duo’s recent addition of group video calling wasn’t mentioned throughout the keynote at all.

Pixel (phone)

The Pixel section of the keynote showcased a new Pixel phone device, the Pixel 3a and 3a XL. This is a low cost device, which tries to make do with lower hardware spec by offering better software and AI capabilities. To drive that point home, Google had this slide to show:

Google is continuing with its investment in computational photography, and if the results are as good as this example, I am sold.

The other nice feature shown was call screening:

The neat thing is that your phone can act as your personal secretary, checking for you who’s calling and why, and also converse with the caller based on your instructions. This obviously makes use of the same innovations in Android around speech to text and smart reply.

My current phone is a Xiaomi Mi A1, an Android One device. My next one may well be the Pixel 3a – at $399, it will probably be the best phone on the market at that price point.

Google AI

The last section of the keynote was given by Jeff Dean, head of Google.ai. He was also the one closing the keynote, instead of handing this back to Sundar Pichai. I found that nuance interesting.

In his part he discussed the advancements in natural language understanding (NLU) at Google, the growth of TensorFlow, where Google is putting its efforts in healthcare (this time it was oncology and lung cancer), as well as the AI for Social Good initiative, where flood forecasting was explained.

That finishing touch of Google AI in the keynote, taking 16 full minutes (about 16% of the time), shows that Google was aiming to impress and to focus on the good they are doing in the world, trying to reduce the growing fear factor of their power and data collection capabilities.

It was impressive…

Next year?

More of the same is my guess.

Google will need to find some new innovation to build their event around. Speech to text on device is great, especially with the many use cases it enabled and the privacy angle to it. Not sure how they’d top that next year.

What’s certain is that AI and privacy will still be at the forefront for Google during 2019 and well into 2020.
