Discover effective strategies to optimize WebRTC and enhance the quality of your video and audio streaming services.

In my update to the Video API report this time, I had the chance to review what the vendors have done in the last 12 months or so. Some added new features and capabilities. Others not so much. Many were improving and optimizing their offering – better background replacement, fewer peer connections, more users in a single call, additional devices, …

WebRTC is a marathon and not a sprint. You can’t just write it once and forget about it. You need to work at it. Day in, day out. Improving and optimizing your application.

Some of these optimizations revolve around WebRTC performance. Here are 8 places to check the next time you need to optimize your WebRTC application’s performance:

Table of contents

- The video version

- 1. Send and receive fewer bytes

- 2. Use better video codecs

- 3. Don’t send all audio streams all the time

- 4. Use simulcast and SVC only when needed

- 5. Treat different configurations differently

- 6. Have more media servers

- 7. Allocate users to closer servers

- 8. Collect, measure and monitor your metrics

- Final thoughts on optimizing WebRTC performance

The video version

If you’re more of a visual person, then here are the tips, all wrapped up in a video:

Just note that the video might differ slightly from the text, with different examples 💡

1. Send and receive fewer bytes

Here’s a shocker – if you send and receive fewer bytes (especially of the video kind), you are going to get higher performance. Your device will use less network bandwidth and CPU (which will make it perform better). The media servers will have less data to route through them.

I know that what we want at the end of the day is the best possible 4K resolution at 60fps, crisp and sharp. And that’s before you start dreaming of doing VR or 8K.

But here’s the thing – do you really need 4K or even full HD on a smartphone with a 5” or 6” display? Is that 4K from the webcam useful when you’re also sharing your display at the same time and the other participant cares about your display and not your looks?

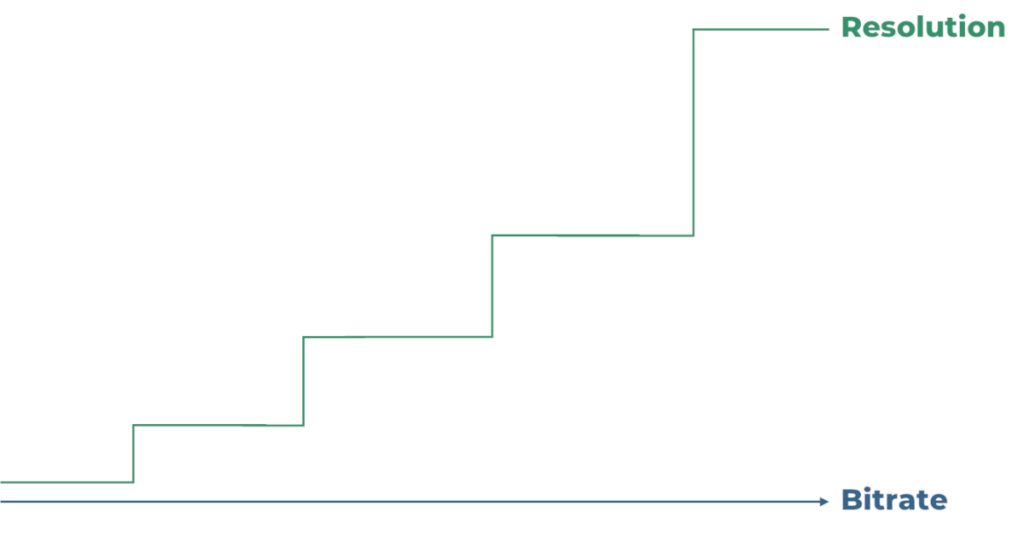

👉 Why did I switch here from bytes to resolution? Because the higher the bitrate (= bytes), the higher the resolution I can compress at reasonable quality.

We call this the resolution ladder – for a given bitrate, we match a suitable resolution, and we go up or down the ladder based on how much bitrate we have. The numbers vary by video codec, frame rate and type of content, and depend on whether you’re going up or down the ladder, but that’s for another time.

Oh, and you don’t control where the rungs on the ladder are – that’s a decision left to the browser to make 🤷♂️
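To make the ladder idea concrete, here’s a minimal TypeScript sketch. The rungs and their bitrate thresholds (`HYPOTHETICAL_LADDER`) are made-up numbers for illustration, and `pickRung()` is a hypothetical helper: as said, the browser decides where the real rungs are. It also skips the hysteresis you’d want between going up and going down the ladder.

```typescript
// One rung of an illustrative resolution ladder.
interface Rung {
  minKbps: number; // lowest bitrate (kbps) at which this rung is used
  width: number;
  height: number;
}

// HYPOTHETICAL rungs, ordered from highest to lowest resolution.
// Real browsers choose their own rungs per codec, frame rate and content.
const HYPOTHETICAL_LADDER: Rung[] = [
  { minKbps: 2500, width: 1920, height: 1080 },
  { minKbps: 1200, width: 1280, height: 720 },
  { minKbps: 500, width: 640, height: 360 },
  { minKbps: 150, width: 320, height: 180 },
];

// Return the highest rung the available bitrate can sustain,
// falling back to the lowest rung when bitrate is below every threshold.
function pickRung(availableKbps: number, ladder: Rung[] = HYPOTHETICAL_LADDER): Rung {
  for (const rung of ladder) {
    if (availableKbps >= rung.minKbps) {
      return rung;
    }
  }
  return ladder[ladder.length - 1];
}

console.log(pickRung(1500)); // the 1280x720 rung
console.log(pickRung(100)); // the 320x180 rung (lowest)
```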

–

So… first things first.

Go count your pixels. Check your bitrate. See if it is optimal for your use case. Ask yourself whether, where and how you can reduce that bitrate, on either the incoming or the outgoing streams. To keep it simple, start by focusing on resolution and frame rate, and work your way from there toward bitrate and bytes.
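A minimal sketch of counting your pixels, assuming you know both the resolution you send and the size at which the receiver renders it (say, the dimensions of the video tile on screen). `scaleDownFactor()` and `bitsPerPixel()` are hypothetical helpers, and the sizes and bitrate are example numbers, not recommendations.

```typescript
interface Size {
  width: number;
  height: number;
}

// How much the sender could scale down without sending more pixels than the
// receiver renders. Uses the smaller of the two ratios so the scaled video still
// covers the rendered area in both dimensions, and never goes below 1 (no upscaling).
// The value has the same meaning as scaleResolutionDownBy in RTCRtpEncodingParameters.
function scaleDownFactor(sent: Size, rendered: Size): number {
  const byWidth = sent.width / rendered.width;
  const byHeight = sent.height / rendered.height;
  return Math.max(1, Math.min(byWidth, byHeight));
}

// Bits spent per pixel per frame: bitrate / (width * height * fps).
// Handy for comparing streams of different sizes at a glance.
function bitsPerPixel(bitrateBps: number, size: Size, fps: number): number {
  return bitrateBps / (size.width * size.height * fps);
}

// Example numbers: a 1080p camera shown in a small 480x270 tile.
const captured: Size = { width: 1920, height: 1080 };
const tile: Size = { width: 480, height: 270 };

console.log(scaleDownFactor(captured, tile)); // 4
console.log(bitsPerPixel(2500000, captured, 30).toFixed(3)); // 0.040
```

In a browser, a factor like this could be applied on the sending side by reading `RTCRtpSender.getParameters()`, setting `scaleResolutionDownBy` on the encoding and calling `setParameters()`. Whether that pays off depends on how often the rendered size changes in your UI.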

2. Use better video codecs

Did I mention that video codecs affect bitrate and quality?

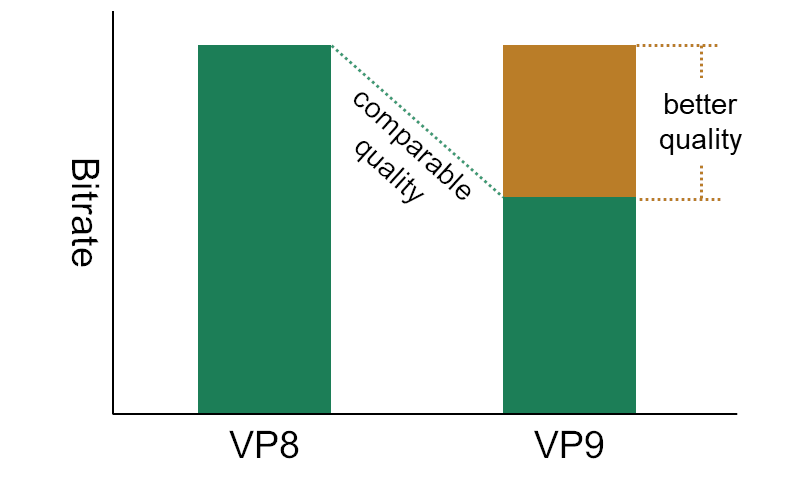

For the same bitrate budget, the quality you get will be something like this for each video codec:

VP8 < VP9 < AV1

AV1 will give better quality than VP9 which in turn offers better quality than VP8 (for the same bitrate).

So yes. Picking a newer video codec can mean lower bitrate. But it also means higher CPU and memory use. This makes the decision non-trivial…

When you pick a better video codec, there’s another decision to be made – will you use the compression gain to improve quality at the same bitrate, or will you reduce the bitrate and maintain the same level of quality?

And this isn’t the only question to deal with in a multi-codec environment. You need to pick the video codec that suits the specific scenario you’re in:

- AV1 is a great codec to use today. But not on older devices. And not when the resolution and bitrate might be too high

- AV1 is also great for text in screen sharing (text legibility, even at low bitrates, is way better than with the other alternatives)

- H.264 can be a great codec on the right devices – it comes with hardware acceleration in many cases, which means lower CPU use and having mobile handsets that don’t warm up on long video calls

- VP8 is rock solid, available everywhere

- HEVC is an Apple thing for Apple devices that might or might not be available

- VP9 today is a kind of a transition point between VP8 and AV1

Which. One. Do. You. Use?

It depends.

And we will leave it at that. Just know that optimizing WebRTC for performance means figuring out which video codec to use in which scenario.
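If you do end up with per-scenario rules, one way to express them is sketched below. The rules in `preferredMimeType()` and the `Scenario` fields are hypothetical and only loosely mirror the list above; `reorderCodecs()` moves the chosen codec to the front of a list shaped like the entries returned by `RTCRtpSender.getCapabilities("video").codecs`.

```typescript
// Minimal shape of an entry from RTCRtpSender.getCapabilities("video").codecs.
interface CodecCapability {
  mimeType: string; // e.g. "video/AV1"
  clockRate: number;
  sdpFmtpLine?: string;
}

// Hypothetical description of the scenario a client is in.
interface Scenario {
  screenShare: boolean;
  olderDevice: boolean;
  highResolution: boolean;
  h264HardwareAcceleration: boolean;
}

// HYPOTHETICAL rules, loosely following the list above.
function preferredMimeType(s: Scenario): string {
  if (s.olderDevice) {
    // AV1 is too heavy here: hardware H.264 if available, else VP8.
    return s.h264HardwareAcceleration ? "video/H264" : "video/VP8";
  }
  if (s.screenShare) {
    return "video/AV1"; // text legibility, even at low bitrates
  }
  if (s.highResolution) {
    // Avoid AV1 at high resolutions and bitrates.
    return s.h264HardwareAcceleration ? "video/H264" : "video/VP9";
  }
  return "video/AV1";
}

// Move the entries of the preferred codec to the front, keeping everything else
// in its original order so negotiation can still fall back to other codecs.
function reorderCodecs(codecs: CodecCapability[], mimeType: string): CodecCapability[] {
  const wanted = mimeType.toLowerCase();
  const preferred = codecs.filter((c) => c.mimeType.toLowerCase() === wanted);
  const others = codecs.filter((c) => c.mimeType.toLowerCase() !== wanted);
  return preferred.concat(others);
}
```

In a browser, the reordered list would be passed to `RTCRtpTransceiver.setCodecPreferences()` before creating the offer. If the preferred codec isn’t in the list, the order stays unchanged, so negotiation falls back to whatever else is supported.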

3. Don’t send all audio streams all the time

During Covid, I had a customer asking to be able to recreate the experience of a stadium full of people. Hearing the people around you and the crowd cheering together when a goal is scored.

The problem, besides the CPU and/or network required to make that happen, was that the WebRTC implementation from Google at the time (that’s libWebRTC) wasn’t fond of mixing too many audio sources. It simply took the 3 incoming streams with the loudest audio and mixed them – ignoring all others.

The good thing about it? It reduced CPU load. And frankly, if you have more than 3 people speaking in a meeting, you have bigger issues than the WebRTC implementation – likely something you’ll need to settle between the people speaking anyway.

A year or two ago, Google decided to remove that optimization. libWebRTC will now mix all incoming audio streams thrown at it. Theoretically, you can now give that stadium audience the vocal experience of everyone cheering. In reality? Your users might end up with CPUs that warm up a lot more due to the extra mixing effort.

What should you do?

“3 loudest” approach to audio mixing

Decide on the maximum number of audio streams you wish to mix. If you aren’t sure – just pick the magic number 3.

👉 3 was the magic number libWebRTC used for over a decade. Now there’s no limit in libWebRTC. But… Google Meet still sticks with 3 as its magic number.

Now that you have that number, make sure your SFU never sends more than the 3 loudest streams toward the listeners. What do you do with the rest? Replace their media with DTX or just don’t send them… up to you and your architecture.

That will improve your session’s scale and optimize WebRTC performance for both network and CPU.
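Here’s a minimal sketch of that selection on the SFU side, assuming each incoming audio packet carries the audio level header extension from RFC 6464 (values 0 to 127 in -dBov, where 0 is loudest and 127 is silence). `AudioStream`, `updateLoudness()`, `selectForwardedStreams()` and the `SMOOTHING` weight are hypothetical names and values. The smoothing keeps a single loud packet from flipping the selection back and forth; averaging dB values directly is a simplification.

```typescript
// Per-stream state an SFU could keep for each incoming audio stream.
interface AudioStream {
  id: string;
  smoothedLoudness: number; // higher = louder, range 0..127
}

// HYPOTHETICAL weight of the newest sample in the moving average.
const SMOOTHING = 0.2;

// levelDbov comes from the RFC 6464 audio level header extension:
// 0 means 0 dBov (loudest), 127 means -127 dBov (silence).
// Flip it so higher means louder, then blend it into an exponential moving average.
function updateLoudness(stream: AudioStream, levelDbov: number): void {
  const loudness = 127 - levelDbov;
  stream.smoothedLoudness = SMOOTHING * loudness + (1 - SMOOTHING) * stream.smoothedLoudness;
}

// IDs of the N loudest streams to forward to one listener, never including the
// listener's own stream. Streams that don't make the cut are not forwarded.
function selectForwardedStreams(streams: AudioStream[], listenerId: string, maxStreams = 3): string[] {
  return streams
    .filter((s) => s.id !== listenerId) // filter() copies, so sort() won't reorder the input
    .sort((a, b) => b.smoothedLoudness - a.smoothedLoudness)
    .slice(0, maxStreams)
    .map((s) => s.id);
}
```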

4. Use simulcast and SVC only when needed

Simulcast is great! SVC? Even better!

But not every problem is a nail with that hammer you call simulcast (or SVC for that matter).

Let’s take simulcast as an example. We use it to generate multiple video streams at various bitrates so that a group meeting can deal with users on different networks and devices. It improves the average user experience of the meeting for its participants.

But… done in a 1:1 meeting, it is just wasteful.

The sender here is sending too many streams, causing it to waste precious CPU and network resources instead of using the same resources to improve the quality of that meeting with a single video stream.

You need to figure out when to use and when not to use these features…
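A minimal sketch of such a decision, assuming a hypothetical policy of a single stream for 1:1 calls and three simulcast layers beyond that. `sendEncodingsFor()` is a hypothetical helper; the layer bitrates and `rid` values are illustrative, and the returned objects use fields of `RTCRtpEncodingParameters`.

```typescript
// The subset of RTCRtpEncodingParameters fields used here.
interface SendEncoding {
  rid?: string;
  scaleResolutionDownBy: number;
  maxBitrate: number; // bits per second
}

// HYPOTHETICAL policy: one full-quality stream for 1:1 calls, where simulcast
// only wastes the sender's CPU and uplink; three layers for group calls.
function sendEncodingsFor(participantCount: number): SendEncoding[] {
  if (participantCount <= 2) {
    return [{ scaleResolutionDownBy: 1, maxBitrate: 2500000 }];
  }
  return [
    { rid: "q", scaleResolutionDownBy: 4, maxBitrate: 150000 },
    { rid: "h", scaleResolutionDownBy: 2, maxBitrate: 500000 },
    { rid: "f", scaleResolutionDownBy: 1, maxBitrate: 1500000 },
  ];
}
```

The result could be passed as `sendEncodings` to `RTCPeerConnection.addTransceiver()`. Keep in mind that the number of encodings is fixed once negotiated and can’t be changed through `setParameters()`, so switching mid-call usually means either renegotiating, or negotiating the layers up front and toggling each encoding’s `active` flag.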

5. Treat different configurations differently

That example around simulcast above? Let’s generalize it a bit, shall we?

Your application will have different configurations for its WebRTC operation. It might be due to the number of users, their locations, the devices used, their network quality or even what it is that they are doing in the meeting itself.

Take all of these different permutations; let’s call them configurations. Now, for each one, figure out the best approach to optimizing the performance of your WebRTC stack.

Is it worth the effort to optimize in such a way?

Does this configuration happen often enough? To important users/customers?

How complex is it to implement that kind of optimization?

What about switching from one configuration to another – can you smoothly turn on and off the various optimizations you have in place?
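One way to keep this manageable is to derive the optimizations from a description of the current configuration, so that switching becomes recomputing and applying only the difference. Everything below (`SessionContext`, `Optimizations`, `optimizationsFor()`, `changedKeys()` and the thresholds) is a hypothetical sketch, not a recommendation of specific values.

```typescript
// Hypothetical description of a session's current configuration.
interface SessionContext {
  participants: number;
  screenSharing: boolean;
  mobile: boolean; // local device is a phone or tablet
  poorNetwork: boolean; // e.g. derived from measured loss and RTT
}

// Hypothetical knobs the application can turn on and off.
interface Optimizations {
  simulcast: boolean;
  maxAudioStreams: number;
  maxCameraHeight: number; // pixels
  preferHardwareCodec: boolean;
}

// A pure function of the context: call it whenever the context changes.
function optimizationsFor(ctx: SessionContext): Optimizations {
  return {
    simulcast: ctx.participants > 2,
    maxAudioStreams: Math.min(3, Math.max(1, ctx.participants - 1)),
    maxCameraHeight: ctx.screenSharing || ctx.poorNetwork ? 360 : ctx.mobile ? 540 : 720,
    preferHardwareCodec: ctx.mobile,
  };
}

// Which knobs differ between two configurations, so a switch only touches
// what actually needs to change.
function changedKeys(prev: Optimizations, next: Optimizations): (keyof Optimizations)[] {
  const keys: (keyof Optimizations)[] = ["simulcast", "maxAudioStreams", "maxCameraHeight", "preferHardwareCodec"];
  return keys.filter((k) => prev[k] !== next[k]);
}
```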

This is important. Go do the work.

6. Have more media servers

If you want to optimize a WebRTC application for performance, you might as well throw more media servers at the problem.

Throwing more hardware is great, but the point I want to make here is that these servers need to be CLOSER to the users.

Got all your media servers in a single data center in US East? You need to add another region.

Covered the US and Europe? Time to add Asia.

Etc.

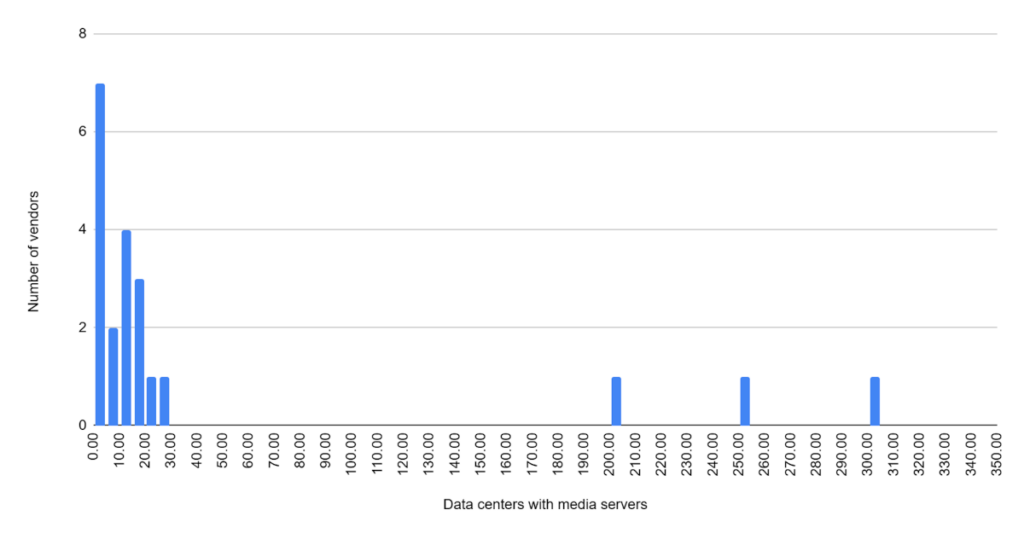

In my Video API report, there’s the whole gamut of deployments:

Everything from a single region, single continent to over 200 regions. And it seems that you’re either happy with 10-30 or you strive for 200+ regions.

Check where your users are from. Populate the data centers around them with your media servers.

Oh – and you don’t really need to overdo it. Many of the bigger vendors (who have high media quality) make do with fewer than 20 regions.
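To check where your users are versus where your servers are, a rough first pass can use geographic distance as a stand-in for latency. This sketch assumes you have approximate user coordinates (for example, from GeoIP lookups) and a list of server locations; `coverageGaps()` and any distance threshold you pass it are hypothetical. Real network paths don’t follow great circles, so treat the result as a hint of where to add a region, not as a latency measurement.

```typescript
interface GeoPoint {
  lat: number; // degrees
  lon: number; // degrees
}

interface User extends GeoPoint {
  country: string;
}

const EARTH_RADIUS_KM = 6371;

function toRad(deg: number): number {
  return (deg * Math.PI) / 180;
}

// Great-circle distance between two points (haversine formula), in kilometers.
function haversineKm(a: GeoPoint, b: GeoPoint): number {
  const dLat = toRad(b.lat - a.lat);
  const dLon = toRad(b.lon - a.lon);
  const h =
    Math.sin(dLat / 2) ** 2 + Math.cos(toRad(a.lat)) * Math.cos(toRad(b.lat)) * Math.sin(dLon / 2) ** 2;
  return 2 * EARTH_RADIUS_KM * Math.asin(Math.sqrt(h));
}

// Count, per country, the users whose nearest media server is farther than maxKm.
// Countries with the highest counts are candidates for a new region.
function coverageGaps(users: User[], servers: GeoPoint[], maxKm: number): Record<string, number> {
  const gaps: Record<string, number> = {};
  for (const u of users) {
    const nearestKm = Math.min(...servers.map((s) => haversineKm(u, s)));
    if (nearestKm > maxKm) {
      gaps[u.country] = (gaps[u.country] || 0) + 1;
    }
  }
  return gaps;
}
```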

7. Allocate users to closer servers

Got your servers sprinkled all over the globe? Great!

Now where do you end up connecting your users? To which location?

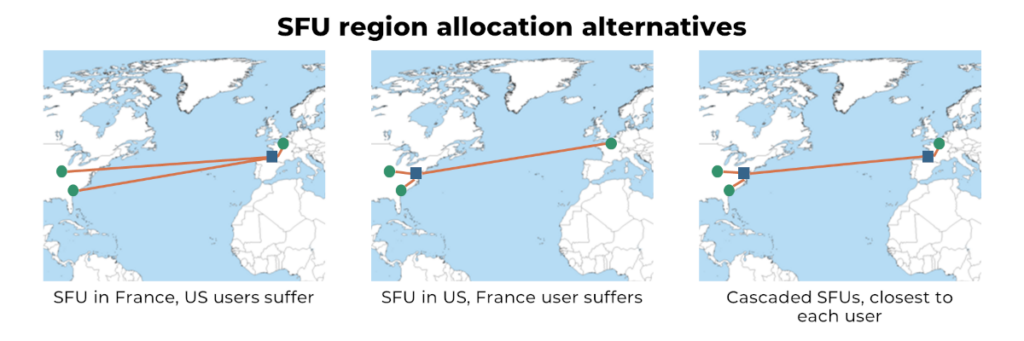

Say there’s a meeting between 2 people in the US and 1 in France. In which region should the media servers handling this headache of a meeting be?

- If it is in France… then the two in the US are going to have a poor experience. Especially when they talk to one another in the meeting (their media flows over the Atlantic Ocean for no good reason)

- If it is in the US… well… that guy in France might suffer from a poor connection over that same ocean and end up with more packet losses and latency than you wish for

- You could cascade this and have multiple media servers in multiple regions handle the session. But that takes effort. Make it happen

The point I am trying to make? Media server allocation for group meetings isn’t trivial. Take your time figuring it out and implementing it properly.
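A minimal sketch of two of the options above, assuming you measure each participant’s round-trip time to every candidate region before placing the meeting (the `RttTable` input is hypothetical). `bestSingleRegion()` picks the one region that minimizes the worst participant-to-participant path through the server, approximated as the sum of both participants’ RTTs to that region, which captures the “two US users talking over the Atlantic” problem. `cascadeAssignment()` instead connects everyone to their own closest region and assumes those regions are then cascaded server to server.

```typescript
// rttMs[participant][region] = measured round-trip time in milliseconds.
type RttTable = Record<string, Record<string, number>>;

// Single region: minimize the worst path between any two participants.
function bestSingleRegion(rttMs: RttTable, regions: string[]): string {
  const participants = Object.keys(rttMs);
  let best = regions[0];
  let bestWorstPath = Infinity;
  for (const region of regions) {
    let worstPath = 0;
    for (let i = 0; i < participants.length; i++) {
      for (let j = i + 1; j < participants.length; j++) {
        // Media from i to j goes i -> server -> j.
        const path = rttMs[participants[i]][region] + rttMs[participants[j]][region];
        worstPath = Math.max(worstPath, path);
      }
    }
    if (worstPath < bestWorstPath) {
      bestWorstPath = worstPath;
      best = region;
    }
  }
  return best;
}

// Cascading: each participant joins their own closest region.
function cascadeAssignment(rttMs: RttTable, regions: string[]): Record<string, string> {
  const assignment: Record<string, string> = {};
  for (const participant of Object.keys(rttMs)) {
    let bestRegion = regions[0];
    for (const region of regions) {
      if (rttMs[participant][region] < rttMs[participant][bestRegion]) {
        bestRegion = region;
      }
    }
    assignment[participant] = bestRegion;
  }
  return assignment;
}
```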

8. Collect, measure and monitor your metrics

If you don’t know what’s wrong and why, there’s no way you’re going to be able to fix things. Or improve. Or optimize.

I started off by saying that WebRTC is a marathon and not a sprint. When it comes to optimizing WebRTC performance, it means that you need to improve your application over time.

❓ Where and what to improve?

❓ What gives the highest ROI for your effort?

❓ Did your changes make a dent and actually improve things?

Answering these questions requires you to collect metrics, then measure and analyze the data. And to monitor it continuously.

Make that a top priority for you.

Why?

Because the time will come when you will have users complaining. I’ve seen it happen multiple times with the companies I help.

Starting to put these monitoring tools in place at that point means you’re working under the urgency of churning customers, which isn’t fun.

Start earlier than that.
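Collecting starts with the data WebRTC already gives you. This sketch assumes you poll `RTCPeerConnection.getStats()` periodically and keep the `inbound-rtp` entries; it computes bitrate and packet loss over the interval between two snapshots. The `InboundRtpSnapshot` shape uses real field names from those stats (`timestamp`, `packetsReceived`, `packetsLost`, `bytesReceived`), while `intervalMetrics()` is a hypothetical helper. Where you store, aggregate and alert on these numbers is up to your backend.

```typescript
// Subset of the fields of an "inbound-rtp" entry from RTCPeerConnection.getStats().
interface InboundRtpSnapshot {
  timestamp: number; // milliseconds
  packetsReceived: number; // cumulative
  packetsLost: number; // cumulative; duplicates can make it go down slightly
  bytesReceived: number; // cumulative
}

interface IntervalMetrics {
  bitrateKbps: number;
  lossPercent: number;
}

// Turn two cumulative snapshots of the same stream into per-interval metrics.
function intervalMetrics(prev: InboundRtpSnapshot, curr: InboundRtpSnapshot): IntervalMetrics {
  const seconds = (curr.timestamp - prev.timestamp) / 1000;
  const bytes = curr.bytesReceived - prev.bytesReceived;
  const received = curr.packetsReceived - prev.packetsReceived;
  const lost = Math.max(0, curr.packetsLost - prev.packetsLost); // clamp duplicate effects
  const expected = received + lost;
  return {
    bitrateKbps: seconds > 0 ? (bytes * 8) / 1000 / seconds : 0,
    lossPercent: expected > 0 ? (100 * lost) / expected : 0,
  };
}
```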

Final thoughts on optimizing WebRTC performance

This is what came off the top of my head the other day about optimizing WebRTC performance. There are likely at least 8 more ways to do it – all of them important and useful.

Don’t neglect this part in your WebRTC application development planning.

Optimizing a WebRTC application is great. But what about successfully launching it?

Check out my 3-step WebRTC launch action plan – a free resource that will show you what I do with every consulting project that deals with launching WebRTC applications.
