Both.

VP8 and VP9 are video codecs developed and pushed by Google. Until recently, we had only VP8 in Chrome’s WebRTC implementation; now we have both VP8 and VP9. This has led me to several interesting conversations with customers about if and when to adopt VP9 – or whether they should use H.264 instead (but that’s a story for another post). More recently, we’ve also seen discussions around AV1 vs HEVC in WebRTC.

This whole VP8 vs VP9 topic is usually misunderstood, so let me try to put some order in things.

First things first:

- VP8 is currently the default video codec in WebRTC. Without checking, it is probably safe to say that 90% or more of all WebRTC video sessions use VP8

- VP9 is officially and publicly available from Chrome 49 or so (give or take a version). But it isn’t the default codec in WebRTC. Yet

- VP8 is on par with H.264

- VP9 is better than VP8 when it comes to resultant quality of the compressed video

- VP8 takes up fewer resources (=CPU) than VP9 to compress video

With that in mind, the following can be deduced:

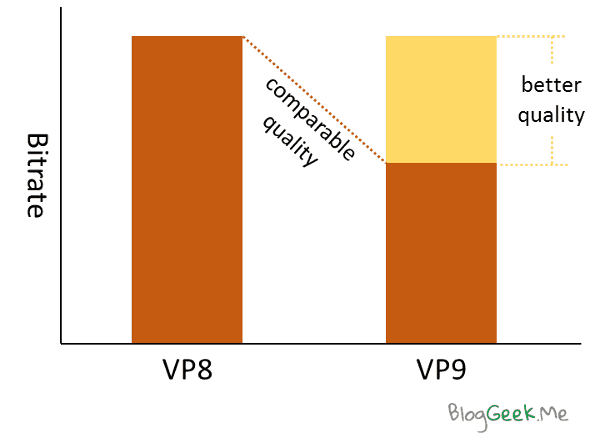

You can use the migration to VP9 for one of two things (or both):

- Improve the quality of your video experience

- Reduce the bitrate required

Let’s check these two alternatives then.

1. Improve the quality of your video experience

If you are happy with the amount of bandwidth your service requires, you can keep using the same amount of bandwidth. Since you are now using VP9 and not VP8, the quality of the video will be better.

When is this useful?

- When the bandwidth available to your users is limited. Think 500 kbps or less – cellular and congested networks come to mind here

- When you plan on supporting higher resolutions/better cameras etc.

2. Reduce the bitrate required

The other option is to switch to VP9 and strive to stay with the same quality you had with VP8. Since VP9 is more efficient, it will be able to maintain the same quality using less bitrate.

When is this useful?

- When you want to go “down market” to areas where bandwidth is limited. Think of a service built for developed countries expanding into developing countries

- When you want to serve enterprises, who need to conduct multiple parallel video conferences from the same facility (bandwidth towards the internet becomes rather scarce in such a use case)
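To make the enterprise case a bit more concrete, here is a back-of-the-envelope sketch in TypeScript. The 20 Mbps office link, the half of it reserved for everyone else, the 1 Mbps per call and the 30% VP9 saving are all made-up numbers for illustration, not measurements. The function names are hypothetical as well.

```typescript
// Illustrative arithmetic only: every number below is an assumption, not a measurement.

/** How many simultaneous video calls fit in a link, after reserving a share for other traffic. */
function callsThatFit(linkKbps: number, perCallKbps: number, reservedPercent = 0): number {
  if (linkKbps <= 0 || perCallKbps <= 0 || reservedPercent < 0 || reservedPercent >= 100) {
    throw new RangeError("bitrates must be positive and reservedPercent must be in [0, 100)");
  }
  const usableKbps = (linkKbps * (100 - reservedPercent)) / 100;
  return Math.floor(usableKbps / perCallKbps);
}

/** Per-call bitrate after switching to a codec that saves `savingPercent` at the same quality. */
function bitrateAfterSaving(perCallKbps: number, savingPercent: number): number {
  return (perCallKbps * (100 - savingPercent)) / 100;
}

const officeLinkKbps = 20000;        // hypothetical 20 Mbps office connection
const reservedForOthersPercent = 50; // hypothetical share kept for non-video traffic
const vp8CallKbps = 1000;            // assumed 1 Mbps per VGA call
const assumedVp9SavingPercent = 30;  // hypothetical saving, purely for illustration

const vp8Calls = callsThatFit(officeLinkKbps, vp8CallKbps, reservedForOthersPercent);
const vp9Calls = callsThatFit(
  officeLinkKbps,
  bitrateAfterSaving(vp8CallKbps, assumedVp9SavingPercent),
  reservedForOthersPercent,
);

console.log({ vp8Calls, vp9Calls }); // 10 calls with VP8, 14 with VP9 under these assumptions
```

Plug in your own link size and whatever saving you actually measure for your content; the point is only that a lower per-call bitrate translates directly into more parallel sessions on the same pipe.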

How is bitrate/quality handled in WebRTC by default?

There is something that is often missed. I used to know it about a decade ago and then forgot until recently, when I did the comparison between VP8 and VP9 in WebRTC on the network.

The standard practice in enterprise video conferencing is to never use more than you need. If you are trying to send a VGA resolution video, any reputable video conferencing system will not take more than 1 Mbps of bitrate – and I am being rather generous. The reason for that stems from the target market and timing.

Enterprise video conferencing has been with us for around two decades. When it started, a 1 Mbps connection was but a dream for most. Companies that purchased video conferencing equipment needed (as they do today) to support multiple video conferencing sessions happening in parallel between their facilities AND maintain reasonable internet connection service for everyone in the office at the same time. It was common practice, for example, to throttle the internet connection for everyone in the company during the quarterly analyst call – to make sure bandwidth was properly allocated for that one video call.

Even today, most enterprise video conferencing services with legacy in their veins will limit the bitrate that WebRTC takes up in the browser – just because.

WebRTC was developed with internet thinking. And there, you take what you are given. This is why WebRTC deals less with maximum bandwidth and more with available bandwidth. You’ll see it using VP8 with Chrome – it will take up 1.77 Mbps (!) when the camera source is VGA.

This difference means that without any interference on your part, WebRTC will lean towards improving the quality of your video experience when you switch to VP9.

One thing to note here – this all changes with backend media processing, where more often than not, you’ll be more sensitive to bandwidth and might work towards limiting its amount on a per session basis anyway.
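If you do want to put a ceiling on what WebRTC takes, one way to sketch it is through the sender's encoding parameters. In the browser, `RTCRtpSender.getParameters()` returns an object with an `encodings` array, each entry accepts a `maxBitrate` in bits per second, and `setParameters()` applies the change. The `EncodingLike`, `SendParametersLike` and `SenderLike` shapes and both helper functions below are my own hypothetical stand-ins so that the snippet runs outside a browser; treat it as a sketch, not the one right way to do it.

```typescript
// Local stand-ins for the parts of the browser's sender parameters used here.
interface EncodingLike {
  maxBitrate?: number; // bits per second
}

interface SendParametersLike {
  encodings: EncodingLike[];
}

interface SenderLike {
  getParameters(): SendParametersLike;
  setParameters(params: SendParametersLike): Promise<unknown>;
}

/** Returns a copy of `params` with every encoding capped at `maxBitrateBps`. */
function withMaxBitrate<T extends SendParametersLike>(params: T, maxBitrateBps: number): T {
  if (!Number.isFinite(maxBitrateBps) || maxBitrateBps <= 0) {
    throw new RangeError("maxBitrateBps must be a positive, finite number");
  }
  return {
    ...params, // keeps every other field (such as the transaction id) untouched
    encodings: params.encodings.map((encoding) => ({ ...encoding, maxBitrate: maxBitrateBps })),
  };
}

/** Reads the sender's current parameters and writes them back with the cap applied. */
async function capSenderBitrate(sender: SenderLike, maxBitrateBps: number): Promise<void> {
  await sender.setParameters(withMaxBitrate(sender.getParameters(), maxBitrateBps));
}

// In a browser this might be used roughly as follows (assuming `pc` is an RTCPeerConnection):
//   const sender = pc.getSenders().find((s) => s.track?.kind === "video");
//   if (sender) await capSenderBitrate(sender, 1_000_000); // cap at 1 Mbps
```

The cap is an upper bound only. Bandwidth estimation can still push the actual bitrate lower, and browsers differ in when `encodings` is populated, so check it against the browsers you care about.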

All Magic Comes with a Price

We haven’t even discussed SVC here and it all looks like pure magic. You switch from VP8 to VP9 and life is beautiful.

Well… like all magic, VP9 also comes with a price. For a start, VP9 isn’t yet as stable as VP8. And while this is definitely about to improve in the coming months, you should also consider the following challenges:

- If you thought VP8 is a resource hog, then expect VP9 to be a lot more voracious with its CPU requirements

- It isn’t yet available in hardware coding, so this is going to be challenging (VP8 usually isn’t either, but we’re coping with it)

- Mobile won’t be so welcoming to VP9 now I assume, but I might be mistaken

- Microsoft Edge won’t support it any time soon (assuming you care about this Edge case)

This is a price I am willing to pay at times – it all depends on the use case in question.
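For the use cases where the price is worth paying, remember that VP9 isn’t the default, so you need to ask for it. One way to sketch that is to reorder the codec list so VP9 comes first and hand it to `RTCRtpTransceiver.setCodecPreferences()`. The `CodecLike` shape and the `preferCodec` helper below are hypothetical names of mine, kept free of browser types so the snippet runs anywhere.

```typescript
// Local stand-in for the one field of a codec capability entry that this sketch needs.
interface CodecLike {
  mimeType: string; // for example "video/VP8" or "video/VP9"
}

/**
 * Stable reorder: entries matching `preferredMimeType` (case-insensitive) come first,
 * everything else keeps its original relative order. The input array is not modified.
 */
function preferCodec<T extends CodecLike>(codecs: readonly T[], preferredMimeType: string): T[] {
  const wanted = preferredMimeType.toLowerCase();
  const preferred = codecs.filter((codec) => codec.mimeType.toLowerCase() === wanted);
  const others = codecs.filter((codec) => codec.mimeType.toLowerCase() !== wanted);
  return [...preferred, ...others];
}

// In a browser this might be used roughly as follows (assuming `transceiver` is the
// video RTCRtpTransceiver and the call happens before the offer/answer is created):
//   const codecs = RTCRtpReceiver.getCapabilities("video")?.codecs ?? [];
//   transceiver.setCodecPreferences(preferCodec(codecs, "video/VP9"));
```

Keeping the other codecs in the list, rather than dropping them, means the session can still fall back to VP8 or H.264 when the other side doesn’t do VP9.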


Hey there,

VP8 hardware-accelerated encoding (8-bit) is present on Intel Skylake SKUs via VAAPI on Linux (Equivalent to Intel’s branded QuickSync on Windows).

Kabylake also supports VP9 (8 and 10-bit) hardware-accelerated encoding, and will soon be exposed via VAAPI to pipelines such as FFmpeg and libav. If you need to test that now, use gstreamer-vaapi’s package.

Dennis – thanks for the update. I wasn’t aware of these new developments.

Does having VP8 decoding capability in the GPU help with VP9 decoding?

Osman,

To tell you the truth, I don’t know…