WebAssembly in WebRTC will enable vendors to create differentiation in their products, probably favoring the more established, larger players.

At Kranky Geek two months ago, Google gave a presentation covering the overhaul of audio in Chrome as well as where WebRTC is headed next. That “what’s next” part was presented by Justin Uberti, creator and lead engineer for Google Duo and WebRTC.

The main theme Uberti used was the role of WebAssembly, and how deeper customizations of WebRTC are currently being thought of/planned for the next version of WebRTC (also known as WebRTC NV).

Before we dive into this and where my own opinions lie, let’s take a look at what WebAssembly is and what makes it important.

Looking to learn more about WebRTC? Start from understanding the server side aspects of it using my free mini video course.

What is WebAssembly?

Here’s what webassembly.org has to say about WebAssembly:

WebAssembly (abbreviated Wasm) is a binary instruction format for a stack-based virtual machine. Wasm is designed as a portable target for compilation of high-level languages like C/C++/Rust, enabling deployment on the web for client and server applications.

To me, WebAssembly is a JVM for your browser. Just as Java gets compiled into a binary code that is then interpreted and executed on a virtual machine, WebAssembly, or Wasm, allows developers to take hard core languages (which in practice means virtually any language) and “compile” them to a binary representation that a Wasm virtual machine can execute efficiently. And this Wasm virtual machine just happens to be available in all web browsers.
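To make the "stack-based virtual machine" idea a bit more concrete, here is a minimal sketch of a toy interpreter in TypeScript. It is not how a browser runs Wasm (real engines validate the module and compile it to native code). The `run` function and its argument layout are hypothetical names made up for illustration. The opcode values are the real WebAssembly ones for the handful of instructions used.

```typescript
// Real WebAssembly opcode values for the few instructions used here.
const LOCAL_GET = 0x20; // push a local (function argument) onto the stack
const I32_ADD = 0x6a;   // pop two values, push their 32-bit sum
const I32_SUB = 0x6b;   // pop two values, push their 32-bit difference
const END = 0x0b;       // end of the function body

// Toy interpreter (hypothetical, for illustration only): runs one function
// body against its arguments and returns the value left on the stack.
// Local indices are assumed to fit in a single byte.
function run(body: number[], locals: number[]): number {
  const stack: number[] = [];
  let pc = 0;
  while (pc < body.length) {
    const op = body[pc++];
    switch (op) {
      case LOCAL_GET:
        stack.push(locals[body[pc++]]);
        break;
      case I32_ADD: {
        const b = stack.pop()!;
        const a = stack.pop()!;
        stack.push((a + b) | 0); // wrap to a signed 32-bit integer
        break;
      }
      case I32_SUB: {
        const b = stack.pop()!;
        const a = stack.pop()!;
        stack.push((a - b) | 0);
        break;
      }
      case END:
        return stack.pop()!;
      default:
        throw new Error("unsupported opcode 0x" + op.toString(16));
    }
  }
  throw new Error("function body is missing the end opcode");
}

// (a + b) - c, roughly as a compiler might emit it for a stack machine.
const body = [LOCAL_GET, 0, LOCAL_GET, 1, I32_ADD, LOCAL_GET, 2, I32_SUB, END];
const result = run(body, [3, 4, 2]); // 5
```

The point is only that a compiler's output is a flat list of small instructions that push and pop values, which is what makes the format compact and portable across browsers.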

WebAssembly allows vendors to do some really cool things – things that just weren’t possible to do with JavaScript. JavaScript is kinda slow compared to using C/C++ and a lot of hard core stuff that’s already written in C/C++ can now be ported/migrated/compiled using WebAssembly and used inside a browser.

Here are a few interesting examples:

- Construct 3 decided to use Opus in browsers, even when it isn’t available, by implementing Opus with Wasm

- Zoom uses WebAssembly in order NOT to use WebRTC (probably because it doesn’t want to transcode at the edge of its network)

- Unity, the popular gaming engine, has adopted Wasm for its Unity WebGL target

What’s in WebRTC NV?

While the ink hasn’t dried yet on WebRTC 1.0 (I haven’t seen a press release announcing its final publication), discussions are taking place around what comes next. This is being captured in a W3C document called WebRTC Next Version Use Cases – WebRTC NV in short.

The current list of use cases includes:

- Multiparty voice and video communications for online gaming – mainly more control on how streams are created, consumed and controlled

- Improved support in mobile networks – the ability to manage and switch across network connections

- Better support for media servers

- New file sharing capabilities

- Internet of Things – giving some love, care and attention to the data channel

- Funny hats – enabling AI (computer vision) on video streams

- Machine learning – like funny hats, but a bit more generic in its nature and requirements

- Virtual reality – ability to synchronize audio/video with the data channel

While some of these requirements will end up being added as APIs and capabilities to WebRTC, a lot of them will end up enabling someone to control and interfere with how WebRTC works and behaves, which is where WebAssembly will find (and is already finding) a home in WebRTC.

Google’s example use case for WebAssembly in WebRTC

At the recent Kranky Geek event, Google shared with the audience their recent work in the audio pipeline for WebRTC in Chrome and the work ahead around WebRTC NV.

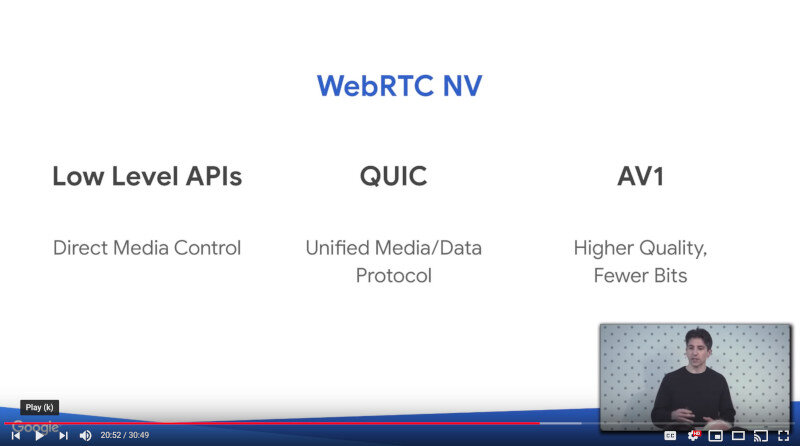

For Google, WebRTC NV means these areas:

The Low Level APIs area is about places where WebAssembly can be used.

You should watch the whole session, but here it is from the point where Justin Uberti starts talking about WebRTC NV, and mainly about WebAssembly in WebRTC:

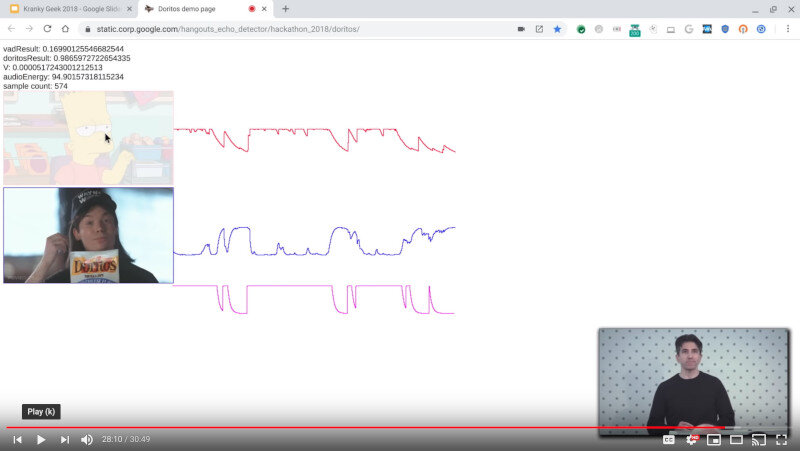

WebAssembly is a really powerful tool. To give a taste of it with WebRTC, Justin Uberti resorted to the domain of noise separation – distinguishing between speech and noise. To do that, he took RNNoise, a noise suppression algorithm based on machine learning, ported it to WebAssembly, and built a small online demo around it. The idea is that in a multiparty conference, the system won’t switch to the camera of a person unless they are really speaking – ignoring all other interfering noises (key strokes, falling pen, eating, moving furniture, etc).

Interestingly enough, the webpage hosting this demo is internal to Google and has a URL called hangouts_echo_detector/hackathon_2018/doritos – more on that later.
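The switching idea described above can be sketched in a few lines of TypeScript. This is not Google’s demo (which isn’t public). It is a minimal sketch that assumes something like a Wasm-compiled RNNoise hands the application a speech probability per participant for every audio frame. The names `FrameScores`, `ActiveSpeakerSwitcher`, `threshold` and `holdFrames` are all hypothetical.

```typescript
// Hypothetical per-frame input: participant id -> speech probability in [0, 1].
type FrameScores = Record<string, number>;

interface SwitcherOptions {
  threshold: number;  // minimum probability that counts as speech
  holdFrames: number; // consecutive speech frames required before switching
}

class ActiveSpeakerSwitcher {
  private active: string | null = null;
  private streaks: Record<string, number> = {};

  constructor(private readonly opts: SwitcherOptions) {}

  // Feed one frame of scores; returns the participant whose camera is shown.
  update(scores: FrameScores): string | null {
    let candidate: string | null = null;
    let best = 0;
    for (const id of Object.keys(scores)) {
      const p = scores[id];
      // Count consecutive frames above the threshold; any quiet frame resets it.
      const streak = p >= this.opts.threshold ? (this.streaks[id] ?? 0) + 1 : 0;
      this.streaks[id] = streak;
      if (streak >= this.opts.holdFrames && p > best) {
        best = p;
        candidate = id;
      }
    }
    // Keep the current speaker while they are still speaking, to avoid flapping.
    const activeStillSpeaking =
      this.active !== null && (this.streaks[this.active] ?? 0) > 0;
    if (candidate !== null && !activeStillSpeaking) {
      this.active = candidate;
    }
    return this.active;
  }
}

const switcher = new ActiveSpeakerSwitcher({ threshold: 0.6, holdFrames: 3 });
const frames: FrameScores[] = [
  { alice: 0.9, bob: 0.1 },
  { alice: 0.9, bob: 0.95 }, // bob: a single loud burst, not sustained speech
  { alice: 0.9, bob: 0.1 },
  { alice: 0.2, bob: 0.8 },
  { alice: 0.1, bob: 0.8 },
  { alice: 0.1, bob: 0.8 },
];
const shown = frames.map((f) => switcher.update(f));
// shown: [null, null, "alice", "alice", "alice", "bob"]
```

The one-frame burst from bob never triggers a switch. Only three consecutive speech frames do, and only once alice has gone quiet. The threshold and hold time here are arbitrary and would need tuning against real audio.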

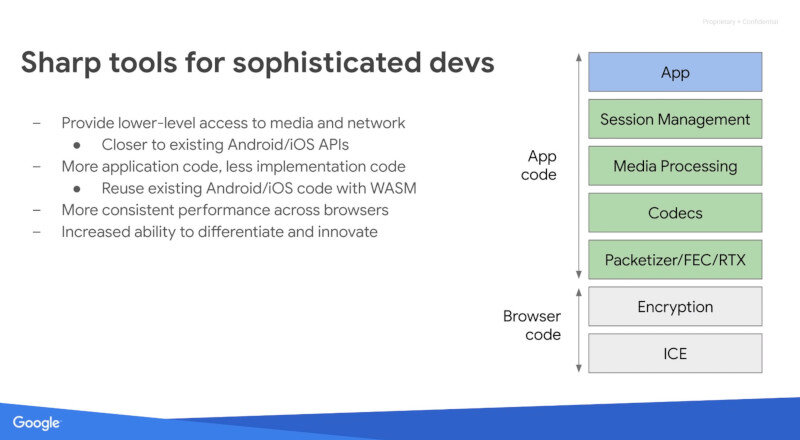

To explain the intent, Justin Uberti showed this slide:

As he said, the “stuff in green” (that’s Session Management, Media Processing, Codecs and Packetizer/FEC/RTX) can now be handled by the application instead of by WebRTC’s PeerConnection, which enables higher differentiation and innovation.

I am not sure if this should make us happier or more worried.

In favor of differentiation and innovation through WebAssembly in WebRTC

Savvy developers will LOVE WebAssembly in WebRTC. It allows them to:

- have way more control over the browser behavior with WebRTC

- add their own shtick

- do stuff they can’t do today – without waiting on Google and the other browser vendors

In 2018, I’ve seen a lot of companies using customized WebRTC implementations to solve problems that are very close to what WebRTC does, but with a difference. These mainly revolved around streaming and internet of things type of use cases, where people aren’t communicating with each other in the classic sense. If they had low level API access, they could use WebAssembly and run these same use cases in the browser instead of having to port, compile and run their own stand-alone applications.

This theoretically allows Zoom to use WebRTC and by using WebAssembly get it to play nice with its current Zoom infrastructure without the need to modify it. The result would give better user experience than the current Zoom implementation in the browser.

Enabling WebAssembly in WebRTC can increase the speed of innovation and spread it across a larger pool of talent and vendors.

In favor of a level playing field for WebRTC

The best part about WebRTC? Practically any developer can get a sample application up and running in no time compared to the alternatives. It reduced the barrier to entry for companies who wanted to use real time communications, democratizing the technology and making it accessible to all.

Since I am on a roll here – WebRTC did one more thing. It leveled the playing field for the players in this space.

Enabling something like WebAssembly in WebRTC goes in the exact opposite direction. It favors the bigger players who can invest in media optimizations. It enables them to place patents on media processing and use it not only to differentiate but to create a legal moat around their applications and services.

The simplest example of this can be seen in how Google itself decided to share the concept by taking RNNoise and porting it to WebAssembly. The demo itself isn’t publicly available. It was shown at Kranky Geek, but that’s about it. Was it because it isn’t ready? Because Google prefers keeping such innovations to itself (which it is certainly allowed to do)? I don’t know.

There’s a dark side to enabling WebAssembly in WebRTC – and we will most definitely be seeing it soon enough.

Where do we go from here?

WebRTC is maturing, and with it, the way vendors are trying to adopt it and use it.

Enabling WebAssembly in WebRTC is going to take it to the next level, allowing developers more control of media processing. This is going to be great for those looking to differentiate and innovate or those that want to take WebRTC towards new markets and new use cases, where the current implementation isn’t suitable.

It is also going to require developers to have a better understanding of WebRTC if they want to unlock such capabilities.

The level playing field was greatly helped by Chromium forcing 100% open source. In order to get something for Hangouts, the code had to be open source (mostly; once Emil Ivov caught folks because of certain simulcast log lines which were not present in webrtc.org) and others could use the features. Simulcast is probably the main example for this.

Looks like the 100% open source rule is gone though. I’ve seen WebAssembly files delivered with Hangouts Meet which build inside/against WebRTC without being included.

100% open source is a myth.

Google are allowed to add their own secret sauce as others do. I am guessing the issue starts when Chrome itself is getting “private”/“undocumented” interfaces that only Google can use.

100% Open Source is not a myth. GNU (Free Software with apologies to rms….) and lots of other great projects are doing it.

Chromium and libwebrtc are at best viewable source. If you work at Google you get the fast path to make whatever change you want, if you don’t it is a much steeper slope. There are also plenty of crbugs that are marked private, and other issues you are going to run into.

It is a shame, because it gets the label ‘Open Source’ while other projects that are actually community owned get ignored.

@Philipp Hancke who knows what hasn’t been caught yet.

Sean, I guess you should read this: https://blog.usejournal.com/open-source-business-models-considered-harmful-2e697256b1e3

I tend to agree with it.

This is a highly important area. I am using this tech for a lot of the use cases mentioned.

But the premise is wrong imho.

QuicRTC is coming. 12 months away..

HTTP/3 is getting ratified and it’s QUIC. 8 months away.

So everything is moving to UDP and always encrypted.

So you have dependency on the OS kernel for networking. Take it anywhere. Playing field levelled..

Telcos will no longer be able to do QOS / traffic shaping or “network / contention management :)”. Flattening the playing field ….

The 5G system policy architects are using QUIC for backbone comms.

AV1 is marching forward.

It is going to level things – bye bye royalty rent seeking.

I do agree that WASM helps. It’s a huge innovation.

But when you combine Wasm and WebRTC then you don’t need servers! So a hugely level playing field, because there are no capex or running costs.

WebRTC NV is interesting and I am doing some of those.

I think there are a ton more use cases here that will become apparent too as people get into this, are brave, and defy the dogma we all fall into over time.

P2P might really really take off

Gerard,

I am somewhat less optimistic about the timeframe in which all these things will happen. It will probably be a lot longer (at least for QUIC in WebRTC, implemented in browsers, and with infrastructure and vendors using it).

Look how long WebRTC 1.0 is taking. I was promised it would get done in 2015.

We are working to take a very well-known videoconferencing brand, one that doesn’t like WebRTC… to WebRTC 😉