How many WebRTC RTCPeerConnection objects should we be aiming for?

This is something that bothered me in recent weeks during some analysis we’ve done for a specific customer at testRTC.

That customer built a service and wanted to get a clear answer to the question “how many users in parallel can fit into a single session?”

To get the answer, we had to first help him stabilize his service, which meant digging deeper into the statistics. That was a great opportunity to write an article, which is something I meant to do a few weeks from now. A recent question on Stack Overflow compelled me to do so somewhat earlier than expected – Maximum number of RTCPeerConnection:

I know web browsers have a limit on the amount of simultaneous http requests etc. But is there also a limit on the amount of open RTCPeerConnection’s a web page can have?

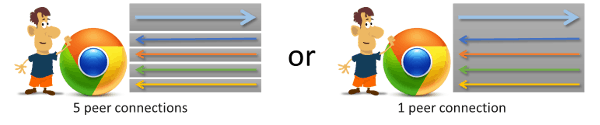

And somewhat related: RTCPeerConnection allows to send multiple streams over 1 connection. What would be the trade-offs between combining multiple streams in 1 connection or setting up multiple connections (e.g. 1 for each stream)?

The answer I wrote there, slightly modified is this one:

Not sure about the limit. It was around 256, though I heard it was increased. If you try to open up such peer connections in a tight loop – Chrome will crash. You should also not assume the same limit on all browsers anyway.

Multiple RTCPeerConnection objects are great:

- They are easy to add and remove, so offer a higher degree of flexibility when joining or leaving a group call

- They can be connected to different destinations

That said, they have their own challenges and overheads:

- Each RTCPeerConnection carries its own NAT configuration – so STUN and TURN bindings and traffic take place in parallel across RTCPeerConnection objects even if they get connected to the same entity (an SFU for example). This overhead is one of local resources like memory and CPU as well as network traffic (not a huge overhead, but it is there to deal with)

- They clutter your webrtc-internals view on Chrome with multiple tabs (a matter of taste), and SSRC might have the same values between them, making them a bit harder to trace and debug (again, a matter of taste)

A single RTCPeerConnection object suffers from having to renegotiate it all whenever someone needs to be added to the list (or removed).

I’d like to take a step further here in the explanation and show a bit of the analysis. To that end, I am going to use the following:

- testRTC – the service I’ll use to collect the information, visualize and analyze it

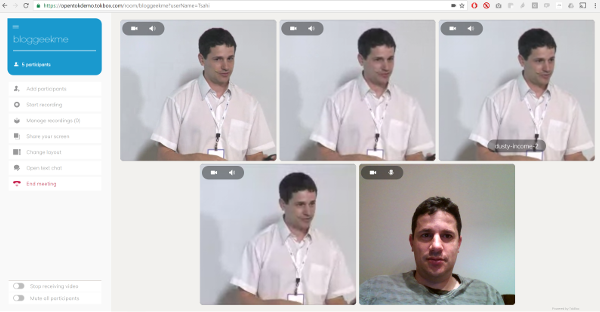

- Tokbox’ Opentok demo – Tokbox demo, running a multiparty video call, and using a single RTCPeerConnection per user

- Jitsi meet demo/service – Jitsi Videobridge service, running a multiparty video, and using a shared RTCPeerConnection for all users

If you’d rather consume your data from a slidedeck, then I’ve made a short one for you – explaining the RTCPeerConnection count issue. You can download the deck here

But first things first. What’s the relationship between these multiparty video services and RTCPeerConnection count?

WebRTC RTCPeerConnection and a multiparty video service

While the question on Stack Overflow can relate to many issues (such as P2P CDN technology), the context I want to look at it here is video conferencing that uses the SFU model.

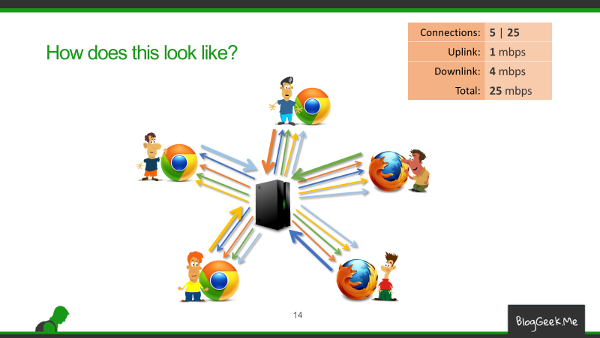

The illustration above shows a video conference between 5 participants. I’ve “taken the liberty” of picking it up from my Advanced WebRTC Architecture Course.

What happens here is that each participant in the session is sending a single media stream and receiving 4 media streams from the other participants. These media streams all get routed through the SFU – the box in the middle.

So. Should the SFU box create 4 RTCPeerConnection objects in front of each participant, each such object holding the media of one of the other participants, or should it just cram all media streams into a single RTCPeerConnection in front of each participant?

Let’s start from the end: both options will work just fine. But each has its advantages and shortcomings.

Opentok: RTCPeerConnection per user

If you are following the series of articles Fippo wrote with me about how to read webrtc-internals, then you should know a thing or two about its analysis.

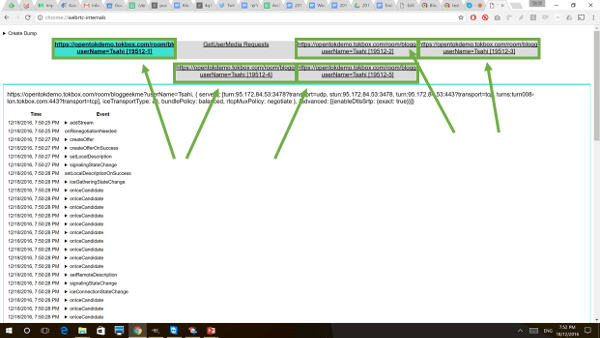

Here’s what that session looks like when I join on my own and get testRTC to add the 4 additional participants into the room:

Here’s a quick screenshot of the webrtc-internals tab when used in a 5-way video call on the Opentok demo:

One thing that should pop up by now (especially with them green squares I’ve added) – TokBox’ Opentok uses a strategy of one RTCPeerConnection per user.

One of these tabs in the green squares is the outgoing media streams from my own browser while the other four are incoming media streams from the testRTC browser probes that are aggregated and routed through the TokBox SFU.

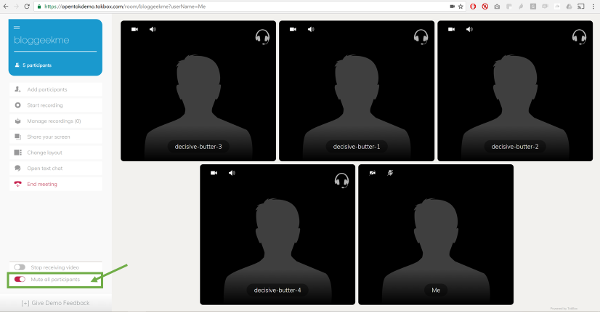

To understand the effect of having open RTCPeerConnections that aren’t used, I ran the same test scenario again, but this time, I had all participants mute their outgoing media streams. This is what the session looked like:

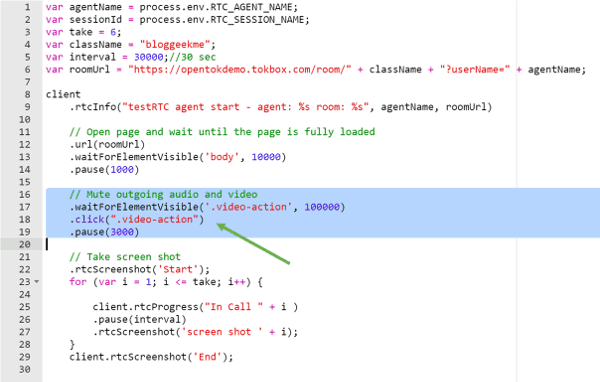

To achieve that with the Opentok demo, I had to use a combination of the onscreen mute audio button and having all participants mute their video when they join. So I added the following lines to the testRTC script – practically clicking on the relevant video mute button on the UI:

After this most engaging session, I looked at the webrtc-internals dump that testRTC collected for one of the participants.

Let’s start with what testRTC has to offer immediately by looking at the high level graphs of one of the probes that participated in this session:

- There is no incoming data on the channels

- There is some outgoing media, though quite low when it comes to bitrate

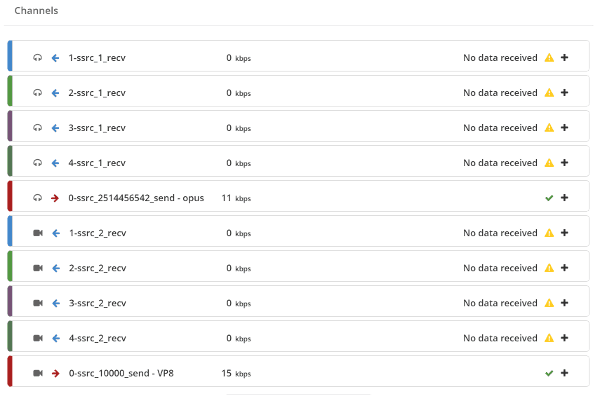

What we will do is ignore the outgoing media and focus on the incoming one only. Remember – this is Opentok, so we have 5 peer connections here: 1 outgoing, 4 incoming.
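The count above generalizes easily. Here is a minimal sketch of how many RTCPeerConnection objects a single browser ends up holding for each of the two strategies discussed in this article. The function name and the `Strategy` labels are mine, made up for illustration, and the model assumes every participant sends exactly one stream through an SFU.

```typescript
// Hypothetical labels for the two SFU strategies compared in this article.
type Strategy = "per-user" | "shared";

// Number of RTCPeerConnection objects one browser holds in an N-party SFU call.
function peerConnectionsPerClient(participants: number, strategy: Strategy): number {
  if (!Number.isInteger(participants) || participants < 1) {
    throw new RangeError("participants must be a positive integer");
  }
  // per-user: one sending connection plus one receiving connection
  // for each of the other participants.
  // shared: all streams ride on a single connection to the SFU.
  return strategy === "per-user" ? 1 + (participants - 1) : 1;
}

console.log(peerConnectionsPerClient(5, "per-user")); // 5 (1 outgoing, 4 incoming)
console.log(peerConnectionsPerClient(5, "shared"));   // 1
```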

A few things to note about Opentok:

- Opentok uses BUNDLE and rtcp-mux, so the audio and video share the same connection. This is rather typical of WebRTC services

- Opentok “randomly” picks SSRC values to be numbered 1, 2, … – probably to make it easy to debug

- Since each stream goes on a different peer connection, there will be one Conn-audio-1-0 in each session – the differences between them will be the indexed SSRC values

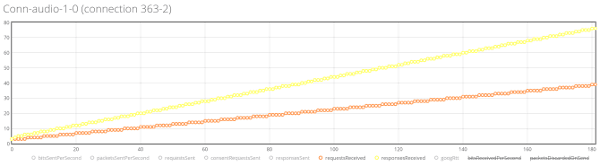

For this test run that I did, I had “Conn-audio-1-0 (connection 363-1)” up to “Conn-audio-1-0 (connection 363-5)”. The first one is the sender and the rest are our 4 receivers. Since we are interested here in what happens in a muted peer connection, we will look into “Conn-audio-1-0 (connection 363-2)”. You can assume the rest are practically the same.

Here’s what the testRTC advanced graphs had to show for it:

I removed some of the information to show these two lines – the yellow one showing responsesReceived and the orange one showing requestsReceived. These are STUN related messages, on a peer connection where there’s no real incoming media of any type. That’s almost 120 incoming STUN related messages in total for a span of 3 minutes. As we have 4 such peer connections that are receive only and silent – we get to roughly 480 incoming STUN related messages for the 3 minutes of this session – 160 incoming messages a minute – 2-3 incoming messages a second. Multiply the number by 2 so we include also the outgoing STUN messages and you get this nice picture.
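For those who like to see the arithmetic spelled out, here is a small sketch of the same calculation. The function and field names are made up for illustration. The "both directions" figure simply doubles the incoming count, which is the rough assumption made above, not something measured separately.

```typescript
interface StunOverhead {
  incomingTotal: number;       // incoming STUN messages across all idle connections
  incomingPerMinute: number;
  incomingPerSecond: number;
  bothDirectionsTotal: number; // assumes outgoing roughly mirrors incoming
}

function stunOverhead(
  messagesPerConnection: number, // incoming STUN messages seen on one idle connection
  idleConnections: number,       // receive-only, silent peer connections
  minutes: number                // length of the observation window
): StunOverhead {
  if (minutes <= 0) {
    throw new RangeError("minutes must be positive");
  }
  const incomingTotal = messagesPerConnection * idleConnections;
  const incomingPerMinute = incomingTotal / minutes;
  return {
    incomingTotal,
    incomingPerMinute,
    incomingPerSecond: incomingPerMinute / 60,
    bothDirectionsTotal: incomingTotal * 2,
  };
}

// The Opentok run: ~120 messages per connection, 4 silent connections, 3 minutes.
const run = stunOverhead(120, 4, 3);
console.log(run.incomingTotal);                // 480
console.log(run.incomingPerMinute);            // 160
console.log(run.incomingPerSecond.toFixed(2)); // "2.67"
console.log(run.bothDirectionsTotal);          // 960
```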

There’s an overhead for a peer connection. It comes in the form of keeping that peer connection open and running for a rainy day. And that is costing us:

- Network

- Some small amount of bitrate for STUN messages

- Maybe some RTCP messages going back and forth for reporting purposes – I wasn’t able to see them in these streams, but I bet you’d find them with Wireshark (I just personally hate using that tool. Never liked it)

- This means we pay extra on the network for maintenance instead of using it for our media

- Processing

- That’s CPU and memory

- We need to somewhere maintain that information in memory and then work with it at all times

- Not much, but it adds up the larger the session is going to be

Now, this overhead is low. 2-3 incoming messages a second is something we shouldn’t fret about when we get around 50 incoming audio packets a second. But it can add up. I got to notice this when a customer at testRTC wanted to have 50 or more peer connections with only a few of them active (the rest muted). It got kinda crowded. Oh – and it crashed Chrome quite a lot.

Jitsi Videobridge: Shared RTCPeerConnection

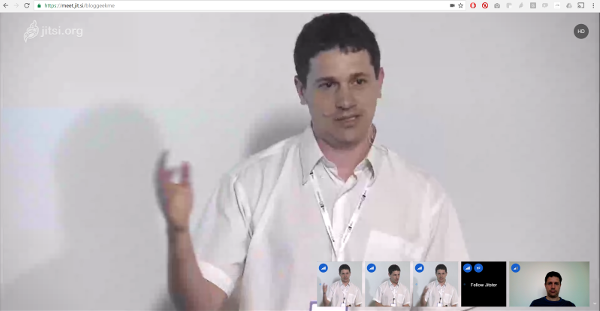

Now that we know what a 5-way video call looks like on Opentok, let’s see what it looks like with the Jitsi Videobridge.

For this, I again “hired” the help of testRTC and got a simple test script to bring 4 additional browsers into a Jitsi meeting room that I joined with my own laptop. The layout is somewhat different and resembles the Google Hangouts layout more:

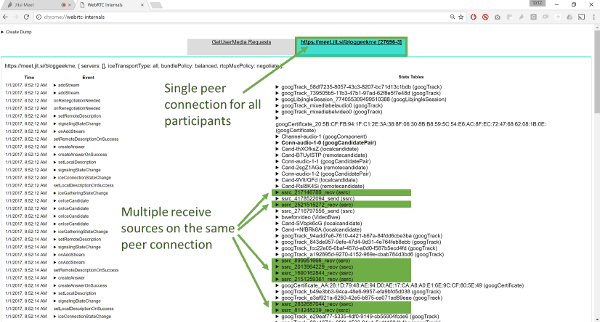

What we are interested in here is actually the peer connections. Here’s what we get in webrtc-internals:

A single peer connection for all incoming media channels.

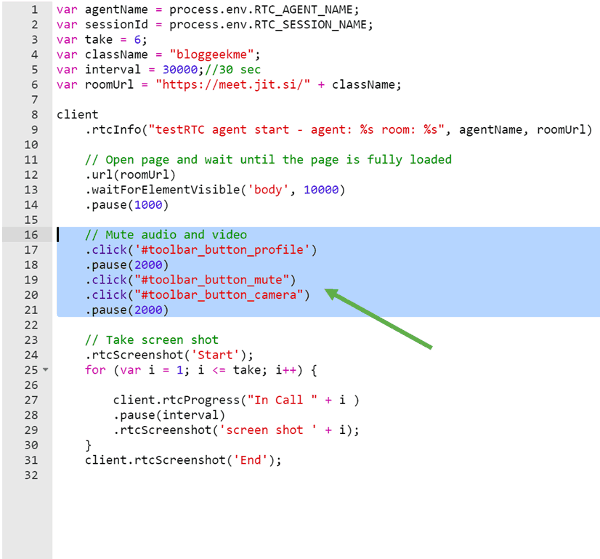

And again, as with the TokBox option – I’ll mute the video. For that purpose, I’ll need to get the participants to mute their media “voluntarily”, which is easy to achieve by a change in the testRTC script:

What I did was just instruct each of my automated testRTC friends that are joining Jitsi to immediately mute their camera and microphone by clicking the relevant on-screen buttons based on their HTML id tags (#toolbar_button_mute and #toolbar_button_camera), causing them to send no media over the network towards the Jitsi Videobridge.
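To make the idea concrete, here is a generic sketch of "click these two buttons on join". This is not the testRTC script and it doesn't use testRTC’s scripting API. It only illustrates the step using the two ids mentioned above. The `muteOnJoin` function and the `find` callback are hypothetical names. In a browser you could pass something like `(s) => document.querySelector<HTMLElement>(s)` as `find`.

```typescript
// Anything that can be clicked, e.g. an HTMLElement in a browser.
interface Clickable {
  click(): void;
}

// The ids mentioned in the text for the Jitsi toolbar buttons.
const MUTE_SELECTORS: readonly string[] = [
  "#toolbar_button_mute",
  "#toolbar_button_camera",
];

// Clicks every button that can be found and returns the selectors
// that were not found, so the caller can decide whether to retry or fail.
function muteOnJoin(
  find: (selector: string) => Clickable | null,
  selectors: readonly string[] = MUTE_SELECTORS
): string[] {
  const missing: string[] = [];
  for (const selector of selectors) {
    const button = find(selector);
    if (button) {
      button.click();
    } else {
      missing.push(selector);
    }
  }
  return missing;
}
```

Returning the missing selectors instead of throwing keeps the sketch usable while a page is still loading, when a button may simply not be rendered yet.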

To some extent, we ended up with the same boring user experience as we did with the Opentok demo: a 5-way video call with everyone muted and no one sending any media on the network.

Let’s see if we can notice some differences by diving into the webrtc-internals data.

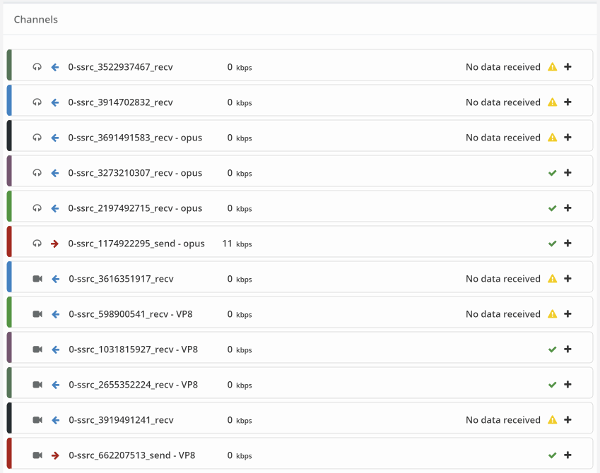

A few things we can see here:

- Jitsi Videobridge has 5 incoming video and audio channels instead of 4. Jitsi reserves and pre-opens an extra channel for future use of screen sharing

- Bitrates are 0, so all is quiet and muted

- Remember that all channels here share a single peer connection

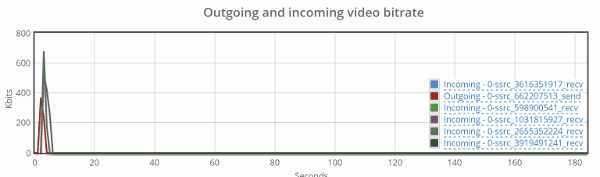

To make sure we’ve handled this properly, here’s a view of the video channels’ bitrate values:

There’s the obvious initial spike – that’s the time it took us to mute the channels at the beginning of the session. Other than that, it is all quiet.

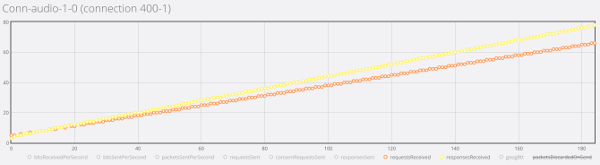

Now here’s the thing – when we look at the active connection, it doesn’t look much different than the ones we’ve seen in Opentok:

We end up with 140 incoming messages for the span of 3 minutes – but we don’t multiply it by 4 or 5. This happens once for ALL media channels.
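Putting the two runs side by side, here is a rough sketch of the comparison. The names are made up for illustration. The last line is an extrapolation, not a measurement: it assumes each extra idle connection costs about the same as the ones observed, and that a shared connection’s STUN traffic doesn’t grow with the number of participants.

```typescript
// Hypothetical labels for the two SFU strategies compared in this article.
type Strategy = "per-user" | "shared";

// Incoming STUN messages over the observation window for silent receivers.
function idleIncomingStun(
  strategy: Strategy,
  silentReceivers: number, // muted remote participants being received
  perConnection: number    // incoming STUN messages observed on one connection
): number {
  if (silentReceivers < 0 || perConnection < 0) {
    throw new RangeError("inputs must be non-negative");
  }
  // per-user: every silent receiver keeps its own connection alive.
  // shared: one connection carries everything, so the cost is paid once.
  const connections = strategy === "per-user" ? silentReceivers : 1;
  return connections * perConnection;
}

console.log(idleIncomingStun("per-user", 4, 120)); // 480 (the Opentok run)
console.log(idleIncomingStun("shared", 4, 140));   // 140 (the Jitsi run)

// Extrapolation only: 50 idle per-user connections under the same assumptions.
console.log(idleIncomingStun("per-user", 50, 120)); // 6000
```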

Shared or per user RTCPeerConnection?

This is a tough question.

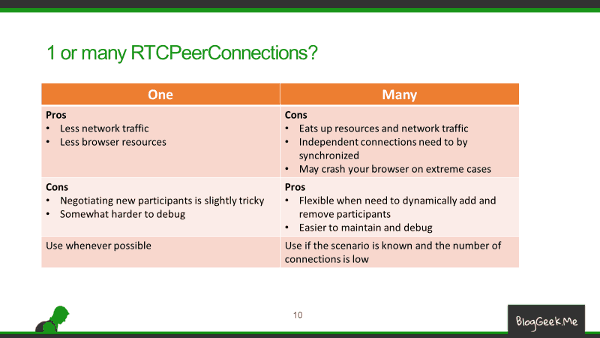

A single RTCPeerConnection means less overhead on the network and the browser resources. But it has its drawbacks. When someone needs to join or leave, there’s a need to somehow renegotiate the session – for everyone. And there’s also the extra complexity of writing the code itself and debugging it.

With multiple RTCPeerConnection we’ve got a lot more flexibility, since the sessions are now independent – each encapsulated in its own RTCPeerConnection. On the other hand, there’s this overhead we’re incurring.
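One way to picture this trade-off is to count what has to happen on the browsers when a new participant joins. The sketch below is a simplified model under my own assumptions: every participant sends one stream, "shared" means one peer connection per browser, and "per-user" means one sending connection plus one receiving connection per remote participant. The names are hypothetical.

```typescript
// Hypothetical labels for the two SFU strategies compared in this article.
type Strategy = "per-user" | "shared";

interface JoinCost {
  renegotiatedConnections: number; // existing connections that need a new offer/answer
  newConnections: number;          // connections created from scratch, across all browsers
}

// What happens across all browsers when one participant joins a call
// that already has `existing` participants in it.
function costOfJoin(existing: number, strategy: Strategy): JoinCost {
  if (!Number.isInteger(existing) || existing < 0) {
    throw new RangeError("existing must be a non-negative integer");
  }
  if (strategy === "shared") {
    // Everyone already in the call renegotiates their single connection;
    // the newcomer opens one connection.
    return { renegotiatedConnections: existing, newConnections: 1 };
  }
  // per-user: nothing existing is touched. Each existing browser adds one
  // receiving connection, and the newcomer opens 1 sending + `existing` receiving.
  return { renegotiatedConnections: 0, newConnections: existing + (1 + existing) };
}

console.log(costOfJoin(4, "shared"));   // 4 renegotiations, 1 new connection
console.log(costOfJoin(4, "per-user")); // 0 renegotiations, 9 new connections
```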

Here’s a quick table to summarize the differences:

What’s Next?

Here’s what we did:

- We selected two seemingly “identical” services

- The free Jitsi Videobridge service and the Opentok demo

- We focused on doing a 5-way video session – the same one in both

- We searched for differences: Opentok had 5 RTCPeerConnections whereas Jitsi had 1 RTCPeerConnection

- We then used testRTC to define the test scripts and run our scenario

- Have 4 testRTC browser probes join the session

- Have them mute themselves

- Have me join as another participant from my own laptop into the session

- Run the scenario and collect the data

- Looked into the statistics to see what happens

- Saw the overhead of the peer connection

I have only scratched the surface here: There are other issues at play – creating an RTCPeerConnection is a traumatic event. When I grew up, I was told connecting TCP is hellish due to its 3-way handshake. Setting up an RTCPeerConnection is a lot more time consuming and energy consuming than a TCP 3-way handshake and it involves additional players (STUN and TURN servers).

Rather consume your information from a slidedeck? Or have it shared/printed with others in your office? You can download the RTCPeerConnection count deck here.

Another advantage of single peer connection is establishment time, adding new participants should be faster.

An advantage of using multiple peer connections is easier (or less risky) browser compatibility.

Regarding “customer wanted to have 50 or more peer connections” the bigger overhead is probably the buffers and encoders/decoders and you are going to have the same amount of them no matter if you use 1 peer connection or multiple ones. The optimal solution for those use cases should be a single peer connection with a single media stream and the SFU switching which participant to forward (kind of what hangouts do).

Gustavo,

Thanks for sharing.

I am old. I don’t come from a world of abundance. I was taught to count bits and reserve resources. To write code in C to get it optimized. To look at what goes over the network. While it may seem negligible it does add up and cost resources – especially when the intent is to hold connections opened but not stream media on them all the time – that’s what killed the customer’s use case and not the number of encoders/decoders. But that’s a matter for another article I guess 🙂

That said, I admit that for many use cases, the differences are negligible.

Hi, thank you for your report. I have a question. We have a live streaming video website using WebRTC. Is it possible for the user to have multiple tabs open and watch streams simultaneously? Or to jump from tab to tab while multiple tabs are streaming live?

thank you

I don’t think there’s a problem.

Easiest way would be to try it out yourself. Just open Jitsi meet or appear.in to more than a single room from multiple tabs in two or more machines and see what happens and if it fits the user experience you’re looking for.

if you’re counting beans you shouldn’t forget the overhead from the extra RTCP RRs and the more frequent REMBs.

Jitsi has the advantage that it could say “sorry, no firefox” until Firefox implemented Unified Plan. Tokbox always had to work with Firefox. And changing the model is hard.

Very interesting, thank you!

How about RTCDataChannel? Is it possible for example to have 1 RTCPeerConnection and 2 RTCDataChannel with two different peers? (before reading this article I thought not, but now I’m confused).

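As far as my understanding goes, a peer connection always connects to a single remote peer. It can carry more than one RTCDataChannel, but all of them go to that same peer, so two different peers means two peer connections.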

You can open multiple separate peer connections between the same two peers.

Hello,

Thank you for your posting!

I learned a lot about multi users communications.

I am preparing a service for max 40 users in each chat room with voice. Do you think WebRTC is a good option for this service? or any other protocol recommend for it?

Yoonjoo,

I don’t think that any other protocol would do these days. Not because of technical issues, but rather because of where we’re headed and where the focus is these days.

Being able to scale depends on your backend more than it does on the protocol you choose.

40 users is achievable with WebRTC. The devil is in the details. Things like how many will be viewable at the time, what level of interactivity you need, their location, etc.

This is a very good post. Thanks.

Hey Tsahi,

I would like to know more about the 1 to many connections. I have successfully implemented many to many peer connections, but was not able to find any relevant code for using 1 peer to handle multiple peers.

Can you please share some code or provide an insight on how to setup the peer to handle multiple peer connections.

Thanks

Ashwani,

Look at unified plan. It is how peer connections are currently handled. Most vendors have decided to pick the route of a peer connection per stream. A few are now on the single peer connection route – not sure if any of the open source alternatives out there support such an implementation.

Hello,

This is a great post, thanks very much.

What kind of approach would you use for a service where only one user shares media to a vast (as vast as possible) audience? Do you have any recommendations for media servers?

Thanks,

Matteo

Matteo,

To self-develop, look at Janus and mediasoup. For something a bit more targeted, check Red5 Pro and Ant Media. For SaaS look at millicast, Limelight and Phenix. Maybe also Agora and TokBox.

Hi! Excuse my ignorance, but I’m a bit confused. Suppose I have 3 simultaneous users – surely each user would have to have 2 RTC peer connections, correct? Is this article about allowing that to be 1? If so, could you briefly outline how this is possible?

With mesh, you must have 2 peer connections.

If you are using a media server, then today this can be implemented as a single peer connection between each user and the media server. In most cases though, most media servers today still use multiple peer connections for this scenario as well.

Hey Tsahi, you bring up some really important details. We are looking to have up to 100 people upstream to a single chrome instance as their peer (hub spoke). We are not looking for any downstream from the single Chrome peer, or any connections between the 100 peers. Any suggestions on if this will work and how to optimize it, with regard to peer connections. The upstream connections will all be active at the same time. Thank you!

Michael,

Not sure I understand your requirement or intent exactly, but if this is to have 100 browsers connect to each other without a media server, then you’re in for a huge headache – especially if you want to stream video on all of them all the time…

Hi, I’ve been learning WebRTC recently. I want to build an SFU. With some help from Google, I figured out how to implement ICE and the DTLS-SRTP key exchange.

The next step is video forwarding. After reading your article, I decided to use the single peer connection solution. But for now I have no idea how to negotiate when a new participant joins. Any resources about that?

Look at https://webrtc.org/getting-started/unified-plan-transition-guide

To use a single peer connection you’ll need to implement unified plan.

Hi Tsahi, i have a peer connection on which i am using exchanging the stream (local and remote) now i want to send the getDisplayMedia stream as another stream to the other peer so i can display both my video and getDisplayMedia stream both at the same time on different Video element how can i do that? any reference to source code or anything will be very helpful

thanks in advance

Mooman, this question is more suitable for stackoverflow or discuss-webrtc. Somewhat less in a comment on an article on this blog.

Hi Tsahi Levent-Levi, you are the go to person when learning more in deep details of WebRTC.

I am implementing a multi party call app, I started with one to one video calls, and now I am using Kurento media server to achieve multi party calls without doing a mesh architecture.

But for the examples I saw, each user needs to open a receive only peer connection per each participant to receive their stream. This means if there are 5 people in the call. There will be 1 connection for sending your media and other 4 WebRTC connections to receive the corresponding media of the other users. How is this different from a mesh architecture? Why people compare Mesh vs SFU if you still need to open connections to receive medias from each other user? The only difference is that you upload your own media once.

So how can we avoid that? According to your blog one of the solutions is Just have 1 connection to send and 1 connection to receive multiple medias? Is that part of official WEBRTC standards?

Can that still be considered SFU? Is it a new architectural approach?

Thanks in advance.

Carlos,

A few thoughts on this one:

1. I wouldn't use Kurento. Not well maintained. Popularity is dropping. I'd use something else (mediasoup, Jitsi, Janus, …)

2. Peer Connections count is important, but in the case of 5-way calls not critical these days. There's no need to make this your main parameter for decision making

3. With an SFU, media flows to the media server which then routes it around. This means a lot less strain on the uplink, where users are more limited in available bitrate (not to mention the reduction in CPU requirements from the client)