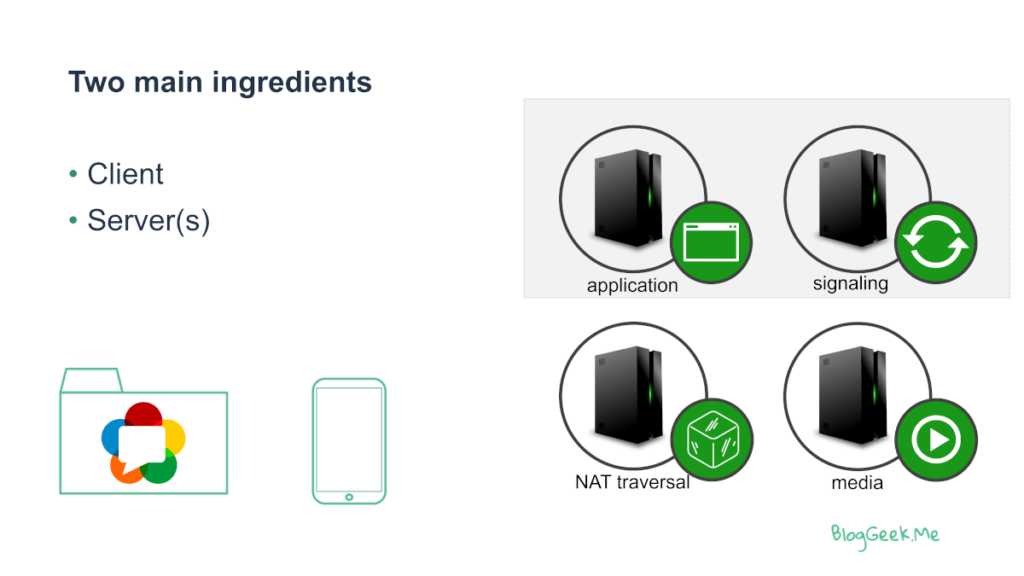

When someone says “WebRTC server”, what do they really mean? There are 4 different WebRTC servers that you need to know about: application, signaling, NAT traversal and media.

WebRTC is a communications standard that enables us to build a variety of applications. The most common ones are voice and video calling services (1:1 or group calls), but you can also use it for broadcasts, live streaming, private/secure messaging, etc.

Getting it to work requires a multitude of “WebRTC servers”: machines that reside in the cloud (or at least somewhere remote and reachable) and provide the functionality needed to get WebRTC sessions connected properly.

What I’d like to do here is explain what types of WebRTC servers exist, what they are used for and when you will need them. There are 4 types of servers detailed in this article:

- WebRTC application servers – essentially the website hosting the service

- WebRTC signaling servers – how clients find each other and connect to each other

- NAT traversal servers for WebRTC – servers used to assist in connecting through NATs and firewalls

- WebRTC media servers – media processing servers for group calling, recording, broadcasting and other more complex features

More of the audio-visual type? I’ve recorded a quick free 3-part video course on WebRTC servers.

WebRTC application servers

Not exactly a WebRTC server, but you can’t really have a service without it.

Think of it as the server that serves you the web page when you open the application’s website itself. It hosts the HTML, CSS and JS files, along with a few (or many) images. Some of these files might not even be served directly from the application server, but rather from a CDN.

What’s so interesting about WebRTC application servers? Nothing at all. They are just there and are needed, just like in any other web application out there.

WebRTC signaling servers

Signaling servers for WebRTC are sometimes embedded or collocated/co-hosted with the application servers, but more often than not they are built and managed separately from the application itself.

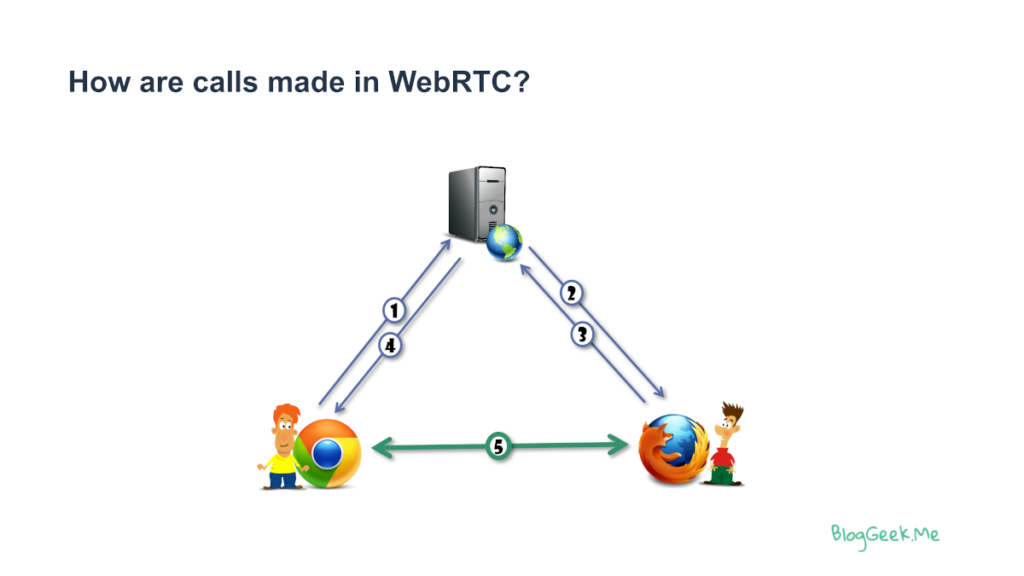

While WebRTC handles the media, it leaves the signaling to “someone else” to take care of. WebRTC generates SDP (Session Description Protocol) messages and ICE candidates that the application needs to pass between the users. Passing these messages is the main concern of a signaling server.
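To make this division of labor concrete, here is a minimal sketch of the messages a signaling server might carry. The message names (`offer`, `answer`, `candidate`) and the `from`/`to` fields are assumptions for illustration; WebRTC does not define any signaling format, and the server treats the SDP as an opaque string it never needs to parse.

```typescript
// Hypothetical signaling envelope: WebRTC itself does not mandate any wire format.
type SignalingMessage =
  | { type: "offer" | "answer"; from: string; to: string; sdp: string }
  | { type: "candidate"; from: string; to: string; candidate: string };

// Parse and minimally validate an incoming JSON message.
// The SDP and candidate strings are passed through untouched: the signaling
// server only needs to know where each message comes from and where it goes.
function parseSignalingMessage(raw: string): SignalingMessage | null {
  let data: unknown;
  try {
    data = JSON.parse(raw);
  } catch {
    return null; // not valid JSON
  }
  if (typeof data !== "object" || data === null) return null;
  const { type, from, to, sdp, candidate } = data as Record<string, unknown>;
  if (typeof from !== "string" || typeof to !== "string") return null;
  if ((type === "offer" || type === "answer") && typeof sdp === "string") {
    return { type, from, to, sdp };
  }
  if (type === "candidate" && typeof candidate === "string") {
    return { type, from, to, candidate };
  }
  return null; // unknown message type or missing payload
}
```

In a real deployment these strings would travel over a WebSocket or a similar channel, and the server could route each one based on the `to` field alone.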

There are 4 main signaling protocols that are used today with WebRTC, each lending itself to different signaling servers that will be used in the application:

- SIP – The dominant telecom VoIP protocol out there. When used with WebRTC, it is done as SIP over WebSocket. CPaaS and telecom vendors end up using it with WebRTC, mostly because they already had it in use in their infrastructure

- XMPP – A presence and messaging protocol. Some of the CPaaS vendors picked this one for their signaling protocol

- MQTT – A messaging protocol used mainly for IoT (Internet of Things). The first time I saw it used with WebRTC was in Facebook Messenger, which makes it a very widespread signaling protocol for WebRTC

- Proprietary – the most common approach of all, where developers implement their own or pick an alternative that works for them

SIP, XMPP and MQTT all have existing servers that can be deployed with WebRTC.

The proprietary option takes many shapes and sizes. Node.js is quite a common server alternative used for WebRTC signaling (just make sure not to pick an outdated alternative – that’s quite a common mistake in WebRTC).
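At its core, a proprietary signaling server can be little more than a relay that forwards opaque messages between connected users. Below is a minimal in-memory sketch of that idea. The `SignalingRelay` class and its `send` callback are hypothetical stand-ins for a real transport such as a WebSocket connection on Node.js; a production server would add authentication, rooms, reconnection handling and horizontal scaling.

```typescript
// Hypothetical transport callback: in practice this would write to a WebSocket.
type Send = (payload: string) => void;

// A transport-agnostic relay: each connected client registers a send callback.
class SignalingRelay {
  private clients = new Map<string, Send>();

  join(clientId: string, send: Send): void {
    this.clients.set(clientId, send);
  }

  leave(clientId: string): void {
    this.clients.delete(clientId);
  }

  // Forward an opaque message (offer, answer or ICE candidate) to its target.
  // Returns false if either side is not connected, so the caller can report it.
  relay(fromId: string, toId: string, payload: string): boolean {
    const target = this.clients.get(toId);
    if (!target || !this.clients.has(fromId)) return false;
    target(JSON.stringify({ from: fromId, payload }));
    return true;
  }
}
```

The relay never inspects the payload, which is why the signaling server stays simple while the heavy lifting happens in the browsers and media servers.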

If you are going towards the proprietary route:

- Don’t use apprtc as the baseline for your WebRTC signaling server

- Consider my WebRTC the missing codelab course

NAT traversal servers for WebRTC

To work well, WebRTC requires NAT traversal servers. These WebRTC servers are in charge of making sure you can send media from one browser to another.

There are two types of NAT traversal servers needed: STUN and TURN. TURN servers always implement STUN as well, so in all likelihood you’re looking at a single server here.
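In the browser, STUN and TURN servers are handed to `RTCPeerConnection` through its `iceServers` configuration. The sketch below builds that list for one host that plays both roles. `turn.example.com` and the credentials are placeholders, and the `IceServer` interface simply mirrors the shape of the browser’s `RTCIceServer` dictionary so the code also runs outside a browser. Ports 3478 and 5349 are the default ports for STUN/TURN and for TURN over TLS.

```typescript
// Mirrors the browser's RTCIceServer dictionary (urls, username, credential).
interface IceServer {
  urls: string | string[];
  username?: string;
  credential?: string;
}

// Build an iceServers list for a single host acting as both STUN and TURN server.
function buildIceServers(host: string, username: string, credential: string): IceServer[] {
  return [
    // STUN needs no credentials: it only tells the client its public address.
    { urls: `stun:${host}:3478` },
    // TURN over UDP, TCP and TLS, so the relay stays reachable
    // even on networks that block UDP.
    {
      urls: [
        `turn:${host}:3478?transport=udp`,
        `turn:${host}:3478?transport=tcp`,
        `turns:${host}:5349?transport=tcp`,
      ],
      username,
      credential,
    },
  ];
}

// In a browser: new RTCPeerConnection({ iceServers: buildIceServers(host, user, pass) })
```

Listing TCP and TLS variants next to UDP is a design choice aimed at restrictive firewalls; whether you need all three depends on where your users connect from.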

STUN is used to answer the question “what is my public IP address?” and then share the answer with the other user in the session, so they can try to use that address to send media directly.

TURN is used to relay the media through it (which adds to your bandwidth costs), and is used when you can’t reach the other user directly.
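One way to see which server did the work in a given session is to look at the ICE candidates the browser gathers: `host` candidates are local addresses, `srflx` (server reflexive) candidates are public addresses learned through STUN, and `relay` candidates are allocations on a TURN server. A minimal sketch that pulls the type out of a candidate string, assuming the standard `typ` token of the ICE candidate grammar (browsers also expose it as the `type` property of `RTCIceCandidate`):

```typescript
type CandidateType = "host" | "srflx" | "prflx" | "relay";

// Extract the candidate type from an ICE candidate line, e.g.
// "candidate:1 1 udp 1677729535 203.0.113.7 46154 typ srflx raddr ..."
function candidateType(candidate: string): CandidateType | null {
  const tokens = candidate.trim().split(/\s+/);
  const i = tokens.indexOf("typ");
  if (i === -1 || i + 1 >= tokens.length) return null;
  const t = tokens[i + 1];
  return t === "host" || t === "srflx" || t === "prflx" || t === "relay" ? t : null;
}

// Map each candidate type to the server (if any) that made it possible.
function describe(candidate: string): string {
  switch (candidateType(candidate)) {
    case "host":
      return "local address, no server involved";
    case "srflx":
      return "public address discovered via STUN";
    case "prflx":
      return "address learned from the peer during connectivity checks";
    case "relay":
      return "media relayed through TURN";
    default:
      return "unrecognized candidate";
  }
}
```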

A few quick thoughts here:

- You need both STUN and TURN to make WebRTC work. You can skip STUN if the other end is a media server, but you will still need TURN even if that media server is on a public IP address

- Don’t use free STUN servers in your production environment. And never ever use “free” TURN servers

- If you deploy your own servers, you will need to place the TURN servers as close as possible to your users, which means handling TURN geolocation

- TURN servers don’t have access to the media. Ever. They don’t pose a privacy issue if they are configured properly, and they can’t be used by you or anyone else to record the conversations

- Prefer using paid managed WebRTC TURN servers instead of hosting your own if you can

- Make sure you configure NAT traversal sensibly. Here’s a free 3-part video course on effectively connecting WebRTC sessions
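Handling TURN geolocation can start as simply as steering each user to the geographically closest TURN region. A minimal sketch, assuming you already know the user’s approximate coordinates (for example from a GeoIP lookup) and keep a list of your TURN regions; the region names, URLs and coordinates are hypothetical. Distance uses the haversine great-circle formula, which is only a rough proxy for network latency, not a measurement of it.

```typescript
interface TurnRegion {
  name: string; // hypothetical region label
  url: string; // TURN URL for that region
  lat: number; // degrees
  lon: number; // degrees
}

const EARTH_RADIUS_KM = 6371;

// Great-circle distance between two points using the haversine formula.
function haversineKm(lat1: number, lon1: number, lat2: number, lon2: number): number {
  const toRad = (d: number) => (d * Math.PI) / 180;
  const dLat = toRad(lat2 - lat1);
  const dLon = toRad(lon2 - lon1);
  const a =
    Math.sin(dLat / 2) ** 2 +
    Math.cos(toRad(lat1)) * Math.cos(toRad(lat2)) * Math.sin(dLon / 2) ** 2;
  return 2 * EARTH_RADIUS_KM * Math.asin(Math.sqrt(a));
}

// Pick the TURN region geographically closest to the user.
function nearestTurnRegion(regions: TurnRegion[], lat: number, lon: number): TurnRegion {
  if (regions.length === 0) throw new Error("no TURN regions configured");
  let best = regions[0];
  let bestKm = haversineKm(lat, lon, best.lat, best.lon);
  for (const r of regions.slice(1)) {
    const km = haversineKm(lat, lon, r.lat, r.lon);
    if (km < bestKm) {
      best = r;
      bestKm = km;
    }
  }
  return best;
}
```

Managed TURN services typically handle this routing for you, which is part of why they are worth paying for.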

WebRTC media servers

WebRTC media servers are servers that act as WebRTC clients but run on the server side. They are termination points for the media where we’d like to take action. Popular tasks done on WebRTC media servers include:

- Group calling

- Recording

- Broadcast and live streaming

- Gateway to other networks/protocols

- Server-side machine learning

- Cloud rendering (gaming or 3D)

The adventurous and strong-hearted will go and develop their own WebRTC media server. Most would pick a commercial service or an open source one. For the latter, check out these tips for choosing a WebRTC open source media server framework.

In many cases, what developers are looking for is support for group calling, something that almost always requires a media server. In that case, you need to decide whether to go with the classic (and now somewhat old) MCU mixing model or with the more accepted and modern SFU routing model. You will also need to think a lot about the sizing of your WebRTC media server and about how to allocate users and sessions on WebRTC media servers.
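The difference between the two models shows up quickly in bandwidth arithmetic. The sketch below assumes N participants who each send a single stream at the same bitrate and receive everyone else, and that an MCU’s mixed output uses that same bitrate; it ignores simulcast, audio and protocol overhead, so treat it as a back-of-the-envelope aid rather than a capacity plan.

```typescript
interface ServerLoad {
  ingressMbps: number; // total media received by the media server
  egressMbps: number; // total media sent by the media server
  perClientDownMbps: number; // what each participant must download
}

// SFU: routes every incoming stream to every other participant, unmixed.
function sfuLoad(participants: number, streamMbps: number): ServerLoad {
  return {
    ingressMbps: participants * streamMbps,
    egressMbps: participants * (participants - 1) * streamMbps,
    perClientDownMbps: (participants - 1) * streamMbps,
  };
}

// MCU: decodes and mixes into one composite per participant, then re-encodes.
// Bandwidth stays linear, but the server pays in CPU for all that transcoding.
function mcuLoad(participants: number, streamMbps: number): ServerLoad {
  return {
    ingressMbps: participants * streamMbps,
    egressMbps: participants * streamMbps,
    perClientDownMbps: streamMbps,
  };
}
```

For 10 participants at 0.5 Mbps each, this gives 45 Mbps of SFU egress versus 5 Mbps for the MCU; the MCU trades that bandwidth for heavy decoding and encoding work.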

For recording WebRTC sessions, you can either do that on the client side or the server side. In both cases you’ll be needing a server, but what that server is and how it works will be very different in each case.

If it is broadcasting you’re after, then you need to think about the broadcast size of your WebRTC session.
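To get a first feel for broadcast size: one way to scale a WebRTC broadcast is to cascade media servers, with an origin server feeding a layer of edge servers that each serve a share of the viewers. The sketch below does the back-of-the-envelope math for a hypothetical two-tier cascade. `viewersPerServer` is an assumed capacity that you would have to measure for your own media server, bitrate and hardware, and the check assumes the origin can feed no more edge servers than that same number.

```typescript
// Hypothetical two-tier cascade: one origin server feeding edge servers.
// viewersPerServer is an assumption you must measure for your own setup.
function cascadePlan(viewers: number, viewersPerServer: number) {
  if (viewersPerServer < 1) throw new Error("capacity must be at least 1");
  const edgeServers = Math.ceil(viewers / viewersPerServer);
  if (edgeServers > viewersPerServer) {
    // The origin can only feed so many edges; a deeper tree would be needed.
    throw new Error("needs more than two tiers");
  }
  return { edgeServers, totalServers: edgeServers + 1 };
}
```

For example, 6,000 viewers at an assumed 500 viewers per server works out to 12 edge servers plus the origin.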

A quick FAQ on WebRTC servers

Not really. You will somehow need to know who to communicate with and, in many cases, you will need to negotiate IP addresses and even route data through a server to connect your session properly.

That depends on the service you are using, as different implementations will put their focus on different features.

In general, signaling and NAT traversal servers in WebRTC don’t have access to the actual data. Media servers often have (and need) access to the actual data.

Can I host WebRTC servers on AWS?

Yes. You can host your WebRTC servers on AWS. Many popular WebRTC services are hosted today on AWS, Google Cloud, Microsoft Azure and Digital Ocean servers. I am sure other hosting providers and data center vendors work as well.

WebRTC can be added to any WordPress, PHP or other website. In such a case, the WordPress/PHP server will serve as the application server, and you will need to add the other WebRTC servers into the mix: signaling server, NAT traversal server and sometimes media servers.

Know your WebRTC servers

No matter how or what it is you are developing with WebRTC, you should know what WebRTC servers are and what they are used for.

If you want to expand your knowledge and understanding of WebRTC, check out my WebRTC training courses.

Interesting. What is the best latency you can achieve with this technology? I am presently looking into ways of streaming HD-quality camera video in real time, preferably with latency in the region of 50 ms.

Thank you

Simon

Simon,

WebRTC is good enough for Google Stadia, which is high-end cloud gaming (requiring low latency).

Whether you can get to 50ms latency depends on a lot of aspects – the distance between the users, the servers, the type of hardware you have (cameras and displays tend to add latency), etc.

Dear Tsahi,

We want to know how we can make money with WebRTC servers. Can you help us with the technology?

Kind Regards

Helmut

Helmut, who am I to say how money is to be made from WebRTC servers?

Find a problem that requires solving and that people are willing to pay for.

Simon,

– The state of the art today seems to be around 150ms with Stadia (ref: slides from Justin Uberti at the Kranky Geek event).

– You should always do a sanity check: if everything were perfect, the round trip between Los Angeles and New York at the speed of light would take almost 30ms. To get down to 50ms all in all, you would have to be extremely close.

– Most media servers will add a latency of no less than 1 video frame (33ms if running at 30fps, 17ms at 60fps), and so will the displays (Chrome has a two-frame buffer by default). 50ms seems difficult to achieve.
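The numbers in that sanity check are easy to reproduce. A minimal sketch: the round trip is computed at the speed of light in vacuum and, for comparison, in optical fiber at roughly two thirds of that speed. The 3,940 km Los Angeles to New York figure is an approximate great-circle distance; real network paths are longer.

```typescript
const SPEED_OF_LIGHT_KM_PER_MS = 299.792458; // in vacuum
const FIBER_FACTOR = 2 / 3; // light in fiber travels at roughly 2/3 c

// Round-trip propagation delay in milliseconds for a given one-way distance.
function roundTripMs(distanceKm: number, inFiber = false): number {
  const speed = SPEED_OF_LIGHT_KM_PER_MS * (inFiber ? FIBER_FACTOR : 1);
  return (2 * distanceKm) / speed;
}

// Duration of one video frame in milliseconds at a given frame rate.
function frameMs(fps: number): number {
  return 1000 / fps;
}

const LA_NY_KM = 3940; // approximate great-circle distance
console.log(roundTripMs(LA_NY_KM).toFixed(1)); // 26.3 (vacuum)
console.log(roundTripMs(LA_NY_KM, true).toFixed(1)); // 39.4 (fiber)
console.log(frameMs(30).toFixed(1), frameMs(60).toFixed(1)); // 33.3 16.7
```

Add a single frame of buffering at 30fps and the 50ms budget is already exceeded over this distance, whether you count one way (about 20ms in fiber) or the round trip.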

Just to say, thank you for this very clarifying article.

I have taken your excellent course, but I like to check your blog too, as it reinforces topics and broadens horizons. Still, it is the basics from the course that give me the pillars for understanding the WebRTC world. Keep writing! 🙂

Thanks for the kind words Carlos 🙂

You mentioned “You need both STUN and TURN to make WebRTC work”. Don’t you need TURN only when STUN fails? Some stats I read mentioned that for at least 70% of connections, STUN is fine, and only the remaining ones require TURN. Or are you speaking from a production standpoint?

You need TURN for your service to work properly.

The fact that it works for some people without TURN doesn’t mean you don’t need it.

I guess that’s a production standpoint.

You mentioned above “TURN servers always implement STUN as well”

So if I use a TURN server, it can also act as a STUN server?

It tries STUN first and, if that fails, uses TURN instead. Am I correct?

Thank you.

It means that when the WebRTC client goes to get a TURN allocation, the TURN server also shares the public IP address it sees, which gives you the same result STUN would.

Other than that, coturn also has STUN built into it.

And yes, if STUN is available it should be picked up first.

hey Tsahi,

I’ve been following your updates for a long time now; you’ve always been the go-to WebRTC expert, and your publications contribute a lot to the ecosystem.

I’ve been out of the RTC game for a couple of years, focusing on another direction, but recently a client needed some advice regarding a WebRTC implementation in their project, and I found this post to be a fantastic summary to refresh my memory on how it all works. Thank you!

I also wanted to ask whether you have an opinion on using the ejabberd server for WebRTC signalling. Their newest version now has a built-in TURN server, and they also claim to support SIP and MQTT in addition to XMPP. Unfortunately, ProcessOne doesn’t do a very good job of documenting it, maybe on purpose, as they also offer a commercial version. Anyway, do you have any opinion on this one?

Don’t have an opinion about this one.

Hi Tsahi,

Thanks for this wonderful article. I am going to host an online conference for 6000 people. Basically, it is one-to-many streaming. How can I start? What are the hard requirements for the media servers and TURN servers? Is it possible to build that system entirely with open source software?

Thanks a lot.

John, it is, but don’t go there until you improve your grasp of WebRTC a lot further. Going beyond a few hundred people with WebRTC takes more care and attention than just installing an open source project…

Is it possible to create WebRTC servers without any open source framework?

I want to create a WebRTC SFU, but I don’t want to use another framework.

Sure you can. I wouldn’t advise doing that, especially if you ask such a question.

Developing your own SFU requires you to know WebRTC inside and out. And if that was the case, you’d know if it is possible and what it takes to do that.

I created a video conference app, and I use https://github.com/node-webrtc/node-webrtc to help route all the streams from each client. But once a server has 18 or more connections, my service always hangs and every connection gets disconnected.

I don’t know what is wrong in my code.

This is my code repository: https://github.com/rootSectornet/video_conference.git

I would love it if you could tell me what is wrong.

The place to ask this would be at the github repo’s issues tab.

Dear Tsahi,

Thanks for the wonderful article. I just have one question.

I have one requirement: I need to send the streams from my two cameras to the server and then show them in a Windows application on the user’s side.

So, do I have to use both an application server and a media server, or which one would be more effective in my case?

Thanks in advance.

Krish.

You need both as you’ve indicated you want the media to go through the server – which assumes a media server.

The signaling server may be the same server, though for me this is a different logical entity.

Hi Tsahi,

To your question “Can I host WebRTC servers on AWS?” your answer also has the line: “Many popular WebRTC services are hosted today on AWS, Google Cloud, Microsoft Azure and Digital Ocean servers.”

Amazon has introduced a WebRTC service called Chime, which we were greatly interested in using. Unfortunately, it is unavailable in our geographical region.

So, could you tell me what the “many popular WebRTC services” currently available on AWS, Google Cloud, MS Azure, etc. are? Are these services readily available as plug-ins or with some kind of API integration?

Thanks & best regards,

Jay

Not sure what your region is.

All cloud vendors today have and use data centers in India – not with the same spread they do over Europe or the US, but they do have data centers there.

Hi. Where can I deploy my WebRTC app built with simple-peer? It is created in a Laravel project. I could not see the video from the camera when I deployed it to Heroku. The error is: Uncaught (in promise) TypeError: Cannot read property ‘getUserMedia’ of undefined.

You should use HTTPS and not HTTP.
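That particular error comes from `navigator.mediaDevices` being `undefined`: browsers only expose it in a secure context (HTTPS, or `localhost` during development). A defensive check before calling `getUserMedia` turns the cryptic `TypeError` into an actionable message. In this minimal sketch the `NavigatorLike` and `MediaDevicesLike` interfaces are simplified stand-ins for the browser objects, so the function can run outside a browser; in a page you would pass `navigator` and `window.isSecureContext`.

```typescript
// Simplified structural types so the sketch runs outside a browser.
interface MediaDevicesLike {
  getUserMedia(constraints: { audio?: boolean; video?: boolean }): Promise<unknown>;
}
interface NavigatorLike {
  mediaDevices?: MediaDevicesLike;
}

// Explain why getUserMedia is unavailable, or return null if it can be called.
function getUserMediaProblem(nav: NavigatorLike, isSecureContext: boolean): string | null {
  if (!isSecureContext) {
    return "Page is not a secure context: serve it over HTTPS (localhost is exempt).";
  }
  if (!nav.mediaDevices || typeof nav.mediaDevices.getUserMedia !== "function") {
    return "navigator.mediaDevices.getUserMedia is not supported in this browser.";
  }
  return null;
}

// In a page:
// const problem = getUserMediaProblem(navigator, window.isSecureContext);
// if (problem) console.error(problem);
// else await navigator.mediaDevices.getUserMedia({ video: true, audio: true });
```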

Thank you for the response. I get the same issue even though I’m using HTTPS. Is there any solution to that problem? Please...

Best places to go ask would be discuss-webrtc, the github project page or stackoverflow. A comment in a general article about WebRTC probably isn’t where you’ll get a technical answer about a problem with a specific API call.

Thank you, I found the issue: I had to apply SSL from my web hosting. But is there any cloud service other than AWS where I can run a TURN/STUN server?

Can we create our own NAT traversal server using Ubuntu?

Sure you can. Check coturn as an open source one to use.
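If you do run coturn, a common way to keep long-lived TURN passwords out of your web pages is its shared-secret mode (`use-auth-secret` together with `static-auth-secret` in the coturn configuration), often called the TURN REST API. Your application server mints short-lived credentials: the username is an expiry timestamp followed by `:` and a user identifier, and the password is the base64-encoded HMAC-SHA1 of that username keyed with the shared secret. A minimal sketch, assuming Node.js and its built-in `crypto` module; `mintTurnCredentials`, the secret and the user ID are illustrative and not part of coturn.

```typescript
import { createHmac } from "node:crypto";

interface TurnCredentials {
  username: string;
  credential: string;
  expiresAt: number; // Unix time in seconds
}

// Mint short-lived TURN credentials for coturn's shared-secret mode.
// sharedSecret must match static-auth-secret in the coturn configuration.
function mintTurnCredentials(
  sharedSecret: string,
  userId: string,
  ttlSeconds = 3600,
  nowSeconds = Math.floor(Date.now() / 1000),
): TurnCredentials {
  const expiresAt = nowSeconds + ttlSeconds;
  // The username carries its own expiry time.
  const username = `${expiresAt}:${userId}`;
  // Password = base64(HMAC-SHA1(secret, username)).
  const credential = createHmac("sha1", sharedSecret).update(username).digest("base64");
  return { username, credential, expiresAt };
}
```

The client then passes `username` and `credential` in its `iceServers` entry; hand them out only to users your application has already authenticated.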