What exactly is simulcast, how is it used in WebRTC and why is it a critical component in any SFU media server?

WebRTC simulcast is one of those things that is commonly used by WebRTC applications that have SFU media servers. If your media server doesn’t use simulcast – make sure to ask why and to understand the answer. And if it does, then you should know what it means exactly. Which is why we’re here now.

In this article, I want to explain what WebRTC simulcast is, when and how it is used AND some new advancements coming to simulcast.

Table of contents

- A crash course on video quality and bitrate

- SFU media servers and group video sessions

- Media quality: LCD or BAB

- Client side = Simulcast; Server side = Adaptive bitrate

- Advantages and weaknesses of using simulcast in WebRTC

- Who decides on bitrates in WebRTC simulcast

- Keyframes and switching costs in simulcast

- Temporal scalability improves WebRTC simulcast

- Decisions of highest layer bitrate in WebRTC simulcast

- WebRTC and multi-codec simulcast

- A word about SVC… and where to learn more

A crash course on video quality and bitrate

Before we begin, we need to understand the concept of bitrate. In a WebRTC video session, the first thing to look at and understand is the bitrate used. Video encoding requires sending a lot of data over the network, and WebRTC tries to match the bitrate it sends to the available bandwidth of the network.

See how I switched between talking about sending data to bitrate to bandwidth? For me, sending data is what we are trying to do. Bitrate is the actual (or target) amount of data we’re aiming for, and bandwidth is what is available for us on the network (assume that bandwidth should always be the same or preferably even higher than the bitrate).

When it comes to audio, we’re mostly working with bitrates that are static and known in advance. They are also low compared to video bitrates, so we just don’t care as much. Which leaves us with video streams.

For video streams:

- The higher the bitrate, the higher the quality (most of the time)

- The higher the bitrate, the higher the CPU and memory needed to encode and decode the data

This means that what we want to do is use as little bitrate as possible to get the highest possible quality. We’re trying to reach for the stars first by deciding our desired bitrate, and then we start lowering due to the constraints of the real world. Here are a few reasons for this:

- Our CPU is over-burdened, so we need to reduce the bitrate we encode or decode

- The resolution of the video that ends up being displayed is going to be quite small, so there’s no point in investing too much in bitrate. The same logic can be applied to the camera

- We can’t push through the network the bitrate we want, so we need to reduce it to fit the bandwidth available on the network
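The "reach for the stars, then lower" logic above can be sketched as taking the minimum over all the constraints. This is only an illustration: the field names and the numbers are made up for the example, and none of this is a WebRTC API.

```typescript
// All values are in kbps. Field names are illustrative assumptions.
interface BitrateConstraints {
  desiredKbps: number;           // what we would like to send in an ideal world
  cpuLimitKbps: number;          // what the device can comfortably encode or decode
  displayLimitKbps: number;      // what the displayed resolution can benefit from
  bandwidthEstimateKbps: number; // what the network can carry right now
}

// Start from the desired bitrate and lower it to the tightest constraint.
function targetBitrateKbps(c: BitrateConstraints): number {
  return Math.min(
    c.desiredKbps,
    c.cpuLimitKbps,
    c.displayLimitKbps,
    c.bandwidthEstimateKbps,
  );
}

// A small video tile on a good network: the display size is the limiting factor.
const smallTileTargetKbps = targetBitrateKbps({
  desiredKbps: 2000,
  cpuLimitKbps: 2500,
  displayLimitKbps: 400,
  bandwidthEstimateKbps: 5000,
}); // 400
```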

👉 If you want to learn more about this topic, then read this article on WebRTC video quality

SFU media servers and group video sessions

For video group sessions in WebRTC, we use SFU media servers. Not always, but most of the time. Why? Because SFUs route media – this ends up costing us less compared to MCUs and in many ways makes things more flexible for us on the viewer’s end.

The challenge though is that SFUs harbor a wee bit more complex logic and smarts than the alternatives and they also delegate a lot of the work to the clients themselves. A good SFU is one that has tight integration and optimization methods with the clients using it. And remember here that the implementation of the browser (Chrome) is optimized for Google Meet’s needs.

Simulcast was “invented” for SFUs. Let’s take a quick example to show what we mean here.

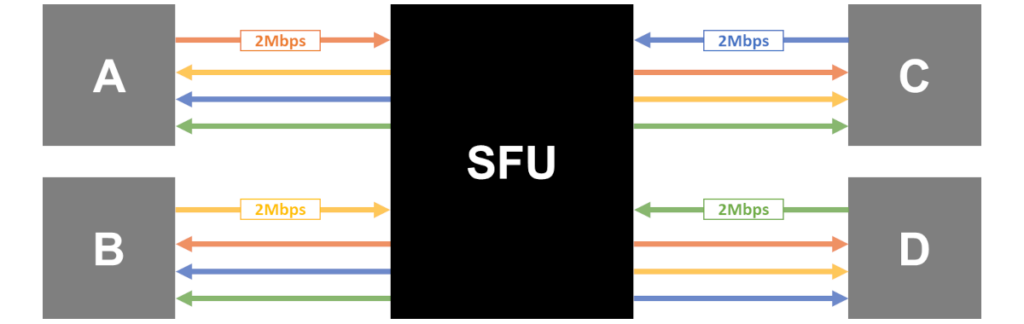

We have 4 people on a call. All connected to an SFU. Each participant is sending his video to the SFU, and the SFU routes that video to the other 3 participants in the call.

If everyone has a decent network, then we’re all happy. But what if D has poor network conditions on his downlink? Here are some assumptions for our scenario:

- All participants can send 2Mbps of video data towards the SFU

- A, B and C can receive up to 20Mbps in total on the downlink

- D can receive only 1Mbps in total on the downlink

If we want everyone to be displayed at the same quality on D’s screen, we need to give each one of them ~330kbps. That’s instead of 2Mbps. So… do we just reduce the sending bitrate of everyone down to 330kbps to accommodate for user D? Or do we drop him out of the call altogether?

Notice how we can still send 2Mbps from D to the rest of the participants? That’s just the nature and dynamics of the network and capabilities we have in this example.

Here’s where simulcast comes in…

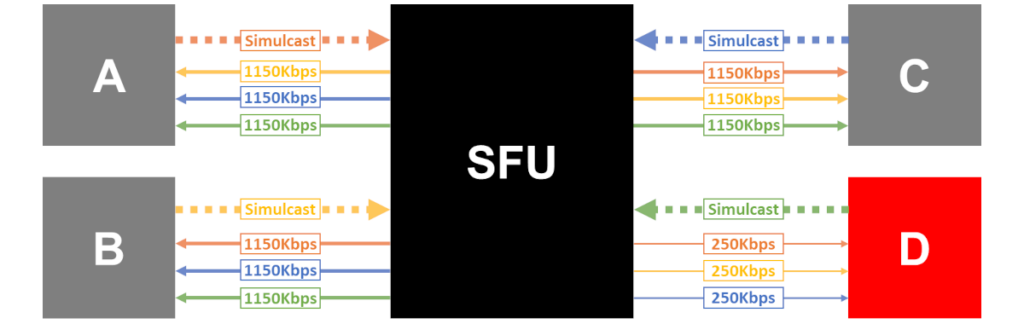

We’re going to engineer the solution so that each participant is going to create 3 separate bitstreams of their video data: 1150kbps, 600kbps and 250kbps, totalling 2Mbps. The exact numbers are less important than the concept itself, so please go with the flow here.

* Being lazy, I’ve denoted simulcast lines as dotted lines, indicating Simulcast instead of using a better notation like 1150/600/250.

Now that we do that, A, B and C get the 1150kbps video from everyone else and D receives the lower 250kbps bitstreams. D can’t handle 1150kbps or 600kbps even for only one of the users: 600 + 250 + 250 already exceeds its 1Mbps downlink, so it would have to drop one of the other video streams it is receiving altogether. Now each one is getting the most he can handle (or at the very least, closer to that than just lowering everyone down).
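Here is a minimal sketch of the decision the SFU makes in this example, assuming it sends the same layer for every incoming stream and knows each viewer's downlink. The function name is made up, and a real SFU works from a live bandwidth estimate rather than a fixed number.

```typescript
// Simulcast ladder from the example, in kbps.
const EXAMPLE_LADDER_KBPS = [1150, 600, 250];

// Pick the highest layer that fits the downlink when the same layer is used
// for every incoming stream. Returns undefined if even the lowest layer
// does not fit.
function pickUniformLayerKbps(
  downlinkKbps: number,
  incomingStreams: number,
  ladderKbps: number[],
): number | undefined {
  return [...ladderKbps]
    .sort((a, b) => b - a) // highest first
    .find((layerKbps) => layerKbps * incomingStreams <= downlinkKbps);
}

// A, B and C have 20Mbps downlinks and receive 3 streams each.
const layerForGoodDownlink = pickUniformLayerKbps(20000, 3, EXAMPLE_LADDER_KBPS); // 1150
// D has a 1Mbps downlink: 3 x 600 is too much, 3 x 250 fits.
const layerForPoorDownlink = pickUniformLayerKbps(1000, 3, EXAMPLE_LADDER_KBPS); // 250
```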

Media quality: LCD or BAB

I am going to use names that don’t necessarily exist. I am making them up here to explain the nature of simulcast a bit better.

What we’ve seen in the example above is how we move from LCD (Least Common Denominator) to BAB (Best Available Bandwidth).

We started with a naive implementation where the same video bitrate is being sent to everyone. So if there’s a hiccup somewhere along the session, everyone is going to be affected. When D had network issues, everyone had to lower their bitrate from 2Mbps down to 330kbps… that’s quite a hit to media quality across the board for them all.

That’s our LCD – we’re going to need to accommodate the bitrate to the lowest common denominator of the available bandwidth we have across our meeting participants. And that sucks. Bigtime…

But then we went for BAB – we’re going to try and work with the best available bandwidth that each user is capable of receiving.

How did we do that? By asking the participants (nicely) to generate more than a single bitstream. Each bitstream has a different bitrate here, which gives the SFU the flexibility it needs to decide which bitrate to send to which user.

We use simulcast (or SVC, though not in this article) because there’s no equality in digital communications. Participants have different devices, they connect with different networks and they even see and focus on different things during the same meeting. Simulcast enables us to give different participants a different view of the meeting with varying degrees of quality based on the capabilities of each participant at any given moment AND based on each participant’s preference/desire.

How much flexibility and how high media quality we can reach is determined by the tools and optimizations we end up employing in our implementation. No two implementations of SFU with simulcast are exactly alike.

Client side = Simulcast; Server side = Adaptive bitrate

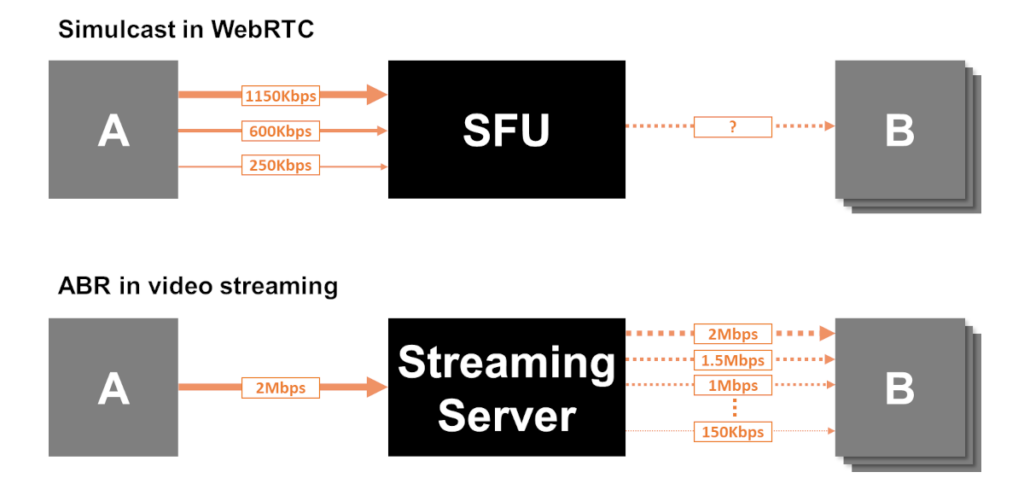

Simulcast as a concept and solution is about a client generating multiple streams so that a media server can use whichever of the streams it needs to send to other participants.

Video streaming had a similar(?) solution known as ABR – Adaptive Bitrate.

Here, the client sends a single media stream to the server and the server is the one that generates any number of streams in different bitrates as it sees fit. This makes sense when there are many viewers (thousands or more) and it can be useful to invest in server resources (these cost money to the vendor providing the service) for the given scenario.

Some use ABR as a term to simply say that the bitrate is variable in nature and adapts to the network. I use it to refer to server side adaptation, where there are multiple video streams generated (in advance or in realtime) and the server simply chooses the best to use per viewer.

For large scale live streaming broadcasts, you can start seeing solutions that incorporate ABR as a technology to transcode the stream to broadcast on the server and generate multiple bitrates with it. This can be, and sometimes is, done in parallel to using simulcast from the client as well.

The way for me to compartmentalize and remember this? Simulcast is multiple bitrates generated by the client. ABR is multiple bitrates generated by the server.
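On the client side, simulcast in the browser is configured through the `sendEncodings` list passed to `addTransceiver()`. The sketch below only builds that list from a bitrate ladder. The `SimulcastEncoding` interface is a local mirror of the `rid`, `maxBitrate` and `scaleResolutionDownBy` fields so the snippet stands on its own, and the rid names, the low-to-high ordering and the halving of resolution per layer are assumptions for illustration.

```typescript
// Local mirror of the encoding fields used here.
interface SimulcastEncoding {
  rid: string;                   // identifier of the simulcast layer
  maxBitrate: number;            // bits per second
  scaleResolutionDownBy: number; // 1 = full resolution, 2 = half width and height
}

// Build one encoding per ladder entry, lowest bitrate first.
// Assumption: each step down in the ladder halves the width and height.
function buildSendEncodings(ladderKbpsLowToHigh: number[]): SimulcastEncoding[] {
  const count = ladderKbpsLowToHigh.length;
  return ladderKbpsLowToHigh.map((kbps, index) => ({
    rid: `layer${index}`,
    maxBitrate: kbps * 1000,
    scaleResolutionDownBy: Math.pow(2, count - 1 - index),
  }));
}

const exampleSendEncodings = buildSendEncodings([250, 600, 1150]);

// In a browser this would be handed over when the sender is created:
//   pc.addTransceiver(videoTrack, {
//     direction: "sendonly",
//     sendEncodings: exampleSendEncodings,
//   });
```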

👉 You can learn more about ABR vs simulcast or just about simulcast

Advantages and weaknesses of using simulcast in WebRTC

Simulcast is great, but it isn’t a catchall solution.

What simulcast does as a concept is to offload some of the work from the media server. Offloading here means that for the client it comes at an increase in CPU use and outgoing bandwidth required.

WebRTC simulcast advantages

Here are some great things that simulcast brings with it:

- Reduces the costs of media servers drastically

  - By not needing to decode and encode media streams, media servers need way less CPU power

  - This means that scaling large deployments becomes easier and more feasible for a lot more use cases

- Different layouts for each participant

  - Since each user ends up receiving multiple video streams (in different bitrates), the application is free to display a different layout for each participant

  - Other media servers that mix media would need to invest even more CPU to support something like “encoder per participant” to achieve this

- Display participants’ video and other data in the same space

  - Again, since each participant video is separate from the others, it is simpler to place additional visual items in the same area

  - Mixing all videos into a single stream makes this harder and clunkier

WebRTC simulcast weaknesses

It isn’t all good though. There are weaknesses to the use of WebRTC simulcast:

- Higher bandwidth use on uplink of users

  - Networks are asymmetric in bandwidth sometimes (think ADSL), and uplinks are usually lower in bandwidth than downlinks

  - Simulcast has a higher uplink requirement than not using simulcast. It is about 1.3x when each layer carries a quarter of the bitrate of the one above it (1 + 1/4 + 1/16 = 1.3125), and more with flatter ladders such as the 1150/600/250 example. This means that there are scenarios when using simulcast can actually lower quality if not done properly

- Higher CPU use for user devices

  - Clients generate 2-3 media streams in different bitrates with simulcast

  - So they “invest” more in the encoding when it comes to CPU use

- Higher system complexity

  - To really make use of simulcast in WebRTC, there should be a lot more synchronization between client and server code

  - That means higher complexity of the overall system

- Dependency on client code

  - With other solutions, especially media mixing ones (see MCU), the clients might not even know they are in a group call

  - But when it comes to simulcast and group calling, clients have a huge role to play in making sure calls are of high quality (due to the complexity mentioned above)
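To put a number on the uplink cost, here is a small calculation sketch. It compares the total of all simulcast layers to sending only the top layer. The first ladder is a made-up one where each layer is a quarter of the one above it; the second is the ladder from the earlier example.

```typescript
// Ratio between the total simulcast uplink and a single stream at the
// top layer's bitrate. Expects at least one layer.
function uplinkOverhead(ladderKbps: number[]): number {
  const totalKbps = ladderKbps.reduce((sum, kbps) => sum + kbps, 0);
  const topKbps = Math.max(...ladderKbps);
  return totalKbps / topKbps;
}

// Each layer is a quarter of the one above it: 1 + 1/4 + 1/16.
const quarteredLadderOverhead = uplinkOverhead([1600, 400, 100]); // 1.3125

// The 1150/600/250 ladder from the example is flatter, so it costs more.
const exampleLadderOverhead = uplinkOverhead([1150, 600, 250]); // about 1.74
```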

Who decides on bitrates in WebRTC simulcast

There are usually two to three layers/streams when it comes to WebRTC simulcast. Each with a different bitrate, and from there, also with different resolutions, frame rates and quality. I am focusing on bitrate because for me, that’s the leading factor – everything else gets derived from it.

Which bitrates are we going to support and which ones get sent to whom are the most important questions for any SFU implementation that uses simulcast.

WebRTC by itself can’t make such decisions. It has its own default bitrates for simulcast, but this is only what they are – defaults. I wouldn’t recommend that developers use these without understanding their implications (they’re likely not useful for the use case you have at hand).

The decision on which bitrates to support in simulcast to begin with should take into consideration the possible display layouts of the videos on the viewers’ end. By knowing at what resolutions the videos get displayed we can try to better estimate the desired bitrates to use while using simulcast. Factor into it things like number of videos in the layout (so that you take total bitrates and available bandwidth into consideration), importance of videos on the display (lower priority streams can manage with lower frame rates and resolutions), etc.

Here’s the thing though:

- The client is the one generating and encoding simulcast media streams. It knows best its own CPU and performance capabilities

- The SFU media server knows best the estimated bandwidth in front of all viewers. It also knows what media streams and at what bitrates it has at its disposal when the time comes to send media to viewers

- The viewer is the one that knows best how the video gets laid out on the display, along with its own CPU and performance capabilities

- Oh, and the viewer may change the layout on the display throughout the call, changing what’s best to send to it

The end result is that the application in charge of it all needs to orchestrate the clients and the media servers in order to optimize the session for higher media quality, taking into consideration all the information. It also means that your application needs to somehow share this out-of-band information with the application session logic so decisions can be made. And this part is proprietary – it isn’t something that we have written as a standard or even a best practice.
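Since this part is proprietary, the following is just one possible shape for it, not a standard. It assumes a hypothetical `TileReport` message that the viewer sends out-of-band whenever its layout changes, and a per-stream bandwidth budget that the SFU derives from its own estimate. The layer heights and bitrates are example values.

```typescript
// What the SFU knows about each simulcast layer of a sender (example fields).
interface SimulcastLayerInfo {
  rid: string;
  heightPx: number;
  bitrateKbps: number;
}

// Hypothetical out-of-band message: the viewer reports how large it
// currently displays a given sender.
interface TileReport {
  senderId: string;
  tileHeightPx: number;
}

// Combine the viewer's layout with the SFU's budget for this stream:
// take the smallest affordable layer that still covers the tile, or the
// highest affordable layer if none covers it.
function chooseLayerForTile(
  layersLowToHigh: SimulcastLayerInfo[],
  tile: TileReport,
  budgetKbps: number,
): SimulcastLayerInfo | undefined {
  const affordable = layersLowToHigh.filter((l) => l.bitrateKbps <= budgetKbps);
  if (affordable.length === 0) return undefined;
  const coversTile = affordable.find((l) => l.heightPx >= tile.tileHeightPx);
  return coversTile ?? affordable[affordable.length - 1];
}

const demoLayers: SimulcastLayerInfo[] = [
  { rid: "low", heightPx: 180, bitrateKbps: 250 },
  { rid: "mid", heightPx: 360, bitrateKbps: 600 },
  { rid: "high", heightPx: 720, bitrateKbps: 1150 },
];

// A 300px tile with plenty of bandwidth only needs the mid layer.
const pickForSmallTile = chooseLayerForTile(demoLayers, { senderId: "A", tileHeightPx: 300 }, 2000);
```

When the viewer changes its layout, it sends a new report and the same function runs again with the new tile size.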

Keyframes and switching costs in simulcast

With all this goodness, there’s an Achilles heel. One that stems from the way Google implemented simulcast in Chrome, but also from the realities of such a solution.

Here’s the thing: Whenever a viewer switches from one simulcast layer to another, there’s a change in the video stream that gets decoded. That change can only occur with a fresh keyframe on the layer that is being switched to, so that the video decoder will be able to decode the stream properly.

When there’s a need to generate a keyframe in simulcast, Chrome will automatically generate a keyframe across all simulcast layers. This isn’t a good thing, but it is what it is.

This also means that SFU media servers need to be conscious about this and not have viewers switch between the different layers all the time, limiting switches to the minimum necessary to maintain high video quality.
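One way to keep switching under control on the SFU side is to enforce a minimum time between switches and to complete a switch only once a keyframe arrives on the target layer. The class below is a sketch of that idea. The class name, the method names and the 5 second dwell time are all assumptions, not taken from any specific media server.

```typescript
class LayerSwitcher {
  private currentRid: string;
  private pendingRid: string | undefined = undefined;
  private lastSwitchMs: number = Number.NEGATIVE_INFINITY;
  private readonly minDwellMs: number;

  constructor(initialRid: string, minDwellMs: number) {
    this.currentRid = initialRid;
    this.minDwellMs = minDwellMs;
  }

  // Ask to move the viewer to another layer. Returns true when the SFU
  // should request a keyframe from the sender for that layer.
  requestSwitch(targetRid: string, nowMs: number): boolean {
    if (targetRid === this.currentRid) {
      this.pendingRid = undefined; // nothing to do, drop any pending switch
      return false;
    }
    if (nowMs - this.lastSwitchMs < this.minDwellMs) return false; // too soon
    if (this.pendingRid === targetRid) return false; // already waiting
    this.pendingRid = targetRid;
    return true;
  }

  // Called for every keyframe seen; only a keyframe on the pending layer
  // completes the switch.
  onKeyframe(rid: string, nowMs: number): void {
    if (rid === this.pendingRid) {
      this.currentRid = rid;
      this.pendingRid = undefined;
      this.lastSwitchMs = nowMs;
    }
  }

  // The layer currently being forwarded to the viewer.
  forwardedRid(): string {
    return this.currentRid;
  }
}

const demoSwitcher = new LayerSwitcher("low", 5000);
const keyframeNeeded = demoSwitcher.requestSwitch("high", 0); // true
demoSwitcher.onKeyframe("high", 100); // the viewer now gets "high"
```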

Temporal scalability improves WebRTC simulcast

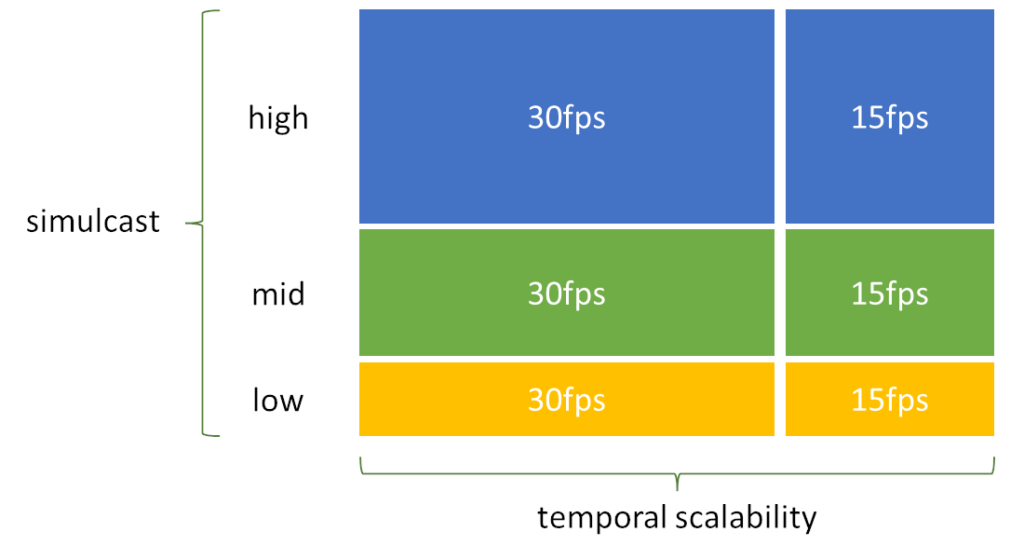

Using temporal scalability alongside simulcast in WebRTC gives us another level of flexibility.

In temporal scalability, the frames of a video stream are encoded in such a way that their dependency chain enables us to decode some of the frames and not others – something that is usually impossible in video compression. Chrome’s WebRTC implementation has temporal scalability in VP8 with 2 such “layers”, so if you’re sending 30 frames per second, the SFU media server can decide to send either 30 or 15 FPS to participants (the 15 frames per second stream is roughly 60% of the bitrate of the 30 frames per second one).

Think of it like multiplying your simulcast streams without an additional cost.

And yes, like everything else, this depends on the codec you use, the browser and the fact that some layers might not have enough frames per second to begin with (for example, the lower layer might only produce 10 or 15 frames per second and then temporal scalability might be useless).
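To make the "multiplying" concrete, the sketch below lists the options an SFU ends up with when every simulcast stream also has 2 temporal layers. The 0.6 factor is the rough 60% figure from above, the 10 FPS floor is an assumption of mine for when halving the frame rate stops being useful, and the stream values are examples.

```typescript
interface SimulcastStreamInfo {
  rid: string;
  bitrateKbps: number;
  fps: number;
}

interface OperatingPoint {
  rid: string;
  fps: number;
  bitrateKbps: number;
}

const HALF_RATE_BITRATE_SHARE = 0.6; // rough figure, not an exact ratio
const MIN_USEFUL_FPS = 10;           // assumption for this sketch

// Every stream can be forwarded as is; streams with enough frames per
// second can also be forwarded at half the frame rate.
function operatingPoints(streams: SimulcastStreamInfo[]): OperatingPoint[] {
  const points: OperatingPoint[] = [];
  for (const s of streams) {
    points.push({ rid: s.rid, fps: s.fps, bitrateKbps: s.bitrateKbps });
    const halfFps = s.fps / 2;
    if (halfFps >= MIN_USEFUL_FPS) {
      points.push({
        rid: s.rid,
        fps: halfFps,
        bitrateKbps: Math.round(s.bitrateKbps * HALF_RATE_BITRATE_SHARE),
      });
    }
  }
  return points;
}

// 3 simulcast streams turn into 5 options; the low stream at 15 FPS
// does not get a half-rate variant.
const demoPoints = operatingPoints([
  { rid: "high", bitrateKbps: 1150, fps: 30 },
  { rid: "mid", bitrateKbps: 600, fps: 30 },
  { rid: "low", bitrateKbps: 250, fps: 15 },
]);
```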

When using simulcast, the level and variety of tools you use will enable you to increase the media quality you offer your users.

Decisions of highest layer bitrate in WebRTC simulcast

Simulcast in WebRTC gives us another level of flexibility. One that Daily explains nicely in their post where they title their solution as adaptive bitrate.

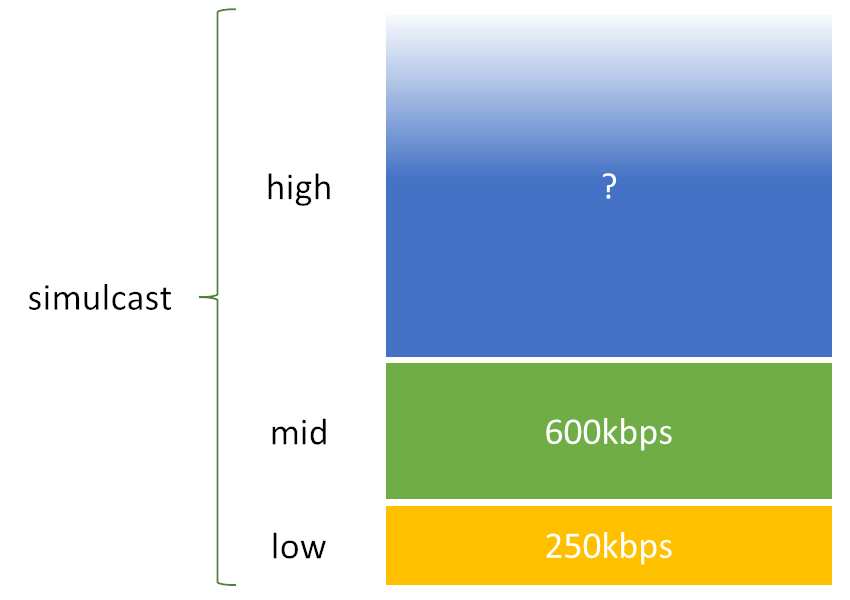

Let’s assume we’re going for the classic 3 media streams in our WebRTC simulcast solution.

Remember our example from before? Our smallest bitrate (250kbps) and medium sized bitrate (600kbps) are “static” in nature. The video encoder in our browser is going to generate these in such a way each and every time (assuming the CPU allows and bandwidth estimation is higher than the summation of these two).

That highest bitrate there isn’t really static. At least not by default. It will use as much bitrate as it needs, taking into consideration the CPU consumption and bandwidth estimation. Left to its own devices, this highest bitrate layer is going to be greedy in its resource consumption. It can also drop below the medium sized bitrate if there’s not enough CPU or bandwidth available, which defeats the point of this being the highest layer. This all leads us to what we need to do…

Like everything else that WebRTC does in the browser though, it needs to be managed and taken into account by the SFU media server. In this case, deciding what that highest layer bitrate should be at any given point in time.
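As an illustration only, here is one simple heuristic for that decision. It assumes the SFU knows the per-stream budget of every viewer that should get the highest layer. Viewers that can’t take more than the mid layer are left out (they get the mid layer instead), and the cap is the lowest budget among the rest, limited by a ceiling. The function name and the numbers are made up. In a browser, the resulting value would be applied as the top encoding’s `maxBitrate` (in bits per second) through `RTCRtpSender.setParameters()`.

```typescript
// Returns the cap for the highest layer in kbps, or undefined when no
// viewer can benefit from it.
function topLayerCapKbps(
  viewerBudgetsKbps: number[],
  midLayerKbps: number,
  ceilingKbps: number,
): number | undefined {
  // Viewers that cannot go above the mid layer are served the mid layer.
  const eligible = viewerBudgetsKbps.filter((b) => b > midLayerKbps);
  if (eligible.length === 0) return undefined;
  // Everyone eligible must be able to receive the layer.
  return Math.min(ceilingKbps, ...eligible);
}

// Three viewers: one is pushed to the mid layer, and the highest layer
// is capped at 900kbps so the other two can both receive it.
const demoTopLayerCap = topLayerCapKbps([3000, 900, 500], 600, 1500); // 900
```

Note the trade-off baked into this: the viewer with 3000kbps available gets less than it could handle so the one with 900kbps stays on the highest layer. Moving that second viewer to the mid layer instead is just as valid a strategy.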

Here are some questions to ask yourself when making that decision in your SFU:

- Do you want the highest layer to have a static bitrate? (hint: no)

- The participants who need to get this user’s video at the highest quality – what’s the highest bitrate / resolution that they can cope with based on their device and network conditions?

- Do you need to limit the bitrate of this layer to accommodate for more of these participants?

- Are you willing to move some of these users to the mid bitrate in order to increase the quality for the other participants who have better conditions?

- Are you recording this stream?

- If you are, do you need it at the highest possible quality?

- Does it mean you can “sacrifice” some of your participant’s viewing quality to get a better recording out of this session?

- Or is the recording fine with lower bitrates or quality?

- I’ll finish off with a question about all the layers – which ones are actually used?

- If some of the layers aren’t being sent to any of the users in the meeting, you can decide to suspend them in the first place, practically “changing” the simulcast configuration dynamically for that specific participant. It will come at a cost when you need to switch to a layer that is currently suspended

- And if we decided not to send a specific bitrate, does it mean the other bitrates can change as well to accommodate for the extra headroom we now have of bitrate and CPU available?
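For the last question, a sketch of how the bookkeeping could look: collect which layer each viewer (and the recording, if there is one) is currently consuming, and mark the rest as inactive. The function and type names are hypothetical. On the sending client, the result would be applied by setting the `active` flag of each encoding and calling `RTCRtpSender.setParameters()`.

```typescript
interface LayerState {
  rid: string;
  active: boolean;
}

// Mark a layer as active only if some viewer, or the recording, uses it.
function planActiveLayers(
  allRids: string[],
  ridPerViewer: string[],
  recordingRid?: string,
): LayerState[] {
  const inUse = new Set<string>(ridPerViewer);
  if (recordingRid !== undefined) inUse.add(recordingRid);
  return allRids.map((rid) => ({ rid, active: inUse.has(rid) }));
}

// Nobody watches the mid layer, so it can be suspended.
const demoLayerPlan = planActiveLayers(
  ["low", "mid", "high"],
  ["low", "low", "high"],
);
```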

These questions don’t have a single simple answer. The answer to these will vary based on the strategy you wish to employ, the use case you have, the video layouts you support, the level of your engineers, the media server you start with, …

At the end of the day, your answers are just a set of heuristics, and being able to compare one to another is going to be a challenging task. Make sure you get this right (or right enough) for your needs.

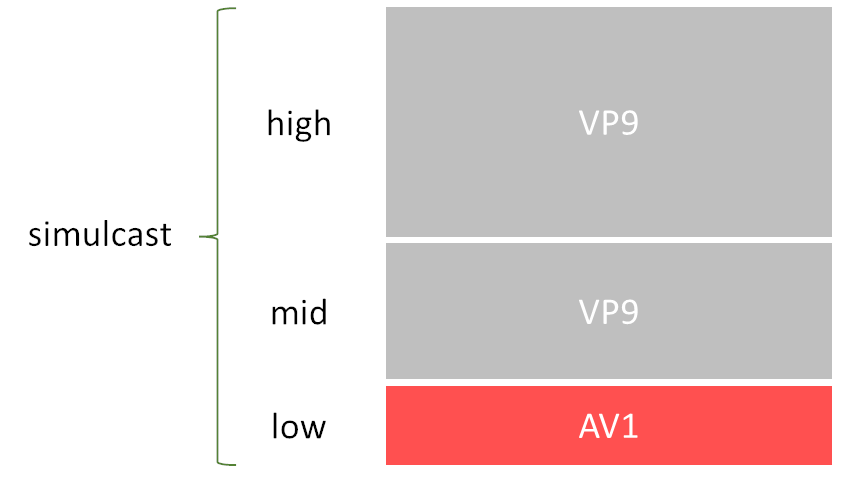

WebRTC and multi-codec simulcast

This is something that we’re just starting to see now.

Up until recently, as a developer, you chose a codec, used simulcast on it and that’s about it. The available alternatives were mostly VP8 and H.264. These days? With the introduction of the AV1 video codec a new idea started cropping up:

- AV1 is a better codec when it comes to media quality per bitrate compared to the other codecs available

- But AV1 also takes up more CPU and there’s almost no hardware acceleration available in the market

- At very low bitrates, using AV1 is possible, since it won’t take up much CPU for that

- But using it at higher bitrates isn’t possible in most scenarios

That’s the thinking behind the diagram above. Instead of using the same video codec for all layers of a simulcast session in WebRTC, why not use multiple codecs? Have AV1 used on the lowest bitrate and then another codec, say VP8 or VP9, on the higher bitrates?

This way, the machine’s CPU is capable of encoding the data, and the resulting media quality of the lowest bitrate in there is now higher than it would have been if we used a single codec for simulcast.
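As a planning sketch only, this is how the idea could be written down: AV1 for layers at or below some bitrate threshold, and a fallback codec above it. The 300kbps threshold and the choice of VP9 as the fallback are assumptions for the example, and the sketch says nothing about how a browser would be asked to encode different layers with different codecs.

```typescript
interface PlannedLayer {
  rid: string;
  bitrateKbps: number;
}

interface CodecPlanEntry extends PlannedLayer {
  mimeType: string;
}

// Use AV1 only where the bitrate is low enough for the CPU to cope.
function planCodecs(
  layers: PlannedLayer[],
  av1MaxKbps: number,
  fallbackMimeType: string,
): CodecPlanEntry[] {
  return layers.map((l) => ({
    ...l,
    mimeType: l.bitrateKbps <= av1MaxKbps ? "video/AV1" : fallbackMimeType,
  }));
}

const demoCodecPlan = planCodecs(
  [
    { rid: "low", bitrateKbps: 250 },
    { rid: "mid", bitrateKbps: 600 },
    { rid: "high", bitrateKbps: 1150 },
  ],
  300,         // assumed threshold for AV1
  "video/VP9", // assumed fallback codec
);
```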

At the time of writing, this hasn’t been implemented in a workable fashion just yet.

In a way, this is our future for the coming years, until AV1 becomes popular enough and its use is made possible by commonplace hardware acceleration or better CPUs on the devices.

A word about SVC… and where to learn more

There are alternatives to using WebRTC simulcast:

- Deciding NOT to use simulcast but still using an SFU, moving towards an LCD (least common denominator) approach to media quality

- Not using SFU or media routing, going for mesh or mixing solutions

- Replacing simulcast with SVC

SVC stands for Scalable Video Coding. At its heart, it is quite similar to simulcast, just done on the codec level. The video encoder itself generates a bitstream that can be peeled like an onion into multiple bitrates. This gives a solution that is less wasteful than simulcast in bitrate and CPU. The downside here is an increase in complexity and a lack of available hardware encoders and decoders that know how to handle SVC.

There are video meeting solutions out there that use SVC. They can usually also use WebRTC simulcast – simply because SVC gets added later as an additional tool for further optimization and flexibility.
