The Apple Vision Pro is a new VR/AR headset. Here are my thoughts on whether and how it will affect the metaverse and WebRTC.

Quite a few interesting announcements and advances in recent months got me thinking about this whole area of the metaverse, augmented reality and virtual reality, all culminating in Apple’s unveiling last week of the Apple Vision Pro. For me, the prism through which I analyze things is that of communication technologies, and predominantly WebRTC.

👉 A quick disclaimer: I have no clue about what the future holds here or how it affects WebRTC. The whole purpose of this article is for me to try and sort my own thoughts by putting them “down on paper”.

Let’s get started then 😃

The Apple Vision Pro

Apple just announced its Vision Pro VR/AR headset. If you’re reading this blog, then you know about this already, so there isn’t much to say about it.

For me? This is the first time that I had this nagging feeling for a few seconds that I just might want to go and purchase an Apple product.

Most articles I’ve read were raving about this – especially those written by people who got a few minutes to play with it at Apple’s headquarters.

AR/VR headsets thus far have taken one of two approaches:

- AR headsets were more akin to “glasses” with a heads-up display on them, which is where the augmentation took place: additional information displayed on top of reality. Think Google Glass

- VR headsets, where you wear a whole new world on your head, looking at a video screen that replaces the real world altogether

Apple took the middle ground – their headset is a VR headset since it replaces what you see with two high resolution displays – one for each eye. But it acts as an AR headset – because it uses external cameras on the headset to project the world on these displays.

The end result? Expensive, but probably with better utility than any other alternative, especially once you couple it with Apple’s software.

Video calling, FaceTime, televisions and AR

Almost at the sidelines of all the talks and discussions around Apple Vision Pro and the new Mac machines, there have been a few announcements around things that interest me the most – video calling.

FaceTime and Apple TV

One of the challenges of video calling has been to put it on the television. This used to be called a lean-back experience, in a world where video calling is predominantly a lean-forward one. I remember working on such proofs of concept and product demos with customers ~15 years ago or more.

These never caught on.

The main reason was somewhere between the cost of the hardware, maintaining privacy with a living room camera, and microphone positioning/noise.

By tethering the iPhone to the television, the cost of hardware along with maintaining privacy gets solved. The microphones are now a lot better than they used to be – mostly due to better software.

Apple, being Apple, can offer a unique experience because they own and control the hardware – both of the phone and the set-top box. Something that is hard for other vendors to pull off.

There’s a nice concept video on the Apple press release for this, which reminded me of this Facebook (now Meta) Portal presentation from Kranky Geek:

Can Android devices pull off the same thing, connected to Chromecast enabled devices maybe? Or is that too much to ask?

Do television and/or set-top box vendors put an effort into a similar solution? Should they be worried in any way?

Where could/should WebRTC play a role in such solutions, if at all?

FaceTime and Apple Vision Pro

How do you manage video calls with a clunky AR/VR headset plastered on your face?

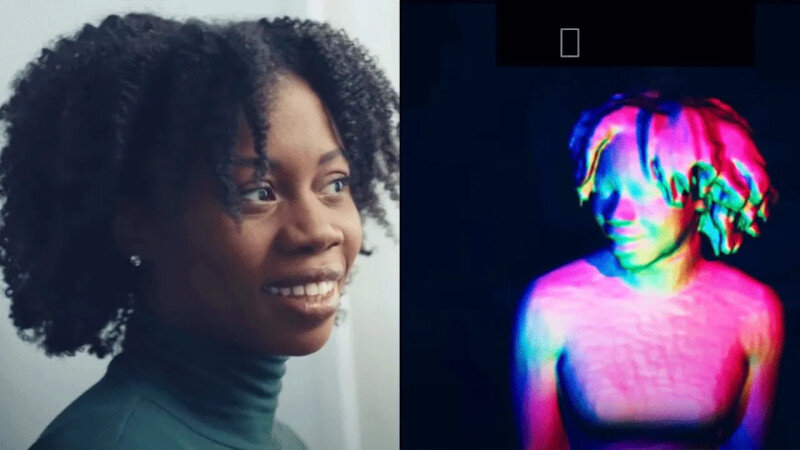

First off, there’s no external camera “watching you”, unless you add one. And then there’s the nagging thing of… well… the headset:

Apple has this “figured out” by way of generating a realistic avatar of you in a meeting. What is interesting to note here is that in the Apple Vision Pro announcement video itself, Apple made three important omissions:

- They don’t show how the other people in the meeting see the person with the Vision headset on

- There’s only a single person with a Vision headset on, and we have his worldview, so again, we can’t see what others with a Vision headset look like in such a call

- How do you maintain eye contact, or even know where the user is looking? (a problem today’s video calling solutions have as well)

What do the people at the meeting see of her? Do they see her looking at them, or the side of her head? Do they see the context of her real-life surroundings or a virtual background?

I couldn’t find anyone who played with the Apple Vision Pro headset and reported using FaceTime, so I am assuming this one is still a work in progress. It will be really interesting to see what they come up with once this is released to market, and what real-life use looks and feels like.

Lifelike video meetings: Just like being there

Then there’s telepresence. This amorphous thing which for me translates into: “expensive video conferencing meeting rooms no one can purchase unless they are too rich”.

Or if I am a wee bit less sarcastic – it is what we strive for with video conferencing: the ultimate experience of “just like being there”, done remotely, if we had the best technology money can buy today.

Google Project Starline is the current poster child of this telepresence technology.

The current iteration of telepresence strives to provide a 3D lifelike experience (with eye contact obviously). To do so while keeping hardware costs down and fitting more environments and hardware devices, it will rely on AI – like everything else these days.

The result as I understand it?

- Background removal/replacement

- Understanding depth, to be able to generate a 3D representation of a speaker on demand and fit it to what the viewer needs, as opposed to what the cameras directly capture

Now look at what FaceTime on an Apple Vision Pro really means:

Generate a hyper realistic avatar representation of the person – this sounds really similar to removing the background and using cameras to generate a 3D representation of the speaker (just with a bit more work and a bit less accuracy).

Both Vision Pro and Starline strive for lifelike experiences between remote people. Starline goes for a meeting room experience, capturing the essence of the real world. Vision Pro goes after a mix between augmented and virtual reality here – can’t really say this is augmented, but can’t say this is virtual either.

A telepresence system may end up selling a million units a year (a gross exaggeration on my part as to the size of the market, if you take the most optimistic outcome), whereas a headset will end up selling in the tens of millions or more once it is successful (and this is probably a realistic estimate).

What both of these ends of the same continuum of a video meeting experience do is they add the notion of 3D, which in video is referred to as volumetric video (we need to use big fancy words to show off our smarts).

And yes, that does lead me to the next topic I’d like to cover – volumetric video encoding.

Volumetric video coding

We have the metaverse now. Virtual reality. Augmented reality. The works.

How do we communicate on top of it? What does a video look like now?

The obvious answer today would be “it’s a 3D video”. And now we need to be able to compress it and send it over the network – just like any other 2D video.
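To get a feel for why compression matters even more in 3D, a back-of-the-envelope comparison of raw (uncompressed) bitrates helps. The TypeScript sketch below is just that, a sketch: it assumes the 3D video is represented as a point cloud, and the point count, bits per point and frame rate are illustrative assumptions, not figures from Apple or the Alliance for Open Media.

```typescript
// Raw (uncompressed) bitrate in bits per second.
function rawBitrateBps(
  samplesPerFrame: number,
  bitsPerSample: number,
  framesPerSecond: number
): number {
  return samplesPerFrame * bitsPerSample * framesPerSecond;
}

// 2D video: 1080p at 30 fps. 8-bit 4:2:0 chroma subsampling
// averages out to 12 bits per pixel.
const flatVideoBps = rawBitrateBps(1920 * 1080, 12, 30);

// 3D video as a point cloud (ASSUMED numbers, for illustration only):
// 1,000,000 points per frame, each with 3 x 10-bit coordinates
// and 3 x 8-bit color components = 54 bits per point.
const pointCloudBps = rawBitrateBps(1000000, 3 * 10 + 3 * 8, 30);

console.log(`2D 1080p30 raw:  ${(flatVideoBps / 1e6).toFixed(0)} Mbps`);
console.log(`Point cloud raw: ${(pointCloudBps / 1e6).toFixed(0)} Mbps`);
```

Under these assumptions the raw point cloud comes out at more than twice the raw bitrate of the 2D video, before any compression is applied to either.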

The Alliance for Open Media, which has been behind the publication and promotion of the AV1 video codec, just published a call for proposals related to volumetric video compression. From that call for proposals, I want to focus on the following tidbits:

- The Alliance’s Volumetric Visual Media (VVM) Working Group formed in February 2022 👉 this is rather new

- It is led by Co-Chairs Khaled Mammou, Principal Engineer at Apple, and Shan Liu, Distinguished Scientist and General Manager at Tencent 👉 Apple… me thinking Vision Pro

- The purpose is the “development of new tools for the compression of volumetric visual media” 👉 better compression tools for 3D video

This being promoted now, in the same week the Apple Vision Pro came out, might be a coincidence. Or it might not.

The founding members include all the relevant vendors interested in AR/VR that you’d assume:

- Apple – obviously

- Cisco – WebEx and telepresence

- Google – think Project Starline

- Intel & NVIDIA – selling CPUs and GPUs to all of us

- Meta – and their metaverse

- Microsoft – with Teams, HoloLens and metaverse aspirations

The rest also have vested interest in the metaverse, so this all boils down to this:

👉 AR/VR requires new video coding techniques to enable better and more efficient communications in 3D (among other things)

👉 Apple Vision Pro isn’t alone in this, but likely the one taking the first bold steps

👉 The big question for me is this – will Apple go off with its own volumetric video codecs here, touting how open they are (think FaceTime open) or will they embrace the Alliance for Open Media work that they themselves are co-chairing?

👉 And if they do go for the open standard here, will they also make it available for other developers to use? Me thinking… WebRTC

Is the metaverse web based?

Before tackling the notion of WebRTC in the metaverse, there’s one more prerequisite – that’s the web itself.

Would we be accessing the metaverse via a web browser, or a similar construct?

For an open metaverse, this would be something we’d like to have – the ability to have our own identity(ies) in the metaverse go with us wherever we go – from Facebook, to Roblox, through Fortnite or whatever other “domain” we go to.

Last week also got us this sideline announcement from Matrix: Introducing Third Room TP2: The Creator Update

Matrix, an open source project and open standard for decentralized communications, has been working on Third Room, which for me is a kind of metaverse infrastructure for the web. Like everything related to the metaverse, this is mostly a work in progress.

I’d love the metaverse itself to be web based and open, but it seems most vendors would rather have it limited to their own walled gardens (Apple and Meta certainly would love it that way. So would many others). I definitely see how open standards might end up being used in the metaverse (like the work the Alliance for Open Media is doing), but the vendors who adopt these open standards will end up deciding how open to make their implementations – and whether the web will be the place to do it all or not.

Where would one fit WebRTC in the metaverse, AR and VR?

Maybe. Maybe not.

The unbundling of WebRTC both makes it an option and takes us farther away from having WebRTC as part of the future metaverse.

Not having the web means no real reliance on WebRTC.

Having the tooling in WebRTC to assist developers in the metaverse means there’s an incentive to use and adopt it even without the web browser angle of it.

WebRTC will need at some point to deal with some new technical requirements to properly support metaverse use cases:

- Volumetric video coding

- Improved spatial audio capability

- A higher number of audio streams that can be mixed (3 is the max today)
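On that last point: when only a handful of audio streams can be mixed at once, an application has to decide which ones make the cut. One simple approach is to keep only the loudest speakers. Here is a minimal TypeScript sketch of that idea. The `AudioStreamLevel` type and the `selectLoudest` function are hypothetical names made up for illustration, not part of the WebRTC API, and the default limit of 3 simply mirrors the number mentioned above.

```typescript
// Hypothetical shape: one entry per incoming audio stream, with a
// loudness value between 0 (silence) and 1 (loudest).
interface AudioStreamLevel {
  id: string;
  level: number;
}

// Return the `maxMixed` loudest streams, loudest first.
// The input array is copied before sorting, so it is left untouched.
function selectLoudest(
  streams: AudioStreamLevel[],
  maxMixed: number = 3
): AudioStreamLevel[] {
  return [...streams]
    .sort((a, b) => b.level - a.level)
    .slice(0, maxMixed);
}

// Usage: five people in a virtual room, only three get mixed.
const room: AudioStreamLevel[] = [
  { id: "alice", level: 0.2 },
  { id: "bob", level: 0.9 },
  { id: "carol", level: 0.05 },
  { id: "dave", level: 0.6 },
  { id: "erin", level: 0.0 },
];

const mixed = selectLoudest(room);
console.log(mixed.map((s) => s.id).join(", "));
```

In a metaverse setting with dozens of people in the same virtual space, a cap like this is exactly what starts to hurt, since spatial audio works best when you can hear everyone around you.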

We’re still far away from that target, and there will be a lot of other technologies that will need to be crammed in alongside WebRTC itself to make this whole thing happen.

Apple’s new Vision Pro might accelerate that trajectory of WebRTC – or it might just do the opposite – solidify the world of the metaverse inside native apps.

—

I want to finish this off with this short piece by Jason Fried: The visions of the future

It looks at AR/VR and generative AI, and how they are exact opposites in many ways.

Recently I also covered ChatGPT and WebRTC – you might want to take a look at that while at it.