WebRTC is an important technology for cloud gaming and virtual desktop use cases. Here are the reasons why, and the challenges that come with it.

Google launched and then shut down Stadia, its cloud gaming platform. It used WebRTC (yay), but it seems it didn’t quite fit into Google’s future.

That said, it does shed light on a use case that I’ve been “neglecting” in my writing here, though it was and still is top of mind in my discussions with vendors and developers.

What I want to put in writing this time is cloud gaming as a concept and, alongside it, virtual desktop and cloud rendering use cases in general.

Let’s dig in 👇


The rise and (predictable?) fall of Google Stadia

Google Stadia started life as Project Stream inside Google.

Technically, it made perfect sense. But at least in hindsight, the business plan wasn’t really there. Google is far removed from gaming, game developers and gamers.

On the technical side, the intent was to run high-end games on cloud machines that render the game, and have the user play it “remotely”. The user receives a live video rendering of the game and sends back controller signals. This meant games could be as complex as they need to be, drawing their compute power from cloud servers, while the user’s device stays at the same spec no matter the game.

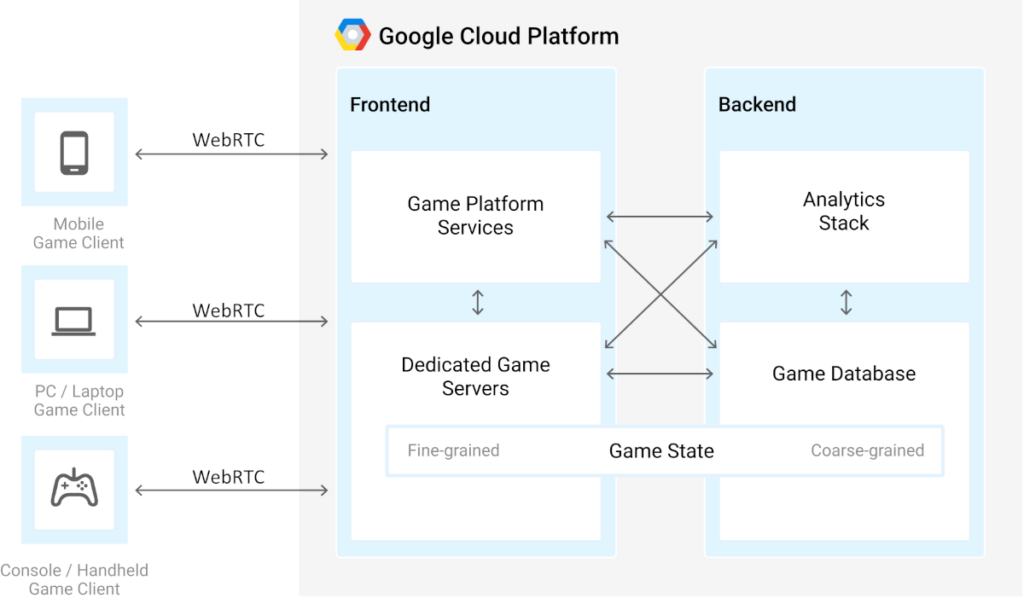

I’ve added the WebRTC text to Google’s diagram. WebRTC was called upon so that the player could use a modern browser to play the game, with no installation needed. This works nicely even on iOS devices, where Apple is adamant about taking its cut of anything that goes through the App Store; a browser sidesteps the App Store altogether.

Stadia set out to solve quite a few technical challenges:

- Running high-end console games on cloud machines

- Remotely serving these games in real time

- Playing the game inside a browser (or an equivalent)

And likely quite a few others as well (scaling the whole thing, or figuring out how to obtain and keep so many GPUs, for example).

Technically, Stadia was a success. Business-wise… well… it shut down a little over 3 years after its launch – so not so much.

What Stadia did do, though, was show that this is most definitely possible.

WebRTC, Cloud gaming and the challenges of real time

To get cloud gaming right, Google had to do a few things with WebRTC, things it hadn’t really needed when the main use of WebRTC at Google was Google Meet: lowering latency, handling a larger color space and aiming for 4K resolution at 60 fps. What it got virtually for “free” with WebRTC was the data channel: the means to send game controller signals quickly from the player to the gaming machine in the cloud.
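Here is a minimal sketch of what the browser side of that could look like. The `ordered: false` and `maxRetransmits: 0` options are real `RTCDataChannelInit` fields; they make the channel behave more like plain UDP, which suits controller input where a late update is worthless. The 14-byte packet layout and the `encodeControllerState` / `decodeControllerState` helpers are hypothetical, not Stadia’s actual protocol.

```typescript
// Options for RTCPeerConnection.createDataChannel(): a stale controller
// update is useless, so skip in-order delivery and retransmissions.
const CONTROLLER_CHANNEL_INIT = { ordered: false, maxRetransmits: 0 } as const;

// Hypothetical packet layout (not a standard), 14 bytes, big-endian:
//   bytes 0-3  : sequence number (uint32), lets the server drop stale packets
//   bytes 4-5  : pressed-button bitmask (uint16), up to 16 buttons
//   bytes 6-13 : four stick axes (int16), scaled from [-1, 1]
interface ControllerState {
  seq: number;
  buttons: boolean[];
  axes: [number, number, number, number];
}

const PACKET_SIZE = 14;
const AXIS_SCALE = 32767;

function encodeControllerState(state: ControllerState): ArrayBuffer {
  const buf = new ArrayBuffer(PACKET_SIZE);
  const view = new DataView(buf); // DataView setters default to big-endian
  view.setUint32(0, state.seq >>> 0);
  let mask = 0;
  state.buttons.slice(0, 16).forEach((pressed, i) => {
    if (pressed) mask |= 1 << i;
  });
  view.setUint16(4, mask);
  state.axes.forEach((value, i) => {
    const clamped = Math.max(-1, Math.min(1, value));
    view.setInt16(6 + i * 2, Math.round(clamped * AXIS_SCALE));
  });
  return buf;
}

function decodeControllerState(buf: ArrayBuffer): ControllerState {
  const view = new DataView(buf);
  const mask = view.getUint16(4);
  const buttons = Array.from({ length: 16 }, (_, i) => (mask & (1 << i)) !== 0);
  const axes = [0, 1, 2, 3].map(
    (i) => view.getInt16(6 + i * 2) / AXIS_SCALE,
  ) as ControllerState["axes"];
  return { seq: view.getUint32(0), buttons, axes };
}
```

In a browser, the channel would be created with `pc.createDataChannel("controller", CONTROLLER_CHANNEL_INIT)` and each sample sent with `channel.send(encodeControllerState(state))`. Since the channel is unordered, the sequence number gives the receiving side a way to ignore packets older than the last one it applied.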

Let’s see what it meant to add the other three things:

4K resolution at 60 fps

Google aimed for high-end games, which meant higher resolutions and frame rates.

WebRTC is/was great for video conferencing resolutions: VGA, 720p and even 1080p. 4K is another jump up that scale, with four times the pixels of 1080p, so it requires more CPU and more bandwidth.

Luckily, for cloud gaming, the browser only needs to decode the video, not encode it. This meant that the real issue, besides making sure the browser can actually decode 4K efficiently, was efficient bandwidth estimation.
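For the decoding half of that question, browsers expose the Media Capabilities API, whose `decodingInfo()` accepts a `"webrtc"` configuration type and reports whether decoding would be supported, smooth and power efficient. The sketch below only builds such a configuration and queries it; the helper names and the choice to require all three flags are assumptions, and the types are declared locally so the snippet does not depend on DOM typings.

```typescript
// Shape of the configuration passed to navigator.mediaCapabilities.decodingInfo(),
// declared locally (a subset of the real dictionary).
interface VideoDecodingConfig {
  type: "webrtc";
  video: {
    contentType: string; // RTP-style MIME type for the "webrtc" type, e.g. "video/VP9"
    width: number;
    height: number;
    bitrate: number; // bits per second
    framerate: number;
  };
}

interface DecodingInfo {
  supported: boolean;
  smooth: boolean;
  powerEfficient: boolean;
}

interface MediaCapabilitiesLike {
  decodingInfo(config: VideoDecodingConfig): Promise<DecodingInfo>;
}

// Hypothetical helper: a 4K (3840x2160) stream at 60 fps.
function decodingConfigFor4k60(codec: string, bitrateBps: number): VideoDecodingConfig {
  return {
    type: "webrtc",
    video: { contentType: codec, width: 3840, height: 2160, bitrate: bitrateBps, framerate: 60 },
  };
}

// Resolves to true only if the browser says it can decode the stream
// smoothly and power-efficiently (which usually implies hardware decoding).
async function canDecodeSmoothly(config: VideoDecodingConfig): Promise<boolean> {
  const nav = (globalThis as unknown as {
    navigator?: { mediaCapabilities?: MediaCapabilitiesLike };
  }).navigator;
  const caps = nav?.mediaCapabilities;
  if (!caps) return false; // API not available, e.g. outside a browser
  const info = await caps.decodingInfo(config);
  return info.supported && info.smooth && info.powerEfficient;
}
```

A service could run `canDecodeSmoothly(decodingConfigFor4k60("video/VP9", 35_000_000))` before offering a 4K stream and fall back to a lower resolution otherwise.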

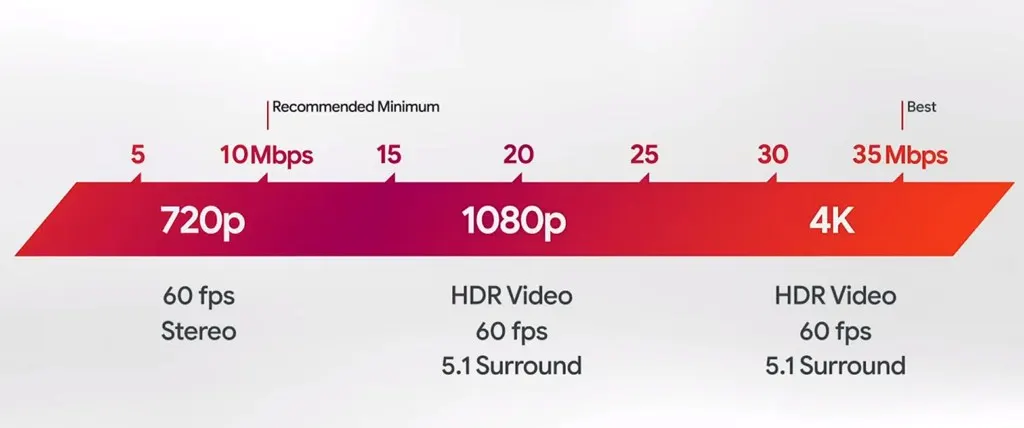

Bandwidth estimation algorithms are finely tuned and optimized for specific scenarios. 4K cloud gaming was a new scenario, where the required bitrates weren’t 2 Mbps or even 4 Mbps but rather in the range of 10-35 Mbps.
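Some back-of-the-envelope arithmetic puts those bitrates in perspective. The sketch assumes 8-bit 4:2:0 video (12 bits per pixel before compression; 10-bit HDR would need more) and computes the raw 4K60 bitrate, the compression ratio needed to fit into 10 or 35 Mbps, and the resulting average encoded frame size. The function names are illustrative.

```typescript
// Assumes 8-bit 4:2:0 sampling: 8 bits of luma plus 4 bits of chroma per pixel.
const BITS_PER_PIXEL_420_8BIT = 12;

// Uncompressed bitrate of a video stream, in bits per second.
function rawBitrateBps(width: number, height: number, fps: number, bitsPerPixel = BITS_PER_PIXEL_420_8BIT): number {
  return width * height * bitsPerPixel * fps;
}

// How many times smaller the encoded stream is than the raw video.
function compressionRatio(rawBps: number, encodedBps: number): number {
  return rawBps / encodedBps;
}

// Average size of one encoded frame, in bytes.
function bytesPerFrame(bitrateBps: number, fps: number): number {
  return bitrateBps / fps / 8;
}

const raw4k60 = rawBitrateBps(3840, 2160, 60); // 5,971,968,000 bps, roughly 6 Gbps

for (const mbps of [10, 35]) {
  const encoded = mbps * 1_000_000;
  const ratio = Math.round(compressionRatio(raw4k60, encoded));
  const kb = Math.round(bytesPerFrame(encoded, 60) / 1000);
  console.log(`${mbps} Mbps: ~${ratio}:1, ~${kb} KB per frame`);
}
// 10 Mbps: ~597:1, ~21 KB per frame
// 35 Mbps: ~171:1, ~73 KB per frame
```

Even at 35 Mbps the encoder throws away more than 99% of the raw signal, and every one of those ~73 KB frames has to cross the network within a single 16.7 ms frame interval.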

The built-in bandwidth estimator in WebRTC can’t handle this… but the one Google built for the Stadia servers can. On the technical side, this was made possible by relying on sender-side bandwidth estimation using transport-cc: the browser only reports back when each packet arrived, and the server decides how much to send.
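Sender-side estimation with transport-cc works roughly like this: every packet carries a transport-wide sequence number, the receiver reports when each one arrived, and the sender compares the arrival spacing with the send spacing. If packets arrive increasingly later than they were sent, a queue is building up somewhere on the path. The sketch below shows only that delay-gradient idea, using a least-squares slope similar in spirit to the trendline filter in Google’s congestion control. The threshold and bitrate reaction are hypothetical values; real estimators add packet grouping, adaptive thresholds, loss handling and sequence number wrap-around.

```typescript
// One entry per packet: sender's send time and the arrival time reported
// back via transport-cc feedback. Units: milliseconds.
interface PacketTiming {
  transportSeq: number;
  sendMs: number;
  arrivalMs: number;
}

// Least-squares slope of accumulated delay variation over arrival time.
// Positive slope: queuing delay is growing, the path is being overused.
function delayGradient(packets: PacketTiming[]): number {
  if (packets.length < 2) return 0;
  // Simplification: assumes no sequence number wrap-around.
  const sorted = [...packets].sort((a, b) => a.transportSeq - b.transportSeq);
  const first = sorted[0];
  const xs = sorted.map((p) => p.arrivalMs - first.arrivalMs);
  // Arrival spacing minus send spacing, relative to the first packet.
  const ys = sorted.map((p) => (p.arrivalMs - first.arrivalMs) - (p.sendMs - first.sendMs));
  const n = xs.length;
  const meanX = xs.reduce((s, x) => s + x, 0) / n;
  const meanY = ys.reduce((s, y) => s + y, 0) / n;
  let num = 0;
  let den = 0;
  for (let i = 0; i < n; i++) {
    num += (xs[i] - meanX) * (ys[i] - meanY);
    den += (xs[i] - meanX) ** 2;
  }
  return den === 0 ? 0 : num / den;
}

type Signal = "overuse" | "underuse" | "normal";

// Hypothetical fixed threshold; real estimators adapt it over time.
function classify(slope: number, threshold = 0.05): Signal {
  if (slope > threshold) return "overuse";
  if (slope < -threshold) return "underuse";
  return "normal";
}

// Hypothetical reaction: back off on overuse, probe upward when normal,
// hold while queues drain.
function nextBitrate(currentBps: number, signal: Signal): number {
  if (signal === "overuse") return currentBps * 0.85;
  if (signal === "normal") return currentBps * 1.05;
  return currentBps;
}
```

Because all of this runs on the sender, the server side of a cloud gaming service can tune it for 10-35 Mbps streams without any change to the browser, which only has to send the feedback.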

Lower latency: playout delay

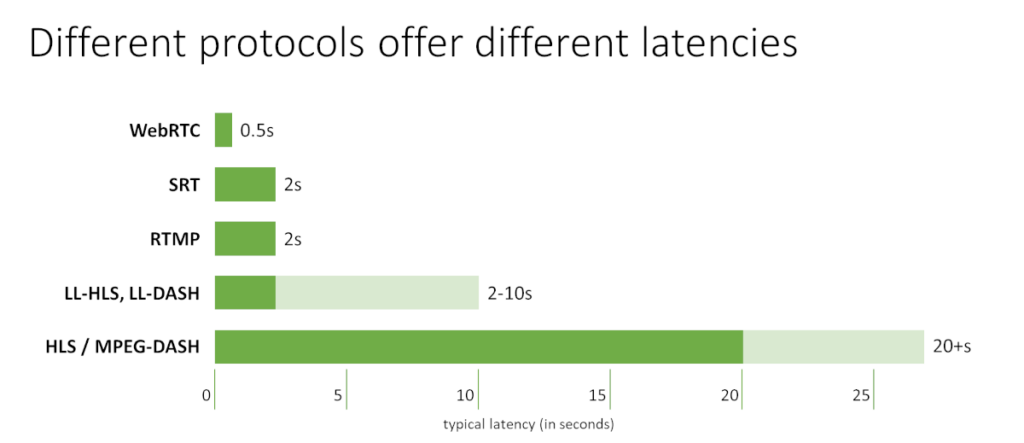

Remember this diagram?

It comes from my article titled “With media delivery, you can optimize for quality or latency. Not both.”

WebRTC is designed and built for low latency, but within the sub-second range, how would you sort the latency requirements of these 3 activities?

- Nailing a SpaceX rocket landing

- Playing a first-person shooter game (as old as I am, that means Doom or Quake for me)

- Having an online meeting with a potential customer

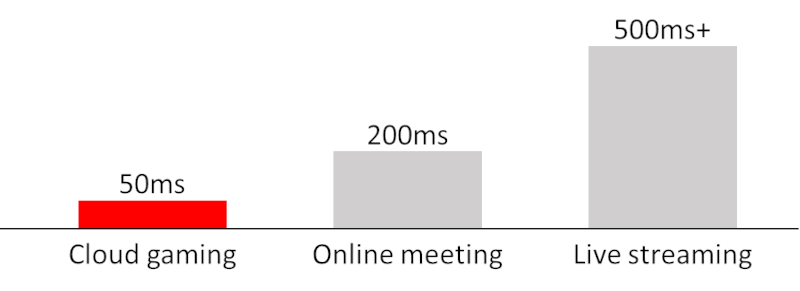

WebRTC’s main focus over the years has been online meetings. For those, a delay of 100 or 200 milliseconds is just fine.

With an online game? 100 milliseconds is the difference between winning and losing.
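One way to feel the difference is to count frames. At 60 fps a new frame is shown every ~16.7 ms, so a 100 ms delay means the player is reacting to a picture that is about 6 frames old. The tiny helpers below just do that conversion; their names are illustrative.

```typescript
// Frame interval at a given frame rate, in milliseconds (~16.7 ms at 60 fps).
function frameIntervalMs(fps: number): number {
  return 1000 / fps;
}

// How many frames "fit" into a given delay at a given frame rate.
function framesOfDelay(delayMs: number, fps = 60): number {
  return (delayMs * fps) / 1000;
}

for (const delayMs of [100, 200]) {
  console.log(`${delayMs} ms at 60 fps = ${framesOfDelay(delayMs)} frames`);
}
// 100 ms at 60 fps = 6 frames
// 200 ms at 60 fps = 12 frames
```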

So Google tried to reduce latency even further with WebRTC by adding the concept of Playout Delay. The intent is to let WebRTC know that the application prefers playing out media as early as possible, sacrificing even more quality, over waiting a bit in the hope of getting better quality.
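Playout delay is signaled as an RTP header extension, one of WebRTC’s experimental extensions (URI `http://www.webrtc.org/experiments/rtp-hdrext/playout-delay`). As I understand its spec, the payload is 3 bytes holding a 12-bit minimum and a 12-bit maximum delay, both in 10 ms units, and setting both to 0 asks the receiver to render frames as soon as possible. The sketch below shows that bit packing under those assumptions; it is not code from libwebrtc. On the browser side, a related receiver knob exists as `RTCRtpReceiver.jitterBufferTarget` in the WebRTC Extensions spec.

```typescript
// Playout delay extension payload, assuming the layout described above:
// | MIN (12 bits) | MAX (12 bits) |, each value counted in 10 ms units.
const PLAYOUT_DELAY_UNIT_MS = 10;
const MAX_FIELD_VALUE = 0xfff; // 12 bits, i.e. up to 40.95 seconds

function encodePlayoutDelay(minMs: number, maxMs: number): Uint8Array {
  const min = Math.round(minMs / PLAYOUT_DELAY_UNIT_MS);
  const max = Math.round(maxMs / PLAYOUT_DELAY_UNIT_MS);
  if (min < 0 || max < min || max > MAX_FIELD_VALUE) {
    throw new RangeError("playout delay out of range");
  }
  return new Uint8Array([
    min >> 4, // top 8 bits of MIN
    ((min & 0x0f) << 4) | (max >> 8), // low 4 bits of MIN, top 4 bits of MAX
    max & 0xff, // low 8 bits of MAX
  ]);
}

function decodePlayoutDelay(bytes: Uint8Array): { minMs: number; maxMs: number } {
  const min = (bytes[0] << 4) | (bytes[1] >> 4);
  const max = ((bytes[1] & 0x0f) << 8) | bytes[2];
  return { minMs: min * PLAYOUT_DELAY_UNIT_MS, maxMs: max * PLAYOUT_DELAY_UNIT_MS };
}
```

`encodePlayoutDelay(0, 0)` yields three zero bytes, the "render immediately" case that matters for games, while something like `encodePlayoutDelay(100, 400)` would let the receiver keep a small jitter buffer.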

Larger color space

Video conferencing and talking heads don’t need much. If you recall, with video compression what we’re after is to lose as much as we can of the original video signal and then compress. The idea is that we can do without whatever the eye won’t notice.

Apparently, with talking heads we can lose more of the “color” and still be happy, whereas doing the same to an online game doesn’t go as well.

To make a point, if you’ve watched Game of Thrones at home, you may remember the botch in the last season, where some of the episodes ended up too dark for television. That was due to compression done by the service providers…

While different from the color space issue here, it goes to show that how you treat color in video encoding matters, and that the right treatment differs from one scenario to another.

Games needed a different treatment of color space: specifically, moving from SDR to HDR, and adding an RTP header extension in the process to convey that additional color information.
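To make “moving from SDR to HDR” a bit more concrete: color information is usually described with code points from ITU-T H.273, the same values video codecs carry in their bitstream metadata. The sketch below models only those code points (BT.709 for typical SDR; BT.2020 primaries with the PQ or HLG transfer function for HDR). It does not reproduce the byte layout of the RTP header extension WebRTC added, and the `ColorSpace` type and helpers are hypothetical.

```typescript
// Color description using ITU-T H.273 code points.
interface ColorSpace {
  primaries: number; // 1 = BT.709, 9 = BT.2020
  transfer: number; // 1 = BT.709, 16 = SMPTE ST 2084 (PQ), 18 = ARIB STD-B67 (HLG)
  matrix: number; // 1 = BT.709, 9 = BT.2020 non-constant luminance
  fullRange: boolean;
}

// Typical SDR video, as used for talking heads.
const SDR_BT709: ColorSpace = { primaries: 1, transfer: 1, matrix: 1, fullRange: false };

// HDR10-style video: wider BT.2020 gamut with the PQ transfer function.
const HDR_BT2020_PQ: ColorSpace = { primaries: 9, transfer: 16, matrix: 9, fullRange: false };

// Transfer functions that indicate HDR content.
const HDR_TRANSFERS = new Set([16 /* PQ */, 18 /* HLG */]);

function isHdr(cs: ColorSpace): boolean {
  return HDR_TRANSFERS.has(cs.transfer);
}

function describe(cs: ColorSpace): string {
  const gamut =
    cs.primaries === 9 ? "BT.2020" : cs.primaries === 1 ? "BT.709" : `primaries ${cs.primaries}`;
  return `${gamut}, ${isHdr(cs) ? "HDR" : "SDR"}, ${cs.fullRange ? "full" : "limited"} range`;
}

console.log(describe(SDR_BT709)); // BT.709, SDR, limited range
console.log(describe(HDR_BT2020_PQ)); // BT.2020, HDR, limited range
```

The point of signaling this per stream is that the receiver cannot guess it: the same decoded pixel values look very different when interpreted with a PQ curve instead of a BT.709 one.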

–

Oh, and if you want to learn more about these changes (especially resolution and color space), then make sure to watch the Kranky Geek session by YouTube about the changes they had to make to support Stadia.

What’s in cloud gaming anyway?

Here’s the thing. Google Stadia is one end of the spectrum in gaming and in cloud gaming.

Throughout the years, I’ve seen quite a few other reasons and market targets for cloud gaming.

Types of cloud games

Here are the ones that come off the top of my head:

- High-end gaming. That’s the Google Stadia use case. Play a high-end game anywhere you want on any kind of device. This reduces the need to upgrade your gaming hardware all the time

  - You’ll find NVIDIA, Amazon Luna and Microsoft xCloud focused in this domain

  - How popular or profitable this is remains questionable

- Console gaming. PlayStation, Xbox, Switch. Whatever. Picking a game and playing it without waiting to download and install is great. It also makes it possible to shrink or remove the hard drive in these devices (or shrink the devices themselves)

- Mobile games. You can now sample mobile apps and games by running them in the cloud before downloading them. Other ideas here? You could play other users’ games 🤔 using their account and the levels they reached instead of slaving your way there

- Retro/emulated games. There’s a large and growing body of games that can’t be played on today’s machines because the hardware they need is too old. These can be emulated, and now also played remotely as cloud games. How about playing a PlayStation 2 game? Or an old classic SEGA arcade game? I’m thinking Golden Axe

Improved gameplay

Why not even play these games with others remotely?

My son recently sat down with 4 friends, all playing a TMNT game together on the Xbox. It was great having them all over, but you could do it remotely as well. If the game doesn’t support remote players, pushing it to the cloud gives you that feature, simply because all players immediately become remote players.

At this stage, you can even add a voice conference or a video call between the players, giving them the level of collaboration they get from playing the likes of Fortnite. Granted, this requires more than just rendering the game in the cloud, but it is possible and I do see it happening with some of the vendors in this space.

Beyond cloud gaming – virtual desktop, remote desktop and cloud rendering

Lower latencies. Bigger color space. Higher resolutions. Rendering in the cloud and consuming remotely.

None of these are specific to cloud gaming. They can easily be extended to virtual desktop and remote desktop scenarios.

You have a machine in the cloud – big or small or even a cluster. That “machine” handles computations and ends up rendering the result to a virtual display. You then grab that display and send it to a remote user.
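Once that virtual display is captured, the sender has to decide what to sacrifice under congestion: a game wants smooth motion, while a desktop full of text wants sharp pixels. If the cloud machine happens to send with a browser-style WebRTC stack (for instance a headless browser capturing the virtual display, which is an assumption, not a requirement), two real knobs express that preference: `MediaStreamTrack.contentHint` (`"motion"`, `"detail"` or `"text"`) and the sender’s `degradationPreference` (`"maintain-framerate"`, `"maintain-resolution"` or `"balanced"`). The use-case labels and the mapping below are my own hypothetical judgment calls, not a recommendation from any spec.

```typescript
// Hypothetical use-case labels for a cloud rendering service.
type RenderUseCase = "game" | "desktop" | "3d-viewer";

interface SenderTuning {
  // Value for MediaStreamTrack.contentHint on the outgoing video track.
  contentHint: "motion" | "detail" | "text";
  // Value for degradationPreference in the RTCRtpSender's parameters.
  degradationPreference: "maintain-framerate" | "maintain-resolution" | "balanced";
}

function tuningFor(useCase: RenderUseCase): SenderTuning {
  switch (useCase) {
    case "game":
      // Fast motion: keep the frame rate, let resolution drop under congestion.
      return { contentHint: "motion", degradationPreference: "maintain-framerate" };
    case "desktop":
      // Text and UI: keep pixels sharp, let the frame rate drop instead.
      return { contentHint: "text", degradationPreference: "maintain-resolution" };
    case "3d-viewer":
      // Detailed scene with moderate motion: let the encoder balance both.
      return { contentHint: "detail", degradationPreference: "balanced" };
  }
}
```

In a browser, `contentHint` would be assigned to the outgoing video track, and `degradationPreference` set by reading `sender.getParameters()`, changing the field and passing the result to `sender.setParameters()`.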

One use case is simply remote desktop à la VNC. There we’re connecting from one machine to another, usually in a private and secure peer-to-peer fashion, which is different from what I am aiming at here.

Another, less talked about, is running things like Photoshop operations in the cloud. For the poor sad people like me who don’t have the latest Mac Pro with the shiny M2 Ultra chip, I might just want to “rent” the compute power online for my image or video editing jobs.

I might want to open a rendered 3D view of a sports car I’d like to buy, directly from the browser, having the ability to move my view around the car.

Or it might just be a simple VDI scenario, where the company (usually a large financial institution, but not only) would like employees to work on Chromebooks with nothing installed or stored on them, and everything consumed by accessing the actual machines and data in the company’s own data center or secure cloud environment.

A good friend of mine asked me what PC to buy for himself. He needed it for work. He is a lawyer. My answer: the lowest-end machine you can find would do the job. That saved him quite a lot of money, I am guessing, and he wouldn’t even notice the difference for what he needs it for.

But what if he needs a bit more juice and power every once in a while? Can renting that in the cloud make a difference?

What about the need to use specialized software that is hard to install and configure? Or that requires a lot of collaboration on large amounts of data that need to be shared across the collaborators?

Taking the notion and capabilities of cloud gaming and applying them to non-gaming use cases can help us with multiple other requirements:

- CPU and memory requirements that can’t be met with a local machine easily

- The need to maintain privacy and corporate data in work from home environments

- Zero install environment, lowering maintenance costs

Do these have to happen with WebRTC? No

Can they happen with WebRTC? Yes

Would changing from proprietary VDI environments to open standard WebRTC in browsers improve things? Probably

Why use WebRTC in cloud gaming

Why even use WebRTC for cloud gaming or more general cloud rendering then?

With cloud gaming, we’re fine doing it from inside a dedicated app. So WebRTC isn’t really necessary. Or is it?

In one of our recent WebRTC Insights issues we’ve highlighted that Amazon Luna is dropping the dedicated apps in favor of the web (=WebRTC). From that article:

“We saw customers were spending significantly more time playing games on Luna using their web browsers than on native PC and Mac apps. When we see customers love something, we double down. We optimized the web browser experience with the full features and capabilities offered in Luna’s native desktop apps so customers now have the same exact Luna experience when using Luna on their web browsers.”

Browsers are still a popular enough alternative for many users. Are these your users too?

If you need or want web browser access for a cloud gaming / cloud rendering application, then WebRTC is the way to go. This is a slightly different opinion from the one I expressed about the future of live streaming, where I stated the opposite:

“The reason WebRTC is used at the moment is because it was the only game in town. Soon that will change with the adoption of solutions based on WebTransport+WebCodecs+WebAssembly where an alternative to WebRTC for live streaming in browsers will introduce itself.”

Why the difference? It all comes down to the latency we are willing to accommodate.

Your mileage may vary when it comes to the specific latency you’re aiming for, but in general, live streaming can tolerate slightly higher latency than online meetings. So something other than WebRTC can cater to it better, since we can fine-tune and tweak it more.

Cloud gaming needs even lower latency than online meetings, and WebRTC can accommodate that. Using something else that is still unproven (and suffers from some performance and latency issues at the moment) is the wrong approach. At least today.

Enter our WebRTC Protocols courses

Got a use case where you need to render remote machines using WebRTC? These require sitting at the cutting edge of WebRTC, or more accurately, at a slightly skewed angle versus what the general population does with WebRTC (including Google).

Taking on such a use case means you’ll need to rely more heavily on your own expertise and understanding of WebRTC.

Over a year ago, Philipp Hancke and I launched the Low-level WebRTC Protocols course. We’re now recording our next course: Higher-level WebRTC Protocols.

—

Oh, and I’d like to thank Midjourney for releasing version 5.2 – awesome images