Explore the concept of WebRTC latency and its impact on real-time communication. Discover techniques to minimize latency and optimize your application.

WebRTC is about real time. Real time means low latency, low delay, low round trip – whatever metric you want to relate to (they are all roughly the same).

Time to look closer at latency and how you can reduce it in your WebRTC application.

Latency, delay and round trip time

Let’s do this one short and sweet:

Latency sometimes gets confused with round trip time. Let’s put things in order quickly here so we can move on:

- Latency (or delay, and sometimes lag) is the time it takes a sent packet to be received on the other side. We also refer to it as “half a round trip”

- Round trip time is the time from the moment we send out a packet until we receive a response to it – a full round trip

- Jitter is the variance in delay between received packets. It isn’t latency, but usually if latency is high, jitter is more likely to be high as well
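To make the three terms concrete, here is a minimal sketch. It assumes a symmetric network path (so one-way latency is simply half the round trip time, which real networks don't guarantee) and uses the running interarrival jitter estimator from RFC 3550. The function names are mine, for illustration only.

```typescript
// One-way latency estimated as "half a round trip".
// Assumption: the path is symmetric in both directions.
function estimateOneWayLatencyMs(rttMs: number): number {
  return rttMs / 2;
}

// Running interarrival jitter in the style of RFC 3550:
//   J = J + (|D| - J) / 16
// where D is the change in transit time between two consecutive packets.
function interarrivalJitterMs(transitTimesMs: number[]): number {
  let jitter = 0;
  for (let i = 1; i < transitTimesMs.length; i++) {
    const d = Math.abs(transitTimesMs[i] - transitTimesMs[i - 1]);
    jitter += (d - jitter) / 16;
  }
  return jitter;
}
```

Packets that all take the same time to arrive produce zero jitter, no matter how high the latency itself is. That is the difference between the two metrics.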

Need more?

👉 I’ve written a longer form post on Cyara’s blog – What is Round-trip Time and How Does it Relate to Network Latency

👉 Round trip time (RTT) is one of the 7 top WebRTC video quality metrics

Latency isn’t good for your WebRTC health

When it comes to WebRTC and communications in general, latency isn’t a good thing. The higher it is, the worse off you are in terms of media quality and user experience.

That’s because interactivity requires low latency – it needs the ability to respond quickly to what is being communicated.

Here are a few “truths” to be aware of:

- The lower the latency, the higher the quality

- For interactivity, we need low latency

  - The faster data flows, the faster we can respond to it

  - The more connected we feel due to this

- If the round trip time is low enough, we can add retransmissions

  - Assume latency is 20 milliseconds

  - A retransmission can take 30-40 milliseconds from the moment we notice a missing packet, through requesting a retransmission, to getting one back

  - 40 milliseconds is still great, so we can now better handle packet losses

  - Just because latency is really low in our session

- Indirectly, there are going to be fewer packet losses

  - Low latency usually means shorter routes on the network

  - Shorter routes mean fewer switches, routers and other devices

  - There’s less “time” and “place” for packets to get lost

  - So the expectation is usually that with lower latency there will be, on average, less packet loss

- You are going to discard fewer packets

  - WebRTC will discard packets if their time has passed (among other reasons)

  - The less time packets spend over the network, the more time we have to decide if and what to do with them

  - The more time packets spend on the network, the higher the likelihood that we won’t be able to use them (due to timing)

- Lower latency usually means lower jitter

  - Jitter is the variance in delay between packets received

  - If the latency is low in general, there’s less “room” for packets to jitter around and dawdle

  - Like the packet loss and packet discarding, this isn’t about hard truths – just things that tend to happen when latencies are lower
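The retransmission arithmetic above can be sketched as a tiny budget check. This is an illustration under my own assumptions: the helper names are hypothetical, the cost of a retransmission is modeled as the time to notice the gap plus one full round trip, and the delay budget is whatever your use case can tolerate.

```typescript
// Hypothetical helper: time needed to recover a lost packet by retransmission.
// detectionMs is how long the receiver takes to notice the missing packet.
function retransmissionDelayMs(rttMs: number, detectionMs: number): number {
  // The request travels to the sender and the packet travels back: one RTT.
  return detectionMs + rttMs;
}

// Does a retransmission still arrive in time to be useful?
// delayBudgetMs is an assumed, use-case specific limit.
function retransmissionFits(
  rttMs: number,
  detectionMs: number,
  delayBudgetMs: number
): boolean {
  return retransmissionDelayMs(rttMs, detectionMs) <= delayBudgetMs;
}
```

With 20 milliseconds of latency (a 40 millisecond round trip), a retransmission fits comfortably in a 100 millisecond budget. With a 300 millisecond round trip it doesn't, and the packet is as good as lost.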

👉 One of the main things you should monitor and strive to lower is latency. This is usually done by looking at the round trip time metrics (which is what we can measure better than latency).

What are we measuring when it comes to latency?

When you say “latency” – what do you mean exactly?

- We might mean the latency of a single leg in a session – from one device to another

- It might be the latency “end to end” – from one participant to another across all network devices along the route

- The latency-related metrics in WebRTC stats? They deal with network latency between two WebRTC entities that communicate directly with each other

- Sometimes we’re interested in what is known as “glass-to-glass” latency – from the camera’s lens (or microphone) to the other side’s display (or speaker)

Latency starts with defining what part of the session we are measuring

And within that definition, there might be multiple pieces of processing in the pipeline that we’d want to measure individually. Usually we’d want to do that to decide where to focus our energies in optimizing and reducing the latency.

Here are two recent posts that talk about latency in the WebRTC-LLM space:

- Daily, looking at latency reduction in the audio-LLM piece

- Agora, where the focus is on the device and network

👉 You can decide to improve latency of the same use case, and take very different routes in how you end up doing that.

Different use cases deal with latency differently

Latency is tricky. There are certain physical limits we can’t overcome. The most notable one, often used as an example, is the speed of light: passing a message from one side of the globe to the other takes a considerable number of milliseconds no matter what you do, even without accounting for the need to process the data along its route.
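To put a rough number on that physical limit, here is a back-of-the-envelope sketch. It assumes light in optical fiber travels at roughly two thirds of its speed in vacuum (about 200 kilometers per millisecond) and that the route is a straight line, which real routes never are. The constant and function names are mine.

```typescript
// Assumption: light in fiber covers roughly 200 km per millisecond.
const FIBER_SPEED_KM_PER_MS = 200;

// Lower bound on one-way delay for a given distance, ignoring all processing.
function minOneWayDelayMs(distanceKm: number): number {
  return distanceKm / FIBER_SPEED_KM_PER_MS;
}

// Lower bound on the round trip over the same distance.
function minRoundTripMs(distanceKm: number): number {
  return 2 * minOneWayDelayMs(distanceKm);
}
```

Half way around the globe is about 20,000 kilometers. Under these assumptions that is at least 100 milliseconds one way and 200 milliseconds for the round trip, before a single router, encoder or jitter buffer has touched the data.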

Each use case or scenario has different ways to deal with these latencies. From defining what a low enough value is, through where in the processing pipeline to focus on optimizations, to the techniques to use to optimize latency.

Here are a few industries/markets where we can see how these requirements vary.

👉 Interested in the Programmable Video market, where vendors take care of your latency and use case? Check out my latest report: Video APIs: market status

Conferencing

Video conferencing has a set of unique challenges:

- You can’t change the location of the edges. Telling people to relocate to conduct their meetings just isn’t possible

- Everything is real time. These are conversations between people. They need to be interactive

👉 Latency in conferencing? Below 200 milliseconds you’re doing great. 400 or 500 milliseconds is too high, but can be lived with if one must (though grudgingly).

Streaming

Streaming is more lenient than video conferencing. We’re used to seconds of latency for streaming. You click on Netflix to start a movie and it can take a goodly couple of seconds at times. Nagging? Yes. Something to cancel the service for? No.

That said, we are moving towards live streaming, where we need more interactivity. From auctions, to sports and betting, to webinars and other use cases. Here are a few of the challenges seen here:

- You can’t change the location of the edges. The broadcast is live, so the source is fixed. The viewers won’t move elsewhere either

- Latency here depends on the level of interactivity you’re after. Most scenarios will be fine with 1-2 seconds (or more) of latency. Some, mostly revolving around gambling, require sub-second latency

👉 For live streaming? 500 milliseconds is great. 1-2 seconds is good, depending on the scenario.

Gaming

Gaming has a multitude of scenarios where WebRTC is used. What I want to focus on here is the one of having the game rendered by a cloud server and played “remotely” on a device.

The games here vary as well (which is critical). These can be casual games, board games (turn by turn), retro games, high end games, first person shooters, …

Often, these games have a high level of interaction that needs to be real time. Online gamers would pick an ISP, equipment and configuration that lowers their latency for games – just in order to get a bit more reaction time to improve their performance and score in the game. And this has nothing to do with rendering the whole game in the cloud – just about passing game state (which is smaller). Here’s an example of an article by CenturyLink for gamers on latency on their network. Lots of similar articles out there.

Cloud gaming, where the game gets rendered on the server in full and the video frames are sent via WebRTC over the network? That requires low latency to be able to play properly.

👉 In cloud gaming, 50-60 milliseconds of latency will be tolerable. Above that? Game over. Oh, and if you play against someone with 30 milliseconds? You’re still dead at 50 milliseconds. The lower the better, at any number of milliseconds.

Conversational AI

Conversational AI is a hot topic these days. Voice bots, LLM, Generative AI. Lots of exciting new technologies. I’ve covered LLM and WebRTC recently, so I’ll skip the topic here.

Suffice to say – conversational AI requires the same latencies as conferencing, but brings with it a slew of new challenges by the added processing time needed in the media pipeline of the voice bot itself – the machine that needs to listen and then generate responses.

I know it isn’t a fair comparison to latencies in conferencing (because there we don’t add in the human participant’s time, or even the time it takes him to understand what is being sent his way), but at the moment, the response time of most voice bots is too slow for high levels of interaction.

👉 In conversational AI, the industry is striving to reach sub-500 millisecond latencies. Being able to get to 200-300 milliseconds will be a dream come true.

Reducing latency in WebRTC

Different use cases have different latency requirements. They also have different architecture and infrastructure. This leads to the simple truth that there’s no single way to reduce latency in WebRTC. The use case and the way you’ve architected your application decide what needs to be done to reduce latency in it.

If you split the media processing pipeline in WebRTC to its coarse components, it makes it a bit easier to understand where latency occurs and from there, to decide where to focus your attention to optimize it.

Browsers and latency reduction

When handling WebRTC in browsers there’s not much you can do on the browser side to reduce latency. That’s because the browser controls and owns the whole media processing stack for you.

There are still areas where you can and should take a look at what you’re doing. Here are a few questions you should ask yourself:

- Should you reduce the playout delay to zero? This is supported in Chrome and usually used in the cloud gaming use cases – less in conversational use cases

- Are you using insertable streams? If you are, then the code you use there to reshape the frames received and/or sent might be slow. Check it out
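For the playout delay question, here is a minimal sketch of how one might ask the browser for the smallest possible delay. As far as I know, `jitterBufferTarget` (in milliseconds) is the standardized receiver property and `playoutDelayHint` (in seconds) is the older Chrome-specific one. Both are hints the browser may not fully honor. The interface and function names below are mine, defined locally so the sketch doesn't depend on browser typings.

```typescript
// Minimal shape of the receiver properties used here (an assumption that
// mirrors RTCRtpReceiver, declared locally so this compiles anywhere).
interface PlayoutTunableReceiver {
  jitterBufferTarget?: number | null; // milliseconds
  playoutDelayHint?: number | null;   // seconds, older Chrome-specific property
}

// Ask each receiver for the lowest playout delay it can offer.
// Returns how many receivers exposed one of the two properties.
function requestMinimalPlayoutDelay(receivers: PlayoutTunableReceiver[]): number {
  let updated = 0;
  for (const receiver of receivers) {
    if ("jitterBufferTarget" in receiver) {
      receiver.jitterBufferTarget = 0;
      updated++;
    } else if ("playoutDelayHint" in receiver) {
      receiver.playoutDelayHint = 0;
      updated++;
    }
  }
  return updated;
}
```

In a browser you would pass in the result of `pc.getReceivers()`, where `pc` is your `RTCPeerConnection`. Expect the tradeoff to show up elsewhere: a smaller buffer means less room to absorb jitter, which is why this fits cloud gaming better than conversations.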

The most important thing in the browser is going to be the collection of latency-related measurements, ones you can use later on for monitoring and optimization. These would be the RTT, jitter and packet loss that we mentioned earlier.
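Here is a minimal sketch of pulling those three measurements out of a WebRTC stats report. It assumes the standard stats fields `currentRoundTripTime` on the nominated `candidate-pair` entry and `jitter` and `packetsLost` on `inbound-rtp` entries, with times reported in seconds. The interfaces and the function name are mine, declared locally so the sketch doesn't depend on browser typings.

```typescript
// Minimal shape of the stats entries this sketch reads.
interface StatEntry {
  type: string;
  nominated?: boolean;
  currentRoundTripTime?: number; // seconds
  jitter?: number;               // seconds
  packetsLost?: number;
}

interface LatencySnapshot {
  rttMs?: number;
  jitterMs?: number;
  packetsLost?: number;
}

function summarizeLatencyStats(stats: StatEntry[]): LatencySnapshot {
  const snapshot: LatencySnapshot = {};
  for (const entry of stats) {
    if (entry.type === "candidate-pair" && entry.nominated === true) {
      if (entry.currentRoundTripTime !== undefined) {
        snapshot.rttMs = entry.currentRoundTripTime * 1000;
      }
    } else if (entry.type === "inbound-rtp") {
      if (entry.jitter !== undefined) {
        // Keep the worst jitter across the incoming streams.
        snapshot.jitterMs = Math.max(snapshot.jitterMs ?? 0, entry.jitter * 1000);
      }
      if (entry.packetsLost !== undefined) {
        // Sum losses across the incoming streams.
        snapshot.packetsLost = (snapshot.packetsLost ?? 0) + entry.packetsLost;
      }
    }
  }
  return snapshot;
}
```

In a browser, the input would come from something like `Array.from((await pc.getStats()).values())`. Call it every few seconds and ship the snapshots to wherever you do your monitoring.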

Mobile and latency reduction

Mobile applications, desktop applications, embedded applications. Any device-side application that doesn’t run in a browser is something you have more control over.

This means there’s more room for you to optimize latency. That said, it usually requires specialized expertise and more resources than many would be willing to invest.

Places to look at here?

- The code that acquires raw audio and video from the microphone and the camera

- Code that plays the incoming media to the speaker and the display

- Codec implementations. Especially if these can be “replaced” by hardware variants

- Data passing and processing within the media pipeline itself. Now that you have access to it, you can decide not to wait for Google to improve it but rather attempt to do it on your own (good luck!)

When taking this route, also remember that most optimizations here are going to be device and operating system specific. This means you’ll have your hands full with platforms to optimize.

Infrastructure latency reduction

This is the network latency that makes up most of the RTT metric in WebRTC statistics.

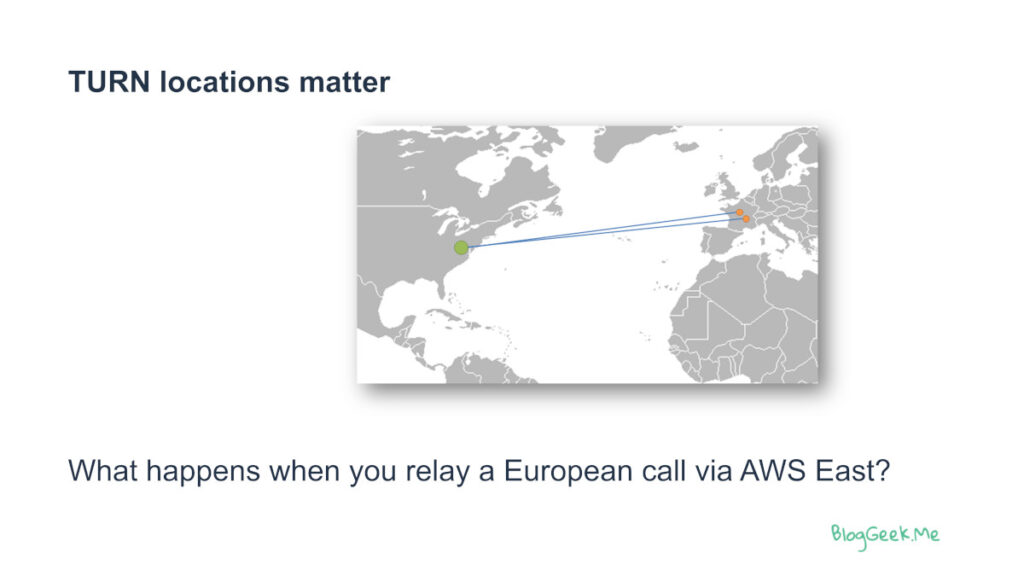

Where your infrastructure is versus the users has a huge impact on the latency.

The example I almost always use? Two users in France connected via a media or TURN server in the US.

Figuring out where your users are, what ISPs they are using, where to place your own servers, through which carriers to connect them to the users, how to connect your servers to one another when needed – all these are things you can optimize.

For starters, look at where you host your TURN servers and media servers. Compare that to where your users are coming from. Make sure the two are aligned. Also make sure the servers allocated for users are the ones closest to them (closest in terms of latency – not necessarily geography).
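Picking the closest server “in terms of latency” can be sketched as follows. This assumes you already have a few RTT samples per region from the user’s device (how you collect them is up to you) and it picks the region with the lowest median. The type, the function names and the region names in the example are all hypothetical.

```typescript
// Hypothetical probe result: RTT samples from one user to one region.
interface ServerProbe {
  region: string;
  rttSamplesMs: number[];
}

function median(values: number[]): number {
  const sorted = values.slice().sort((a, b) => a - b);
  const mid = Math.floor(sorted.length / 2);
  return sorted.length % 2 === 1 ? sorted[mid] : (sorted[mid - 1] + sorted[mid]) / 2;
}

// Choose the region with the lowest median RTT, ignoring geography.
// Regions without samples are skipped.
function pickLowestLatencyRegion(probes: ServerProbe[]): string | undefined {
  let best: string | undefined;
  let bestRtt = Infinity;
  for (const probe of probes) {
    if (probe.rttSamplesMs.length === 0) continue;
    const rtt = median(probe.rttSamplesMs);
    if (rtt < bestRtt) {
      bestRtt = rtt;
      best = probe.region;
    }
  }
  return best;
}
```

For a user in France probing a made-up `us-east` region at around 100 milliseconds and a made-up `eu-west` region at around 20 milliseconds, this returns `eu-west`. I use the median rather than the average so a single slow probe doesn’t skew the decision.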

See if you need to deploy your infrastructure in more locations.

Rinse and repeat – as your service grows – you may need to change focus and infrastructure locations.

Other areas of improvement here are using Anycast or network acceleration that is offered by most large IaaS vendors today (at higher network delivery prices).

Media server processing and latencies

Then there are the media servers themselves. Most services need them.

Media servers are mainly the SFUs and MCUs that take care of routing and mixing media. There are also gateways of many shapes and sizes.

These media servers process media and have their own internal media processing pipelines. As with other pipelines, they have inherent latencies built into them.

Reducing that latency will reduce the end to end latency of the application.

The brave (new) world of generative AI, conversational AI and… LLMs

Remember where we started here? Me discussing latency because WebRTC-LLM use cases had to focus on reducing latency in their own pipeline.

This got the industry looking at latency again, trying to figure out how and where you can shave a few more milliseconds along the way.

Frankly? This needs to be done throughout the pipeline – from the device, through the infrastructure and the media servers and definitely within the TTS-LLM-STT pipeline itself. This is going to be an ongoing effort for the coming year or two I believe.

Know your latency in WebRTC

We can’t optimize what we don’t measure.

The first step here is going to be measurements.

Here are some suggestions:

- Measure latency (obvious)

- Break down the latency into pieces of your pipeline and measure each piece independently

- Decide which pieces to focus on and optimize each time

- Be scientific about it

  - Create a testbed that is easy to set up and operate

  - Something you can run as tests in CI/CD to get results quickly

  - This will make it easier to see if optimizations you introduce work or backfire

- Do this at scale

  - Don’t do it only in your lab. Run it directly on production

  - This will enable you to find bottlenecks and latency issues for users and not only synthetic scenarios
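The suggestions above can be sketched as a small piece of analysis code. It assumes you already record, per session, how many milliseconds each piece of your pipeline took. The stage names are whatever you choose to call them, and the percentile uses the simple nearest-rank method. All names here are mine.

```typescript
// One sample: milliseconds spent in each pipeline stage for one measurement.
type StageTimings = Record<string, number>;
type StageSummary = Record<string, { p50: number; p95: number }>;

// Nearest-rank percentile. Returns NaN for an empty list.
function percentile(values: number[], p: number): number {
  if (values.length === 0) return NaN;
  const sorted = values.slice().sort((a, b) => a - b);
  const rank = Math.ceil((p / 100) * sorted.length);
  return sorted[Math.max(rank, 1) - 1];
}

// Break the latency down per stage across many samples.
function summarizeStages(samples: StageTimings[]): StageSummary {
  const byStage: Record<string, number[]> = {};
  for (const sample of samples) {
    for (const stage of Object.keys(sample)) {
      (byStage[stage] = byStage[stage] || []).push(sample[stage]);
    }
  }
  const summary: StageSummary = {};
  for (const stage of Object.keys(byStage)) {
    summary[stage] = {
      p50: percentile(byStage[stage], 50),
      p95: percentile(byStage[stage], 95),
    };
  }
  return summary;
}

// The stage with the worst p95 is a reasonable place to focus first.
function slowestStage(summary: StageSummary): string | undefined {
  let worst: string | undefined;
  for (const stage of Object.keys(summary)) {
    if (worst === undefined || summary[stage].p95 > summary[worst].p95) {
      worst = stage;
    }
  }
  return worst;
}
```

Run the same summary on results from your testbed and on production data. If the two disagree on the slowest stage, that disagreement is usually worth investigating on its own.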

Did I mention that testRTC has some of the tools you’ll need to set up these environments? 👉 And if you need assistance with this process, you know where to find me.