Not a cookbook, but it should be useful for planning your multipoint service offering.

WebRTC is usually billed as a peer-to-peer solution: something that allows browsers to skip the server altogether. There’s nothing new in that approach, and I am not talking about Skype, but rather about pure VoIP as we know it.

The “peer-to-peerness” of WebRTC is usually contrasted with how a browser normally works, where all communication flows through a server. With VoIP, from the very beginning, there was the option of sending media directly between the endpoints.

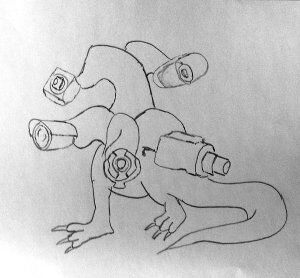

This is also why multipoint video calling with WebRTC is no different from the way it was done with other VoIP solutions in the past. What is needed is for those who are now venturing into the realm of multipoint, with little knowledge of video conferencing, to understand those techniques.

I am starting a short series of posts to deal with exactly this. There will be little reference to WebRTC per se and more about video flows. I leave it to you to make the relevant modifications in actual implementations.

What am I going to talk about?

- How to broadcast video streams?

- How to implement small group video conferences?

- How to implement large scale video conferences?

For each, I will try to explain the main reasoning behind the suggested architecture, along with its advantages and disadvantages.

The analysis is going to revolve around these aspects:

Client side bandwidth

How much bandwidth are we going to need on the client side? This is going to be split between incoming and outgoing bandwidth, as most networks today are not symmetric in nature.

When mobile devices are of interest, add data caps to the list of things to consider.
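To make the client side numbers concrete, here is a rough sketch of the arithmetic for the pure peer-to-peer case, where every participant sends its stream directly to every other participant. The helper names and the 500 kbps per-stream figure are my own illustrative assumptions, not WebRTC APIs or measured values.

```typescript
// Hypothetical helpers for back-of-the-envelope estimates; not part of WebRTC.
interface ClientBandwidth {
  uplinkKbps: number;   // outgoing bandwidth needed by one client
  downlinkKbps: number; // incoming bandwidth needed by one client
}

// Assumes a full mesh: each client sends one copy of its stream to every
// other participant and receives one stream from each of them.
function meshClientBandwidth(participants: number, streamKbps: number): ClientBandwidth {
  if (!Number.isInteger(participants) || participants < 2) {
    throw new RangeError("a call needs at least 2 participants");
  }
  const peers = participants - 1;
  return { uplinkKbps: peers * streamKbps, downlinkKbps: peers * streamKbps };
}

// Data consumed against a mobile data cap, in megabytes (1 MB = 1,000,000 bytes).
function dataUsedMB(totalKbps: number, minutes: number): number {
  const bytesPerSecond = (totalKbps * 1000) / 8;
  return (bytesPerSecond * minutes * 60) / 1e6;
}

// Example: 4 participants, assuming 500 kbps per video stream.
const example = meshClientBandwidth(4, 500);
console.log(example); // { uplinkKbps: 1500, downlinkKbps: 1500 }
console.log(dataUsedMB(example.uplinkKbps + example.downlinkKbps, 60)); // 1350
```

The uplink figure is the one to watch: on an asymmetric network it is usually the smaller of the two, and in this model it grows with every participant added.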

Server side bandwidth

How much bandwidth are we going to need on the server side? This is going to affect network needs, scaling capabilities and also what we will pay for bandwidth in our data center.
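As a rough illustration of the server side question, the sketch below assumes one particular setup: a server that receives one stream from each participant and forwards it unchanged to every other participant. That setup, the function name and the 500 kbps figure are assumptions of mine for the sake of the arithmetic; other server designs will give different numbers.

```typescript
// Hypothetical helper for back-of-the-envelope estimates; not part of WebRTC.
interface ServerBandwidth {
  inboundKbps: number;  // traffic arriving at the server from all clients
  outboundKbps: number; // traffic the server sends out to all clients
}

// Assumes the server only relays: one stream in per participant, and each
// stream is forwarded as-is to every other participant in the session.
function relayServerBandwidth(participants: number, streamKbps: number): ServerBandwidth {
  if (!Number.isInteger(participants) || participants < 2) {
    throw new RangeError("a session needs at least 2 participants");
  }
  return {
    inboundKbps: participants * streamKbps,
    outboundKbps: participants * (participants - 1) * streamKbps,
  };
}

// Example: one 4-party session, assuming 500 kbps per video stream.
console.log(relayServerBandwidth(4, 500)); // { inboundKbps: 2000, outboundKbps: 6000 }
```

Under this assumption the outbound side grows roughly with the square of the session size, which is the part that tends to dominate the data center bill.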

Client side processing

Besides bandwidth, there’s a need to understand the amount of work we expect the web browser to deal with, if and how it is affected by specific WebRTC implementations, and the type of user experience we can strive for.

Server side processing

In some of these use cases, the server has work to do besides passing along the data sent from the clients in the session. When that is the case, there’s a need to understand the cost associated with it.

–

See you all next week, when we cover the broadcasting use case.

A few simple (short) documents for WebRTC learners! https://plus.google.com/106306286430656356034/posts/HnwhrQcz3DY

Thanks Muaz!

What I want to explore in this series of posts is less the APIs of WebRTC and more the thought processes and required infrastructure/backend components for different multipoint use cases. I haven’t seen such a thing covered anywhere, and I do get questions around this topic.

OneKlikStreet.com is a bridge service for WebRTC clients. The server architecture is a video mixing service. We can do a four-party conference at less than 300 kbps today. Do try out the service and let me know your thoughts. If you have any queries regarding our service architecture, send us an email at info@turtleyogi.com

I have a question:

Is there a WebRTC API that enables us to create a multi-peer chat, or is it necessary to use some libraries?

Fatima,

For the most part, if you want multiparty voice and video you will need a media server. APIs and even libraries that run on the frontend with no media server in the backend to process the media will not work.

Tsahi

What are the prerequisites to learn WebRTC?

Depends on the purpose.

To develop with it? You’ll need JS skills and an understanding of networking.

Then learning WebRTC means learning multiple disciplines. Depending on where you want to take it, you’ll end up understanding different parts of it.