A WebRTC media server is an optional component in a WebRTC application. That said, in most common use cases, you will need one.

There are different types of WebRTC servers, and the WebRTC media server is one of them. When will you need one, and what exactly does it do? Read on.

Table of contents

- Servers in WebRTC

- The role of a WebRTC media server

- How is a WebRTC media server different from TURN servers

- Types of WebRTC media servers

- A quick exercise: What WebRTC media servers are used by Google Meet?

- When will you need a WebRTC media server?

- E2EE and WebRTC media servers

- WebRTC media servers and open source

- Video APIs, CPaaS and WebRTC media servers

- What about LLM, Generative AI and Agentic AI?

- Taking a deep dive into WebRTC protocols

Servers in WebRTC

There are quite a few moving parts in a WebRTC application. On the client device side, you’ll have web browsers with WebRTC support, and possibly other types of clients, such as mobile applications with their own WebRTC implementations.

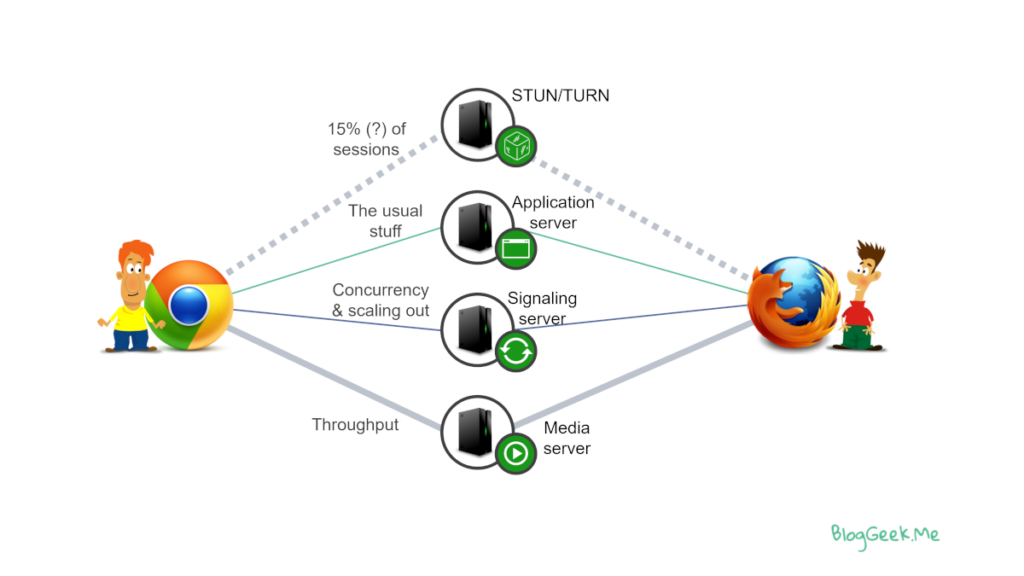

And then there are the server-side components, and there are quite a few of them. The illustration above shows the 4 types of WebRTC servers you are likely to need:

- Application servers, where the application logic resides. Not directly related to WebRTC, but there nonetheless

- Signaling servers used to orchestrate and control how users get connected to one another, passing WebRTC signaling across the devices (WebRTC has no signaling protocol of its own)

- TURN (and STUN) servers that are needed to get media routed through firewalls and NATs. Not all the time, but frequently enough to make them important

- WebRTC media servers processing and routing WebRTC media packets in your infrastructure when needed

The illustration below shows how all of these WebRTC servers connect to the client devices and what types of data flow through them:

What is interesting is that the only WebRTC infrastructure component that can really be seen as optional is the WebRTC media server. That said, in most real-world use cases you will need media servers.

The role of a WebRTC media server

At its conception, WebRTC was meant to work “between” browsers. Only recently did the good people at the W3C see fit to change that to something that can also work “in” browsers. We’ve known that to be the case all along 😉

What does a WebRTC media server do exactly? It processes and routes media packets through the backend infrastructure – either in the cloud or on premise.

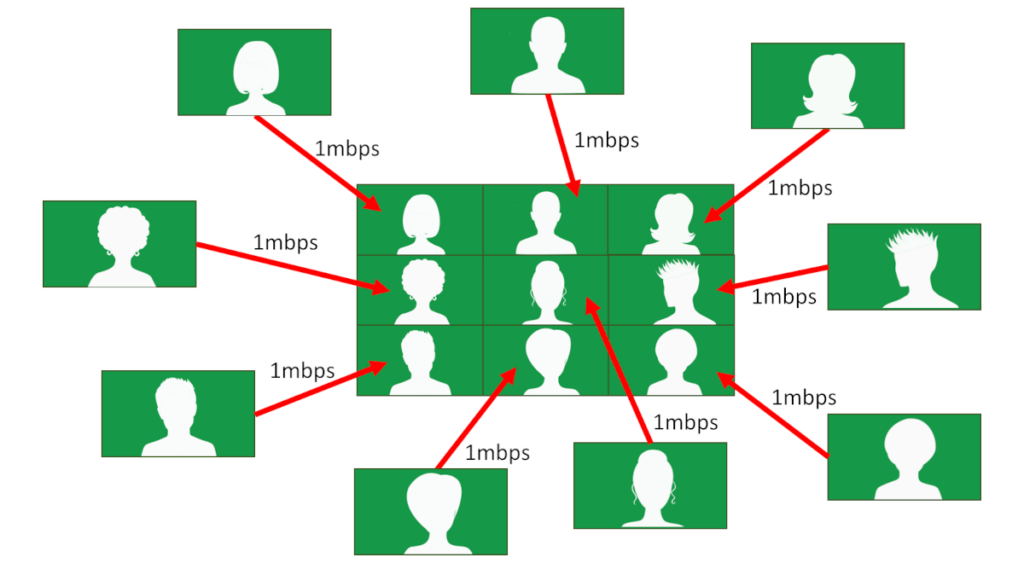

Let’s say you are building a group calling service and you want 10 people to be able to join in and talk to each other. For simplicity’s sake, assume we want to get 1Mbps of encoded video from each participant and show the other 9 participants on the screen of each of the users:

How would we go about building such an application without a WebRTC media server?

To do that, we will need to develop a mesh architecture:

We’d have the clients send out 1Mbps of their own media to all the other participants who wish to display them on their screen. This amounts to 9*1Mbps = 9Mbps of upstream data that each participant will be sending out. Each client receives streams from all 9 other participants, getting us to 9Mbps of downstream data.

This might not seem like much, but it is, especially when sent over UDP in real time, when we need to encode and encrypt each stream separately for each user, and when we need to perform bandwidth estimation across the network. Even if we reduce the requirement from 1Mbps to a lower bitrate, this is still a hard problem to solve.

It becomes devilishly hard (impossible?) when we crank the number up to, say, 50 or 100 participants. Not to mention the sessions of 1,000 or more participants (either active participants or passive viewers) that we see today.
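The mesh arithmetic above, and how quickly it grows with the number of participants, can be sketched in a few lines of Python. This is a minimal sketch assuming every participant sends a single 1Mbps video stream to every other participant, ignoring audio, RTP/SRTP overhead and retransmissions; `mesh_bandwidth_mbps` is a hypothetical helper, not part of any library.

```python
from typing import Tuple


def mesh_bandwidth_mbps(participants: int, bitrate_mbps: float = 1.0) -> Tuple[float, float]:
    """Per-client (upstream, downstream) bitrate in a full mesh.

    Every client encodes and sends its own stream separately to each of the
    other participants, and receives one stream from each of them.
    """
    if participants < 2:
        return 0.0, 0.0
    others = participants - 1
    upstream = others * bitrate_mbps    # one separately encoded copy per remote peer
    downstream = others * bitrate_mbps  # one stream from every remote peer
    return upstream, downstream


for n in (10, 50, 100):
    up, down = mesh_bandwidth_mbps(n)
    print(f"{n:>3} participants: {up:.0f} Mbps up, {down:.0f} Mbps down per client")
#  10 participants: 9 Mbps up, 9 Mbps down per client
#  50 participants: 49 Mbps up, 49 Mbps down per client
# 100 participants: 99 Mbps up, 99 Mbps down per client
```

The upstream figure is the painful one: it is also the number of separate encode and encrypt operations each client performs, so it grows linearly with the size of the session on every single device.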

Enter the WebRTC media server

This is where a WebRTC media server comes in. We add it to take care of the following tasks for us:

- Reduce the stress on the upstream connection of clients
  - Now clients will send out fewer media streams to the server
  - The server will be distributing the media it receives to other clients

- Handle bandwidth estimation
  - Each client takes care of bandwidth estimation in front of the server
  - The server takes care of the whole “operation”, understanding the available bandwidth and constraints of all clients
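Continuing the 10-participant example, here is a minimal sketch of where the bandwidth goes once all media flows through a single SFU. It assumes each client uploads one 1Mbps stream and that every client displays all the others at full bitrate (no simulcast layer switching); `SfuLoad` and `sfu_bandwidth_mbps` are hypothetical names used only for illustration.

```python
from typing import NamedTuple


class SfuLoad(NamedTuple):
    client_up: float    # Mbps each client sends
    client_down: float  # Mbps each client receives
    server_in: float    # Mbps the SFU receives in total
    server_out: float   # Mbps the SFU sends in total


def sfu_bandwidth_mbps(participants: int, bitrate_mbps: float = 1.0) -> SfuLoad:
    """Bandwidth when all media goes through a single SFU.

    Each client uploads one stream to the SFU, which forwards it to every
    other participant.
    """
    others = participants - 1
    return SfuLoad(
        client_up=bitrate_mbps,
        client_down=others * bitrate_mbps,
        server_in=participants * bitrate_mbps,
        server_out=participants * others * bitrate_mbps,
    )


print(sfu_bandwidth_mbps(10))
# SfuLoad(client_up=1.0, client_down=9.0, server_in=10.0, server_out=90.0)
```

Upstream per client drops from 9Mbps to 1Mbps, and the heavy lifting moves to the server. Downstream stays at 9Mbps in this simplified model; that is where the bandwidth estimation the SFU handles comes in, letting it send lower bitrates to clients that can’t take it all.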

Here’s what’s really going on and what we use these media servers for:

👉 WebRTC media servers bridge the gaps in the architecture that we can’t solve with clients alone

How is a WebRTC media server different from TURN servers

Before we continue and dive into the different types of media servers, there’s something that must be said and discussed:

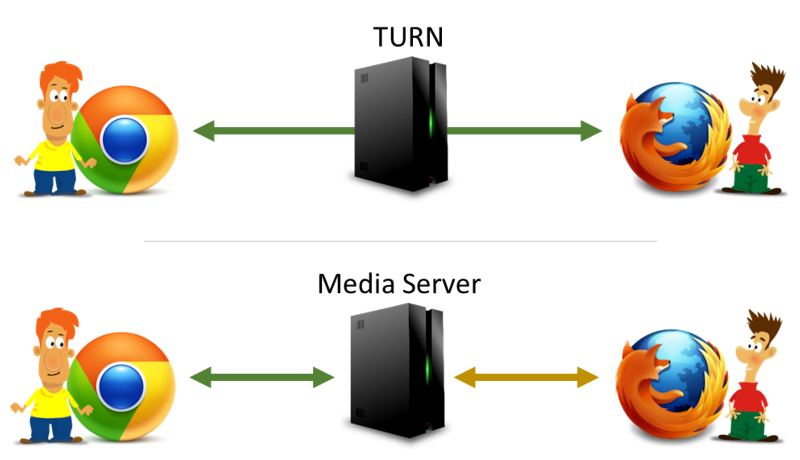

WebRTC media server != TURN server

I’ve seen people try to use a TURN server to do what media servers do, usually things like recording the media stream.

This doesn’t work.

TURN servers route media through firewalls and NAT devices. They aren’t privy to the data being sent through them: WebRTC privacy is maintained by keeping the media encrypted end to end as it passes through TURN servers. The TURN servers don’t know the encryption keys, so they can’t do anything with the media.

WebRTC media servers are implementations of WebRTC clients in a server component. From an architectural point of view, the “session” terminates in the WebRTC media server:

A WebRTC media server is privy to all data passing through it and acts as a WebRTC client in front of each of the WebRTC devices it works with. This is also why it isn’t well defined in WebRTC, yet at the same time so versatile.
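Because the session terminates in the media server, it holds the SRTP keys and handles the media as a real endpoint would. As one example of what that enables, here is a minimal sketch of the first step an SFU typically takes with each (already decrypted) packet: read the RTP fixed header defined in RFC 3550 and look up who should receive a copy based on the SSRC. The `subscriptions` mapping and subscriber IDs are hypothetical stand-ins for the server’s routing state; SRTP, RTCP, CSRC lists and header extensions are left out.

```python
import struct
from typing import Dict, List, NamedTuple


class RtpHeader(NamedTuple):
    version: int
    marker: bool
    payload_type: int
    sequence_number: int
    timestamp: int
    ssrc: int


def parse_rtp_header(packet: bytes) -> RtpHeader:
    """Parse the 12-byte fixed RTP header (RFC 3550, section 5.1)."""
    if len(packet) < 12:
        raise ValueError("packet is shorter than the 12-byte RTP fixed header")
    first, second, seq, ts, ssrc = struct.unpack("!BBHII", packet[:12])
    return RtpHeader(
        version=first >> 6,          # top 2 bits of the first byte
        marker=bool(second & 0x80),  # top bit of the second byte
        payload_type=second & 0x7F,  # remaining 7 bits
        sequence_number=seq,
        timestamp=ts,
        ssrc=ssrc,
    )


def route_packet(packet: bytes, subscriptions: Dict[int, List[str]]) -> List[str]:
    """Return the (hypothetical) subscriber IDs that should get a copy of this packet."""
    header = parse_rtp_header(packet)
    return subscriptions.get(header.ssrc, [])


# Version 2, payload type 96, sequence 1, timestamp 3000, SSRC 0x1234
packet = struct.pack("!BBHII", 0x80, 96, 1, 3000, 0x1234) + b"<encoded media>"
print(route_packet(packet, {0x1234: ["bob", "carol"]}))  # ['bob', 'carol']
```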

Types of WebRTC media servers

This versatility of WebRTC media servers means that there are different types of such servers. Each one works under different architectural assumptions and concepts. Let’s review them quickly here.

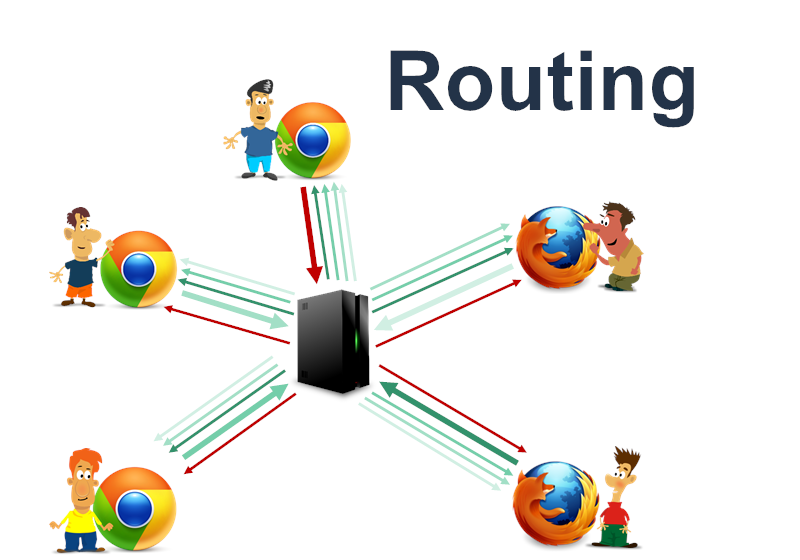

Routing media using an SFU

The most common and popular WebRTC media server is the SFU.

An SFU routes media between the devices, doing as little as possible when it comes to the media processing part itself.

The concept of an SFU is that it offloads much of the decision making of layout and display to the clients themselves, giving them more flexibility than any other alternative. At the same time, it takes care of bandwidth management and routing logic to best fit the capabilities of the devices it works with.

To do all that, it uses technologies such as bandwidth estimation, simulcast, SVC and many others (things like DTX, cascading and RED).
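Simulcast is a good illustration of the SFU’s bandwidth management role: the sender uploads several encodings of the same video at different bitrates, and the SFU forwards to each receiver the best layer that fits that receiver’s bandwidth estimate. A minimal sketch under that assumption; the layer bitrates and the function name are hypothetical, and real SFUs also weigh the receiver’s rendered video size, add hysteresis to avoid flapping between layers, and can only switch layers on a keyframe.

```python
from typing import Optional, Sequence


def pick_simulcast_layer(layer_bitrates_kbps: Sequence[int],
                         available_kbps: float) -> Optional[int]:
    """Return the index of the highest-bitrate layer that fits the receiver's
    estimated available bandwidth, or None if even the lowest layer doesn't fit."""
    best: Optional[int] = None
    for index, bitrate in enumerate(layer_bitrates_kbps):
        fits = bitrate <= available_kbps
        if fits and (best is None or bitrate > layer_bitrates_kbps[best]):
            best = index
    return best


# Hypothetical low/medium/high layers published by a single sender
layers = [150, 500, 1200]
for estimate in (2000, 800, 100):
    print(estimate, pick_simulcast_layer(layers, estimate))
# 2000 2
# 800 1
# 100 None
```

Returning `None` leaves the decision to the caller, for example pausing that video for the receiver until its bandwidth estimate recovers.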

At the beginning, SFUs were introduced and used for group calls. Later on, they started to appear as live streaming and broadcast components.

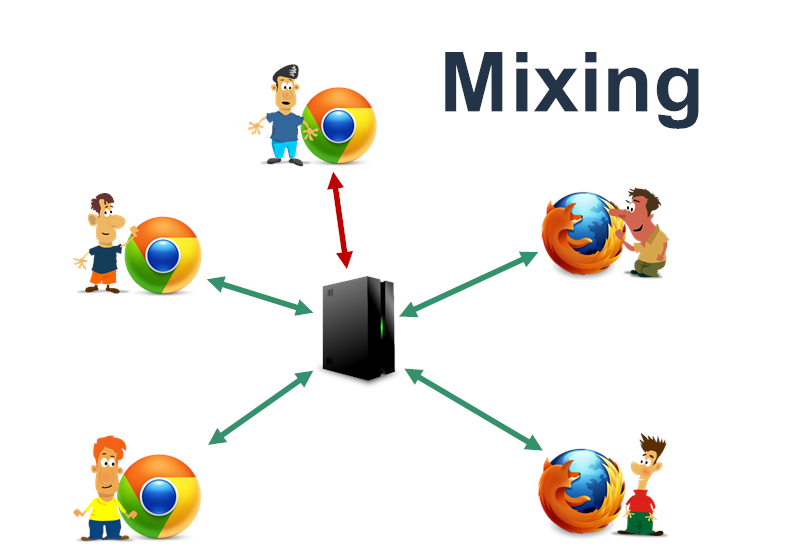

Mixing media with an MCU

Probably the oldest media server solution is the MCU.

The MCU was introduced years before WebRTC, when networks were limited. Telephony systems had (and still have) voice conferencing bridges built around the concept of MCUs. Video conferencing systems required media servers simply because video compression required specialized hardware and, later on, too much CPU from client devices.

👉 In telephony and audio, you’ll see these referred to as mixers or audio bridges rather than MCUs. Technically, though, they are one and the same.

An MCU receives the media streams of the various participants, mixes them, and sends a single stream of media towards each client. To a client, an MCU looks like a call between 2 participants – it is the only entity the client really interacts with directly. This means there’s a single audio and a single video stream coming into and going out of the client – regardless of the number of participants and how/when they join and leave the session.
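Once the streams are decoded, the audio mixing step itself is conceptually simple. A minimal sketch, assuming each participant’s audio has already been decoded into aligned 16-bit PCM frames at the same sample rate. It uses the common “mix-minus” approach, where each client receives a mix of everyone except itself so nobody hears their own voice echoed back; it is an illustration, not how any specific MCU is built, and `mix_minus` is a hypothetical name. The decoding, jitter buffering, resampling and re-encoding around this step are where most of an MCU’s CPU cost comes from.

```python
from typing import Dict, List

INT16_MIN, INT16_MAX = -32768, 32767


def mix_minus(frames: Dict[str, List[int]]) -> Dict[str, List[int]]:
    """Mix one frame of 16-bit PCM audio per participant.

    Each participant gets the sum of everyone else's samples, clipped to the
    int16 range.
    """
    length = min(len(samples) for samples in frames.values())
    # Sum everyone once (Python ints don't overflow), then subtract each
    # participant's own contribution instead of re-summing N-1 streams per client.
    total = [sum(samples[i] for samples in frames.values()) for i in range(length)]
    return {
        participant: [max(INT16_MIN, min(INT16_MAX, total[i] - own[i])) for i in range(length)]
        for participant, own in frames.items()
    }


mixed = mix_minus({"alice": [1000, -2000], "bob": [500, 500], "carol": [30000, 10000]})
print(mixed["carol"])  # [1500, -1500]
```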

MCUs were used less in WebRTC from the get-go. Part of it was simple economies of scale – MCUs are expensive to operate, requiring a lot of CPU power (encoding and decoding media is expensive). It is cheaper to offer the same or similar services using SFUs. There are vendors who still rely on MCUs in WebRTC for group calling, though in most cases you will find MCUs providing only the recording mechanism – taking all inputs and mixing them into a single stream to place in storage.

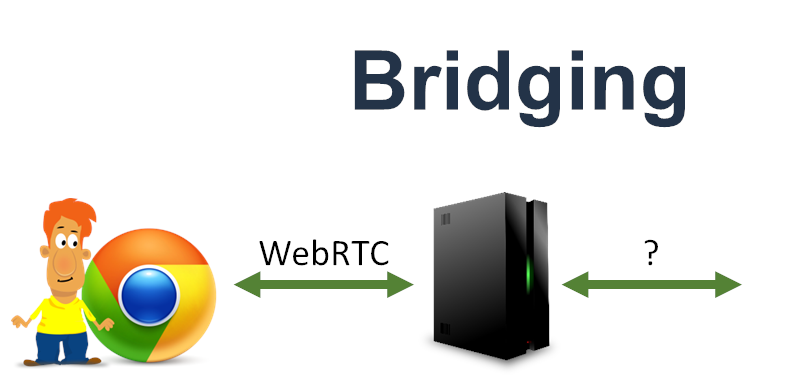

Bridging across standards using a gateway

Another type of media server that is used in WebRTC is a gateway.

In some cases, content – rendered, live or otherwise – needs to be shared in a WebRTC session, or a WebRTC session needs to be shared on another type of protocol/medium. To do so, a gateway can be used to bridge between the protocols.

The two main cases where this happens are probably:

- Connecting surveillance cameras that don’t inherently support WebRTC to a WebRTC application

- Streaming a WebRTC session into a social network (think Twitch, YouTube Live, …)

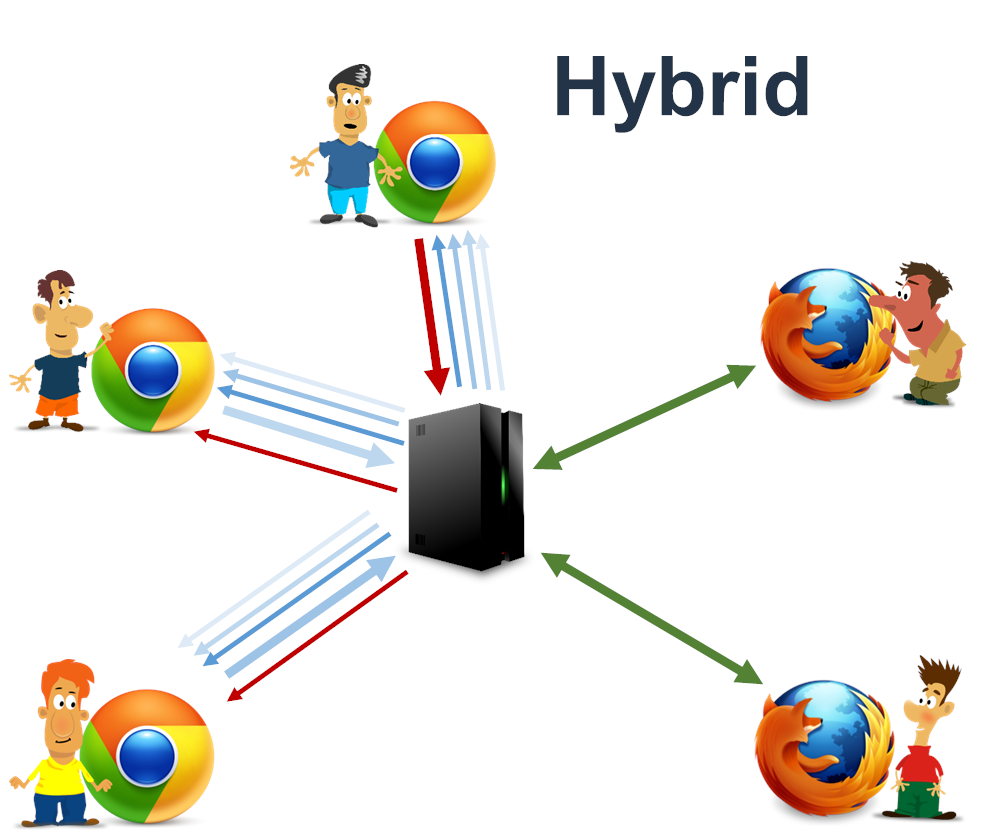

The hybrid media server

One more example is a hybrid media server: one that does routing and processing together, such as a group calling service that also records the call into a single stream. Such solutions are becoming more and more popular and are usually deployed as multiple media servers of different types (unlike the illustration above), each catering to a different part of the service. Splitting them up makes it easier to develop, maintain and scale them based on the workload needed by each media server type.

Cloud rendering

This might not be a WebRTC media server per se, but for me this falls within the same category.

Sometimes, what we want is to render content in the cloud and share it live with a user on a browser. This is true for things like cloud gaming or cloud application delivery (Photoshop in the cloud for hourly consumption). In such a case, this is more like a peer-to-peer WebRTC session taking place between a user on a browser and a cloud server that renders the content.

I see it as a media server because many aspects of developing and scaling cloud rendering components are closer to how you’d think about WebRTC media servers than to how you’d think about browser or native clients.

A quick exercise: What WebRTC media servers are used by Google Meet?

Let’s look at an example service – Google Meet. Why Google Meet? Well, because it is so versatile today, and because if you want to trace capabilities in WebRTC, the best approach is to keep close tabs on what Google Meet is doing.

What WebRTC media servers does Google Meet use? Based on the functionality it offers, we can glean the types that make up this service:

- Supports large group meetings – this is where SFU servers are used by Google Meet to host and orchestrate the meeting. Each user can have a different layout during the same session and can flexibly control what they view

- Recording meetings – Google Meet recordings show a single participant/screen share and mix all audio streams. For the audio, this means using an MCU server, and for the video this is more akin to a switching SFU server (always picking a single video stream out of those available and not aiming for a “what you see is what you get” kind of recording)

- Connect to YouTube Live – here, Google Meet connects to YouTube Live through an RTMP gateway in real time, instead of storing the stream in a file as is done when recording

- Dialing in from regular telephones – this one requires a hybrid gateway bridging server as well as an MCU to mix the audio into the meeting

- Cloud based noise suppression – Google decided to implement noise suppression in Google Meet using servers. This requires an SFU/bridging gateway to connect to servers that process the media in such a way

- Cloud based background removal – For low-performing devices, Google Meet also runs background removal in the server, and like noise suppression, this requires an SFU/bridging gateway for this functionality
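Google doesn’t publish how Meet picks the video for its recordings, so what follows only illustrates the general “switching” idea mentioned above and is not a description of Meet’s implementation. A minimal sketch, assuming the server receives per-participant audio levels such as those carried in the RTP audio level header extension (RFC 6464): it forwards the video of whoever has been loudest recently, with some hysteresis so the recording doesn’t flip between speakers on every cough. The class name, window and margin are hypothetical, and a real implementation would also age out participants who stop sending level reports.

```python
from collections import deque
from statistics import mean
from typing import Deque, Dict, Optional


class ActiveSpeakerSwitch:
    """Choose a single participant whose video to forward, e.g. into a recording.

    Audio levels follow the RFC 6464 convention: 0 is the loudest (0 dBov)
    and 127 is silence (-127 dBov), so a lower value means a louder speaker.
    """

    def __init__(self, window: int = 5, margin_db: float = 6.0):
        self.window = window        # how many recent level reports to average
        self.margin_db = margin_db  # how much louder a new speaker must be to take over
        self.levels: Dict[str, Deque[int]] = {}
        self.current: Optional[str] = None

    def on_audio_level(self, participant: str, level: int) -> Optional[str]:
        history = self.levels.setdefault(participant, deque(maxlen=self.window))
        history.append(level)
        loudest = min(self.levels, key=lambda p: mean(self.levels[p]))
        if self.current is None:
            self.current = loudest
        elif loudest != self.current:
            # Hysteresis: switch only when the new speaker is clearly louder
            if mean(self.levels[loudest]) + self.margin_db <= mean(self.levels[self.current]):
                self.current = loudest
        return self.current


switch = ActiveSpeakerSwitch(window=3)
print(switch.on_audio_level("alice", 30))  # alice
print(switch.on_audio_level("bob", 27))    # alice (bob is only slightly louder)
print(switch.on_audio_level("bob", 10))    # bob
```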

A classic meeting service in WebRTC may well require more than a single type of a WebRTC media server, likely deployed in hybrid mode across different hardware configurations.

When will you need a WebRTC media server?

As we’ve seen earlier, the answer to this is simple – when doing things with WebRTC clients only isn’t possible and we need something to bridge this gap.

We may lack:

- Bandwidth on the client side, so we will alleviate that by adding WebRTC servers

- CPU, memory or processing power, delegating that to the cloud

- The ability to run certain machine learning algorithms, where running them in cloud services may make more sense (due to CPU, memory, availability of training data, speed, certain AI chips, …)

- Bridging between WebRTC and other components that don’t use WebRTC, such as connecting to telephony systems, surveillance cameras, social media streaming services, etc.

- Access to the data on servers, for example to record the sessions (we can also do this without a WebRTC server, but there will still be a media server in the cloud)

What I usually do when analyzing the needs of a WebRTC application is to find these gaps and determine if a WebRTC media server is needed (it usually is). I do so by thinking of the solution as a P2P one, without media servers. And then based on the requirements and the gaps found, I’ll be adding certain WebRTC media server elements into the infrastructure needed for my WebRTC application.

E2EE and WebRTC media servers

We’ve seen a growing interest in privacy in recent years. The internet has shifted to encryption-first connections, and WebRTC offers encrypted-only media. This shift started as privacy from malicious actors on the public internet but has since expanded to include privacy from the service provider itself.

Running a group meetings service through a service provider that cannot access the meeting’s content itself is becoming more commonplace.

This capability is known as E2EE – End to End Encryption.

When introducing WebRTC media servers into the mix, it means that while they are still a part of the session and are terminating WebRTC peer connections (=terminating encrypted SRTP streams) on their own, they shouldn’t have access to the media itself.

This can be achieved only with SFU-type WebRTC media servers, by using insertable streams. With them, the application logic can exchange private encryption keys between the users and add a second encryption layer that passes transparently through the SFU – enabling it to do its job of packet routing without being able to understand the media content itself.
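To make the two encryption layers concrete, here is a toy Python sketch of the idea: the sender encrypts each encoded frame with a key shared only among the participants, the SFU forwards what it gets without being able to read it, and the receiver decrypts. In browsers this step plugs in through insertable streams, between the encoder and the packetizer, while the regular SRTP encryption to and from the SFU still happens underneath. The keystream construction below is a stand-in built from Python’s standard library so the example runs without dependencies; it is unauthenticated and not meant for production, where an AEAD cipher would be used (for instance via the SFrame format). All function names are hypothetical.

```python
import hashlib
import hmac
import os
import struct
from typing import Dict, List

NONCE_LEN = 12


def _keystream(key: bytes, nonce: bytes, length: int) -> bytes:
    """Toy keystream: HMAC-SHA256(key, nonce || counter) blocks.
    For illustration only; it provides no integrity protection."""
    blocks = bytearray()
    counter = 0
    while len(blocks) < length:
        blocks += hmac.new(key, nonce + struct.pack("!I", counter), hashlib.sha256).digest()
        counter += 1
    return bytes(blocks[:length])


def encrypt_frame(key: bytes, frame: bytes) -> bytes:
    """Sender side: runs on the encoded frame before it is packetized and sent over SRTP."""
    nonce = os.urandom(NONCE_LEN)
    stream = _keystream(key, nonce, len(frame))
    return nonce + bytes(a ^ b for a, b in zip(frame, stream))


def decrypt_frame(key: bytes, data: bytes) -> bytes:
    """Receiver side: reverses encrypt_frame with the same shared key."""
    nonce, body = data[:NONCE_LEN], data[NONCE_LEN:]
    stream = _keystream(key, nonce, len(body))
    return bytes(a ^ b for a, b in zip(body, stream))


def sfu_forward(payload: bytes, receivers: List[str]) -> Dict[str, bytes]:
    """The SFU never sees the E2EE key; it just copies the opaque payload."""
    return {receiver: payload for receiver in receivers}


key = os.urandom(32)  # exchanged between participants by the application, never sent to the SFU
frame = b"encoded video frame"
delivered = sfu_forward(encrypt_frame(key, frame), ["bob", "carol"])
assert decrypt_frame(key, delivered["bob"]) == frame
```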

WebRTC media servers and open source

Another important aspect to understand about WebRTC media servers is that most of those using media servers in WebRTC do so using open source frameworks for media servers.

I’ve written at length about WebRTC open source projects, including details about the market state and the open source WebRTC media servers available.

Also be sure to read my thoughts on open source media servers in 2024.

What is important to note is that, more often than not, projects that don’t use managed services for their WebRTC media servers pick open source WebRTC media servers to work with rather than developing their own from scratch. This isn’t always the case, but it is quite common.

Video APIs, CPaaS and WebRTC media servers

WebRTC Video API and CPaaS is another area I cover quite extensively.

Vendors who decide to use a CPaaS vendor for their WebRTC application will mainly do it in one of two situations:

- They need to bridge audio calls to PSTN to connect them to regular telephony

- There’s a need for a WebRTC media server (usually an SFU) in their solution

Both cases require media servers…

This leads to the following important conclusion: there’s no such thing as a CPaaS vendor doing WebRTC that isn’t offering a managed WebRTC media server as part of its solution – and if there is, then I’ll question its usefulness for most potential customers.

What about LLM, Generative AI and Agentic AI?

We are moving into a world dominated by artificial intelligence.

With the advancements in Generative AI and its use everywhere, WebRTC is just another place where we are bound to bump into it.

Getting this new and exciting technology to work with WebRTC means focusing on reducing latency in the media processing pipeline. It also means having media servers that cater to our needs when connecting users to cloud-based LLMs – these things can’t take place on device today simply because their memory and CPU requirements are humongous.

In most cases, LLM solutions are implemented either by connecting them via SFU media servers or by having a WebRTC client implementation in the cloud, connected directly to the LLM and serving as the remote peer in a WebRTC session with the human on the other side of the connection.

Taking a deep dive into WebRTC protocols

Last year, I released the Low-level WebRTC protocols course along with Philipp Hancke.

The Low-level WebRTC protocols course has been a huge success, which is why we’re starting to work on our next course in this series: Higher level WebRTC protocols

Before we go about understanding WebRTC media servers, it is important to understand the inner workings of the network protocols that WebRTC employs. Our low-level protocols course covers the first part of the underlying protocols. This second course looks at the higher level protocols – the parts that deal more with network realities, such as the challenges brought on by packet losses and other network characteristics.

Things we cover here include retransmissions, forward error correction, codec packetization and a myriad of media processing algorithms.
