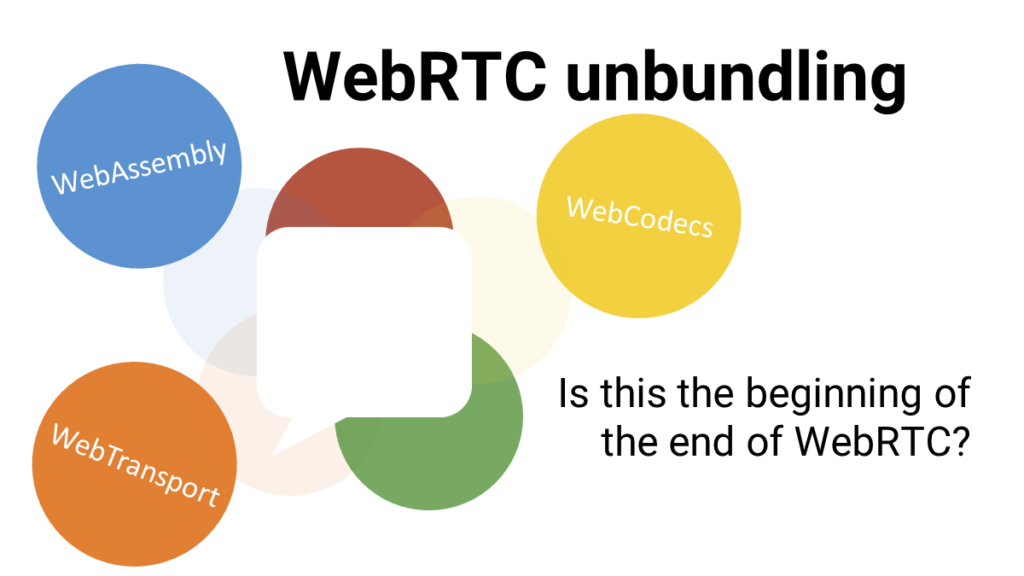

2020 marks the point of WebRTC unbundling. The new initiatives seem to be the beginning of the end of WebRTC as we know it, as we enter the era of differentiation.

Life is interesting with WebRTC. One moment, it is the only way to get real-time media into a web browser. The next, there are other alternatives, though no one is quite announcing them the way they should.

We’re at the cusp of getting WebRTC 1.0 officially released. Seriously this time. For real. I think. Well… maybe.

Towards differentiation

If I were to chart our path through this crazy world of WebRTC, it would look something like this:

Towards the end of 2019, and with greater force during the pandemic, we’ve seen what the future of WebRTC looks like. It is all about differentiation.

Until now, all vendors had access to the same WebRTC stack, as implemented by Google (and the other browser vendors), with exactly the same capabilities in the browser.

No more.

I alluded to it in my article about Google’s private WebRTC roadmap. Since then, many more signals have come from Google marking this as the way forward.

Today, there are 2 separate WebRTC stacks – the one available to all, and the one used internally by Google in native applications. While this is something everyone can do, Google is now leveraging this option to its fullest.

The more interesting development is taking place somewhat elsewhere, though. WebRTC is now being unbundled so that Google (and others) don’t need to maintain two separate versions, but can instead implement their own “differentiation” on top of “WebRTC”.

Unbundling WebRTC

At this point, you’re probably asking yourselves what that means exactly. Before we continue, I suggest you watch the last 15 minutes of web.dev LIVE Day Two.

That’s where Google is showing off the progress made in Chrome and what the future holds.

The whole framing of this session feels “off”. Here, Google is contemplating how to bring a solution that fits Zoom, when 99% of all vendors have already figured out how to be in the browser: by using WebRTC.

The solution here is to unbundle WebRTC into 3 separate components:

- WebTransport – enables sending bidirectional low latency UDP-like traffic between a client and a “web server”, which in our context is a media server

- WebCodecs – gives the browser the ability to encode and decode audio and video independently of WebRTC

- WebAssembly – runs compiled code in the browser at near-native speed, making heavy processing such as machine learning practical

While these can all be used for new and exciting use cases (think Google Stadia, with a simpler implementation), they can also be used to implement something akin to what WebRTC does (without the peer-to-peer capability).

WebTransport replaces SRTP. WebCodecs does the encoding and decoding. WebAssembly handles all the differentiation and the remaining heavy lifting (things like bandwidth estimation). As for echo cancellation and other audio algorithms, I am not sure where they will end up: maybe inside WebCodecs, or implemented using WebAssembly.
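If WebTransport replaces SRTP, the application (not the browser) becomes responsible for the media framing that RTP used to provide. Here is a minimal Python sketch of that job. It assumes a hypothetical 8-byte header (frame id, piece index, piece count) and a 1,200-byte datagram budget, and it is not any vendor’s actual wire format. The sender splits one encoded frame into datagram-sized pieces. The receiver reassembles the frame once every piece has arrived.

```python
from __future__ import annotations

import struct
from dataclasses import dataclass, field

# Hypothetical header: frame_id (u32), piece index (u16), piece count (u16), network order.
HEADER = struct.Struct("!IHH")
MAX_DATAGRAM = 1200  # assumed per-datagram size budget, in bytes


def packetize(frame_id: int, frame: bytes, max_datagram: int = MAX_DATAGRAM) -> list[bytes]:
    """Split one encoded frame into datagrams no larger than max_datagram bytes."""
    chunk = max_datagram - HEADER.size
    pieces = [frame[i:i + chunk] for i in range(0, len(frame), chunk)] or [b""]
    return [HEADER.pack(frame_id, i, len(pieces)) + p for i, p in enumerate(pieces)]


@dataclass
class Reassembler:
    """Buffers pieces per frame and returns the whole frame once every piece has arrived."""

    partial: dict[int, dict[int, bytes]] = field(default_factory=dict)

    def push(self, datagram: bytes) -> bytes | None:
        frame_id, index, count = HEADER.unpack_from(datagram)
        pieces = self.partial.setdefault(frame_id, {})
        pieces[index] = datagram[HEADER.size:]  # a duplicate piece simply overwrites
        if len(pieces) < count:
            return None  # still waiting; missing pieces may never arrive
        del self.partial[frame_id]
        return b"".join(pieces[i] for i in range(count))
```

A real design would also need a timeout for frames whose pieces never arrive, since unreliable datagrams can be lost. That is the kind of logic that could end up in the WebAssembly layer.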

What comes after the unbundling of WebRTC?

This isn’t just a thought. It is an active effort taking place at Google and in standardization bodies. The discussion today is about enabling new use cases, but the more important discussion is about what this means for the future of WebRTC.

As we unbundle WebRTC, do we need it anymore?

Since Google has already switched gears towards differentiation, it is not that hard to see how it could shift away from WebRTC in its own applications:

Google Stadia

Google Stadia is all about cloud gaming. WebRTC is currently used there because it was the closest and only solution Google had for low latency live streaming towards a web browser.

What does Google Stadia need from WebRTC?

- The ability to decode video in real time in the browser

- The ability to send user actions from the remote control back to the cloud at low latency

That’s a small portion of what WebRTC can do, and using it as a monolith is probably hurting Google’s ability to optimize performance further.

Sending back user actions was already implemented in Stadia on top of QUIC and not SCTP. That’s because Google has greater control over QUIC’s implementation than it does over SCTP. They are probably already using an early implementation of WebTransport (which is built on top of QUIC) in Stadia.
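Input fits unreliable QUIC datagrams well if each datagram carries the full controller state rather than a delta. A lost packet is then simply repaired by the next one, with no retransmission delay. The Python sketch below illustrates that idea. The field layout and class names are hypothetical and do not describe Stadia’s actual protocol.

```python
from __future__ import annotations

import struct
from dataclasses import dataclass

# Hypothetical datagram layout: seq (u32), buttons bitmask (u16), stick x/y (i16, i16).
STATE = struct.Struct("!IHhh")


@dataclass(frozen=True)
class ControllerState:
    seq: int
    buttons: int
    stick_x: int
    stick_y: int

    def encode(self) -> bytes:
        return STATE.pack(self.seq, self.buttons, self.stick_x, self.stick_y)

    @staticmethod
    def decode(datagram: bytes) -> ControllerState:
        return ControllerState(*STATE.unpack(datagram))


class InputReceiver:
    """Applies only the newest controller snapshot; late or duplicate ones are dropped."""

    def __init__(self) -> None:
        self.latest: ControllerState | None = None

    def on_datagram(self, datagram: bytes) -> bool:
        state = ControllerState.decode(datagram)
        if self.latest is not None and state.seq <= self.latest.seq:
            return False  # a newer snapshot already superseded this one
        self.latest = state
        return True
```

Sequence-number wraparound is ignored here for brevity.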

The video part? Easier to just deliver it over WebTransport as well (and decode it with WebCodecs) and be done with it, instead of messing around with the intricacies of setting up and maintaining WebRTC peer connections.

For Stadia, once WebRTC is unbundled, moving away from it to a WebTransport+WebCodecs combo is the natural choice.

Google Duo & Google Meet

For Duo and Meet things are a bit less apparent.

They are built on top of WebRTC and use it to its fullest. Both have been optimized during this pandemic to squeeze every ounce of potential out of what WebRTC can do.

But is it enough?

Differentiation in WebRTC

Google has recently been adding layers of differentiation and features on top of and inside WebRTC to fit its requirements as the pandemic hit. Suddenly, video became important enough, and Zoom’s IPO and huge rise in popularity ensured that management attention inside Google shifted towards these two products.

This caused an acceleration of the roadmap and the introduction of new features – most of them to catch up and close the gap with Zoom’s capabilities.

These features ranged from simple performance optimizations, through beefed-up security (Google Duo now doing E2EE), to machine learning features:

- Proprietary packet loss concealment algorithm in native Duo app

- Cloud based noise suppression for Meet

- Upcoming background replacement for Meet

- …
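To make “packet loss concealment” concrete, the simplest possible strategy repeats the last good audio frame at a fading gain until real audio arrives again. This Python toy only illustrates that idea under that assumption. NetEq is far more elaborate, and WaveNetEQ instead uses a neural generative model to synthesize the missing audio.

```python
from __future__ import annotations


def conceal(
    frames: list[list[float] | None], frame_len: int, fade: float = 0.5
) -> list[list[float]]:
    """Fill lost frames (None) by repeating the last good frame at a decaying gain.

    A toy heuristic for illustration only, not NetEq's or WaveNetEQ's algorithm.
    """
    out: list[list[float]] = []
    last = [0.0] * frame_len  # silence until the first good frame arrives
    gain = 1.0
    for frame in frames:
        if frame is not None:
            last, gain = frame, 1.0  # real audio resets the fade
            out.append(list(frame))
        else:
            gain *= fade  # each consecutive loss fades further toward silence
            out.append([sample * gain for sample in last])
    return out
```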

Advantages of unbundling WebRTC for Duo/Meet

Can Google innovate and move faster if they used the unbundled variant? Instead of using WebRTC, just make use of WebTransport+WebCodecs+WebAssembly?

What advantages would they derive out of such a move?

- Faster time to market on some features, as there’s no need to haggle with standardization organizations on how to introduce them (E2EE requires the introduction of Insertable Streams to WebRTC)

- Google Meet is predominantly server based, so the P2P capability of WebRTC isn’t really necessary. Removing it would reduce the complexity of the implementation

- More places to add machine learning in a differentiated way, instead of offering it to everyone. The new WaveNetEQ packet loss concealment, for example, was added outside of WebRTC and only in native apps; with the unbundled APIs, something like it could theoretically be implemented without maintaining two separate implementations

If I were Google, I’d be planning ahead to migrate away from WebRTC to this newer alternative in the next 3-5 years. It won’t happen in a day, but it certainly makes sense to do.

Can/should Google maintain two versions of WebRTC?

Today, for all intents and purposes, Google maintains two separate versions of WebRTC.

The first is the one we all know and love. It is the version found in webrtc.org and the one that is compiled into Chrome.

The other one is the one Google uses and promotes, where it invests in differentiation through the use of machine learning. This is where their WaveNetEQ can be found.

Do you think Google will put engineers on improving the packet loss concealment algorithm in Chrome’s WebRTC code, or on improving its WaveNetEQ packet loss concealment algorithm? Which one would further its goals, assuming it doesn’t have the manpower to do both? (and it doesn’t)

I can easily see a mid-term future where Google invests a lot less in WebRTC inside Chrome and shifts focus towards WebTransport+WebCodecs with their own proprietary media engine implementation on top of it powered by WebAssembly.

Will that happen tomorrow? No.

Should you be concerned and even prepare for such an outcome? That depends, but for many the answer should be Yes.

The end of a level playing field and back to survival of the fittest

WebRTC brought us to an interesting world. It leveled the playing field for anyone to adopt and use real-time voice and video communication technologies with a relatively small investment. It got us as far as where we are today, but it might not take us any further.

For this to be sustainable, browser vendors need to further invest in the quality of their WebRTC implementations and make that investment open for general use. Here’s the problem:

Apple (Safari)

Doesn’t really invest anything of consequence in WebRTC.

- They seem to care more about having an HEVC implementation than in getting their audio to work properly in mobile Safari in WebRTC

- To date, they have taken the libwebrtc implementation from Google and ported it to work inside Safari, making token adjustments to their own media pipelines

- I am not aware of any specific quality improvements Apple made to libwebrtc’s media algorithms in Safari’s WebRTC implementation

Apple cares more about FaceTime than all of that WebRTC nonsense anyways…

Mozilla (Firefox)

Actually has a decent implementation.

- While Firefox uses libwebrtc as the baseline, they replaced components of it with their own

- This includes media capturer and renderer for audio and video

- They have invested a lot in improving the audio pipeline in Firefox, which affects quality in WebRTC

Microsoft (Edge)

Their latest Edge release is Chromium based.

- They aren’t doing much at the moment in the WebRTC part of it, as far as I am aware

- They could improve the media pipeline implementation of Chromium (and by extension Edge) for Windows 10

- But…

- Do they have an incentive?

- Would they contribute such a thing back to Google or keep it in their Edge implementation?

- Would Google take it if Microsoft gave it to them?

And then there’s Microsoft Teams, which offers a poorer experience in the browser than in the native application. All of Teams’ investment is going towards improving quality and user experience in the app. The web is just an afterthought at the moment.

Google (Chrome)

Believes WebRTC is good enough.

- There are some optimizations and improvements that are now finding their way into WebRTC in the browser

- But a lot of what is done now is kept out of the web and the open source community. WaveNetEQ is but an example of things to come

- It is their right to do that, but does this further the goal of WebRTC as a whole and the community around it?

Now that we’re heading towards differentiation, the larger vendors are going to invest in gutting WebRTC and improving it while keeping that effort to themselves.

No more level playing field.

Prepare for the future of WebRTC

What I’ve outlined above is a potential future of WebRTC. One that is becoming more and more possible in my mind.

There’s a lot you can do today to take WebRTC and optimize around it. Making your application more scalable. Offering better media quality as well as user experience. Growing call sizes to hundreds of participants or more.

Investing in these areas is going to be important moving forward.

You can look further at how WebRTC trends in 2022 are shaping up, and maybe also attend my workshop.

> Investing in these areas is going to be important moving forward.

You always had to invest in these; the assumption that you would be able to spin up a great app with minimal investment in a matter of days has always been rather naive.

While correct, I think that the level of investment needed in the media processing layer is about to rise considerably.

It depends. The quality you will get from “stock webrtc” will remain at a very high level. However the difference to the top tier will grow.

Whether the market will care remains to be seen. Most likely it will.

While it is possible to build a proper WebRTC-based solution, for a number of reasons (those explained by Tsahi above and more) it has not happened. I do not see WebRTC going the way most other standards / open source projects do. As articulated, there are too many constraints w.r.t. codecs (the browser is not leveraging h/w acceleration by default), AI/ML is going to be essential and running in browser is… Does one wait for WebRTC to catch up, or do what Zoom did via a native application? Is the value proposition of running in the browser articulated well against an application that does a better job, where people are willing to install it? Two forks may emerge: WebRTC for P2P, non-scalable solutions, and non-WebRTC or custom(ized) solutions to support use cases like ‘Zoo’ meetings with 10+ talking heads… Interesting times.

🙂

Thank you for this information.

This is a fantastic article, probably my favorite in a long time. WebRTC+1 are the best conversations. Curious about your thoughts on this, Tsahi.

* Does Zoom/WebTransport suggest that NetEq etc… was overhyped?

With Pion, I am constantly told that nothing could compete with Google’s WebRTC implementation, so there is no reason to use GStreamer, Pion, etc.

But Zoom’s success would suggest that maybe that isn’t true?

From my perspective it seems that WebRTC in the browser stagnated because only a few small companies can change anything. I am happy that WebTransport can democratize it some more, but I am sad that it could dramatically raise the bar to build things.

* Do you think we will look back on the move to a binary web with regret?

I would hate to load a page and just get WASM + Canvas some day. On one hand it is great for innovation, but the barrier of entry is just going to get so much higher.

* Is there a precedent for APIs that aren’t useful without supplying your own parts?

If there is no congestion control with WebTransport by default, that seems pretty rough. I guess you can just accept the loss or latency. Do you know of any other APIs like that?

I would be curious what the answer from the W3C on that question would be.

The W3C WebTransport WG is in the process of being formed. The Proposed Charter is here: https://github.com/w3c/webtransport-charter

Note that the Charter is focused on client/server.

The question of how WebTransport congestion control state would be fed back to WebCodecs is still open. WebCodecs isn’t based on WHATWG Streams, so “backpressure” from a WebTransport stream can’t be propagated to a WebCodecs encoder. Insertable Streams is based on WHATWG Streams, but its interaction with congestion control is based on RTP transport, so you can’t just have an Insertable Stream “pipeTo” a WebTransport writable stream and expect WebTransport congestion control and the PeerConnection encoder to work together.
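The backpressure problem described here can be illustrated without any browser API. The Python sketch below mimics the idea behind a WHATWG WritableStream’s `desiredSize` (high-water mark minus what is already queued) with a hypothetical `SendQueue`. It adds a hypothetical encoder hook that drops a frame once the queue signals it is full. It shows the signal an encoder would need from the transport. It is not the WebCodecs or WebTransport API.

```python
from __future__ import annotations

from collections import deque


class SendQueue:
    """Bounded send queue whose desired_size mimics a WritableStream's desiredSize."""

    def __init__(self, high_water_mark: int) -> None:
        self.high_water_mark = high_water_mark  # bytes the queue is willing to hold
        self.queue: deque[bytes] = deque()
        self.queued_bytes = 0

    @property
    def desired_size(self) -> int:
        return self.high_water_mark - self.queued_bytes

    def write(self, chunk: bytes) -> None:
        self.queue.append(chunk)
        self.queued_bytes += len(chunk)

    def drain(self, budget: int) -> int:
        """Simulate the network sending whole chunks up to `budget` bytes; return bytes sent."""
        sent = 0
        while self.queue and sent + len(self.queue[0]) <= budget:
            chunk = self.queue.popleft()
            self.queued_bytes -= len(chunk)
            sent += len(chunk)
        return sent


def encode_or_skip(queue: SendQueue, frame: bytes) -> bool:
    """Hypothetical encoder hook: drop the frame when the transport signals backpressure."""
    if queue.desired_size <= 0:
        return False  # congested: skipping beats piling up latency in the queue
    queue.write(frame)
    return True
```

Dropping frames is the bluntest reaction. A real encoder would more likely lower its target bitrate, which is the kind of coupling described above as still open.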

Too many questions to answer here 🙂

NetEq isn’t overhyped. I think some of it can be implemented in WASM. In such a case, why not?

I don’t think this has anything to do with the reasoning behind using or not using Pion. Both it and GStreamer have their place in the world (mostly in embedded and client-on-the-server-side scenarios, is my guess).

Zoom started alongside WebRTC and decided not to adopt it. It worked for them. Didn’t work for others like WebEx that did… almost the same. Execution is a lot more than the specific technology being chosen.

I think the binary web is a necessity. I prefer H.323 over SIP but prefer WebRTC over both of them. Does that make any sense?

I also think the binary web will have a lot of JS around it to stitch it together and keep the higher logic. WASM is better served at speeding up certain tasks than replacing everything is my guess.

APIs are never useful without your own parts. If they were, we’d call them SaaS… a full service.

Congestion control will either be added to WebTransport or be enabled on top of it so you’ll be able to WASM your way into it. Again – my guess on where this will go.
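Here is one view of what congestion control built on top of WebTransport might involve: the loss-based half of Google Congestion Control, following the rule in the IETF GCC draft (draft-ietf-rmcat-gcc). When loss exceeds 10%, the rate is cut by half the loss fraction. Below 2% loss, it rises by 5%. Otherwise it holds. The sketch is written in Python for readability. The delay-based half is omitted, and the min/max clamps are illustrative assumptions.

```python
def loss_based_update(
    rate_bps: float,
    loss_fraction: float,
    min_bps: float = 30_000,
    max_bps: float = 2_500_000,
) -> float:
    """One update step of a GCC-style loss-based sender rate controller.

    Thresholds follow the loss-based controller in the IETF GCC draft;
    the min/max clamps are assumptions for illustration.
    """
    if not 0.0 <= loss_fraction <= 1.0:
        raise ValueError("loss_fraction must be in [0, 1]")
    if loss_fraction > 0.10:
        rate_bps *= 1.0 - 0.5 * loss_fraction  # heavy loss: back off
    elif loss_fraction < 0.02:
        rate_bps *= 1.05  # negligible loss: probe upward
    # between 2% and 10% loss the rate is held unchanged
    return min(max(rate_bps, min_bps), max_bps)
```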

W3C probably doesn’t look at such an eventuality. It will happen in small steps while focusing on other use cases and scenarios and then finding out that vendors adopted it for real time communications.

Can you explain how WebTransport + WebCodecs is going to replace WebRTC?

WebRTC is P2P, but WebTransport is client/server. You can’t support peer-to-peer communications without NAT traversal. It makes more sense to say that WebTransport + WebCodecs could replace Data Channel + MSE or WebSockets + MSE in a client/server scenario.

Replacing WebRTC would require P2P support, such as via WebRTC-ICE (https://w3c.github.io/webrtc-ice/) and WebRTC-QUIC (see: https://w3c.github.io/webrtc-quic/). However, no “intent to ship” statement has been filed since the RTCQuicTransport Origin Trial expired in 2019.

Bernard,

P2P is nice, but mostly not needed by the larger vendors in the market. The leading deployment model for almost everyone today is an SFU, with globally distributed media servers. This being the case, what would be the long-term benefit for either Google or Microsoft in using WebRTC rather than the other combination of technologies for their own services?
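In the SFU model, the media server rather than the browser decides what each participant receives. A common approach is simulcast. Each sender publishes several encodings, and the SFU forwards to every subscriber the best layer that fits that subscriber’s estimated bandwidth. The Python sketch below shows only that selection step. The layer ids and bitrates are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Layer:
    rid: str  # simulcast layer id (names here are illustrative)
    bitrate_bps: int


def pick_layer(layers: list[Layer], available_bps: int) -> Layer:
    """Pick the highest-bitrate layer that fits the subscriber's estimated bandwidth.

    Falls back to the lowest layer when nothing fits, so the subscriber still gets video.
    """
    if not layers:
        raise ValueError("no layers published")
    ordered = sorted(layers, key=lambda layer: layer.bitrate_bps)
    fitting = [layer for layer in ordered if layer.bitrate_bps <= available_bps]
    return fitting[-1] if fitting else ordered[0]


def plan_forwarding(layers: list[Layer], subscribers: dict[str, int]) -> dict[str, str]:
    """Map each subscriber (name -> estimated bps) to the layer id the SFU should forward."""
    return {name: pick_layer(layers, bw).rid for name, bw in subscribers.items()}
```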

The biggest deal about WebRTC is interop.

If unbundling can damage communication between me on mobile Chrome and the peer on MS Edge behind the call-center firewall, then it would be a bad move for the community as whole.

On the other hand, if unbundling provides more opportunities for independent vendors to come up with browser-based and/or native solutions for their challenges, this would be most welcome, and increase the market demand for us, software engineers who understand something about RTC.

Alex,

Everything I portrayed is interoperable in theory as it all relies on web standards and not proprietary tech – the proprietary parts are added on top, mostly via WASM.

Hey Tsahi,

It’s Carl.

If Chromium still has WebRTC in it, would it still be in Chrome, but suboptimized, or would it be deliberately removed?

Kind Regards,

Carl

There is no change for Chromium. WebRTC is part of HTML5 and this isn’t going to change, so Chrome and Chromium will continue supporting it.

This is interesting, but all the analysis is around big conferences. They fight over, to put it vulgarly, “who has the bigger one”, and all the new components seem to go there. But currently, small video conferences (one-on-one, or a maximum of 4 participants) are growing. Examples include the CPaaS industry, whiteboards, etc., which allow creating multimedia or data channels over a P2P connection in the browser without worrying about big infrastructure and packet-level optimization.

I completely agree with Tsahi that these changes will raise the barrier to developing applications on the “new WebRTC”, and could also marginalize fast and short communications like a simple phone call.

Well, let’s see what happens in the future, as new use cases are coming: COVID introduced very big new needs for society.

While 1:1 remains a very important use-case it is boring. You can’t tweak and differentiate much, the game there is reliability.

WebRTC is over 10 years old and mature. That’s why it is getting deprecated. Got it.

It isn’t being deprecated. It is just that new technologies that are part of WebRTC get their own API surface.